2

WeRcome!

Welcome to my journey through the world of R!

✔

Become familiar with the lingua franca of statistics

✔

Discover applications of R in Evidence-Based Medicine

✔

Rock, squeeze and explore your data deeply - for free

✔

Find 13 reasons why you will love R!

cannot wait? jump now!

✔

Enhance your skills...

...and start using R today!

...and start using R today!

3

...befoRe we start...

DISCLAIMER

DISCLAIMER

All trademarks, logos of companies and names of products

used in this document

are the sole property of their respective owners

and are included here

for informational, illustrative purposes only

nominative fair use

•••

This presentation is based exclusively on information

publicly available on the Internet under provided hyperlinks.

•••

If you think I violate your rights, please email me: r.clin.res@gmail.com

5

Agenda: 13 reasons why you will love GNU R

I R is (extremely) cheap. In fact - it's free :)

II R has (extremely) wide range of capabilities

II 1/3 :) R is easy to maintain!

II 2/3 :) R is not resource consuming!

III R is supported by the world of science

IV R is supported by the community

IV ½ :) Books

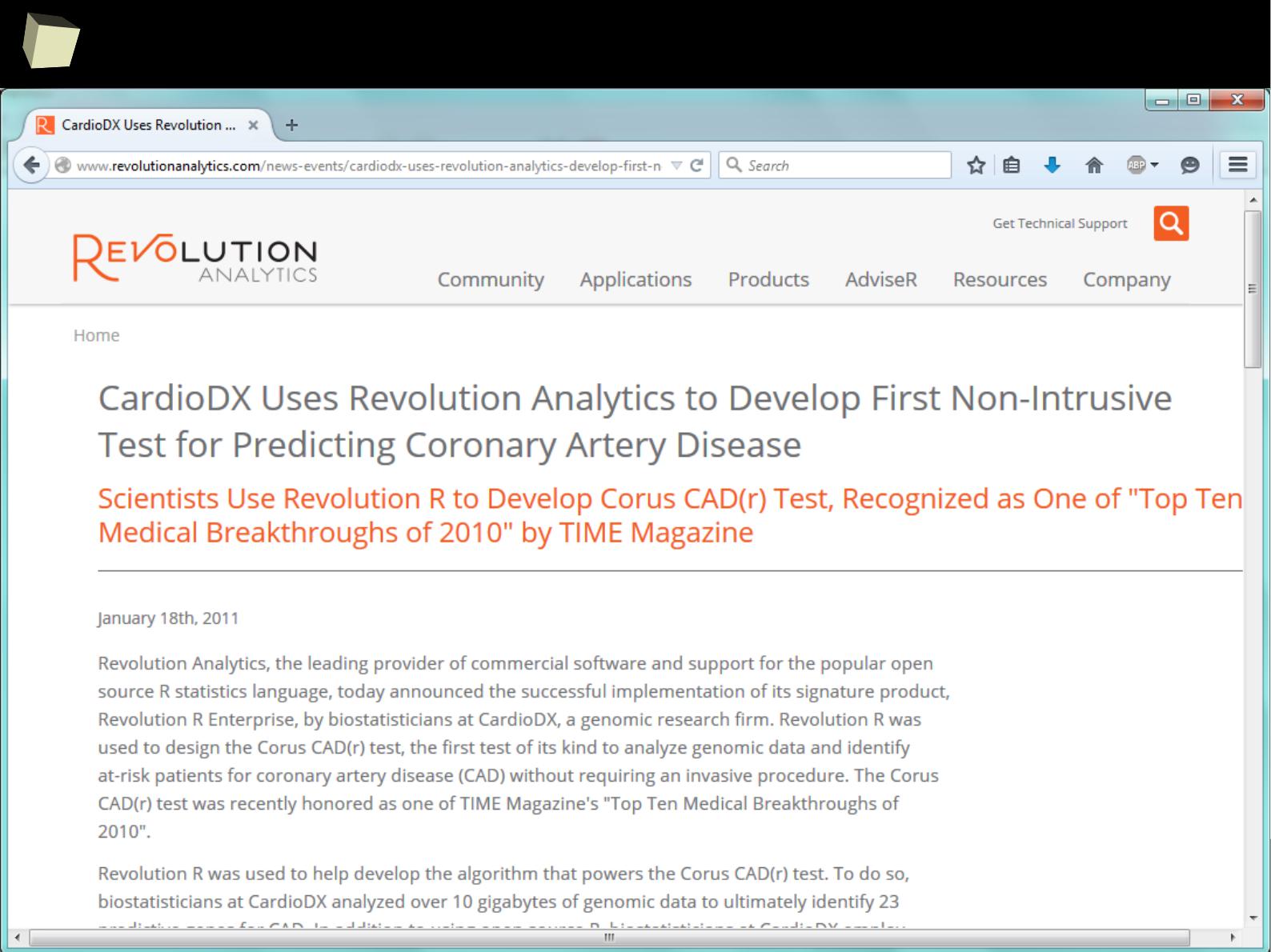

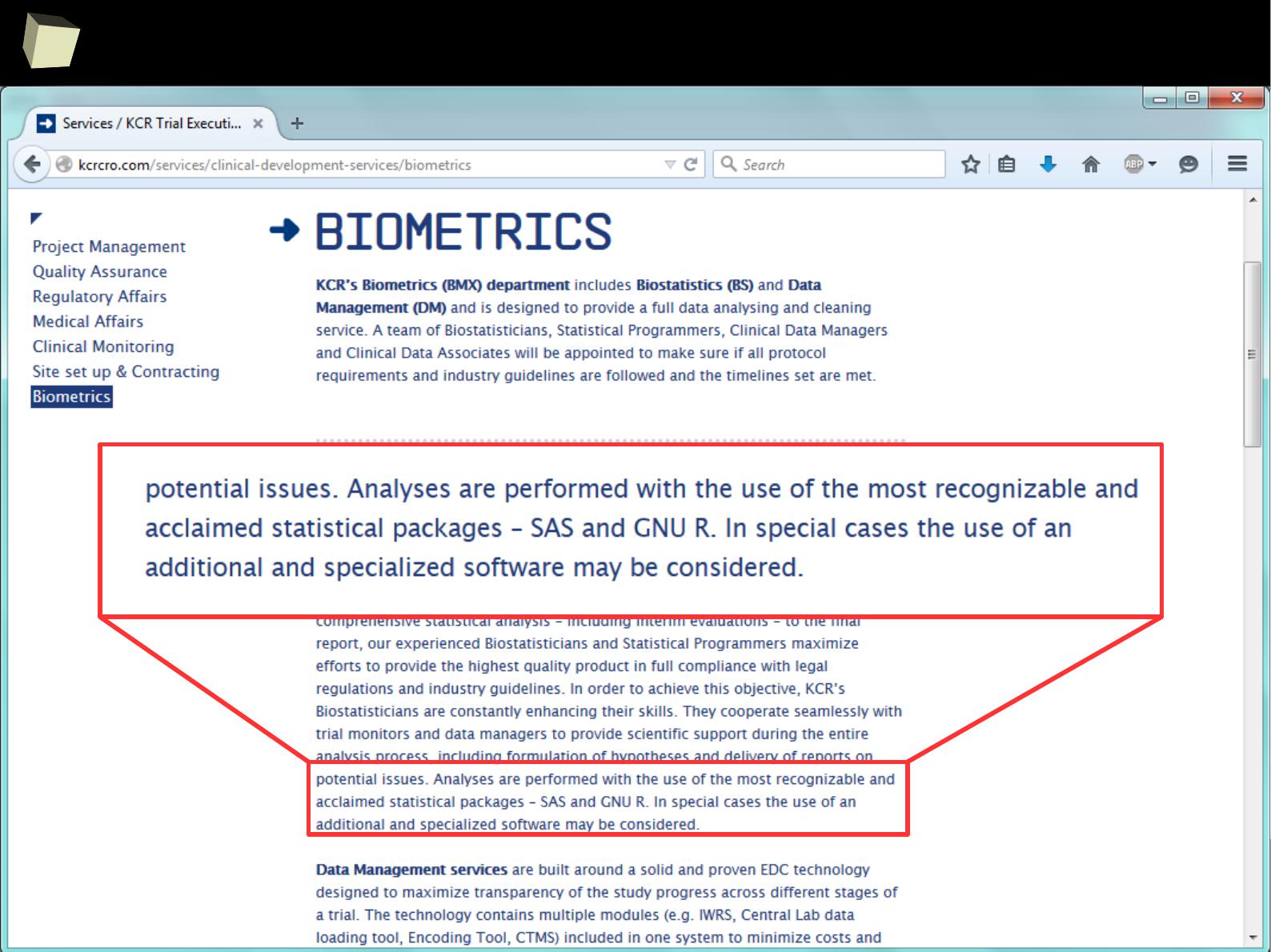

V R is supported by the business

V ½ :) R and SAS

VI R is able to read data in many formats

VI 1/3 :) R and relational databases

VI 2/3 :) Advanced data manipulation

VII Interoperability is easy to achieve

VIII R is truly cross-platform

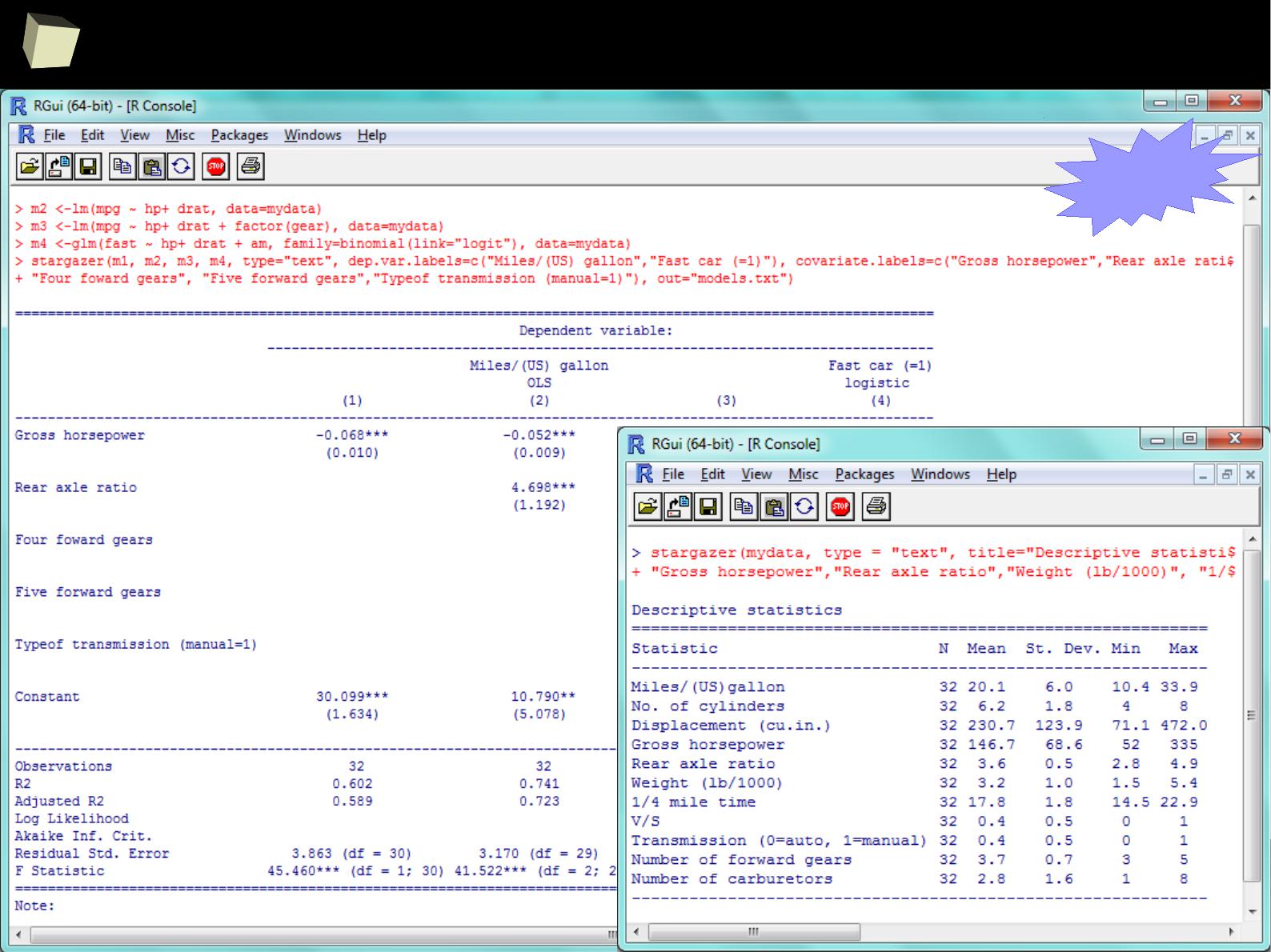

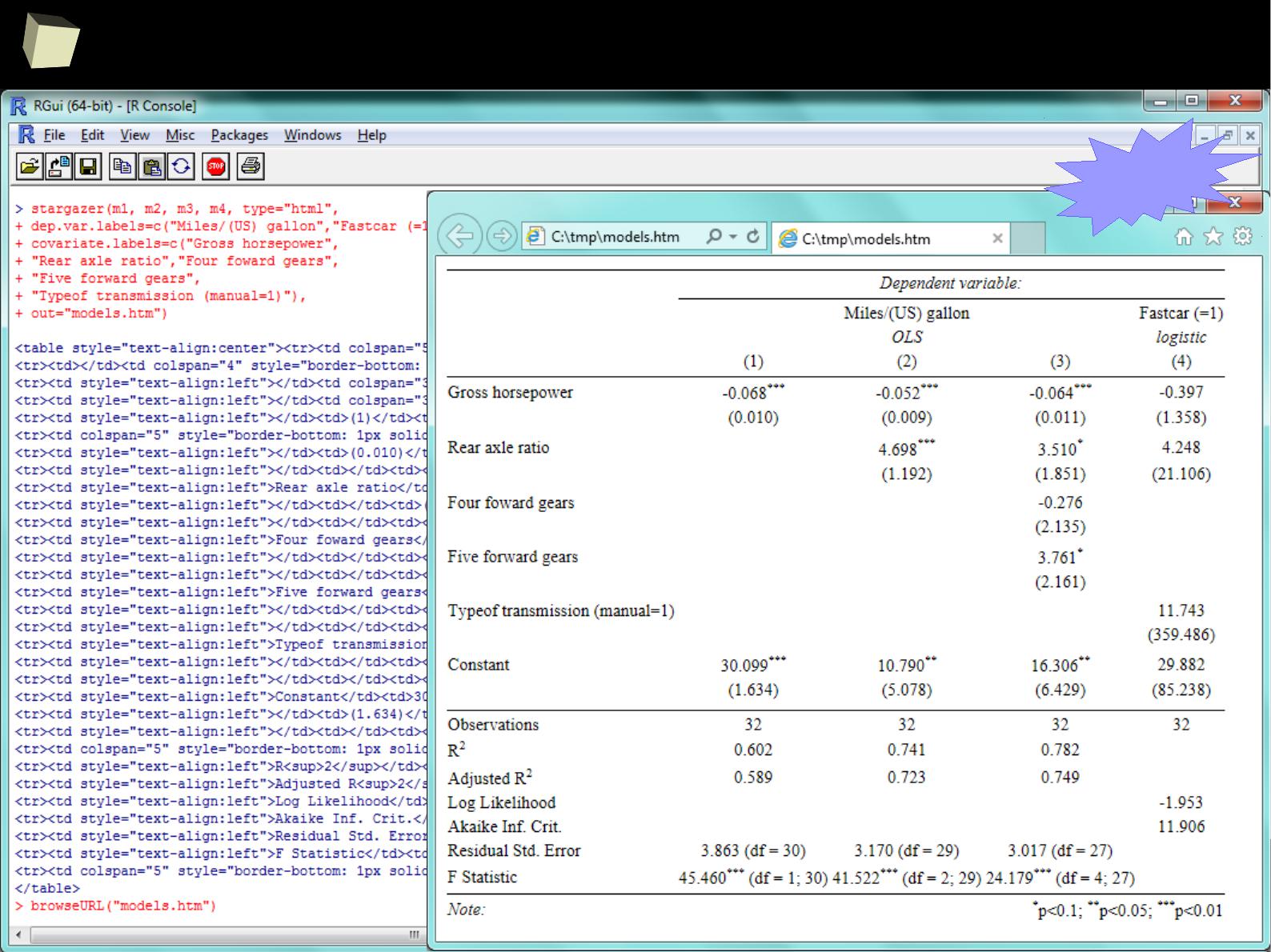

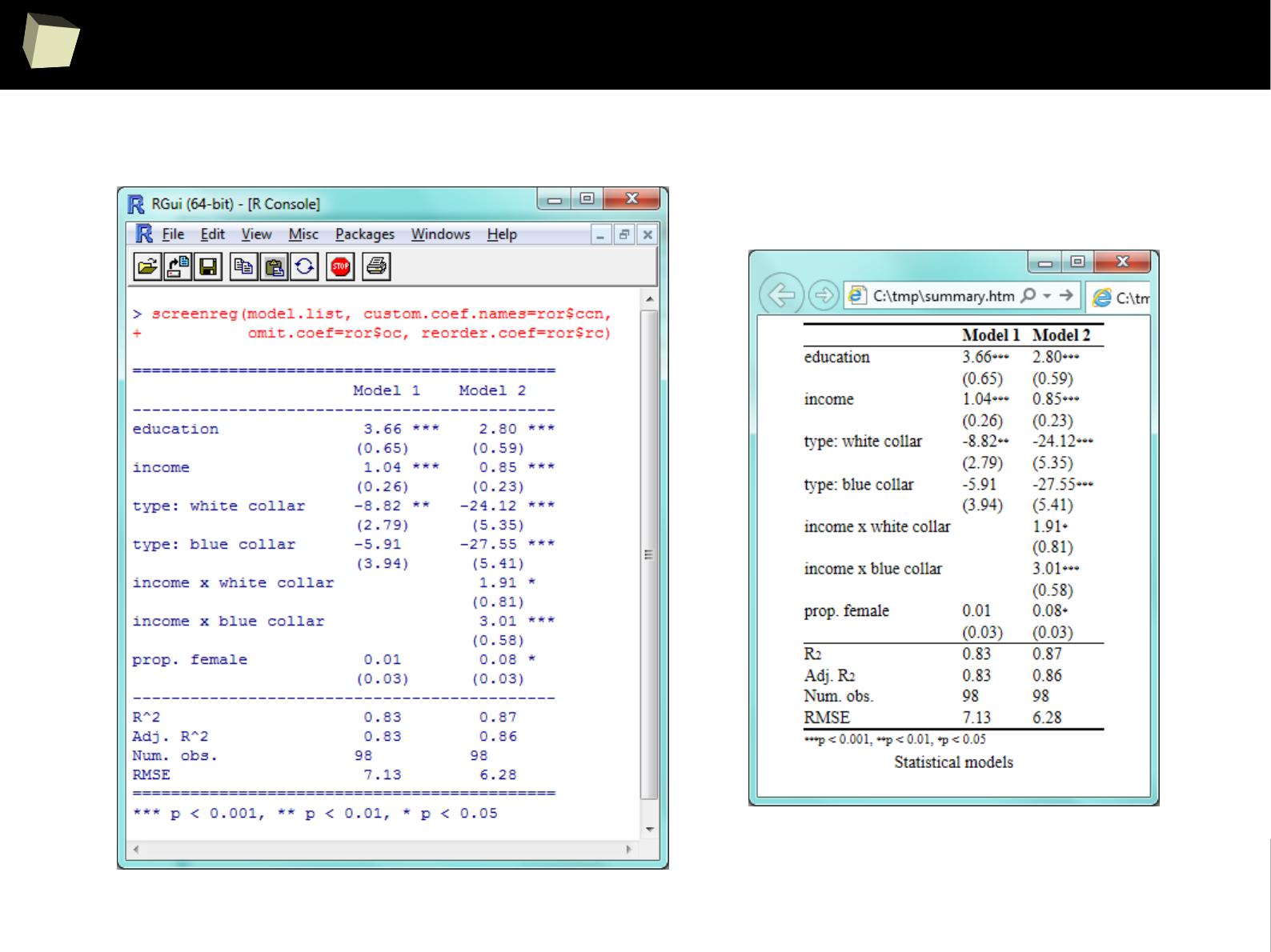

IX R offers numerous ways of presenting data. Reproducible Research

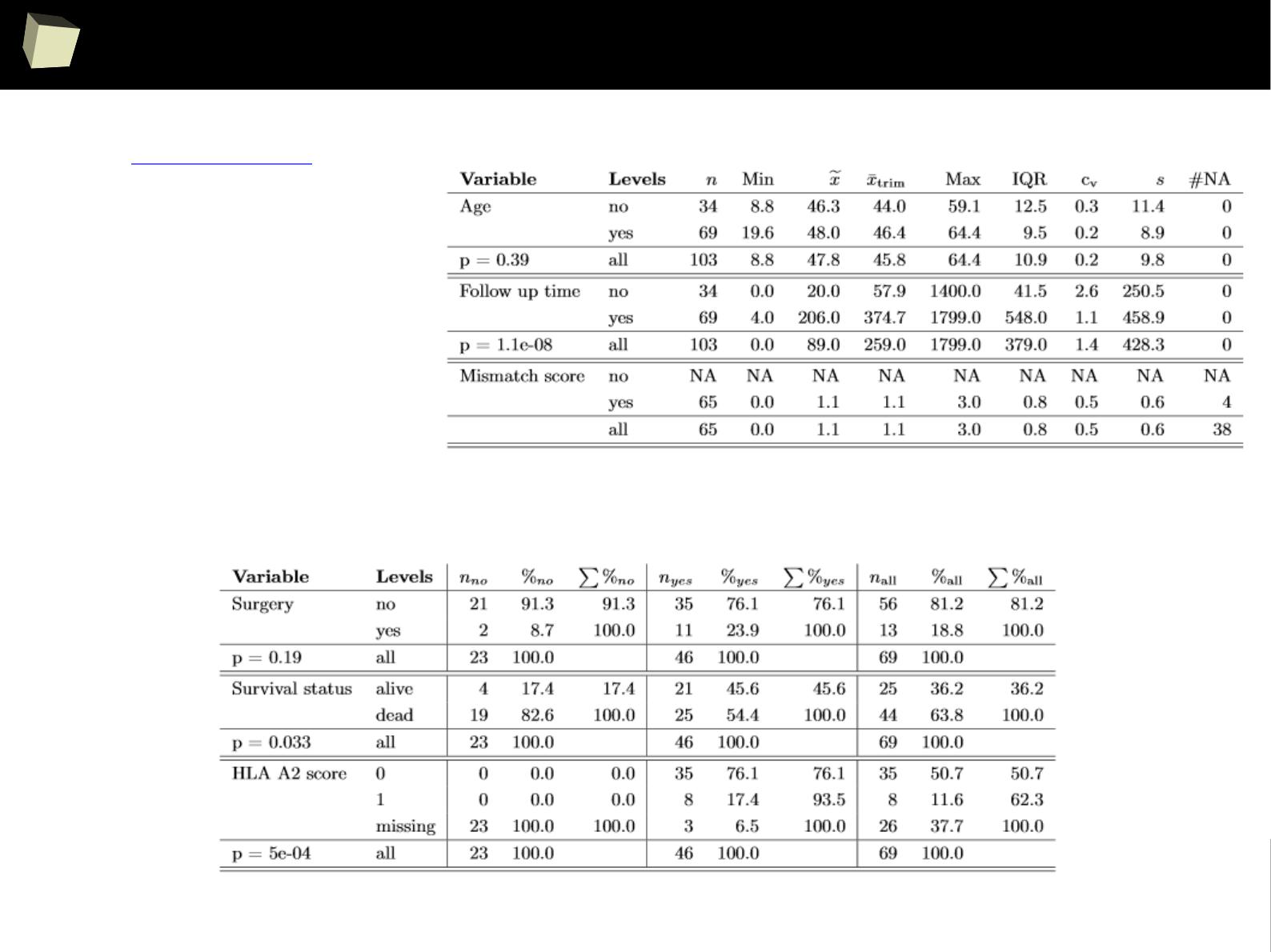

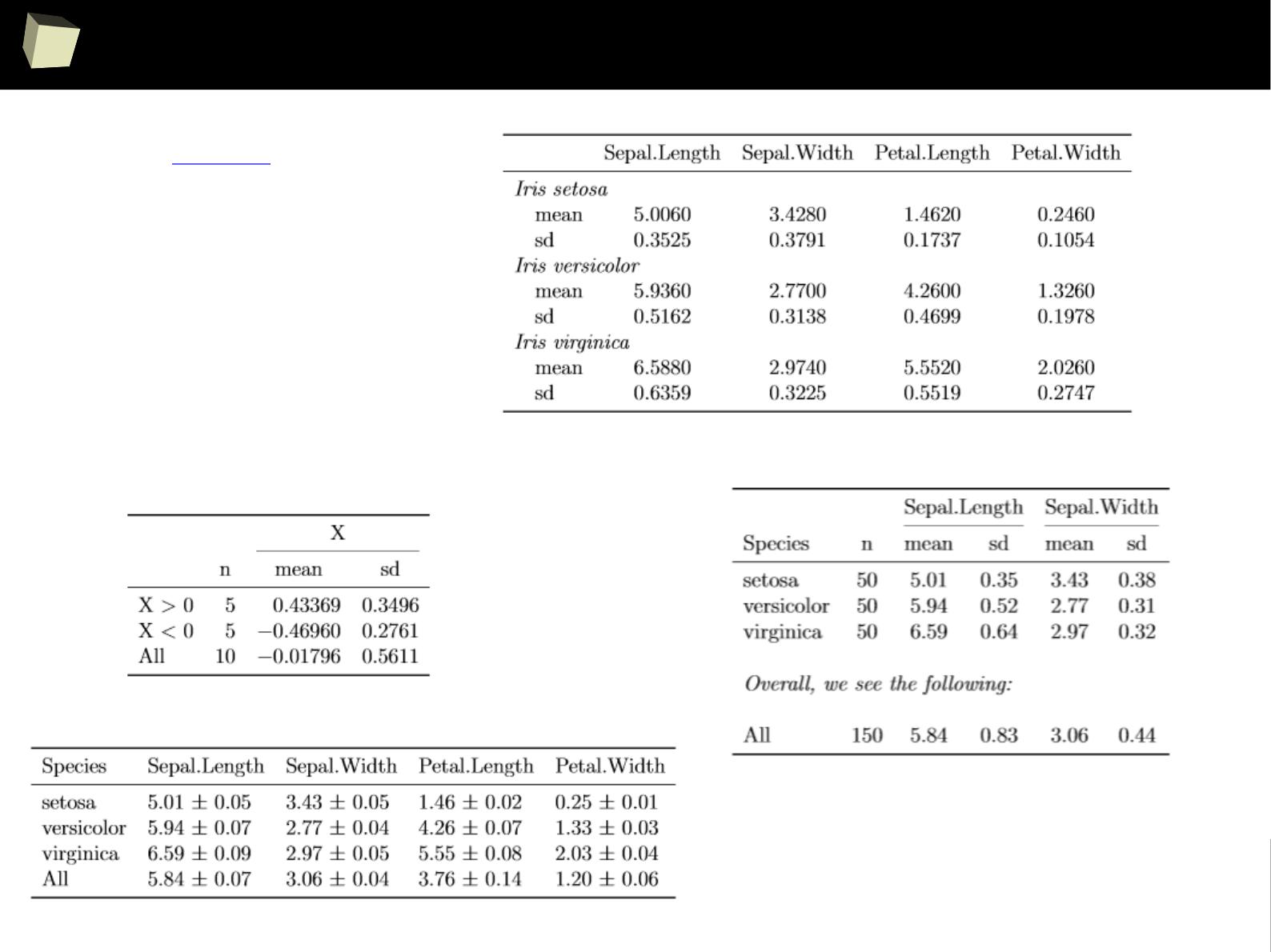

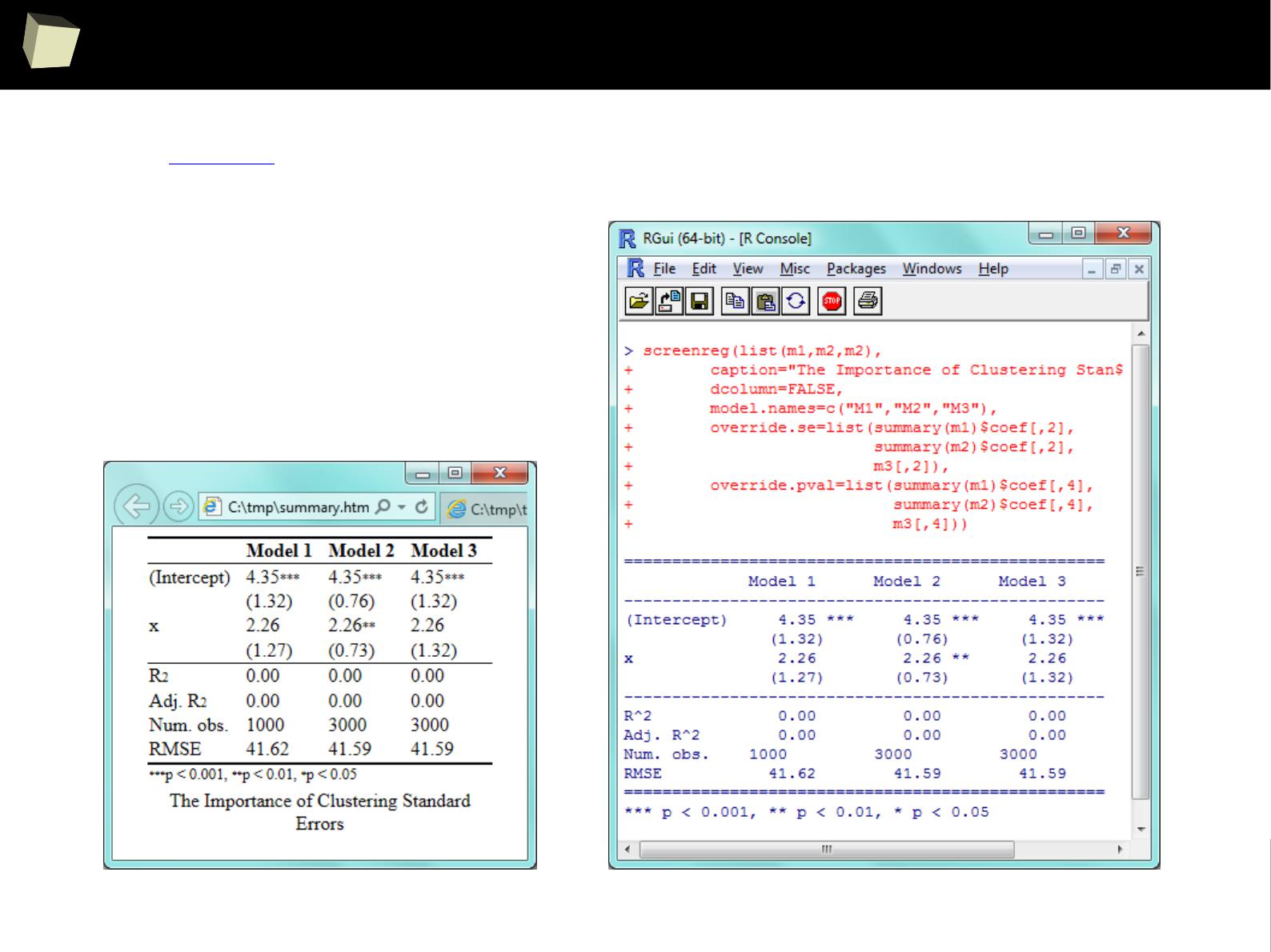

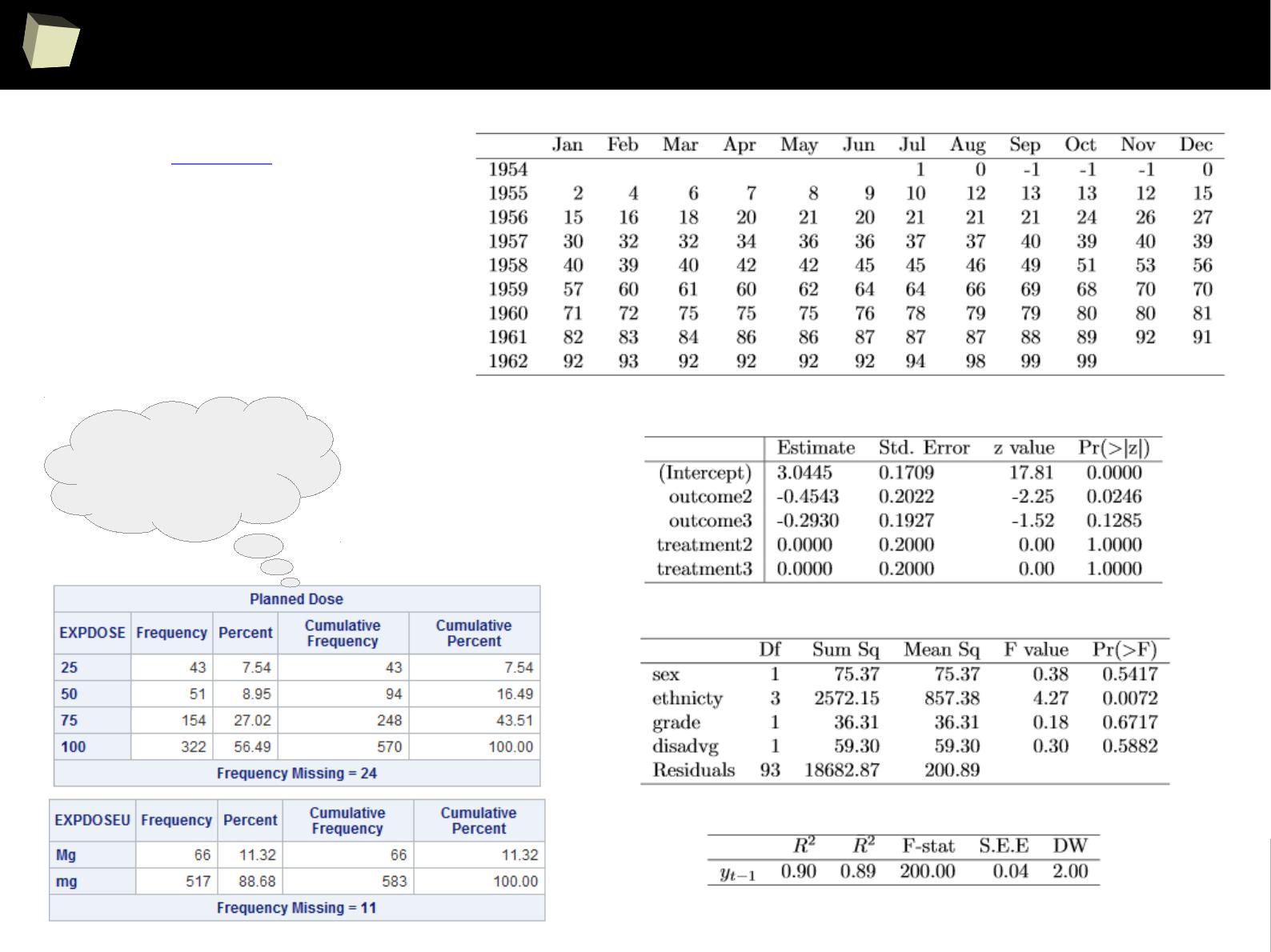

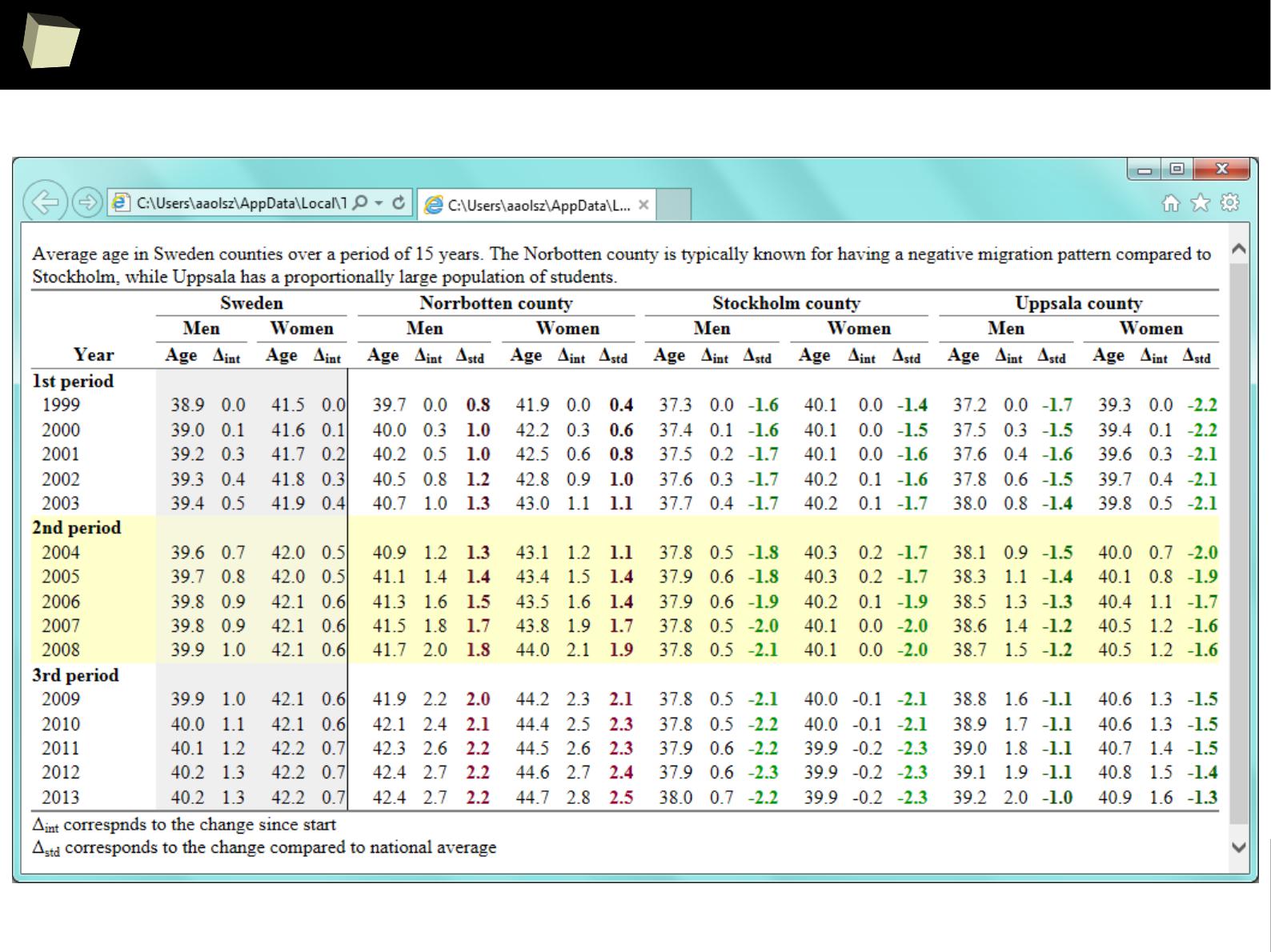

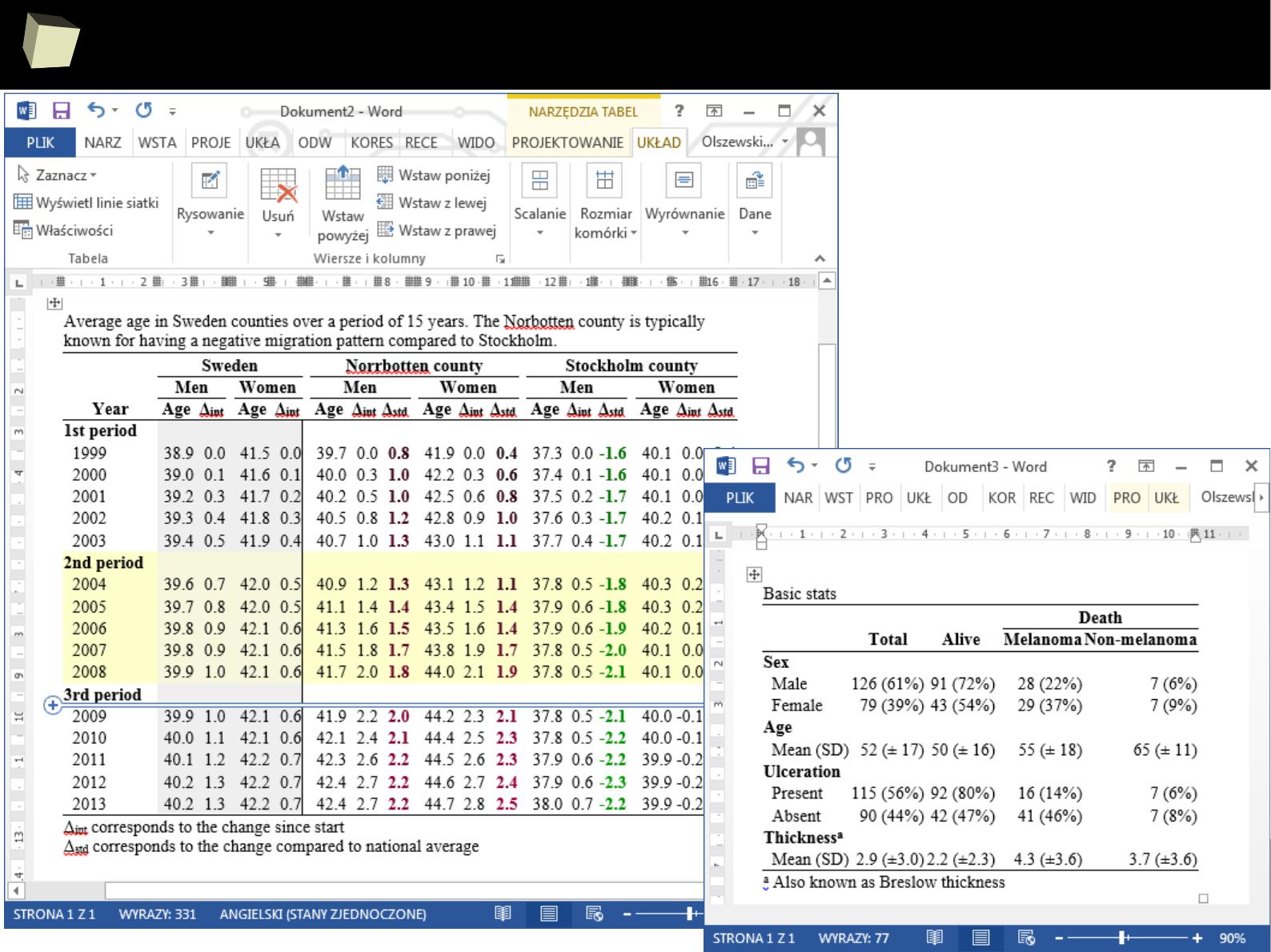

IX 1/3 :) Tables. Clinical Tables.

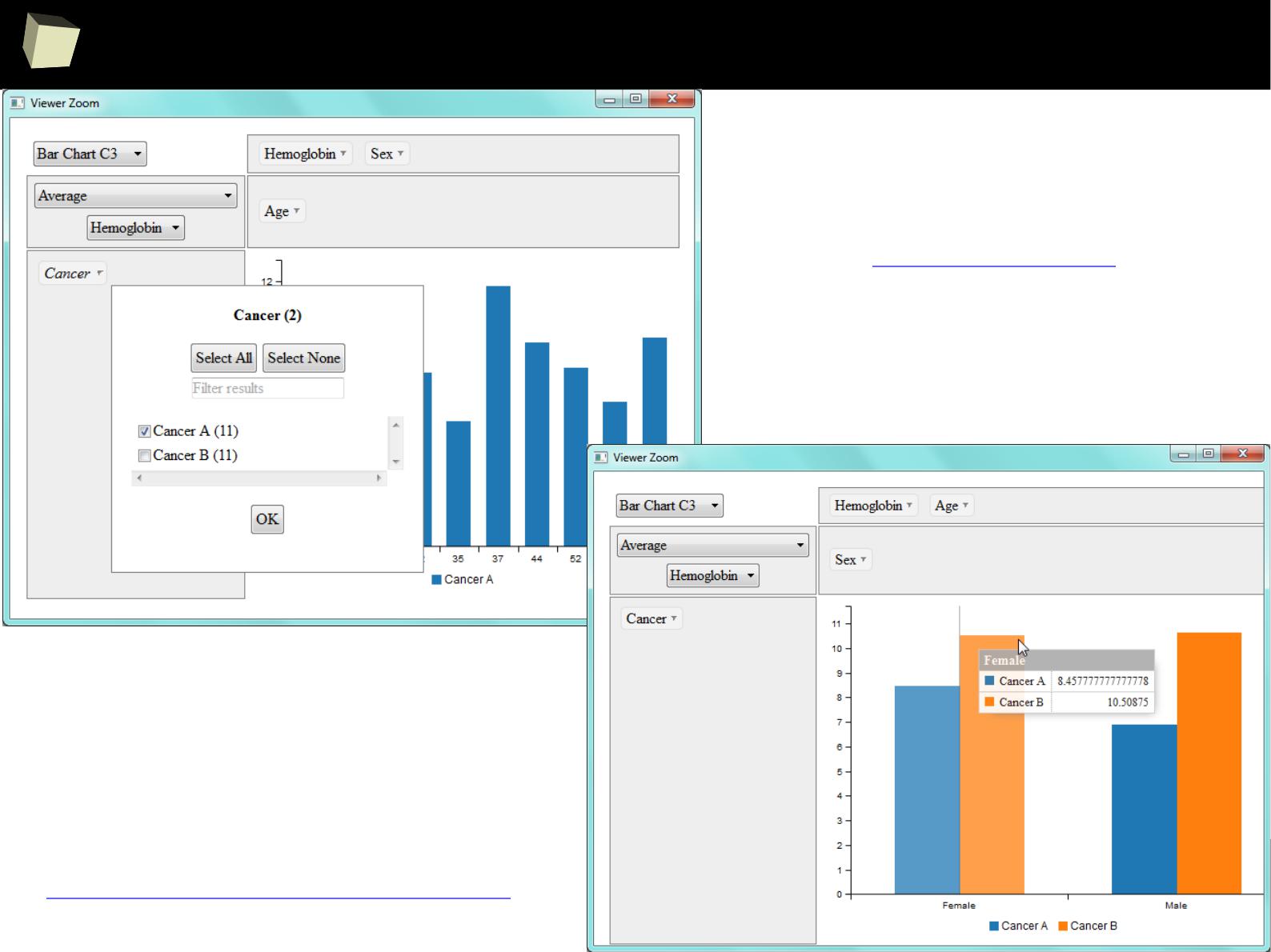

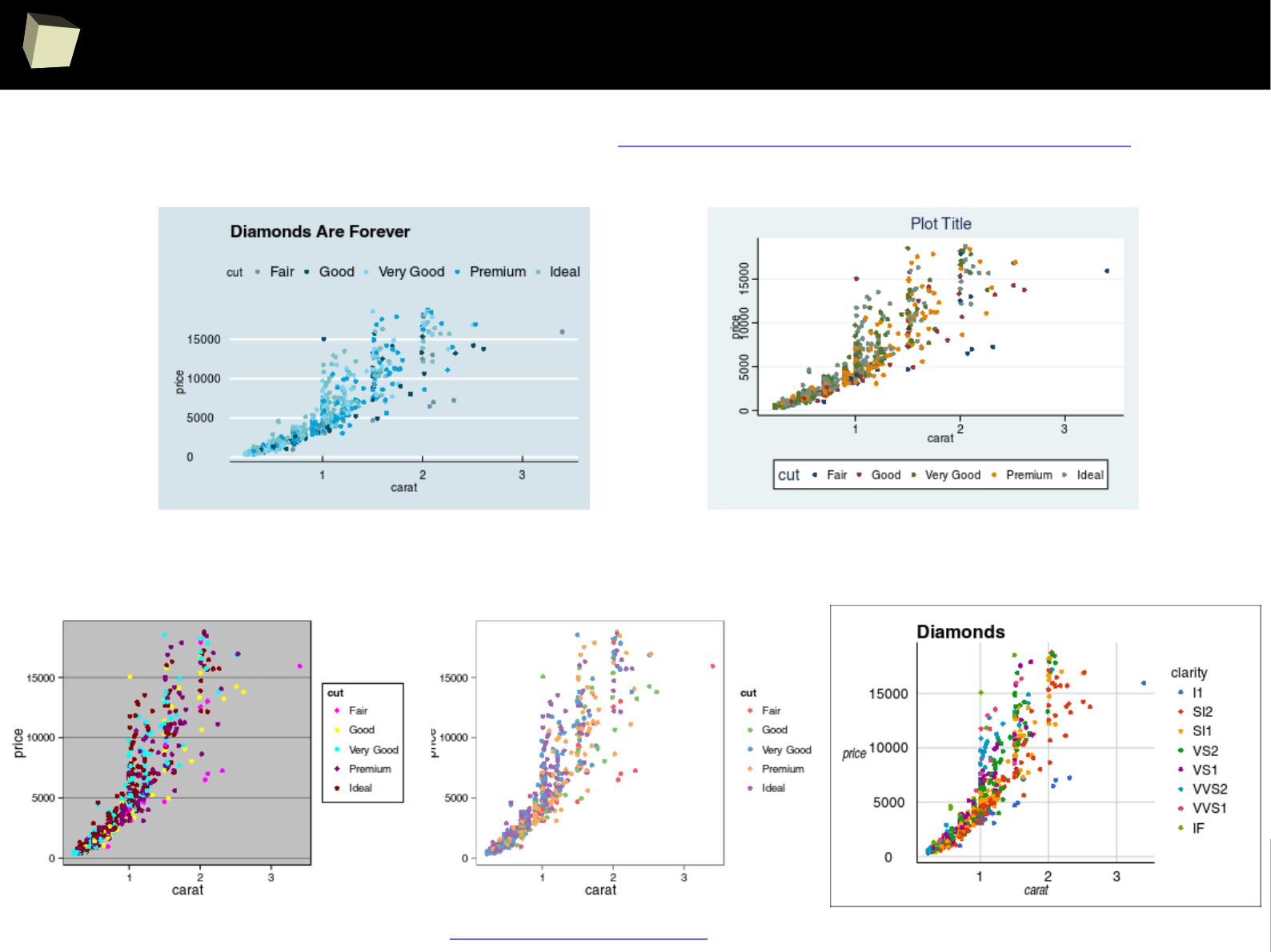

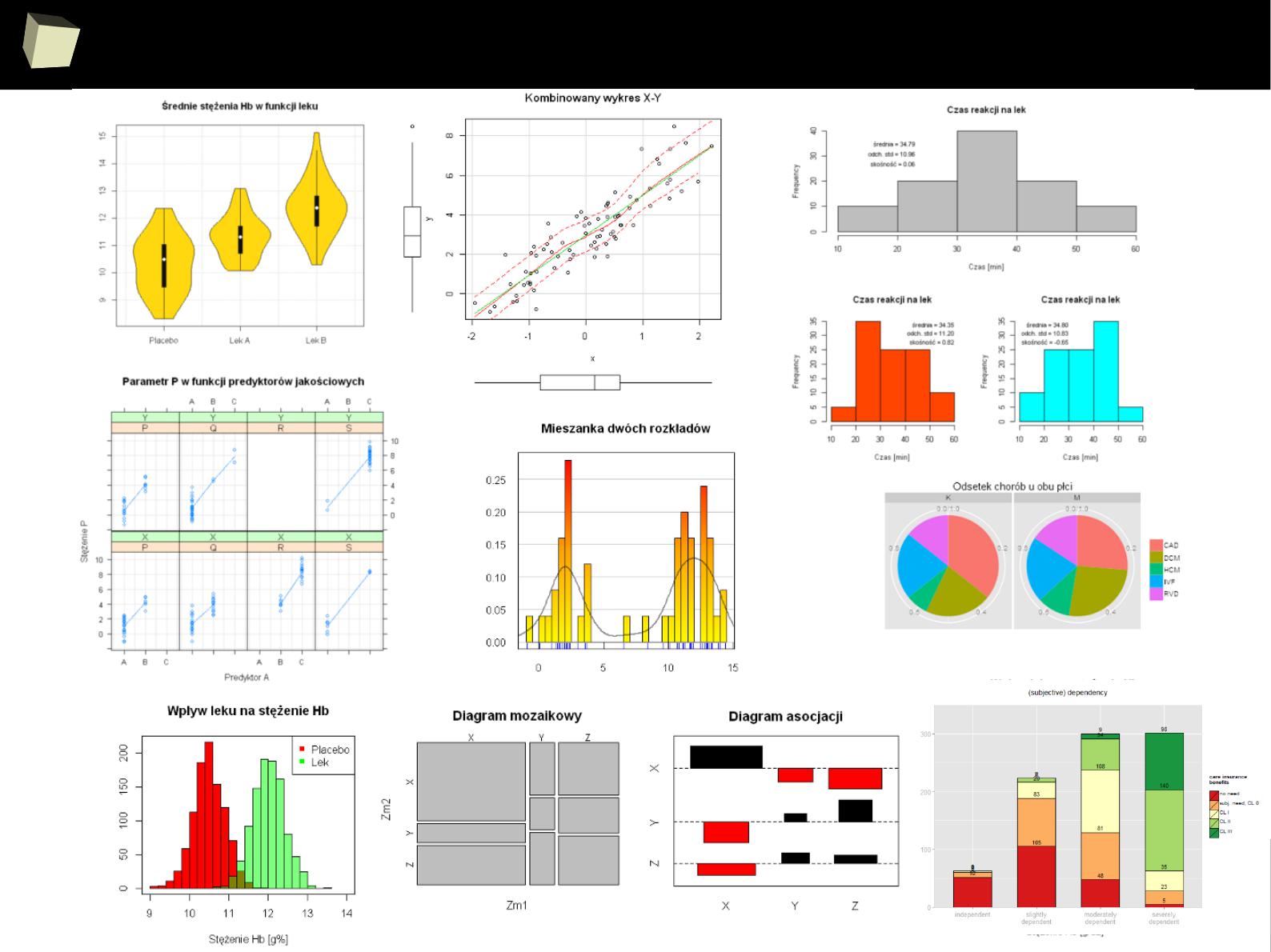

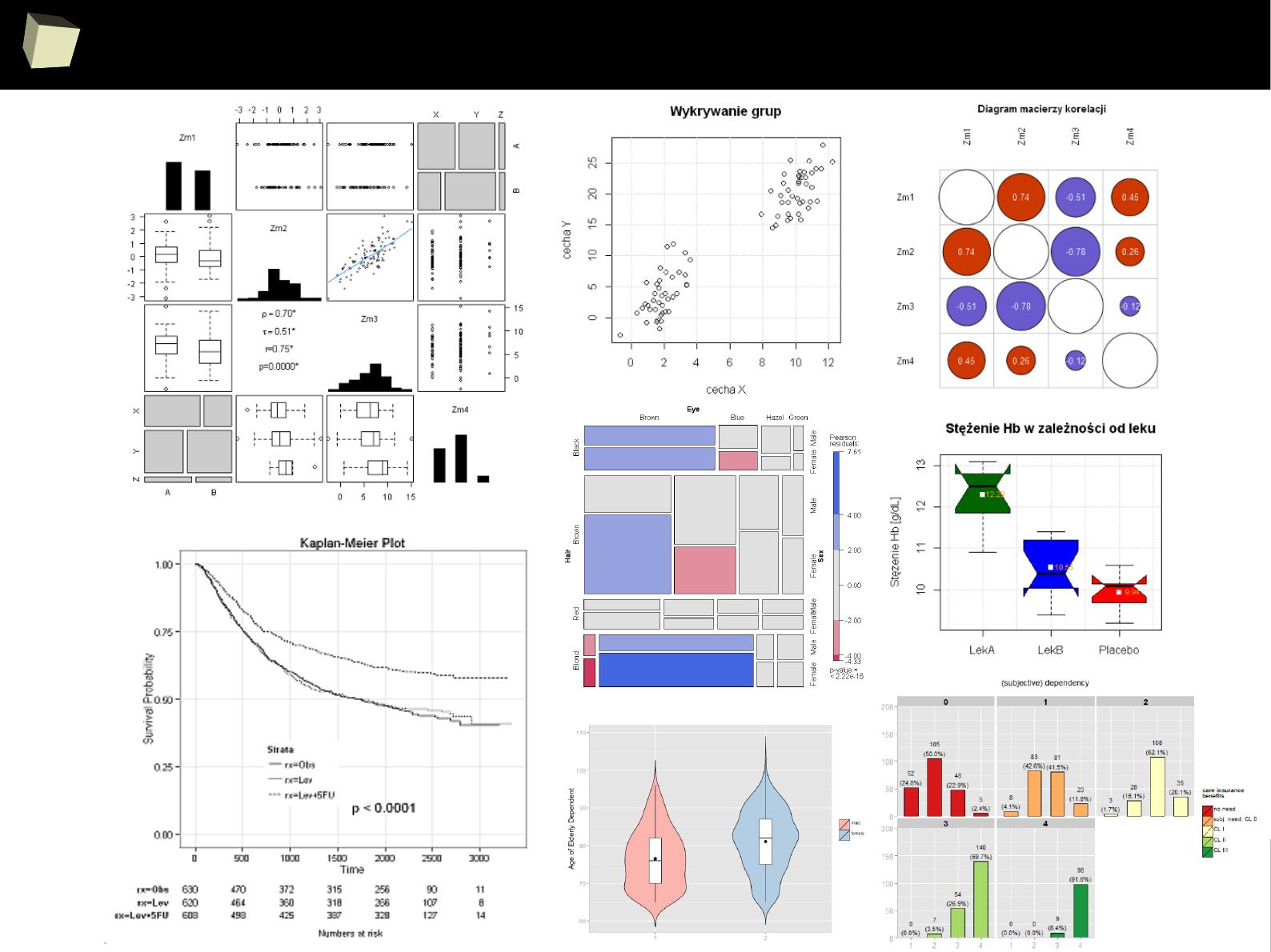

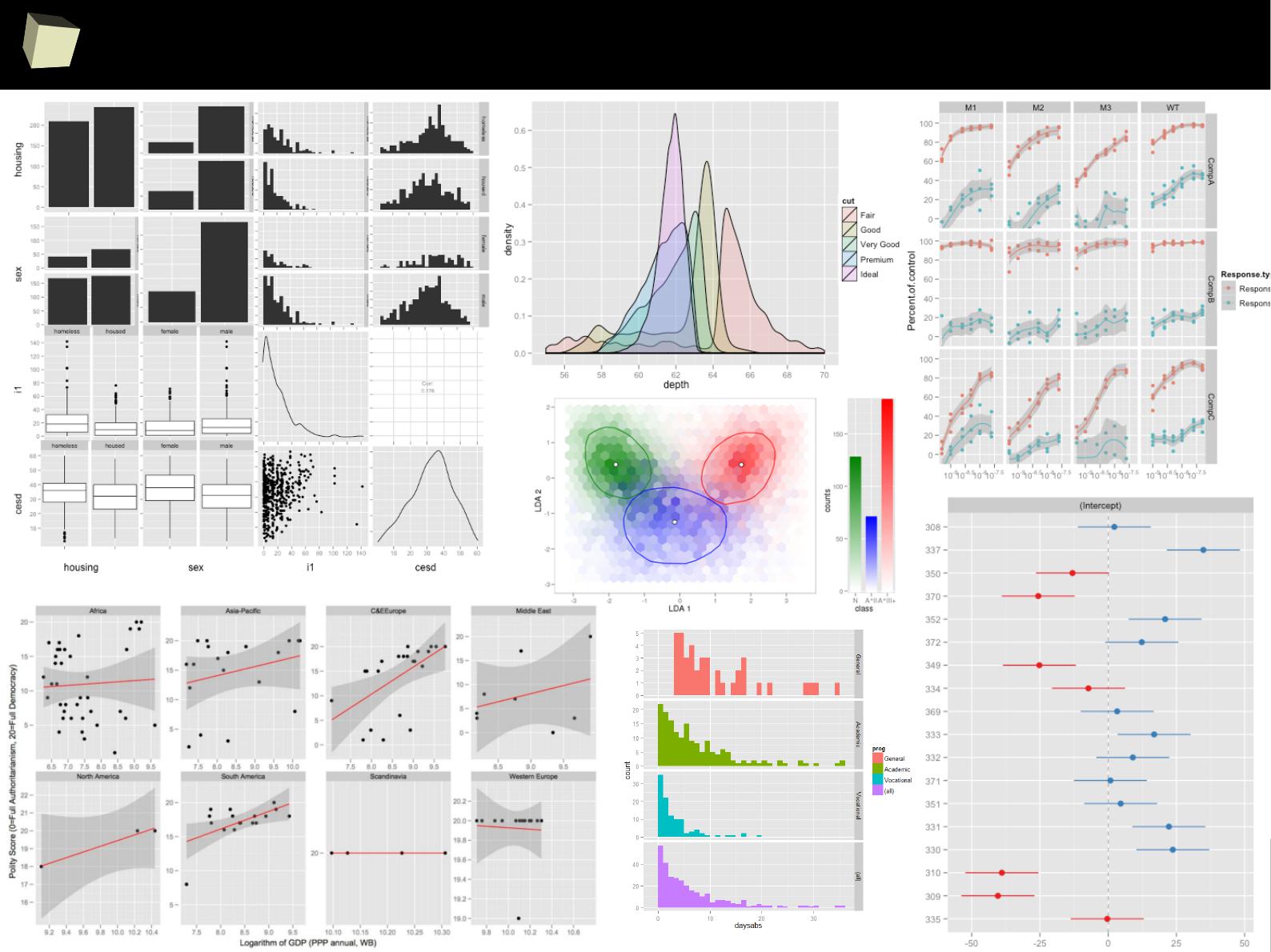

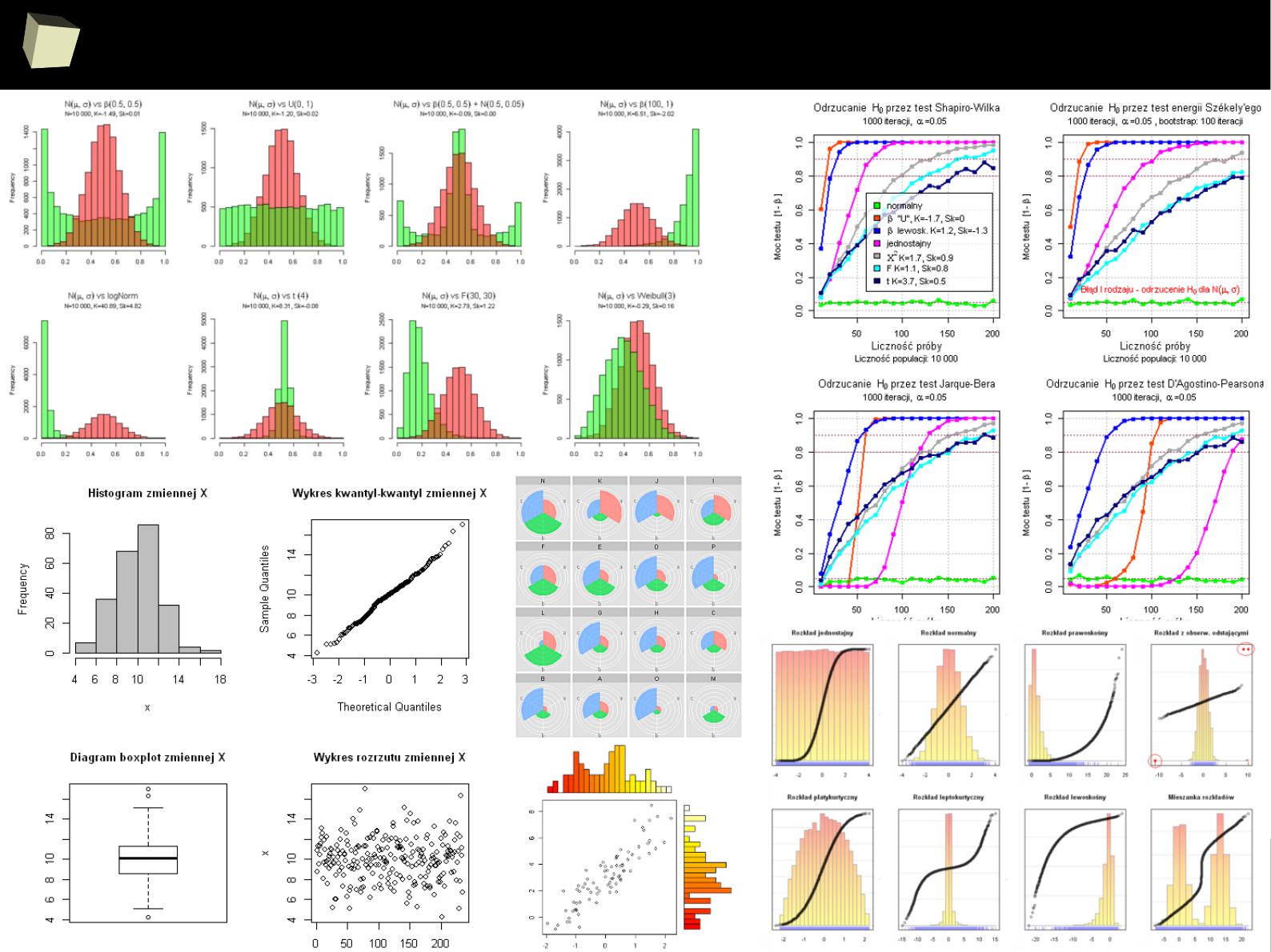

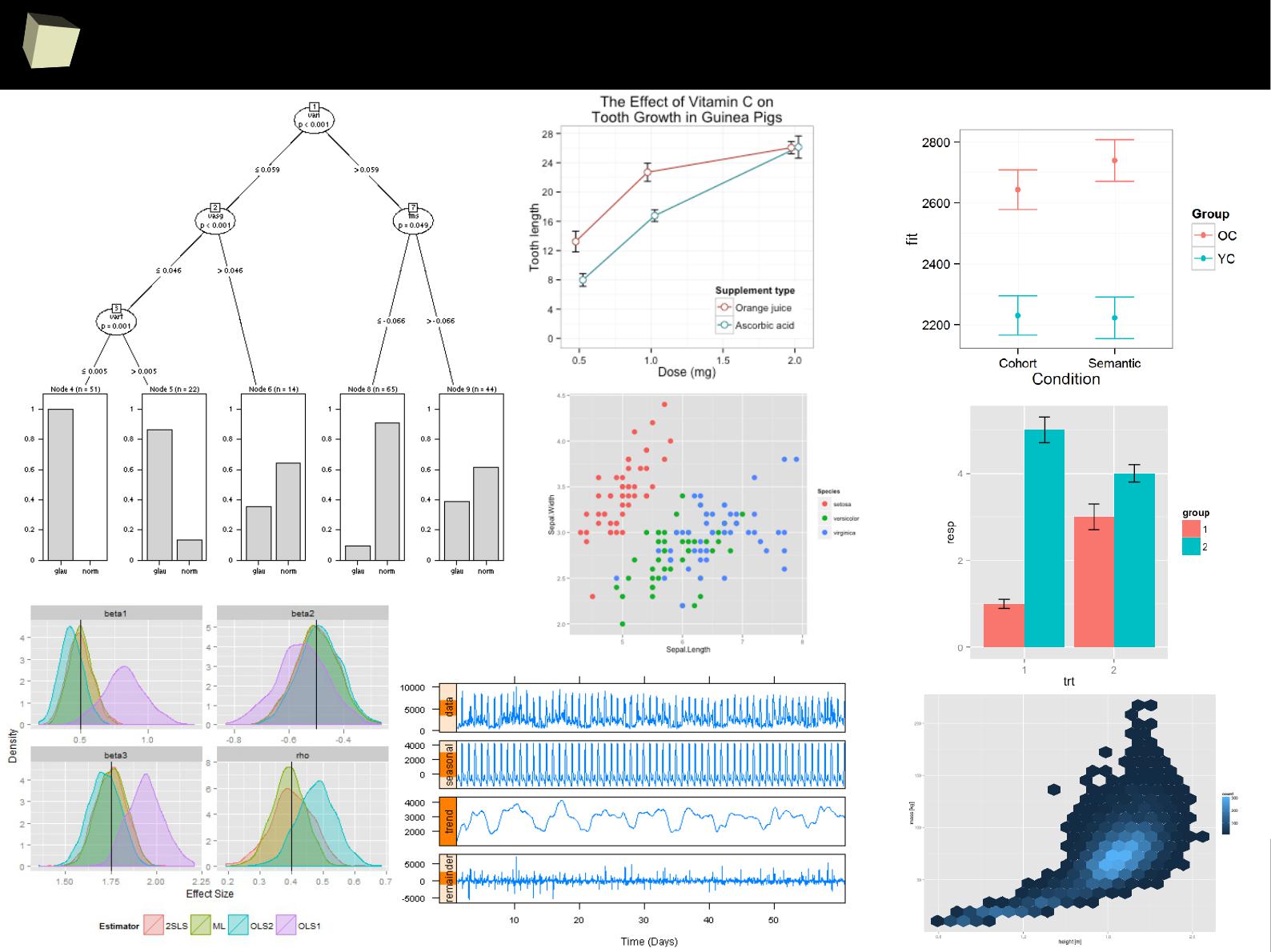

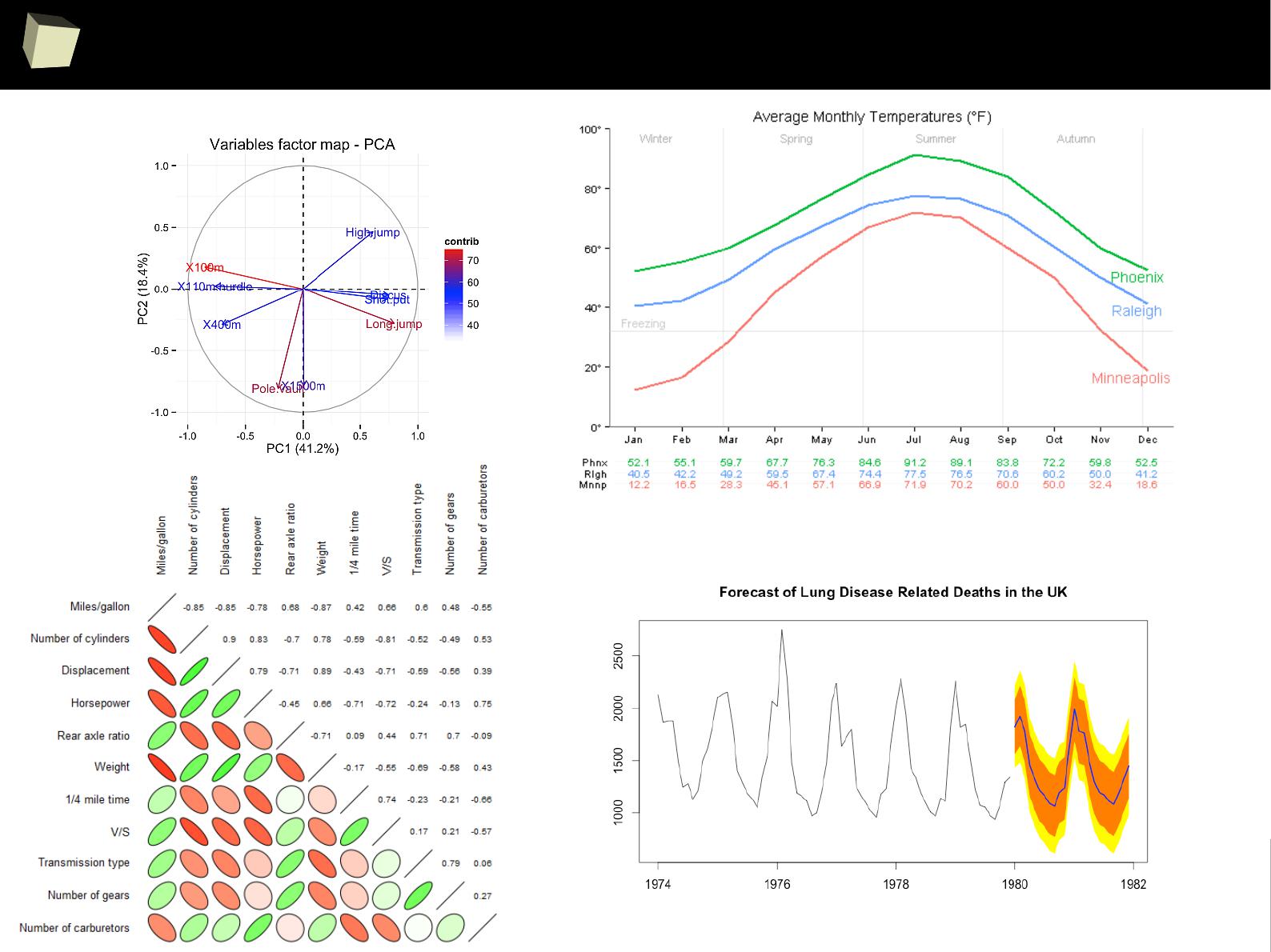

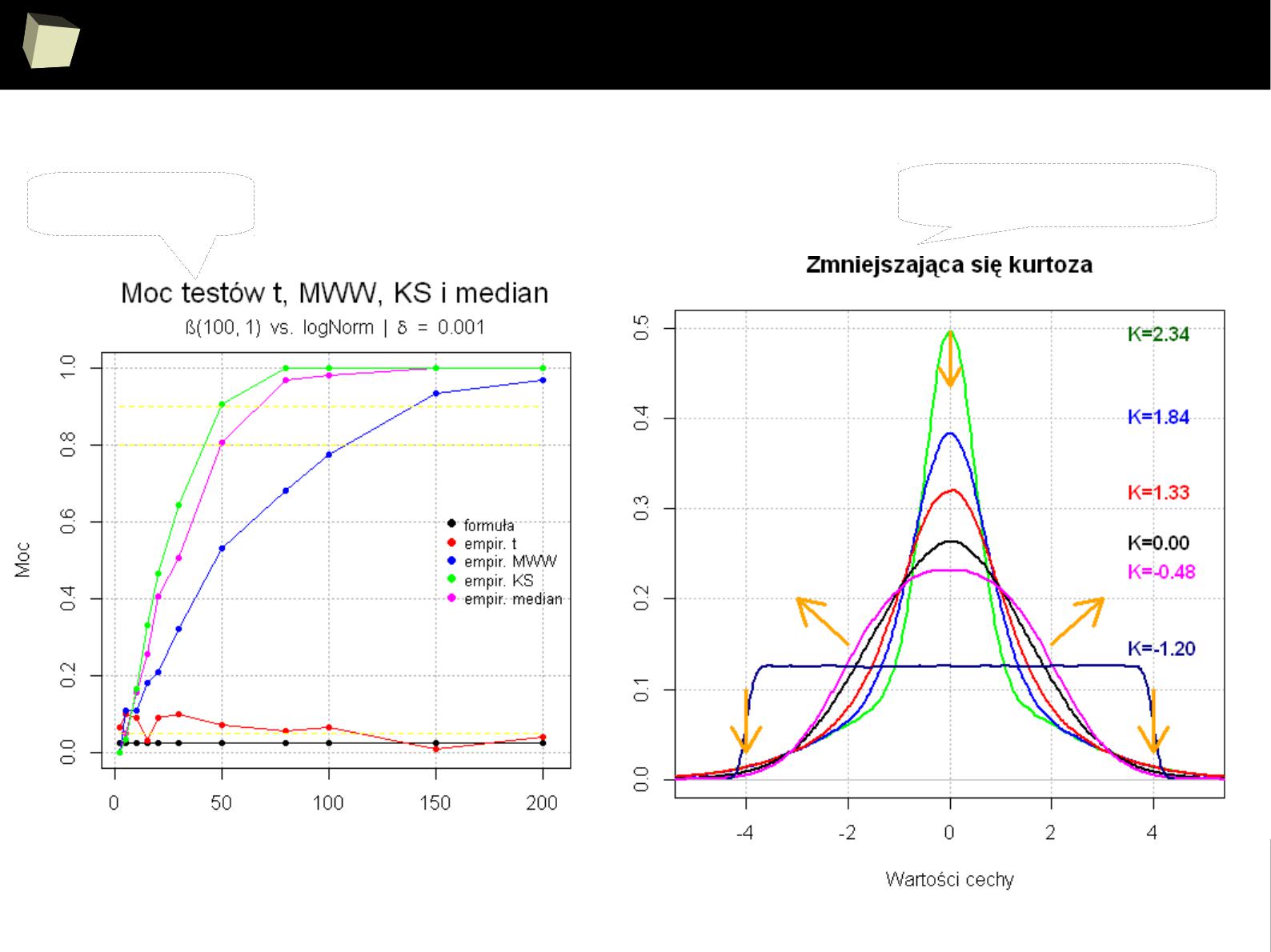

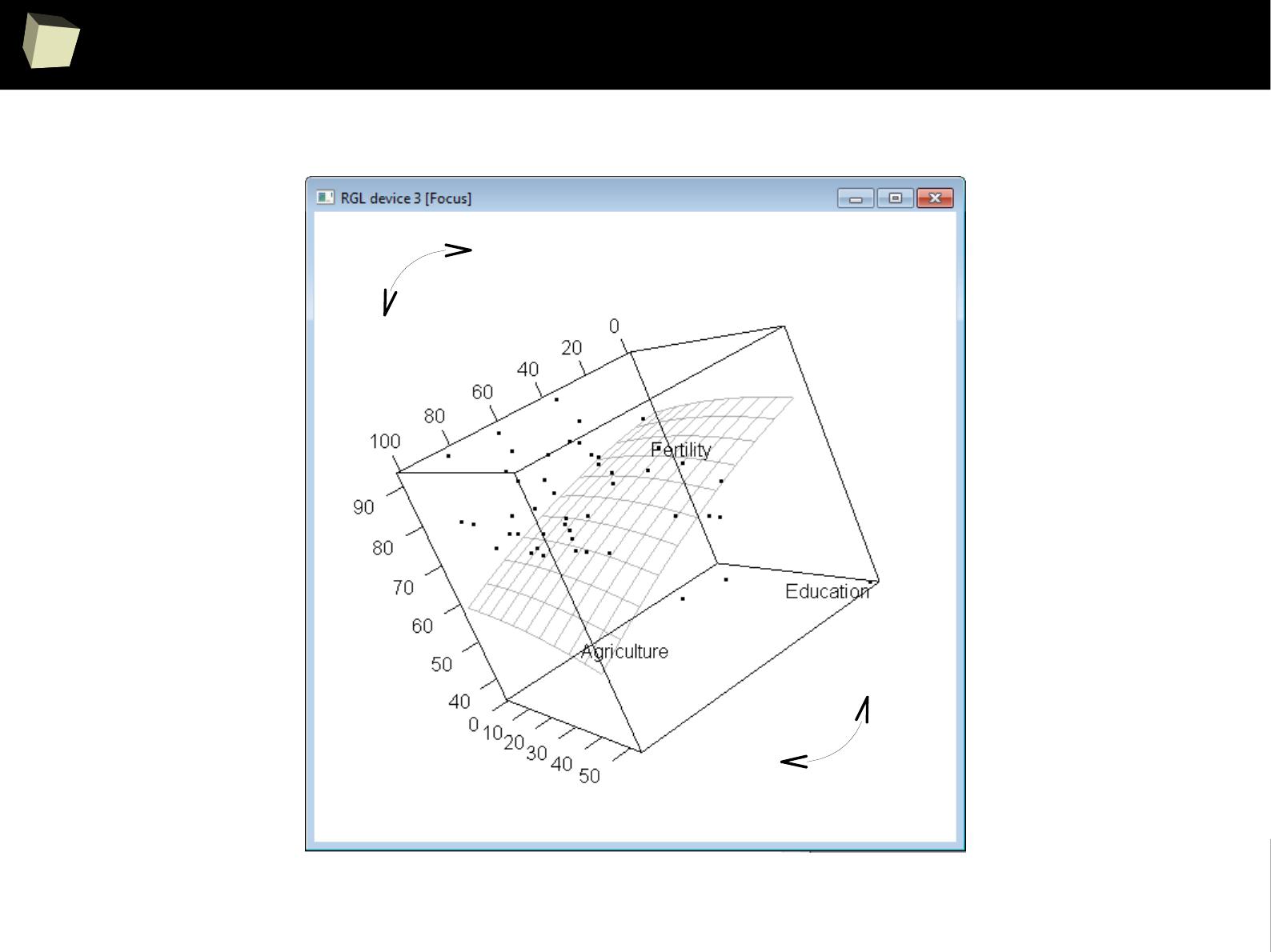

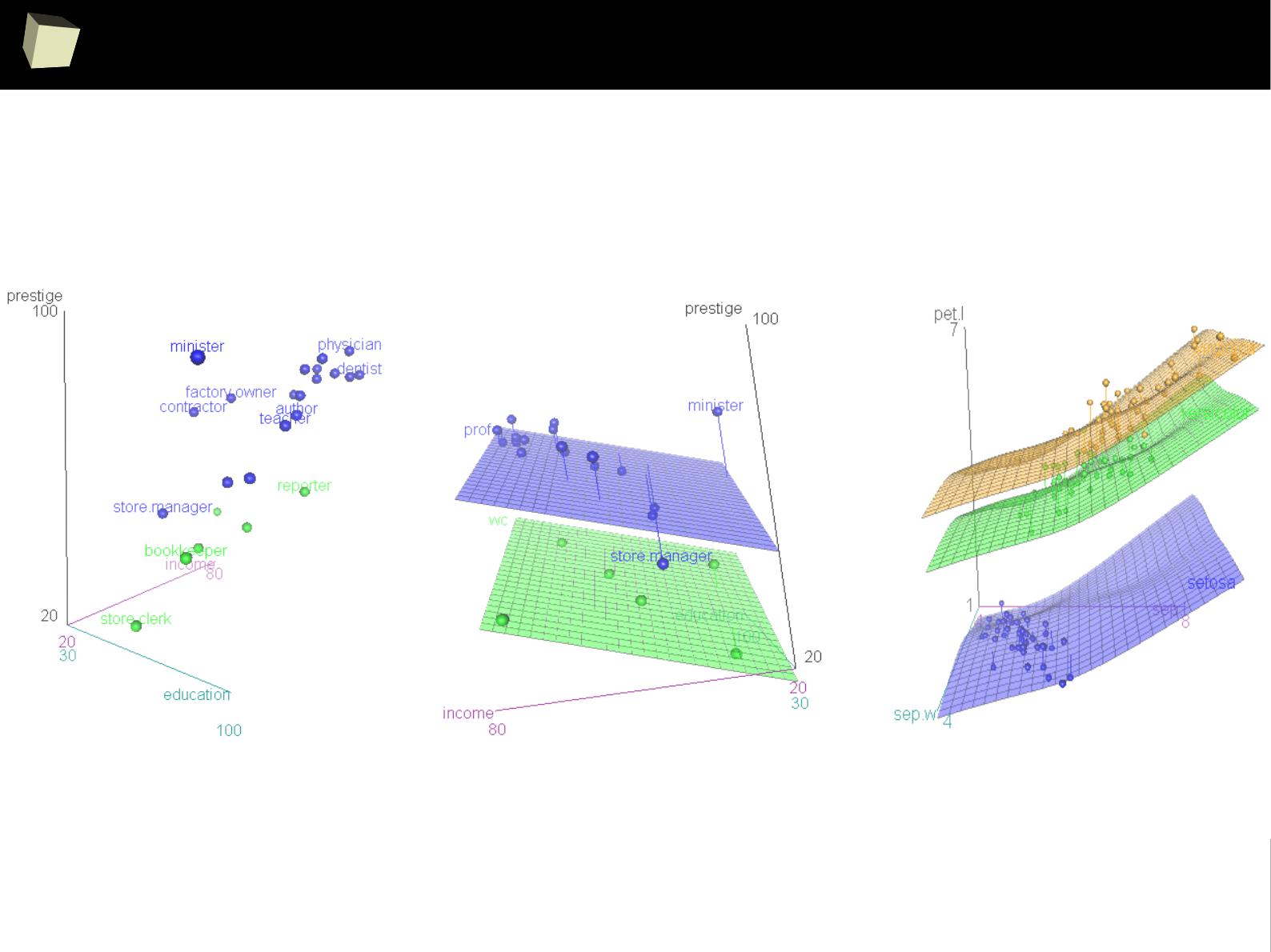

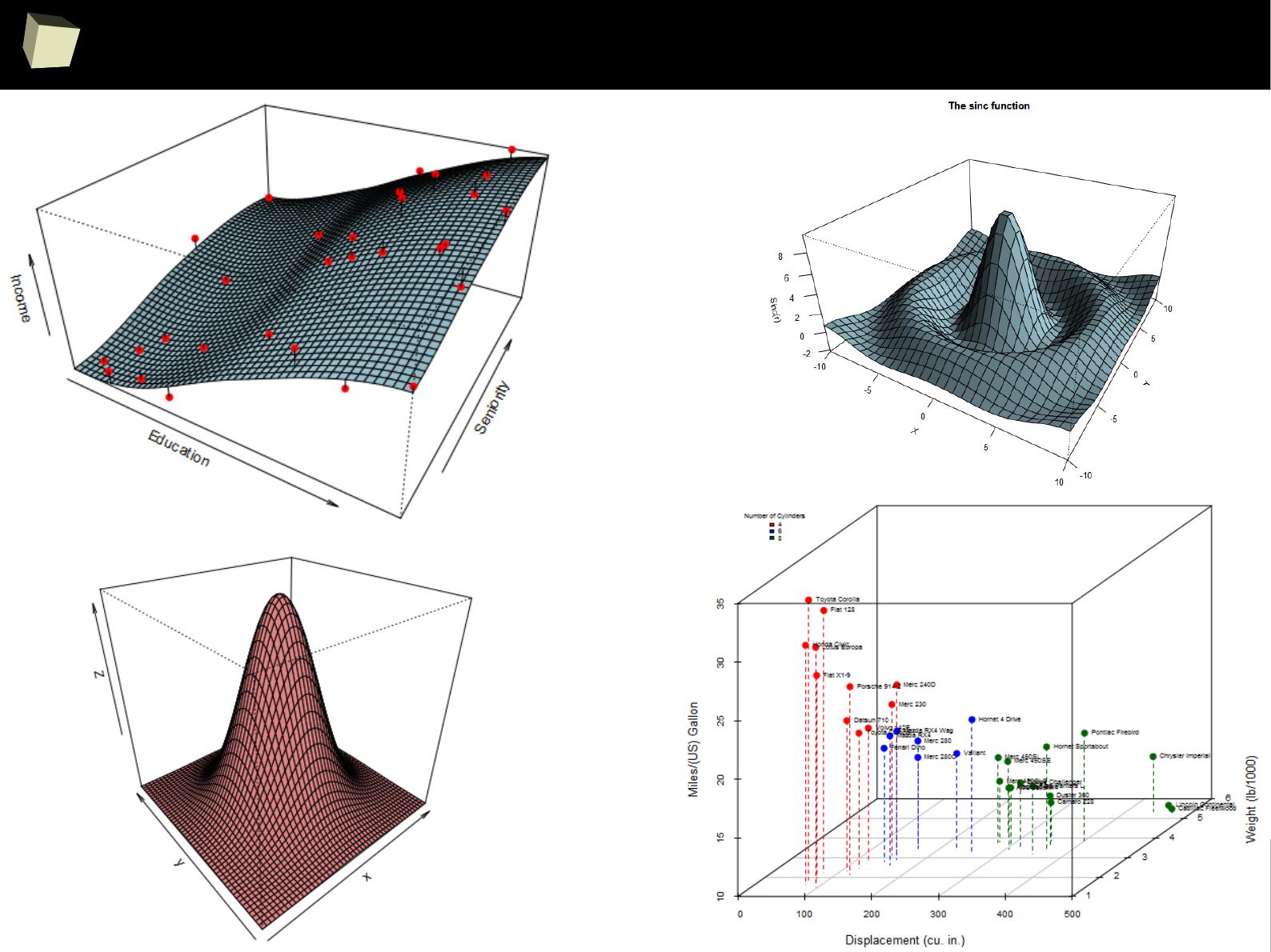

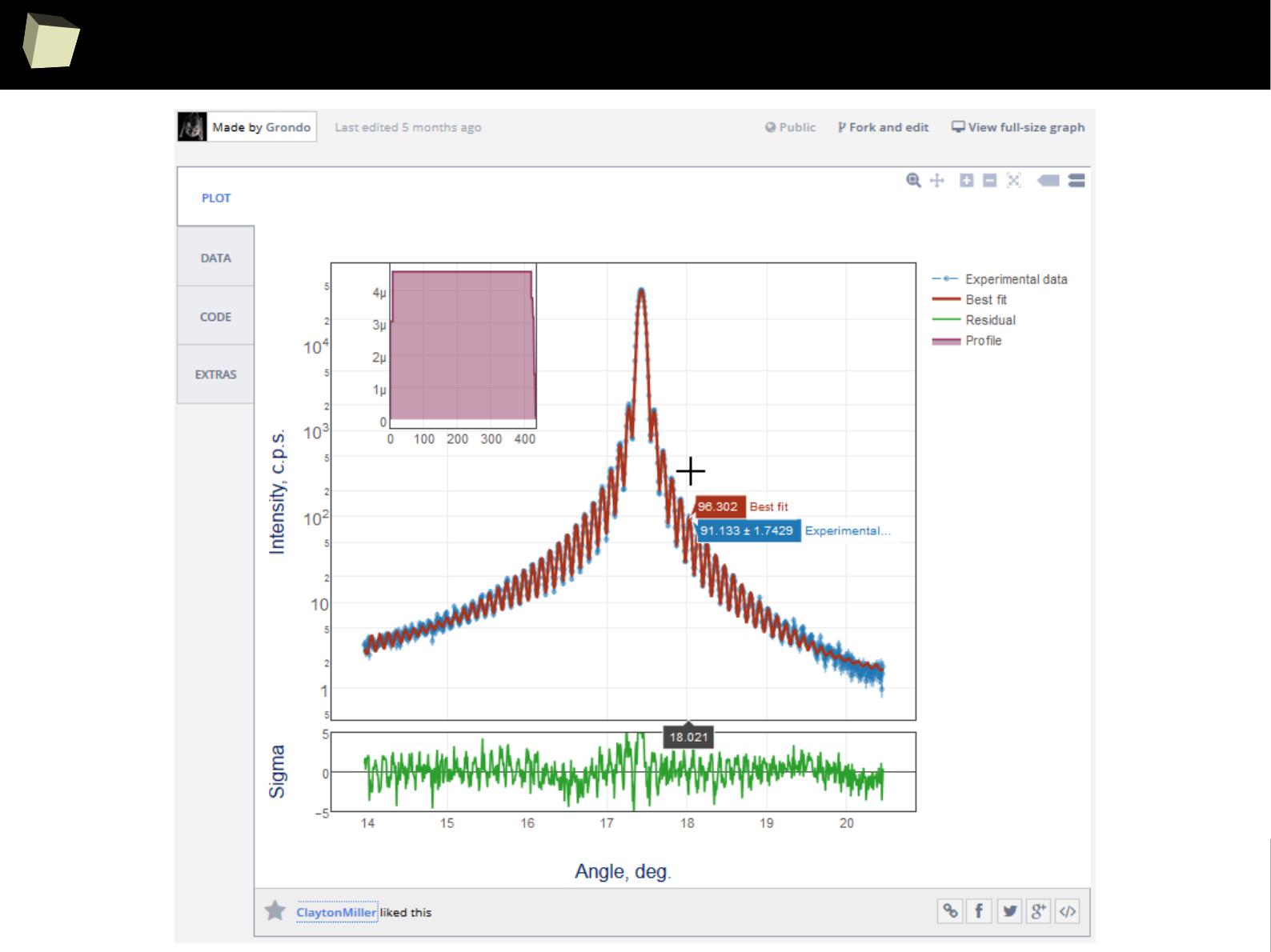

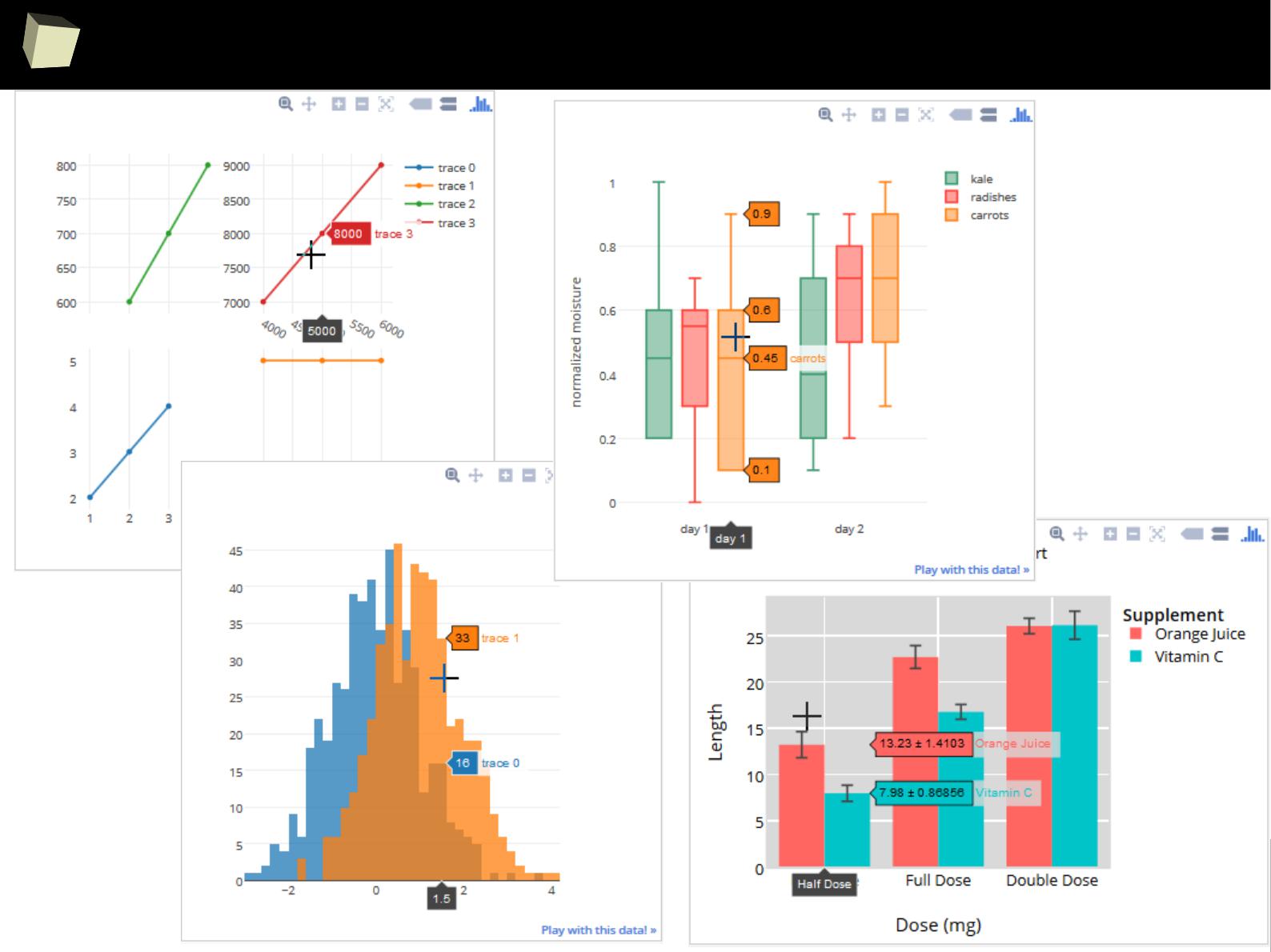

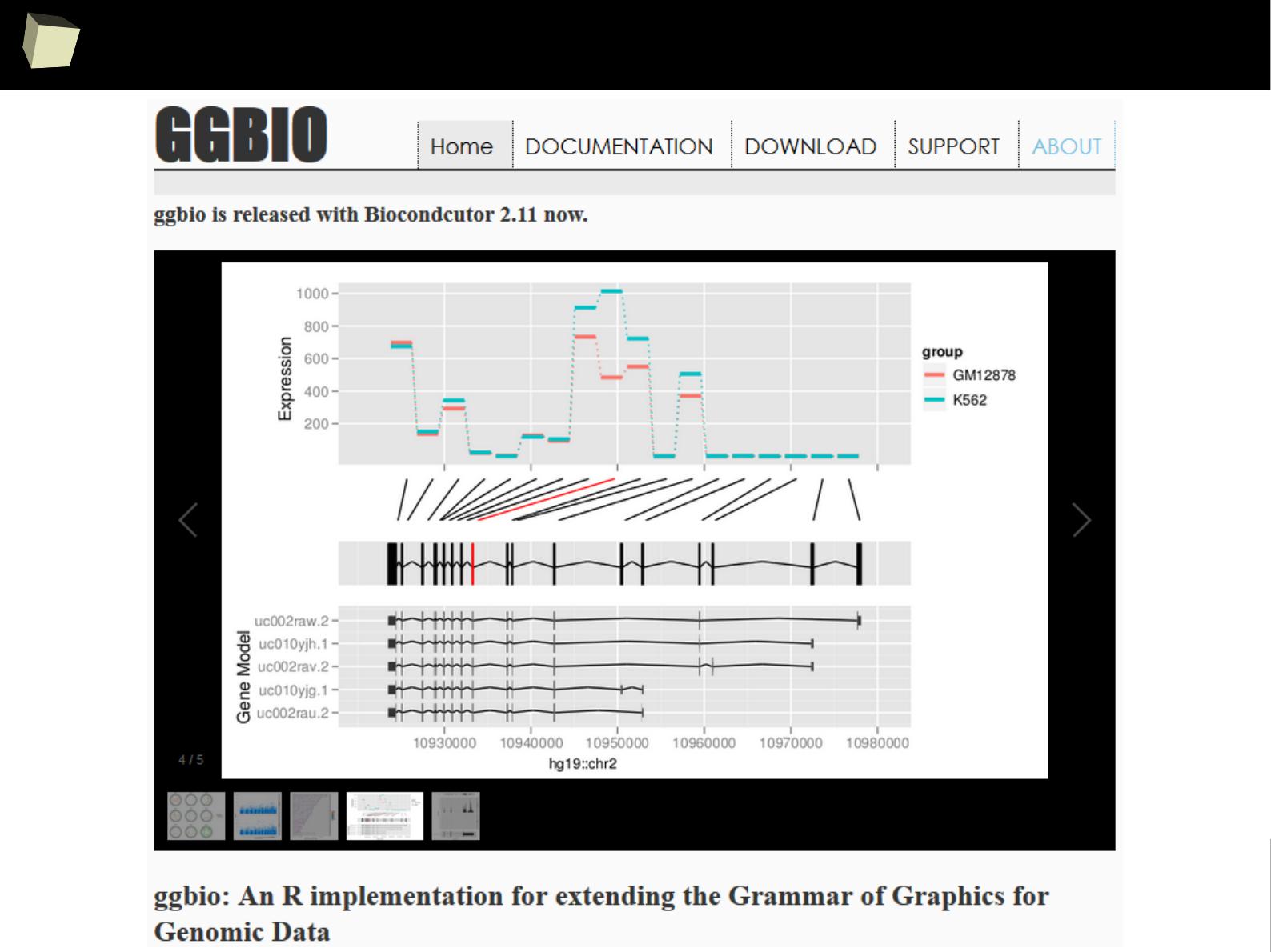

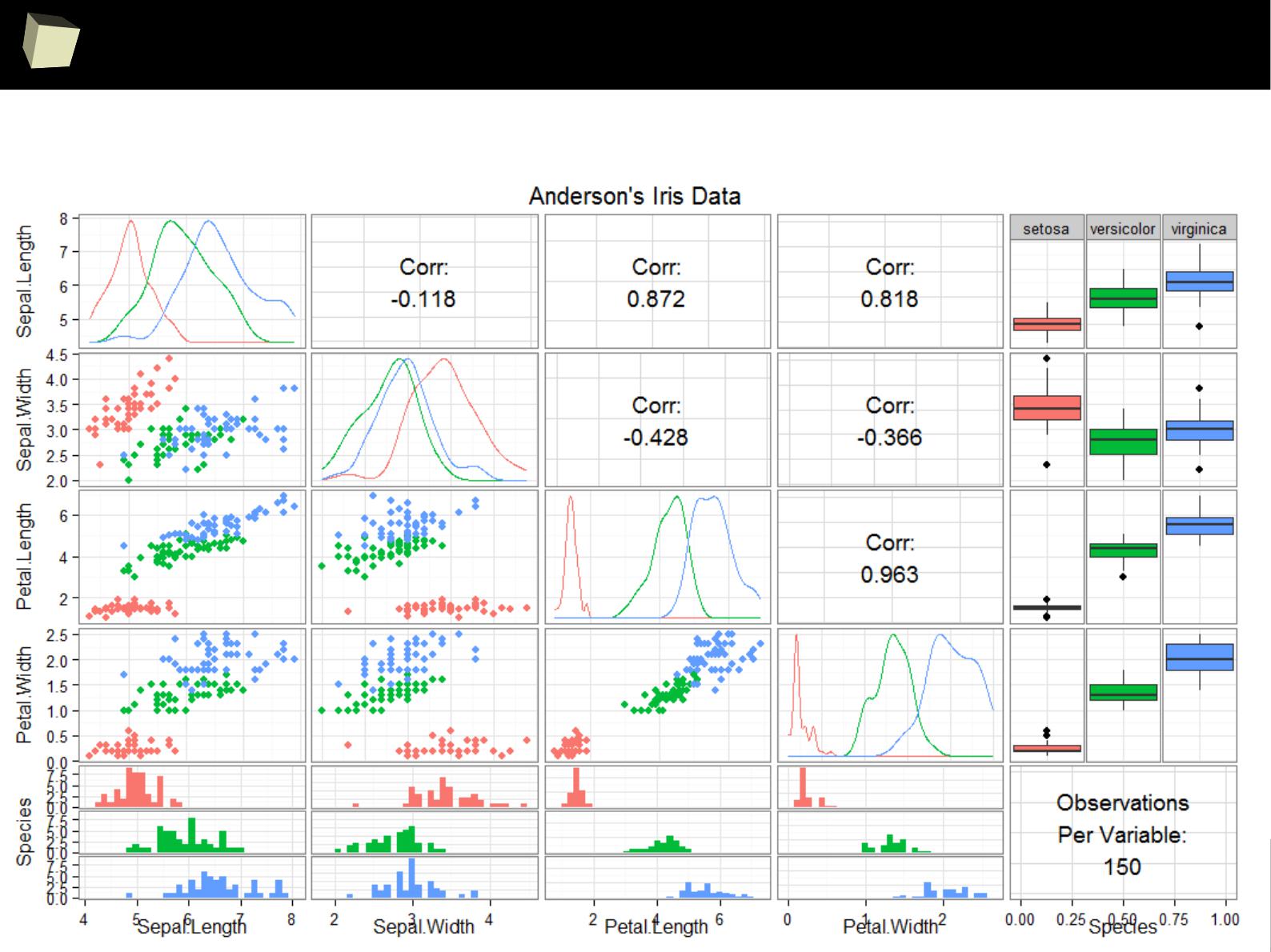

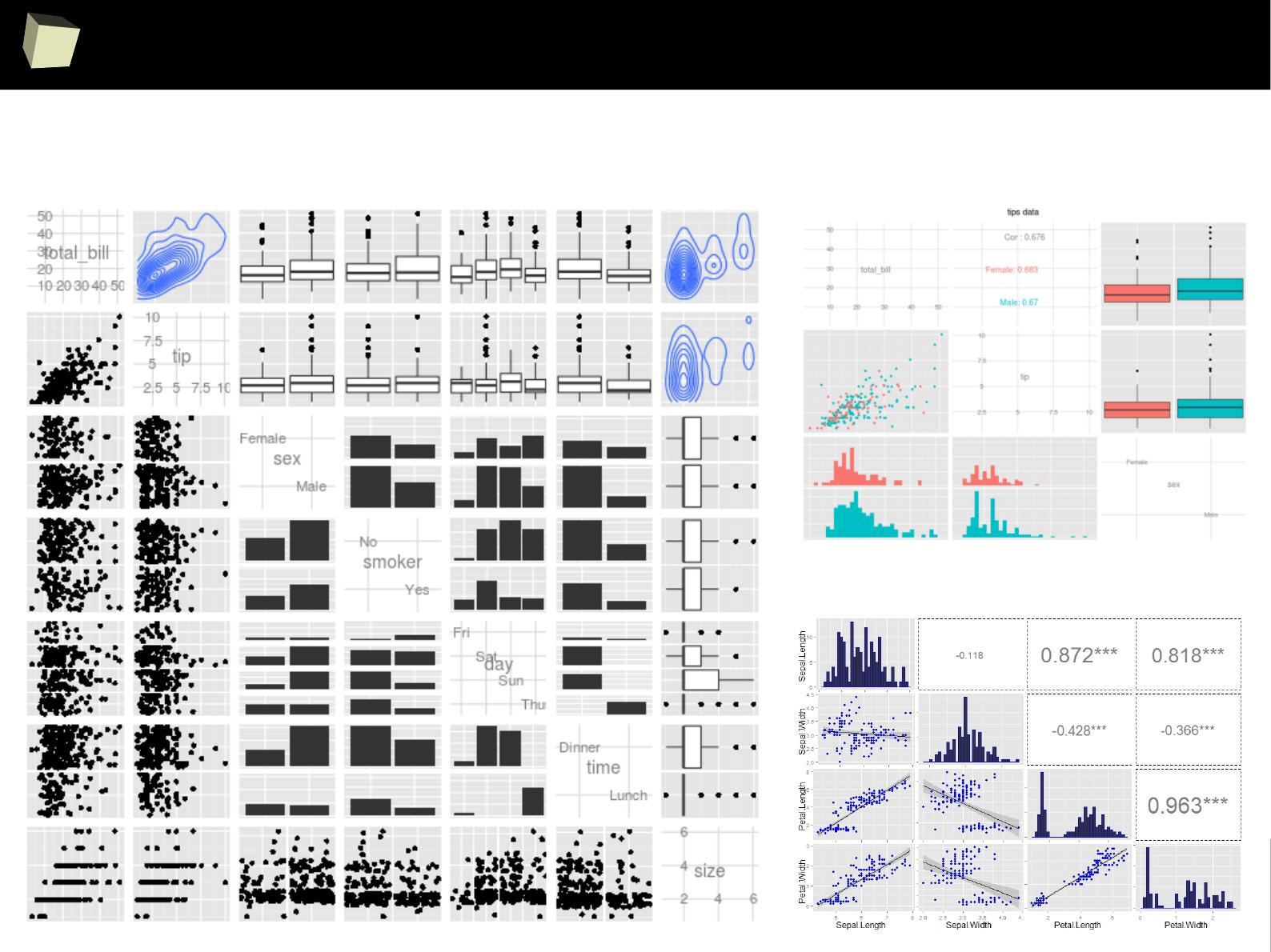

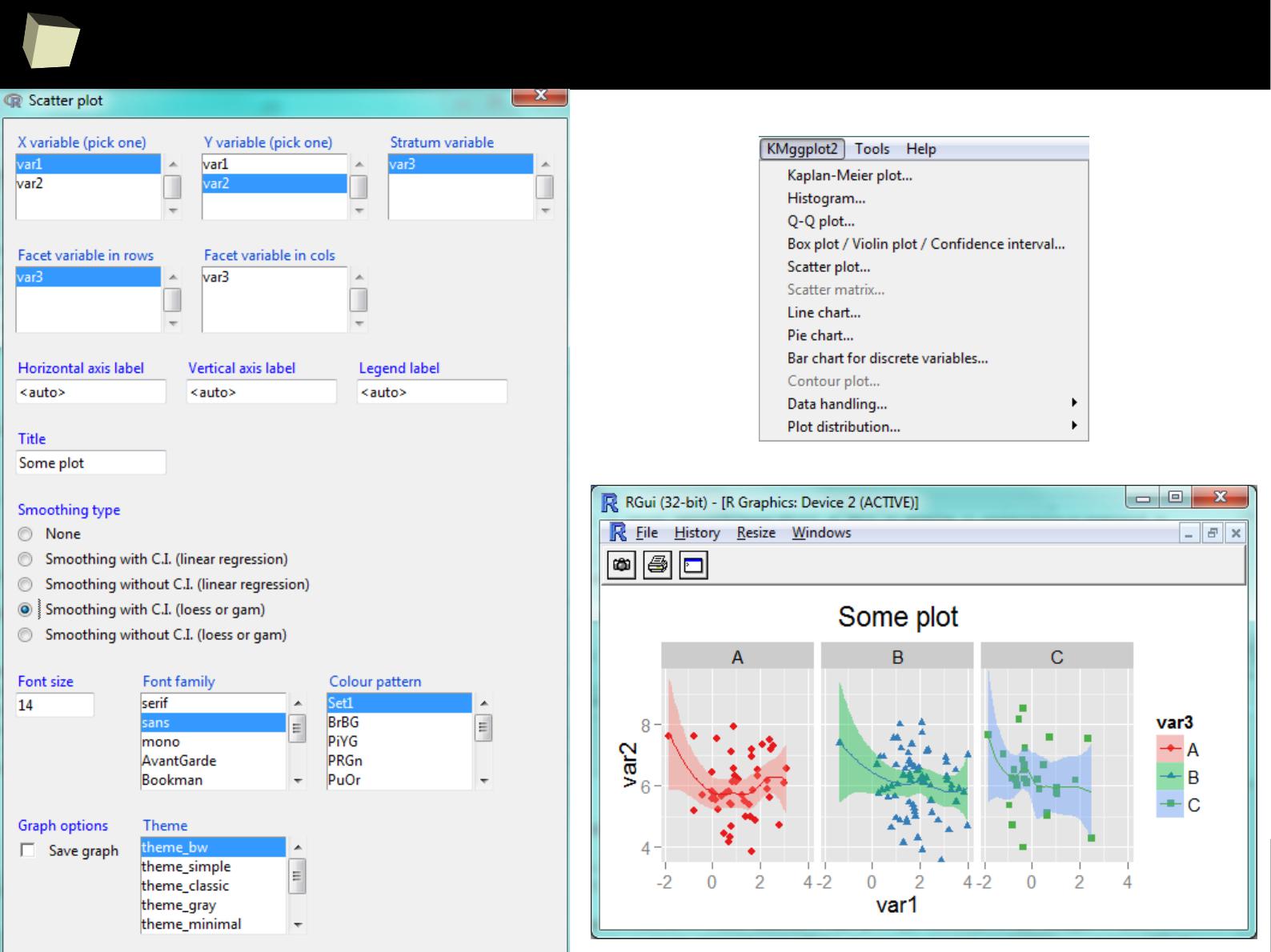

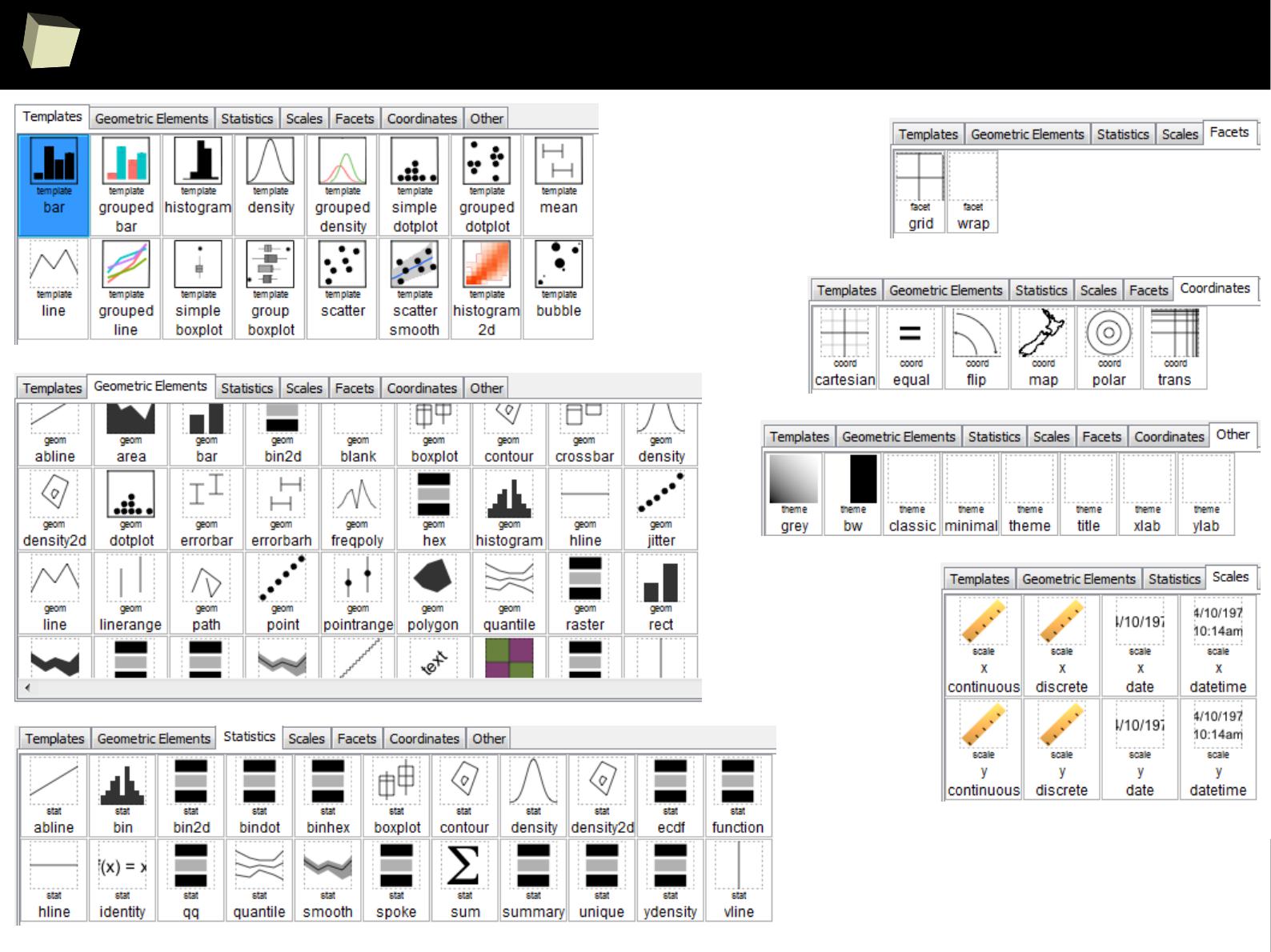

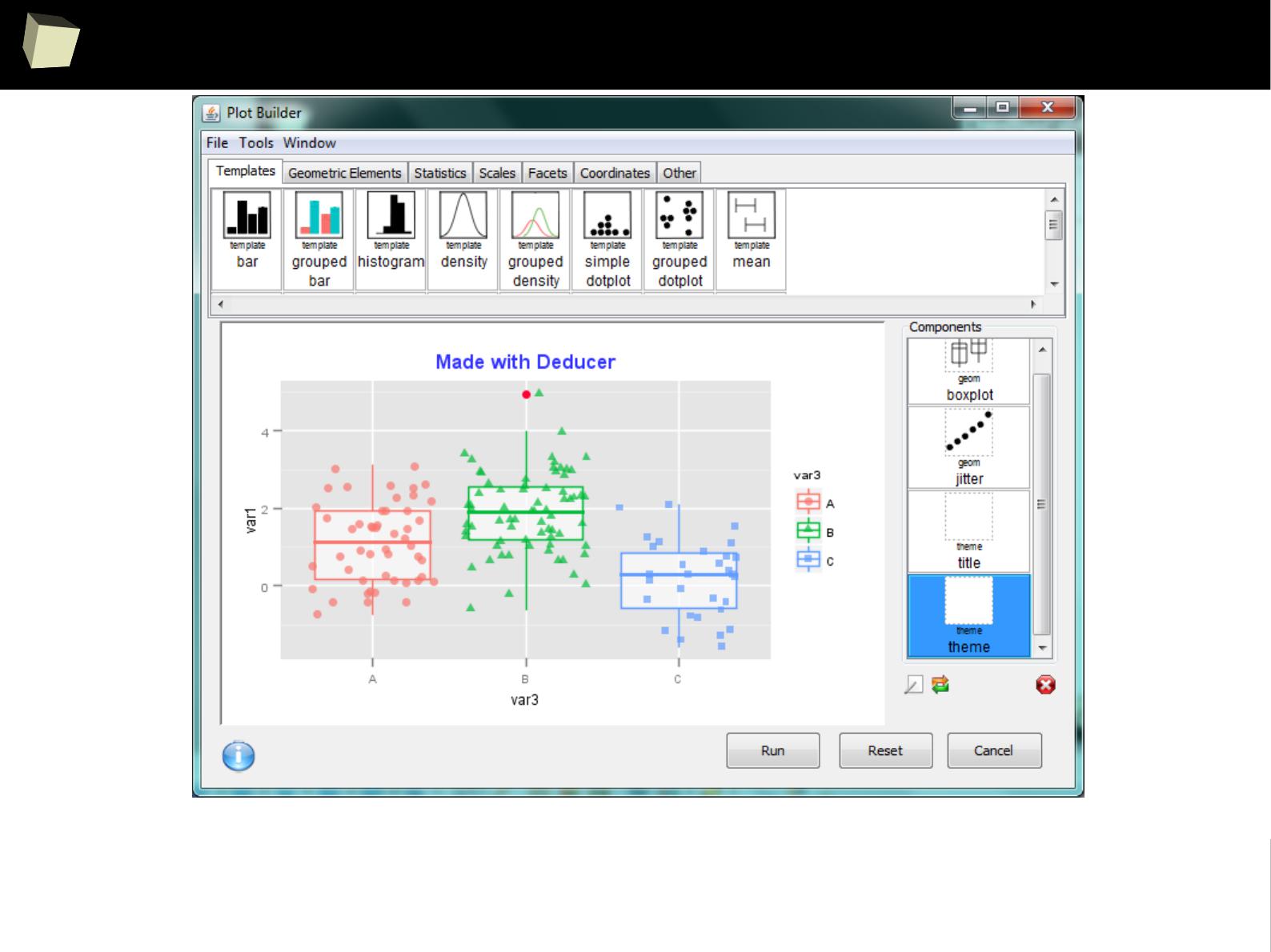

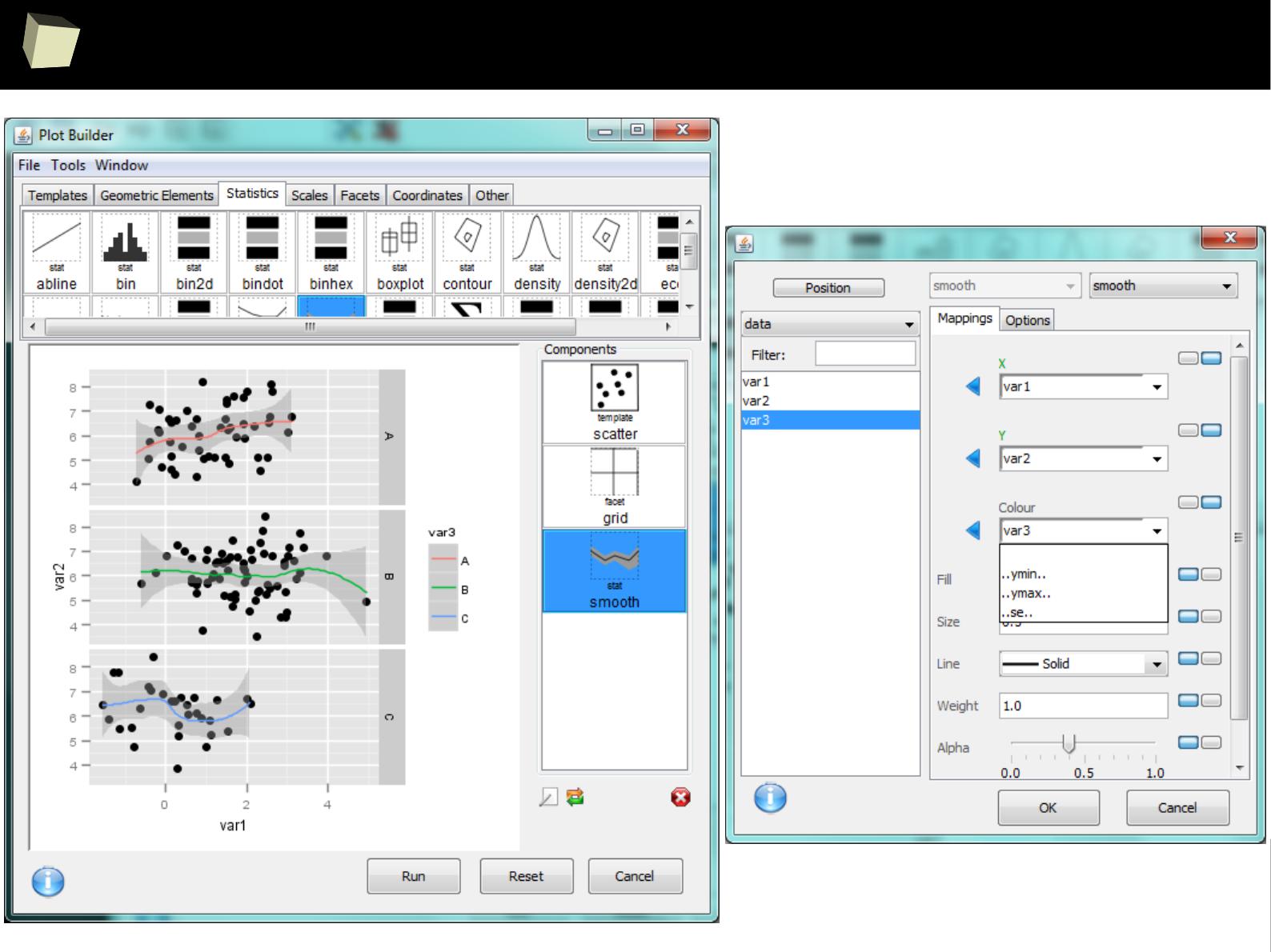

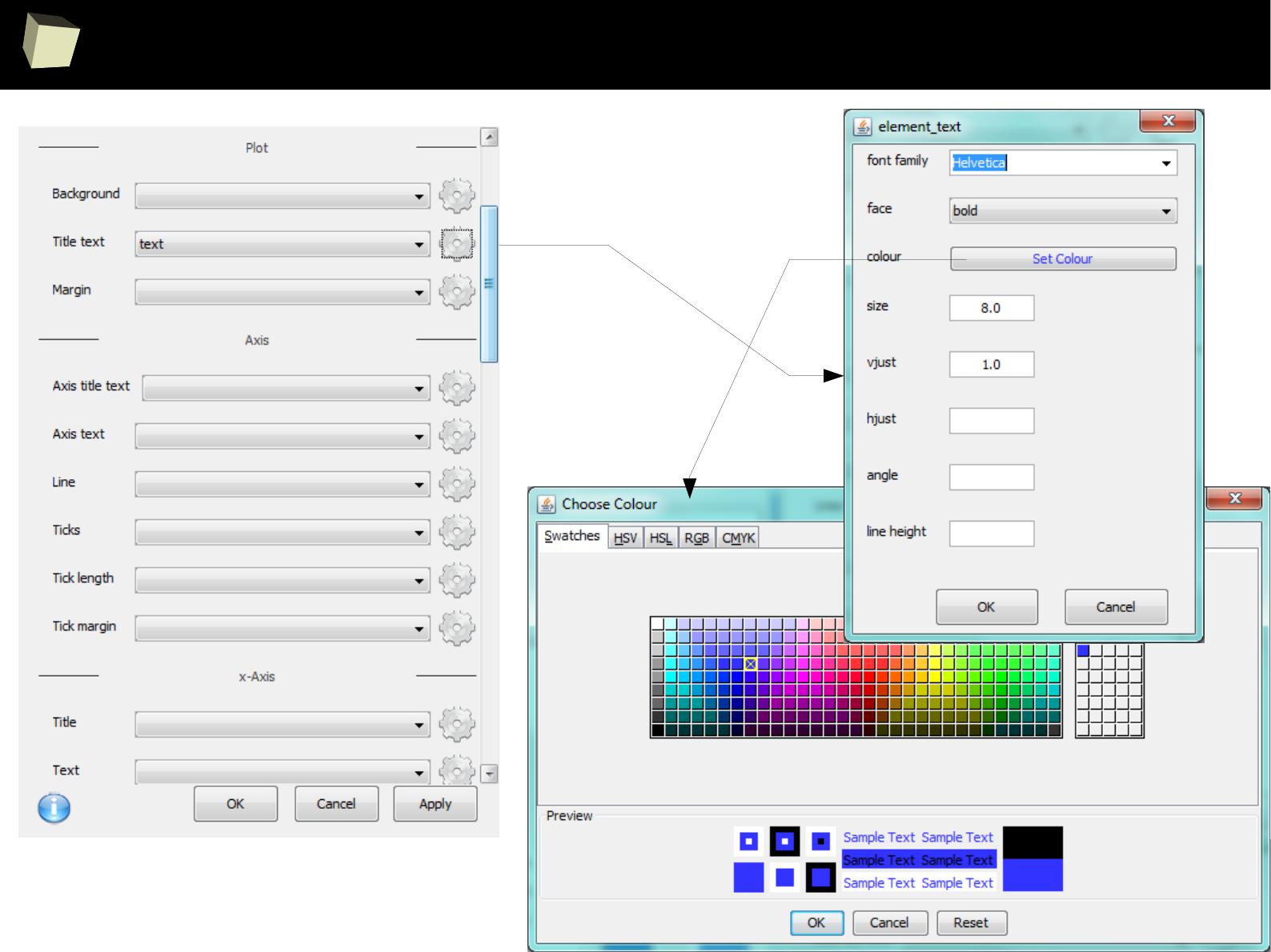

IX 2/3 :) Graphics – all have waited for this moment :)

X There are many options to optimize the code

XI R is able to handle large amount of data

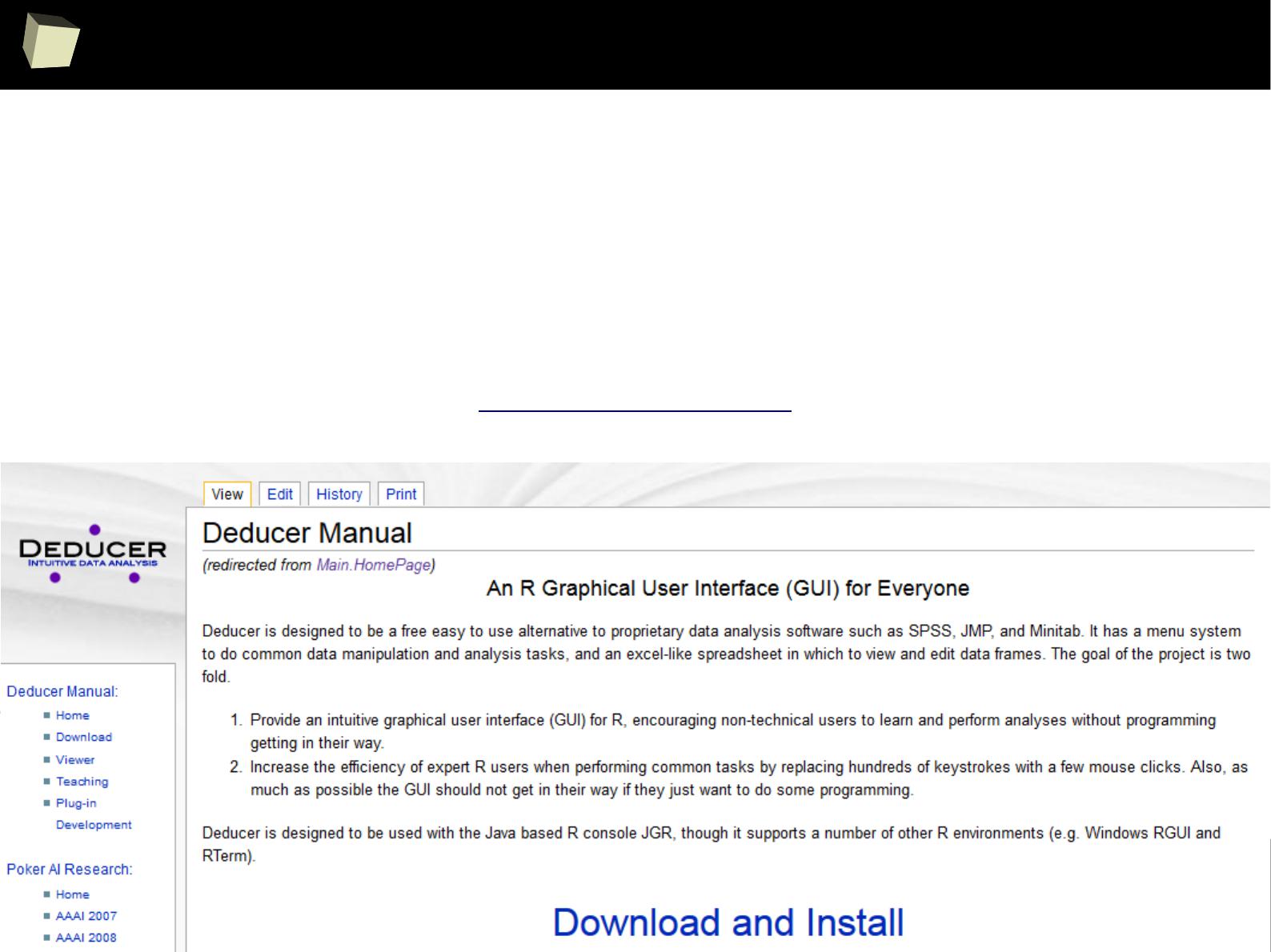

XII R has a set of fancy tools and IDEs

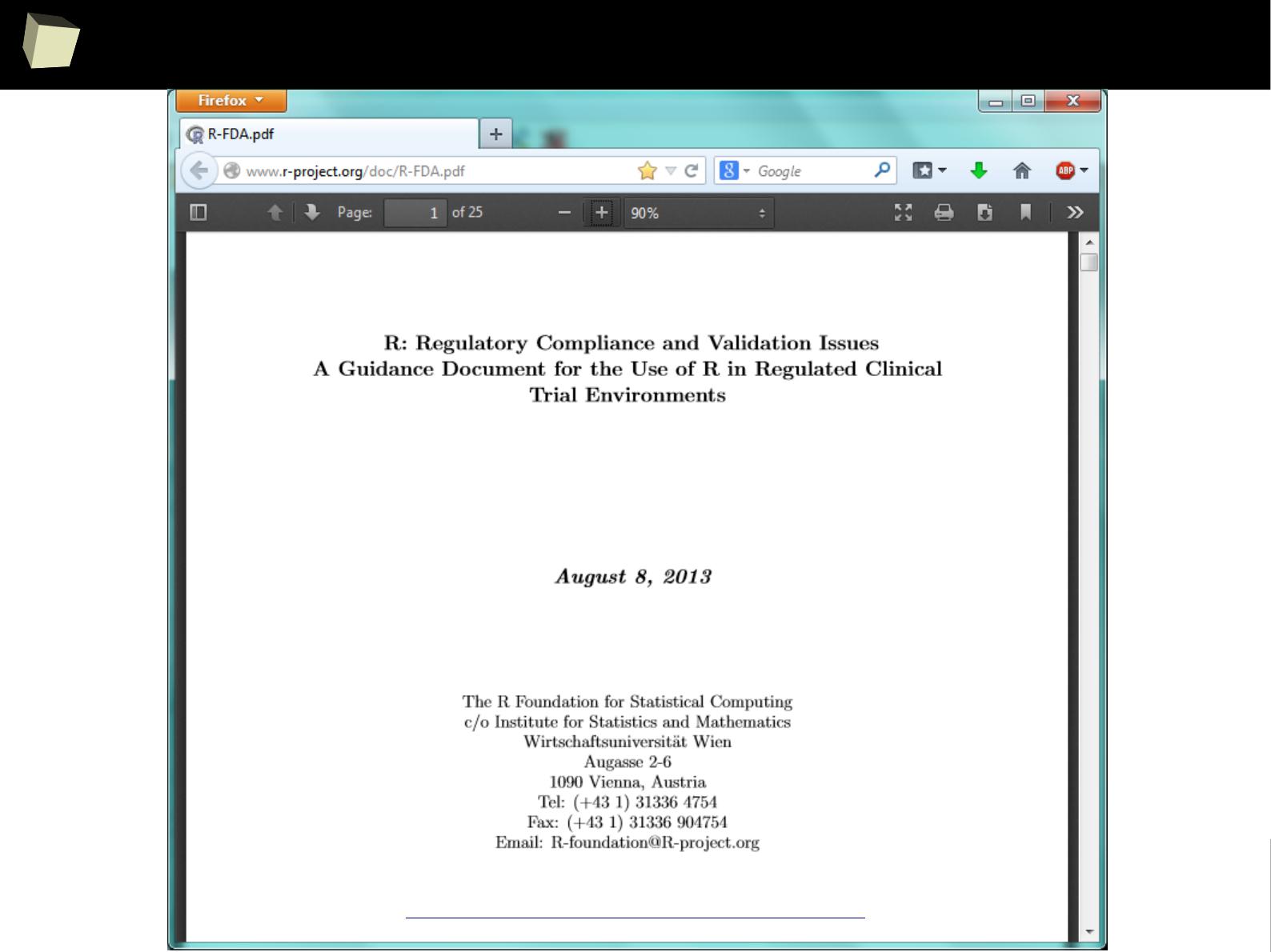

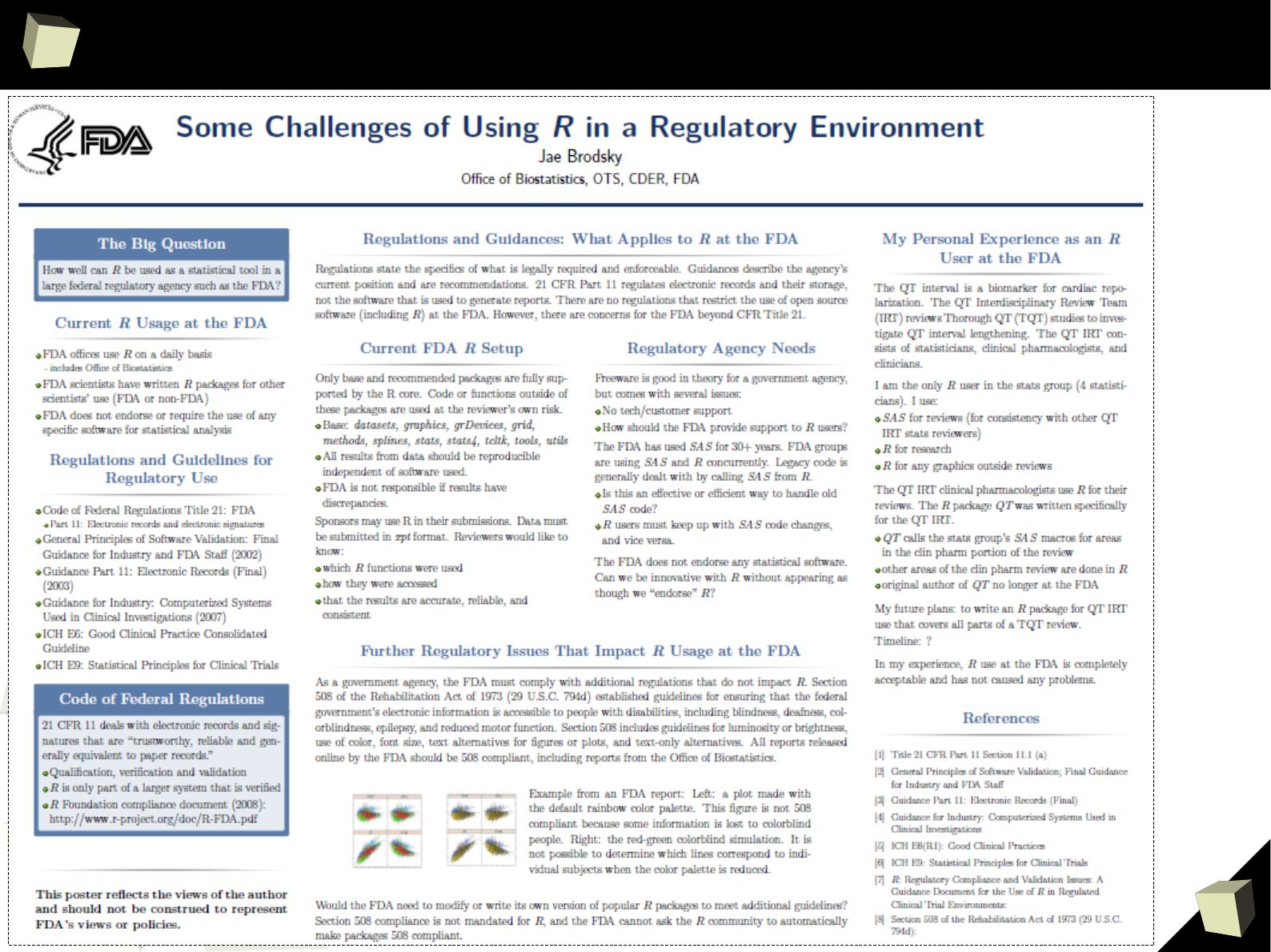

XIII FDA: R is OK for drug trials! - it should start from this slide :)

7

IntRoduction

8

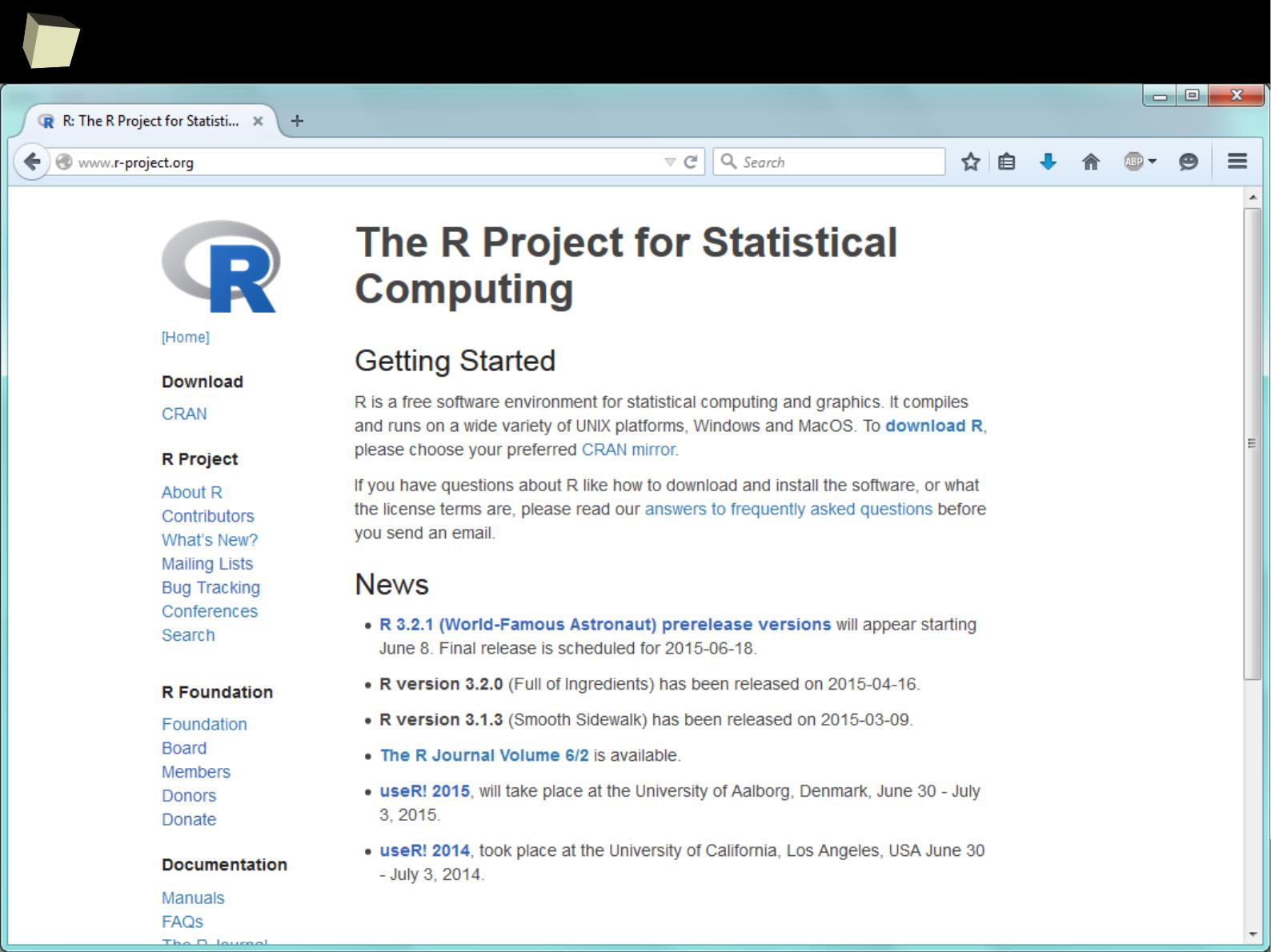

...so, what is “R”?

In simply words, R is a free software environment for statistical computing, data

manipulation and charting, widely used in the scientific world. It is also the

name of a high-level, interpreted programming language.

R

(the successor of S)

was created in 1992 by Ross Ihaka and Robert Gentleman at

University of Auckland. Now it is developed by the R Development Core Team.

http://www.r-project.org

9

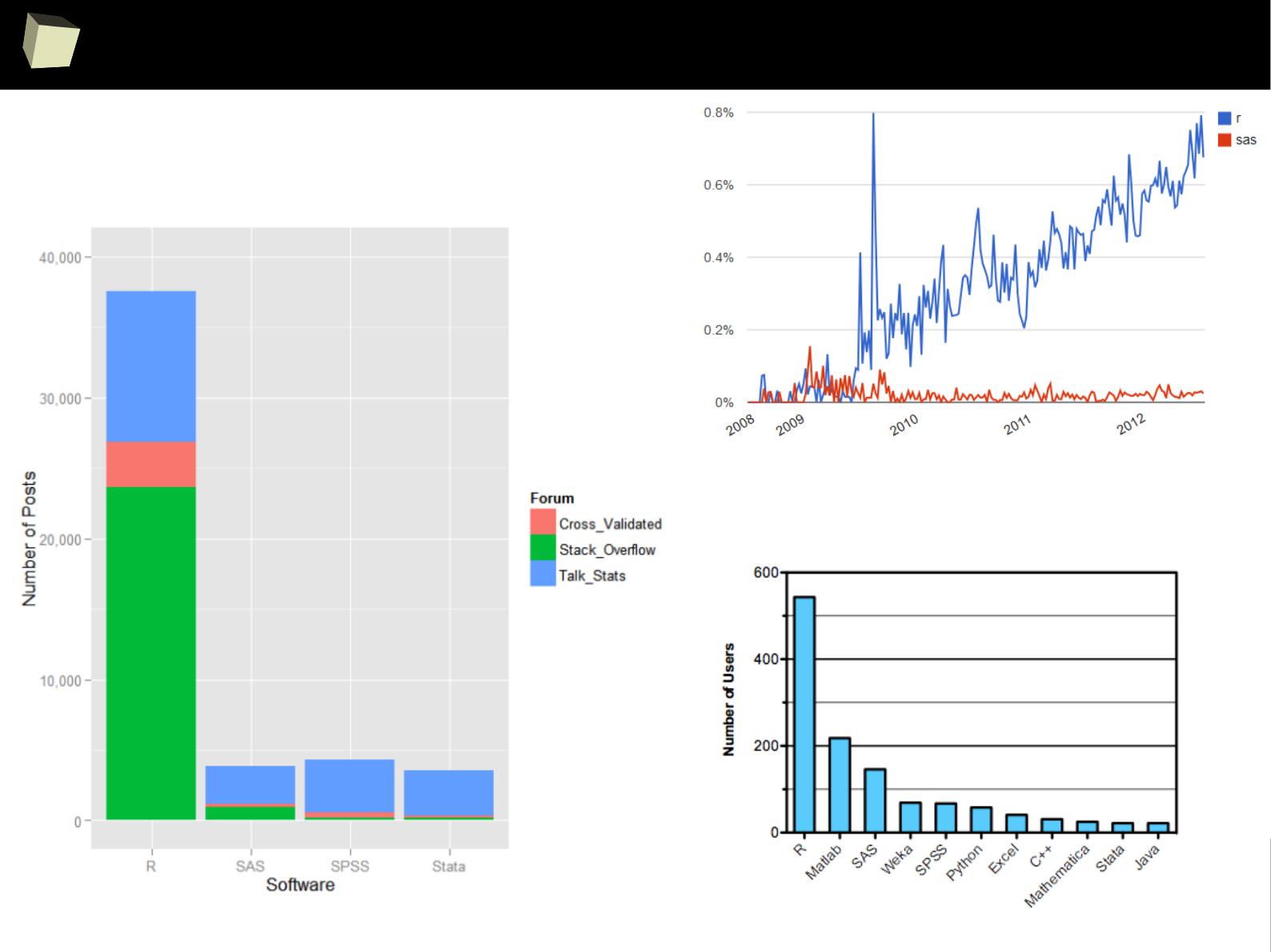

is it a popular softwaRe ?

[…] R is also the name of a popular programming language used by

a growing number of data analysts inside corporations and

academia. It is becoming their lingua franca partly because data

mining has entered a golden age, whether being used to set ad

prices, find new drugs more quickly or fine-tune financial models.

Companies as diverse as Google, Pfizer, Merck, Bank of America,

the InterContinental Hotels Group and Shell use it.

Data Analysts Captivated by R’s Power (The New York Times)

http://tinyurl.com/captivated-by-r

1

0

...so, what is “R”?

Short characteristics:

●

Description: computational environment + programming language

●

Developer: R Development Core Team

●

Operating systems: cross-platform: Windows, Unix, Linux, Linux-based “mobile“ OS:

(Android, Maemo, Raspbian), Mac OS X

●

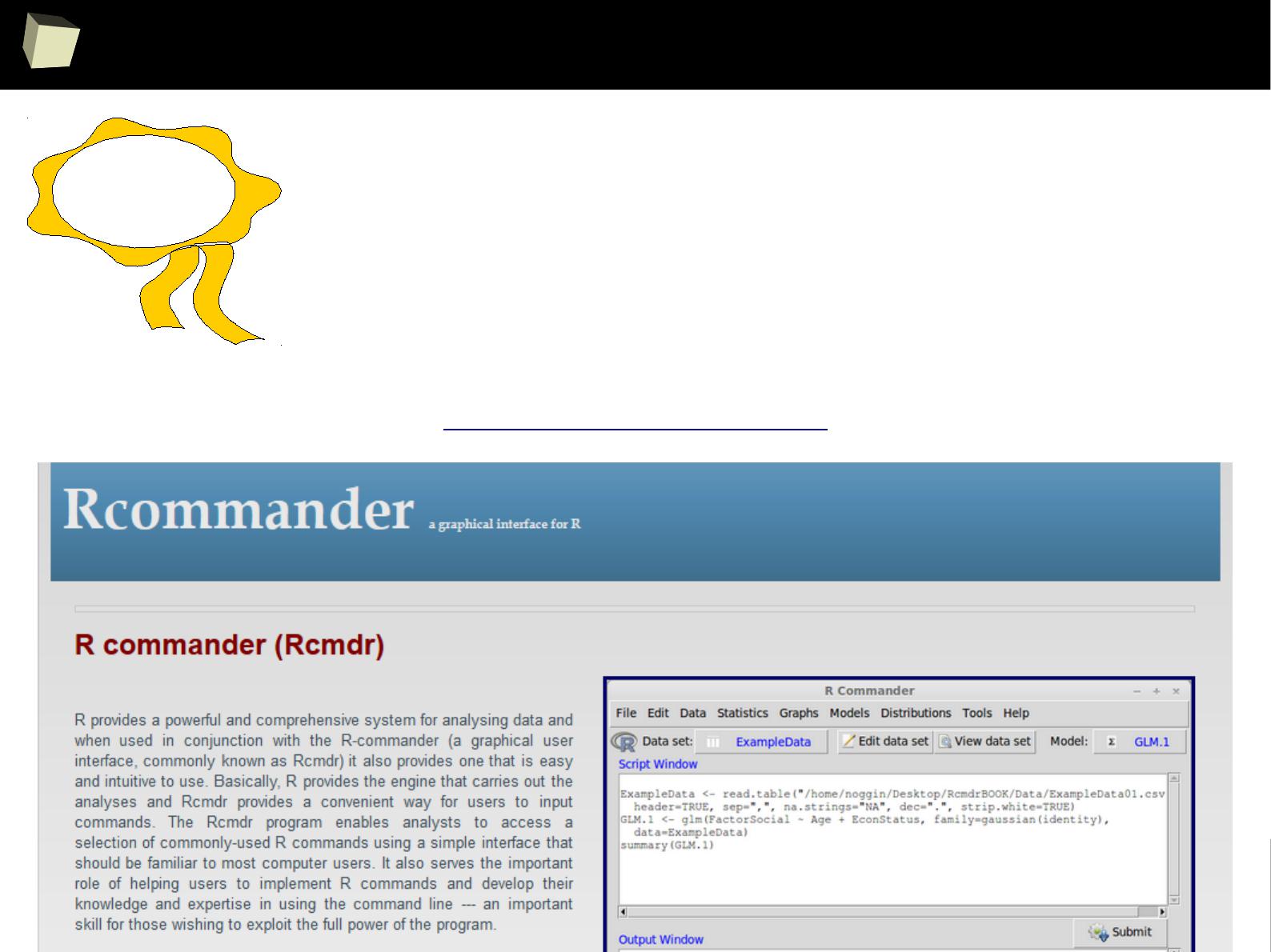

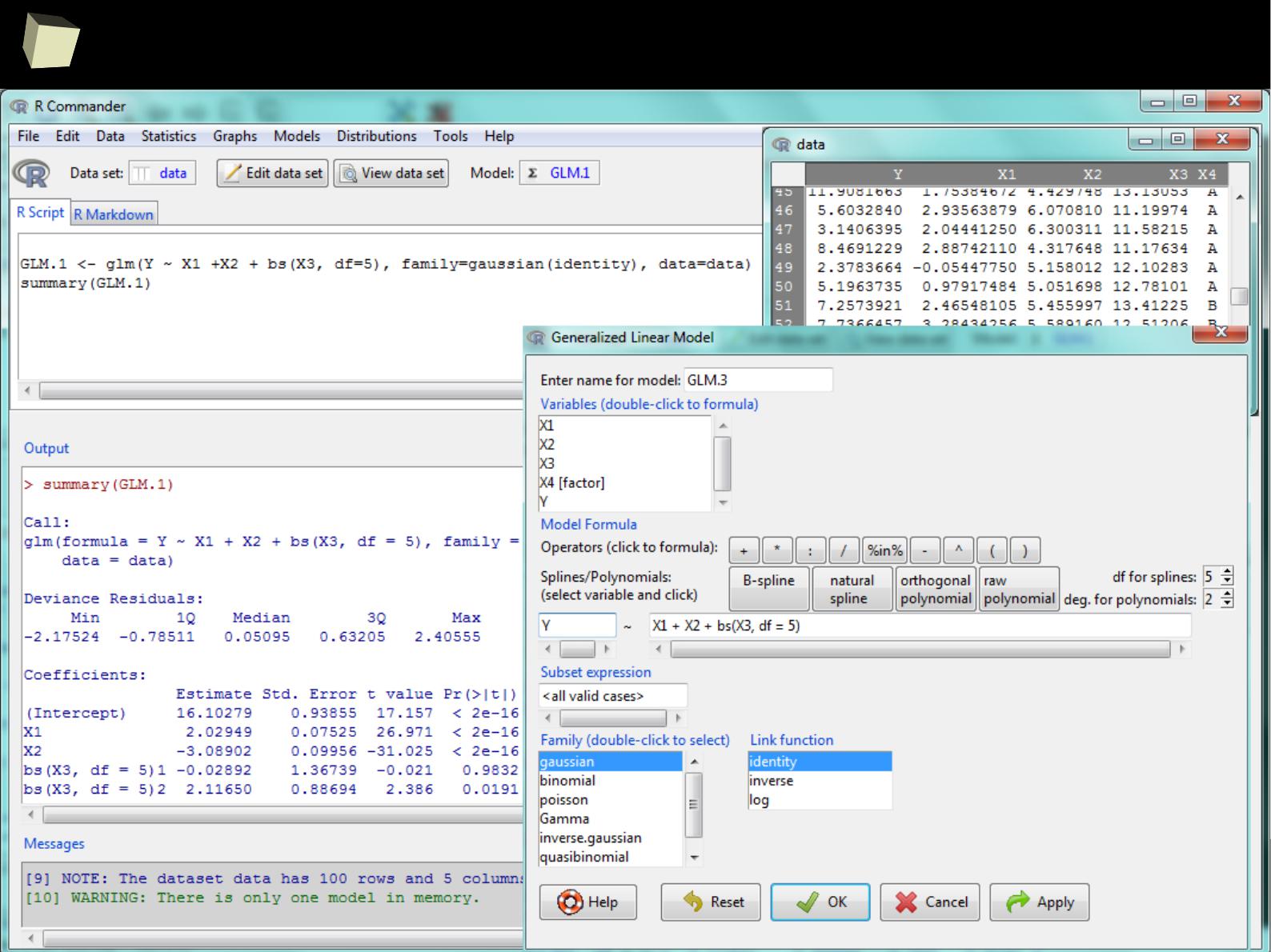

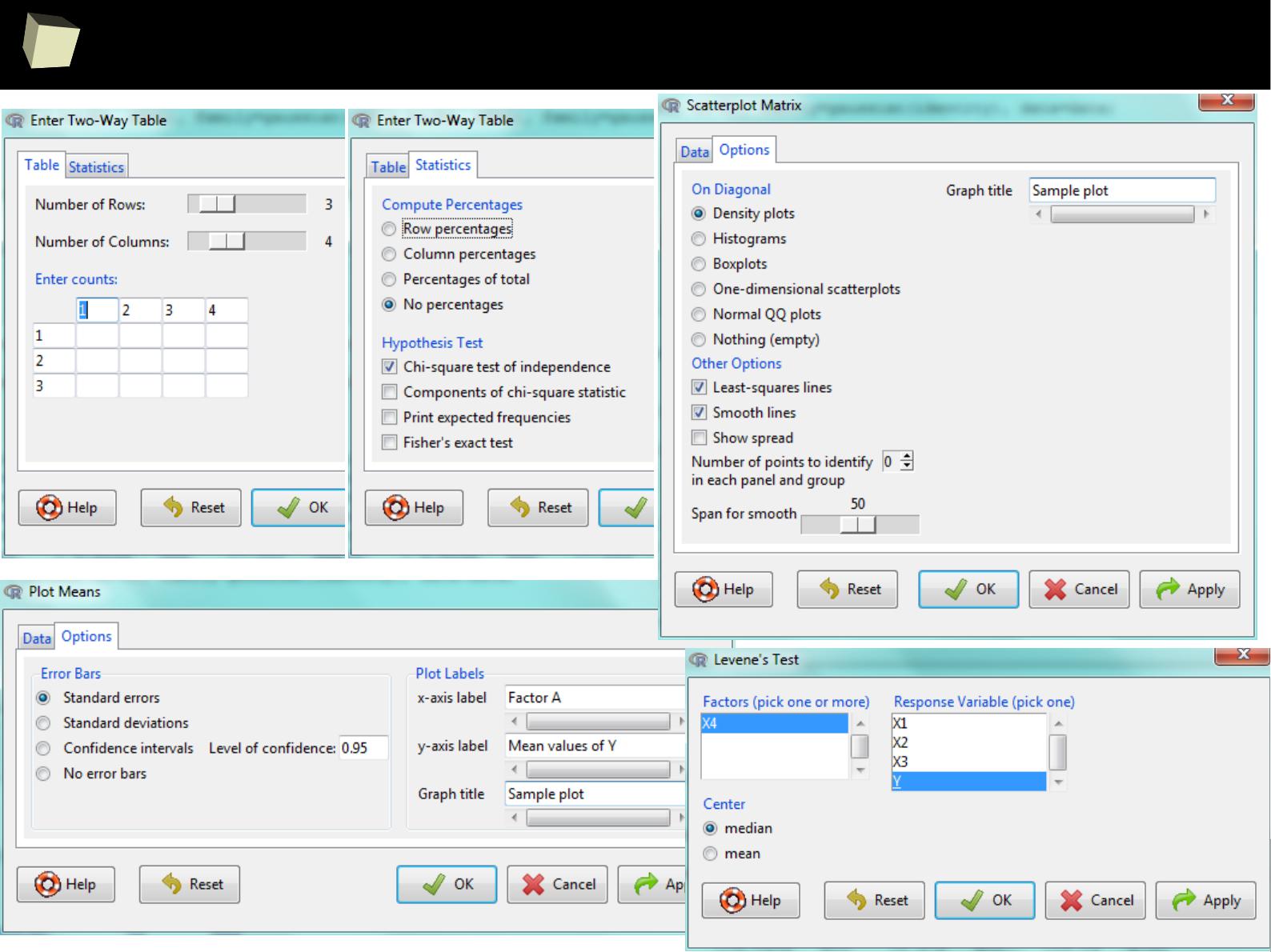

Form: command line + third-party IDEs and editors

RStudio, RCommander, etc.

●

Infrastructure: R core library + shell + libraries (base and third-party)

●

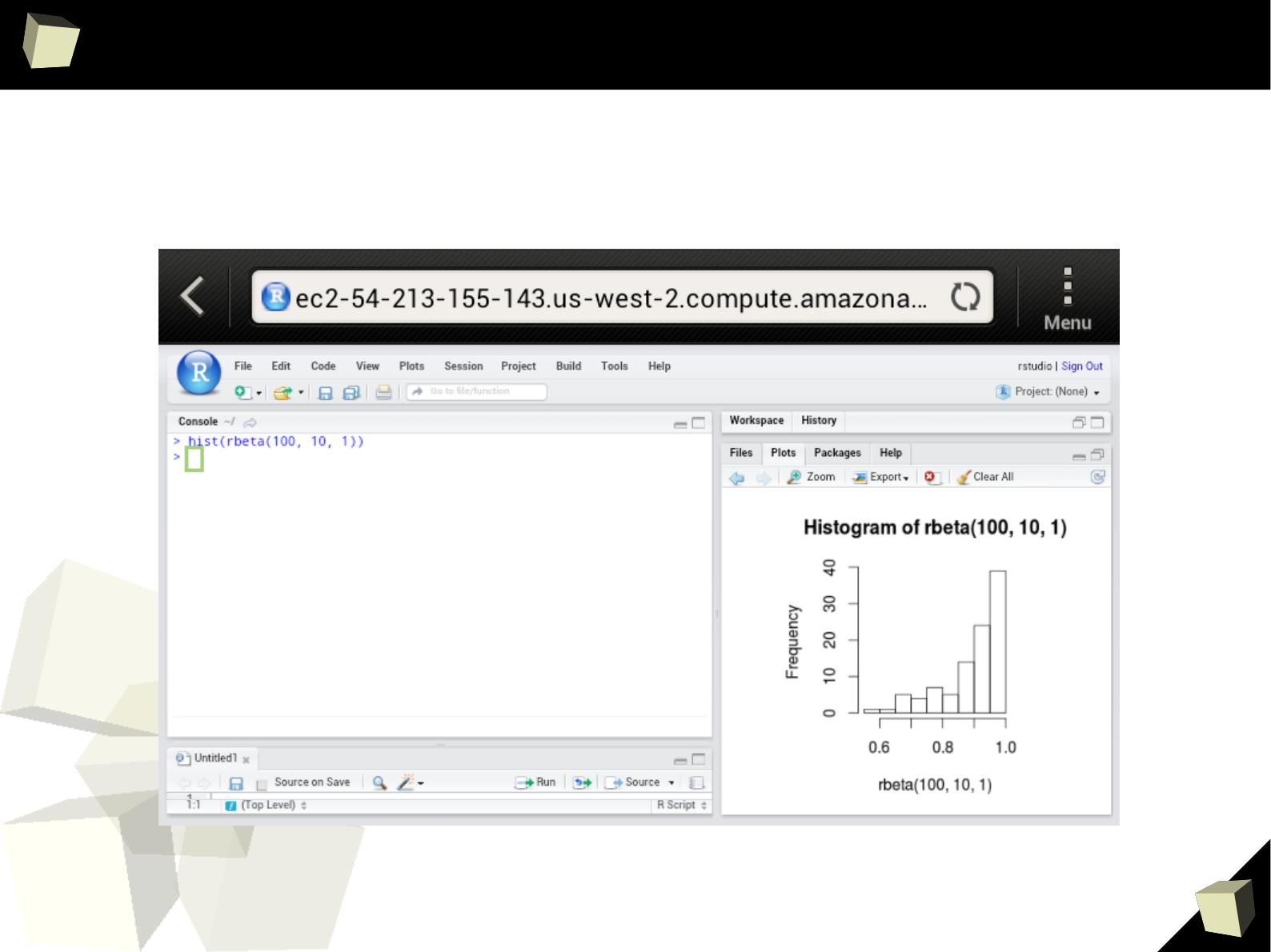

Model of work: 1) standalone application, 2) standalone server, 3) server process

●

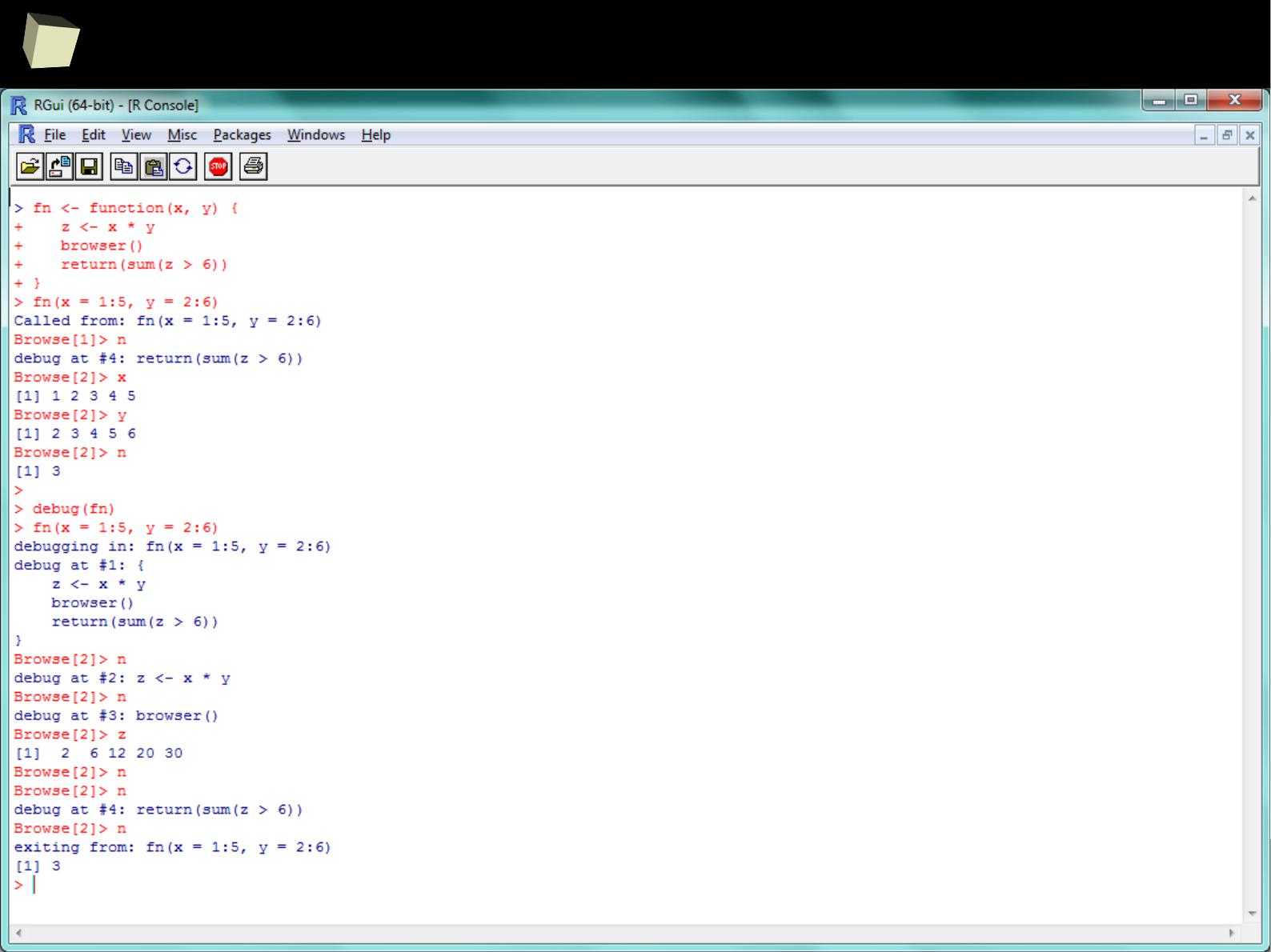

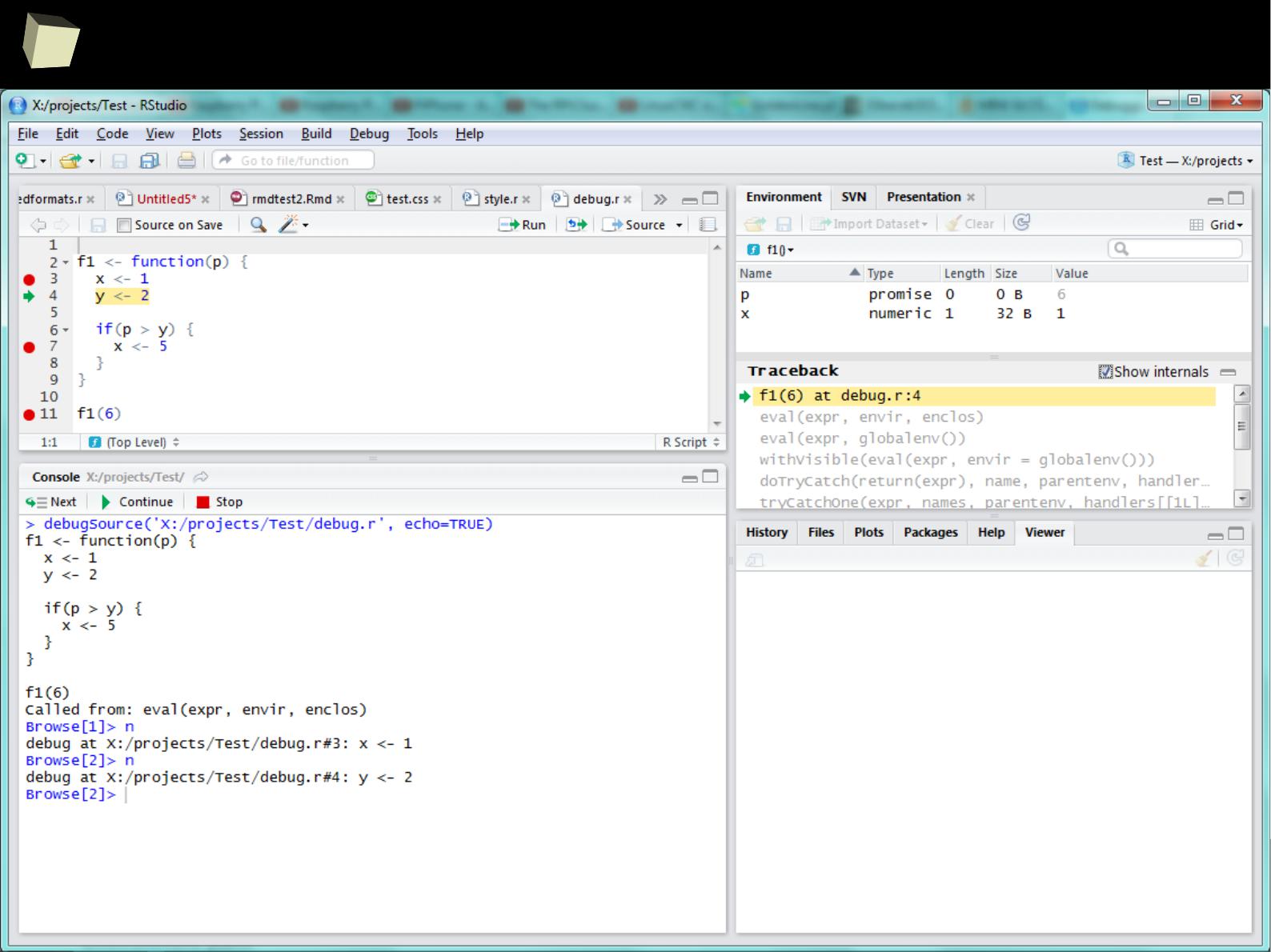

Programming lang.: interpreted, high-level with dynamic typing; debugger onboard

●

Paradigm: 1) array, 2) object-oriented, 3) imperative, 4) functional,

5) procedural, 6) reflective

●

Source of libraries: central, mirrored repository – CRAN; users' private repositories,

third-party repositories (Github, Rforge), other sources

●

License of the core: GNU General Public License ver. 2

●

License of libraries: 99.9% open-source, rest – licensed (free for non-commercial use)

http://www.r-project.org

1

2

The R family

S

S

Spotfire S+

Spotfire S+

formerly S-PLUS

GNU R

GNU R

REVO

REVO

lution

lution

(bought by Microsoft in 2015)

Commercial + Free (GPL v2)

http://www.revolutionanalytics.com

AT&T/ Bell Laboratories

Date of foundation: 1976, 1998

John Chambers, Rick Becker, Allan Wilks

http://ect.bell-labs.com/sl/S

TIBCO Software Inc.

Date of foundation: 2010

License: Commercial

http://spotfire.tibco.com

What's new in version 8.1

University of Auckland

Date of foundation: 1993

Robert Gentleman, Ross Ihaka

License: GNU GPL v2

http://www.r-project.org

In 1998, S became the first statistical system

to receive the Software System Award, the

top software award from the ACM

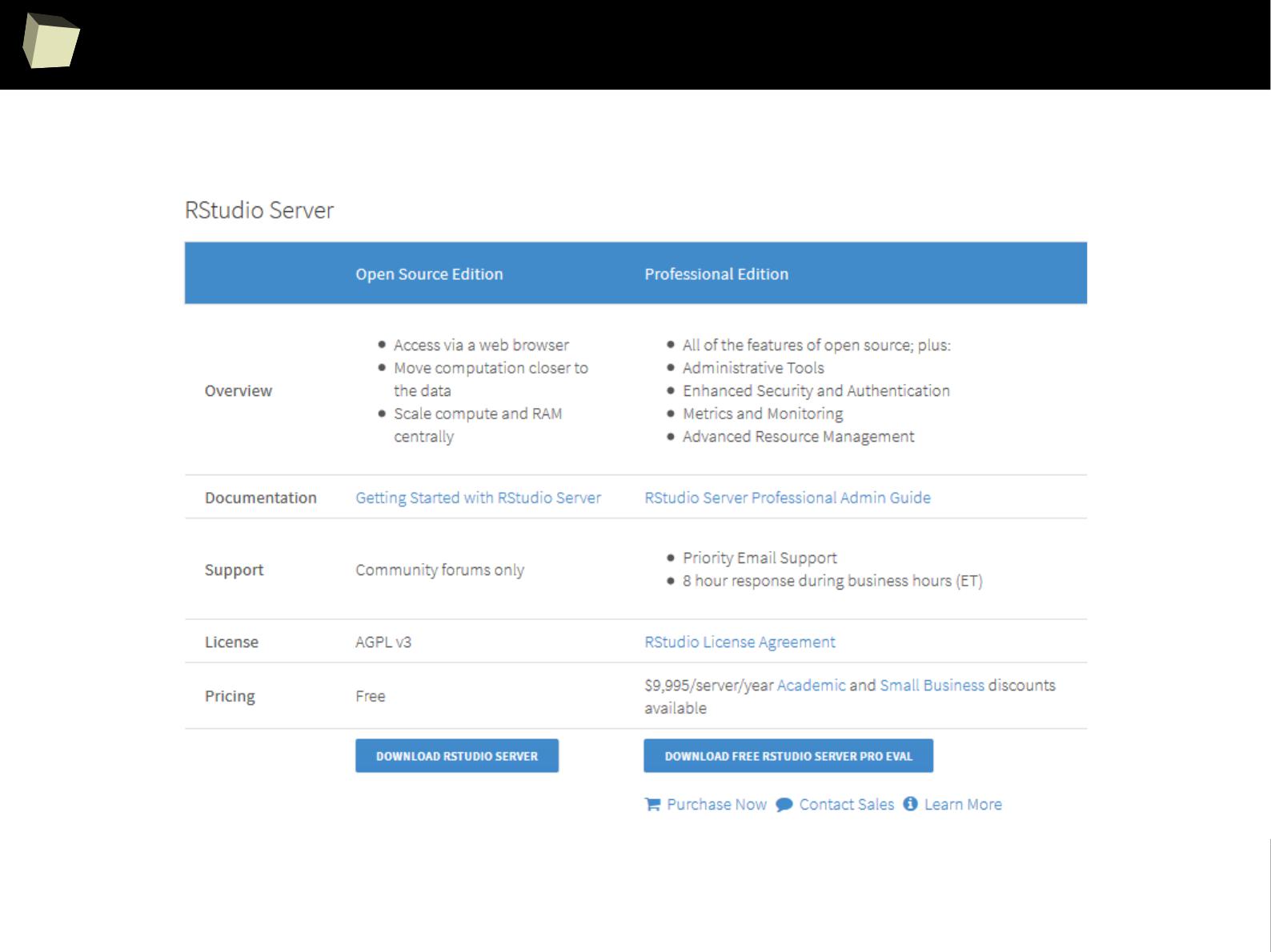

RStudio

RStudio

Commercial

+ Free (AGPL v3)

http://www.rstudio.com

Oracle R

Oracle R

Commercial + Free

http://www.oracle.com...

1

3

did you know, that...

http://blog.revolutionanalytics.com/2014/04/seven-quick-facts-about-r.html

Seven quick (and cool) facts about R

April 2014

(1) R is the highest paid IT skill

1

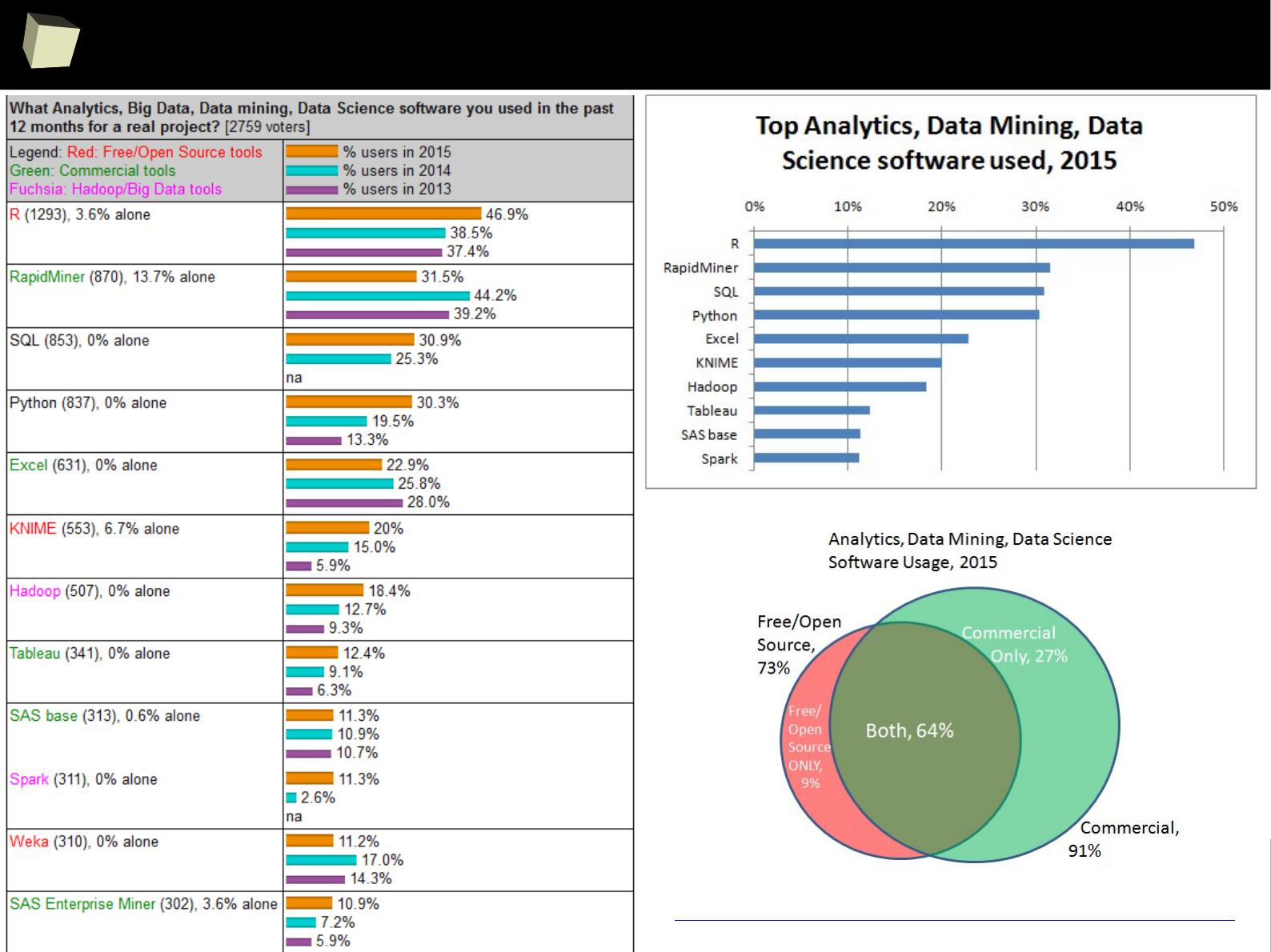

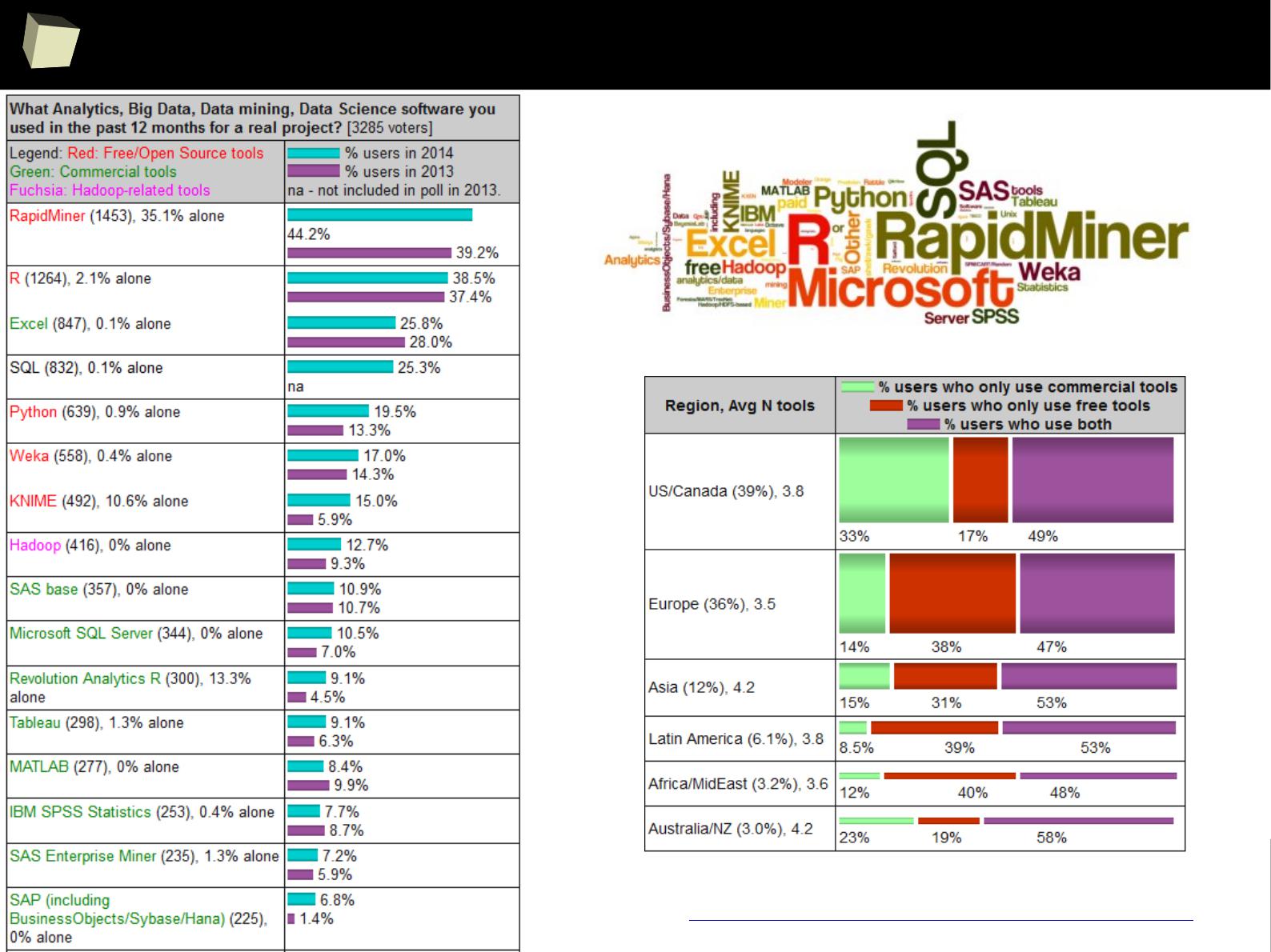

(2) R is most-used data science language after SQL

2

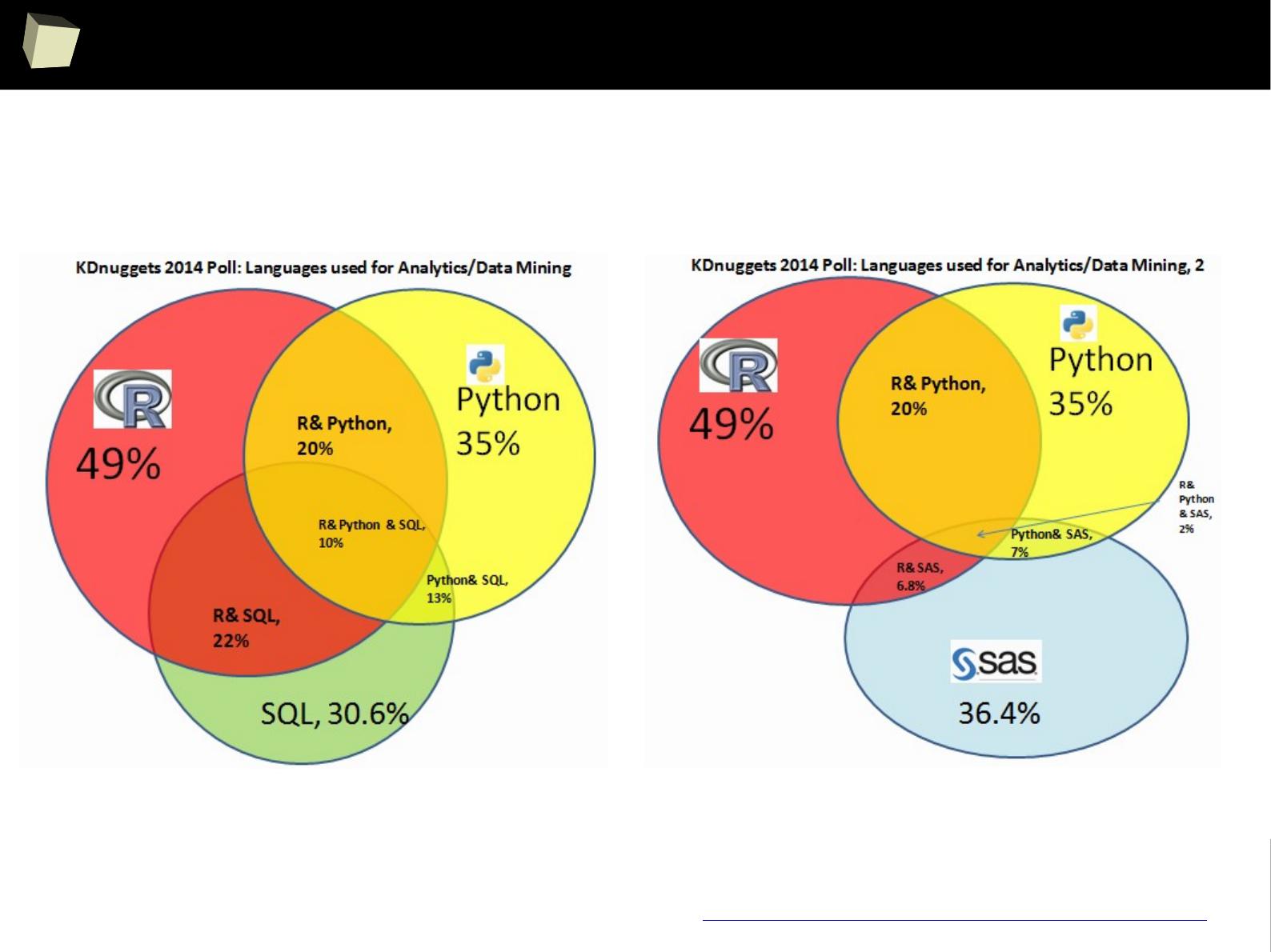

(3) R is used by 70% of data miners

3

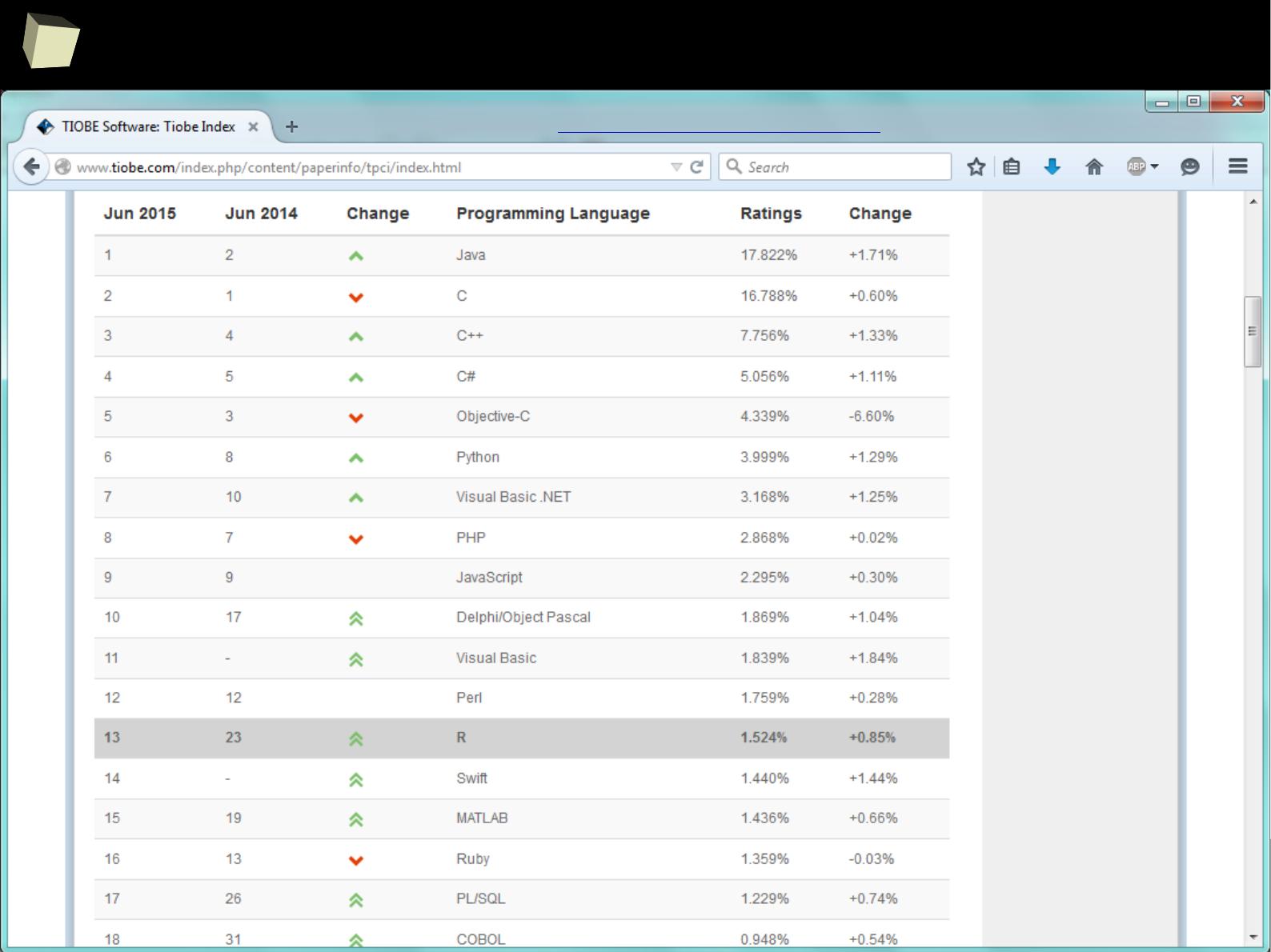

(4) R is #15 of all programming languages

4

(5) R is growing faster than any other data science language

5

(6) R is the #1 Google Search for Advanced Analytic software

6

(7) R has more than 2 million users worldwide

7

1. Dice.com survey, January 2014

2. O'Reilly survey, January 2014

3. Rexer survey, October 2013

4. RedMonk language rankings, January 2014

5. KDNuggets survey, August 2013

6. Google Trends, March 2014

7. Oracle estimate, February 2012

1

4

List of R users

1

6

A word about the list of UseRs

The list is built based exclusively on publicly available information:

●

lists of users provided by Revolution, RStudio and others

●

articles (example, example) and interviews (example)

●

published documents in which a name of a company is visible (example)

●

job advertisements

●

names of companies supporting / organizing events (conferences, courses)

That is to say, a logo of a company is included in the list only if there is a

strong evidence that the company uses or supports (or used or supported) R,

based on information shared on the Internet – and thus available for everyone.

Please note, that I am not aware if all listed companies are still using any

version of R at the time the presentation is being viewed.

1

7

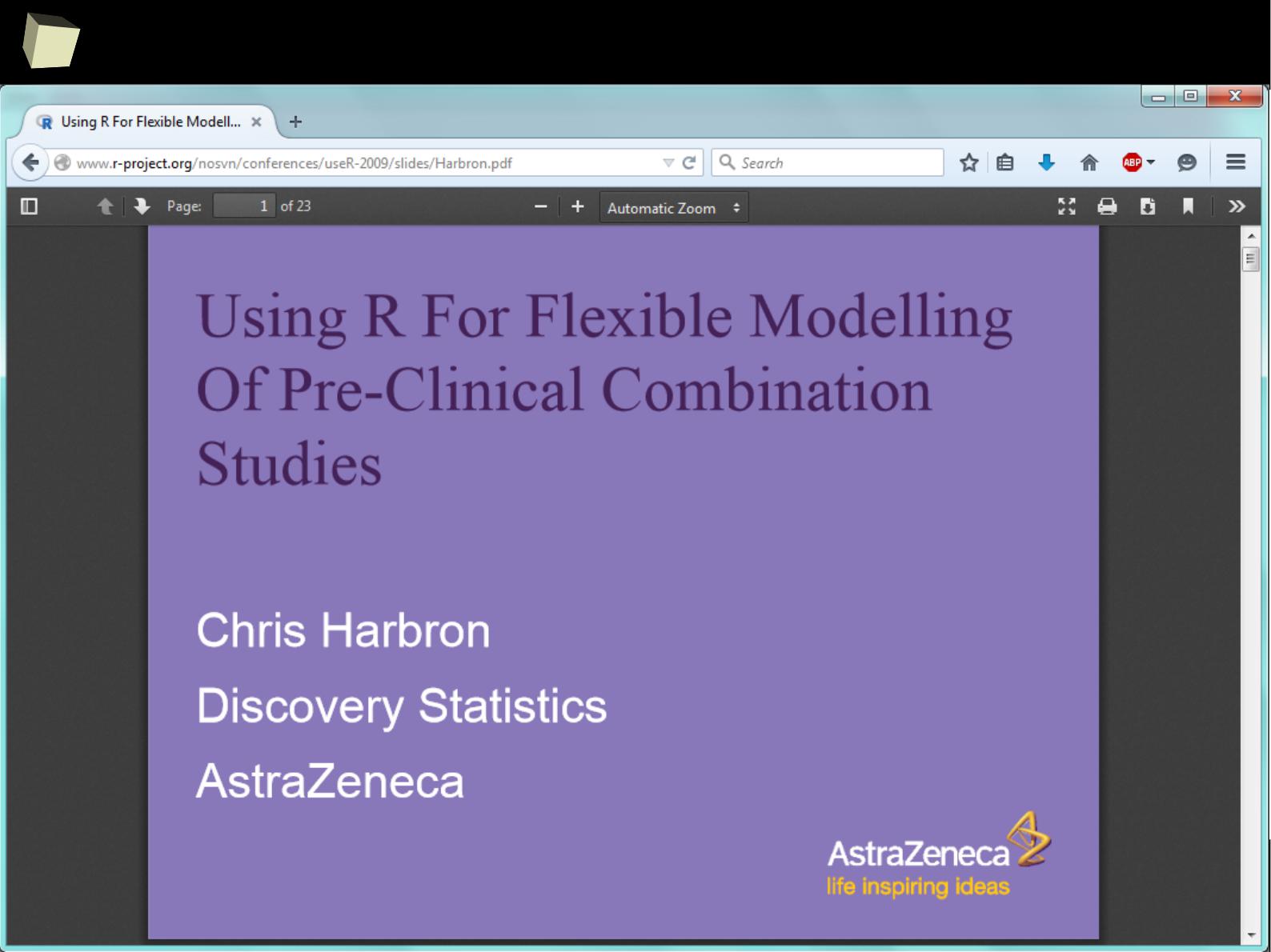

They use R

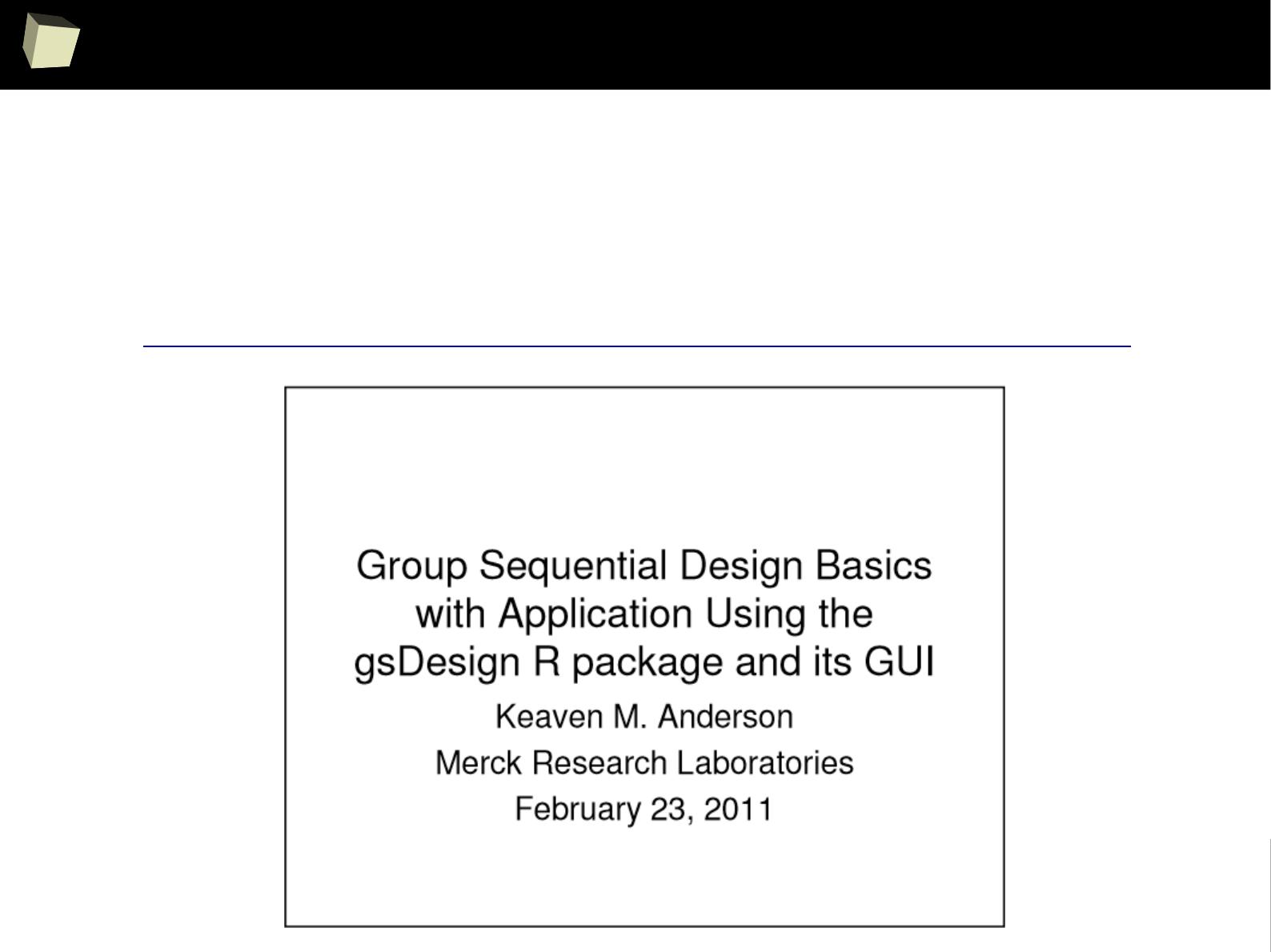

“We use R for adaptive designs frequently because it’s the fastest tool to

explore designs that interest us. Off-the-shelf software, gives you off-the-

shelf options. Those are a good first order approximation, but if you really

want to nail down a design, R is going to be the fastest way to do that.”

Keaven Anderson

Executive Director Late Stage Biostatistics,

Merck

http://www.revolutionanalytics.com/content/merck-optimizes-clinical-drug-development...

1

8

They use R

1

9

They use R

2

0

They use R

2

1

They use R

so...

2

2

They use R

2

3

In many discussions some praise SAS, others STATA or SPSS.

But most of them refer to R with respect.

2

4

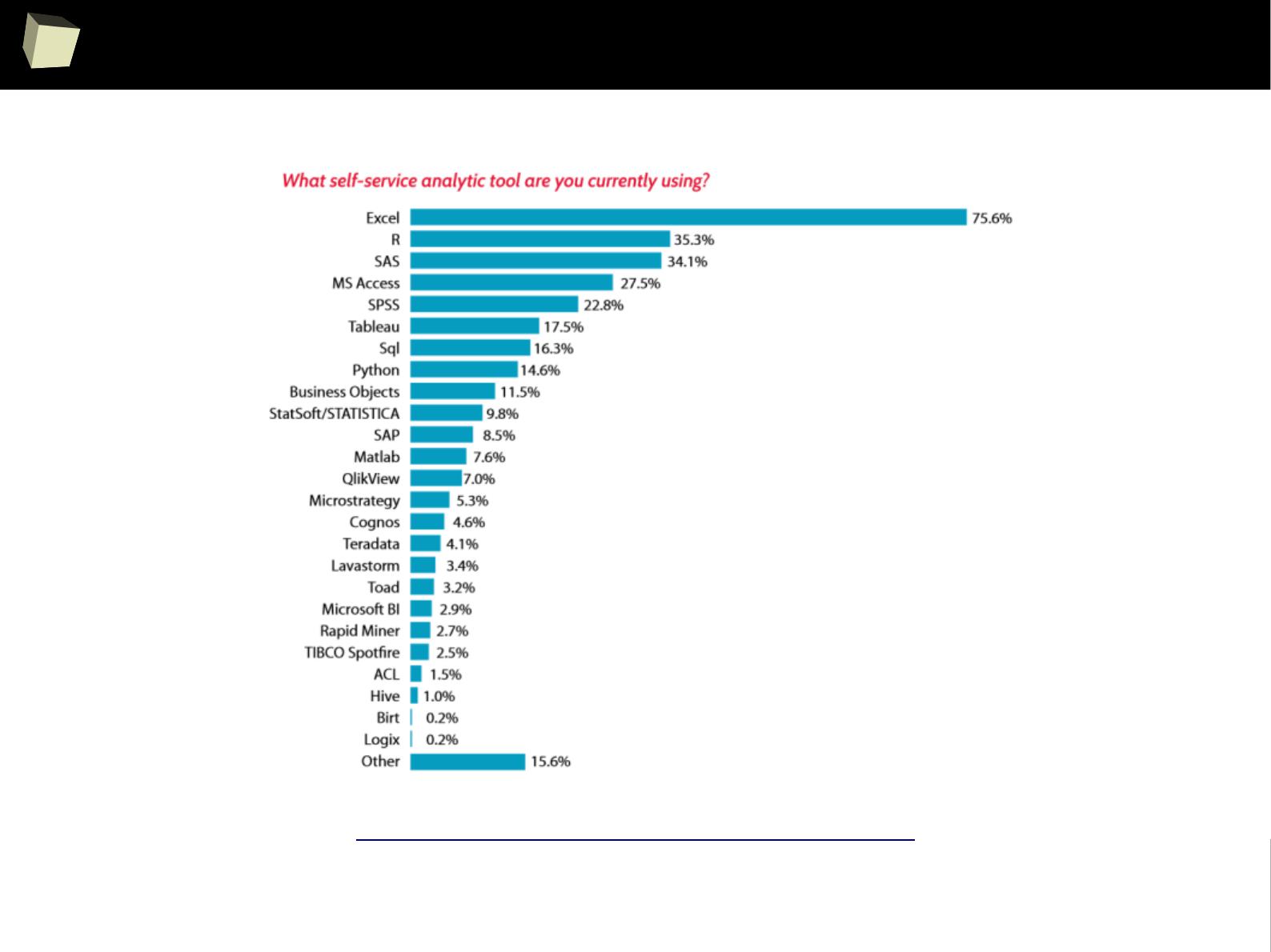

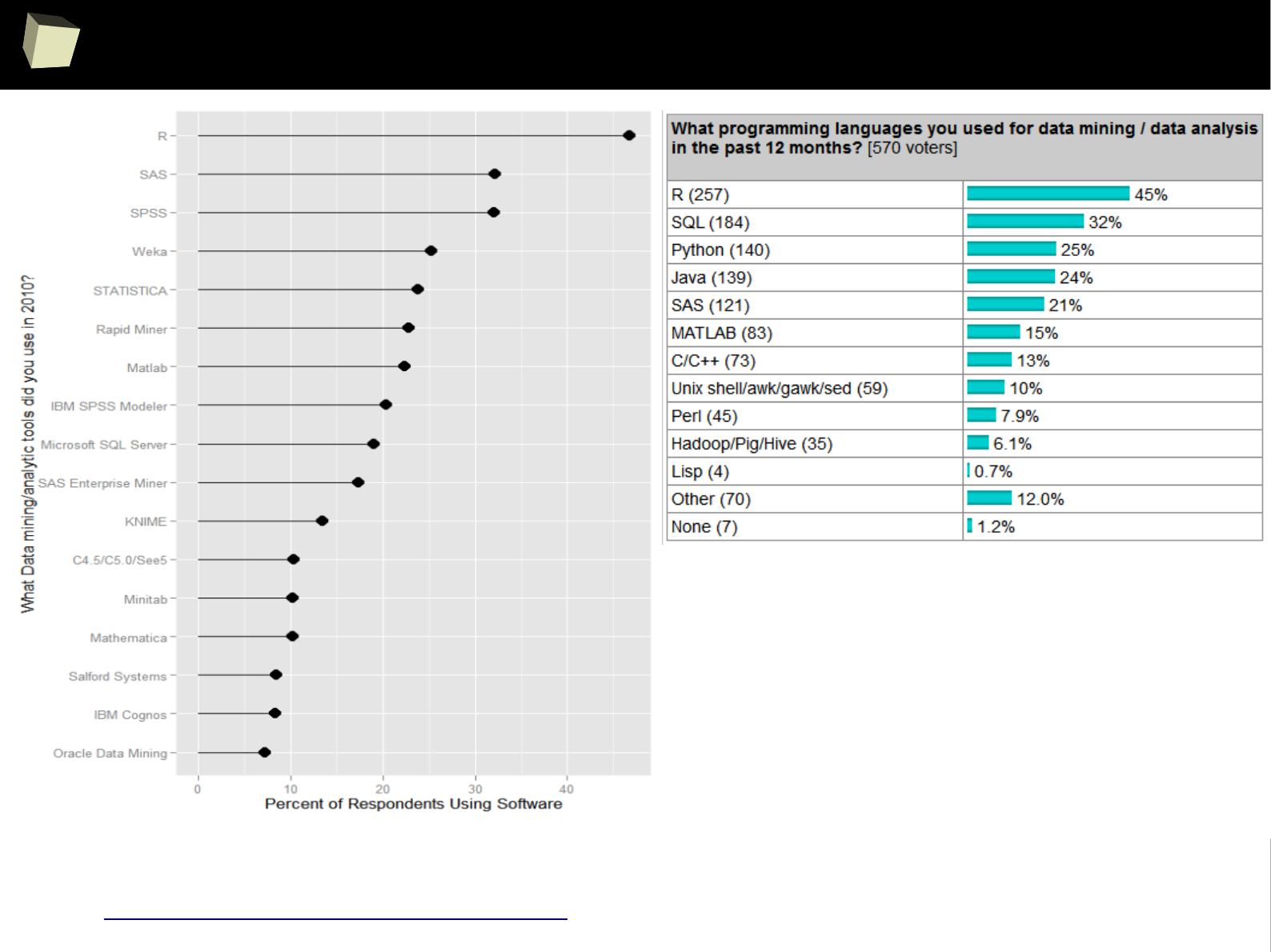

Is R a popular software?

3

2

Ancient history: R was already popular in 2004

3

3

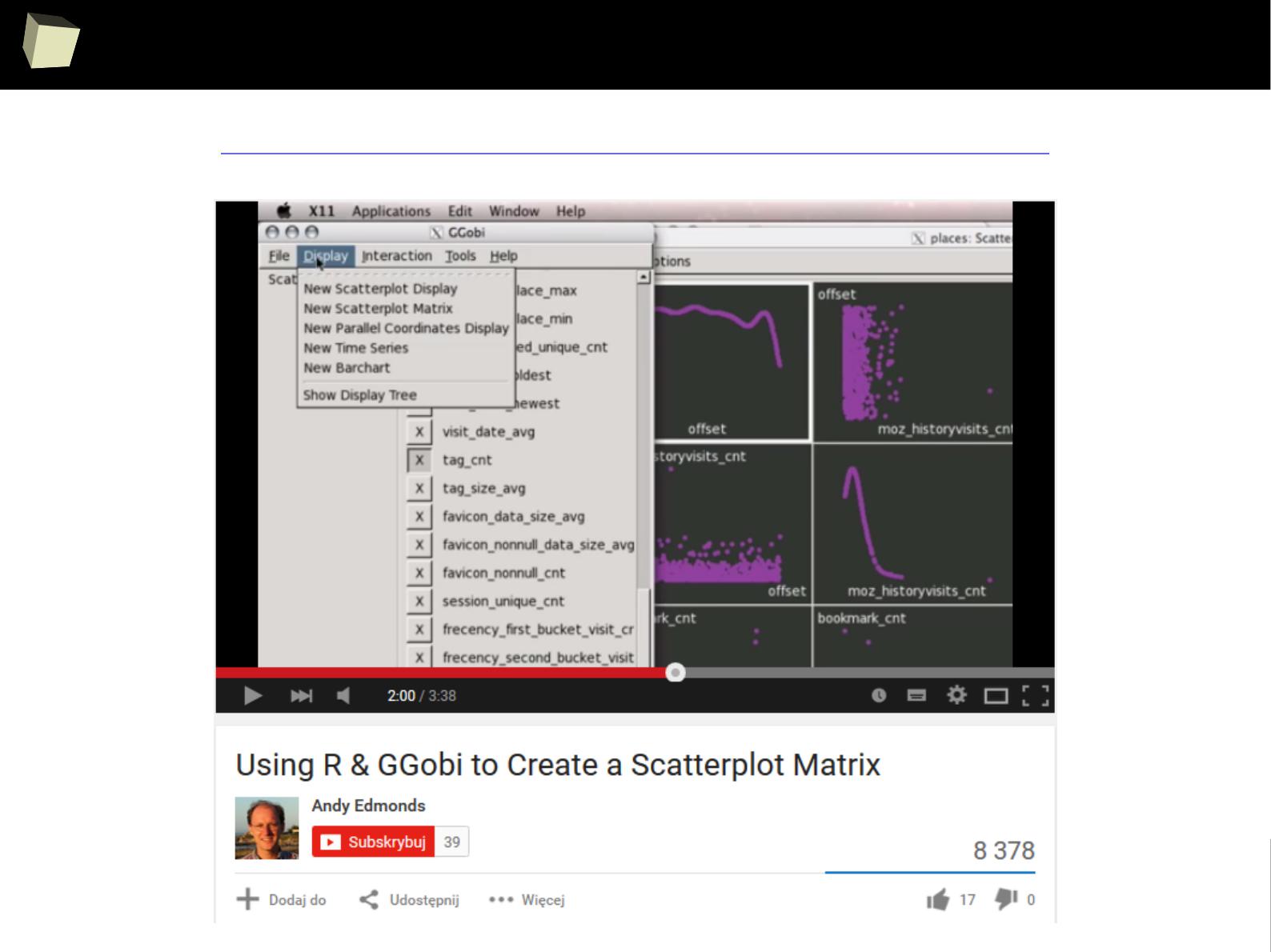

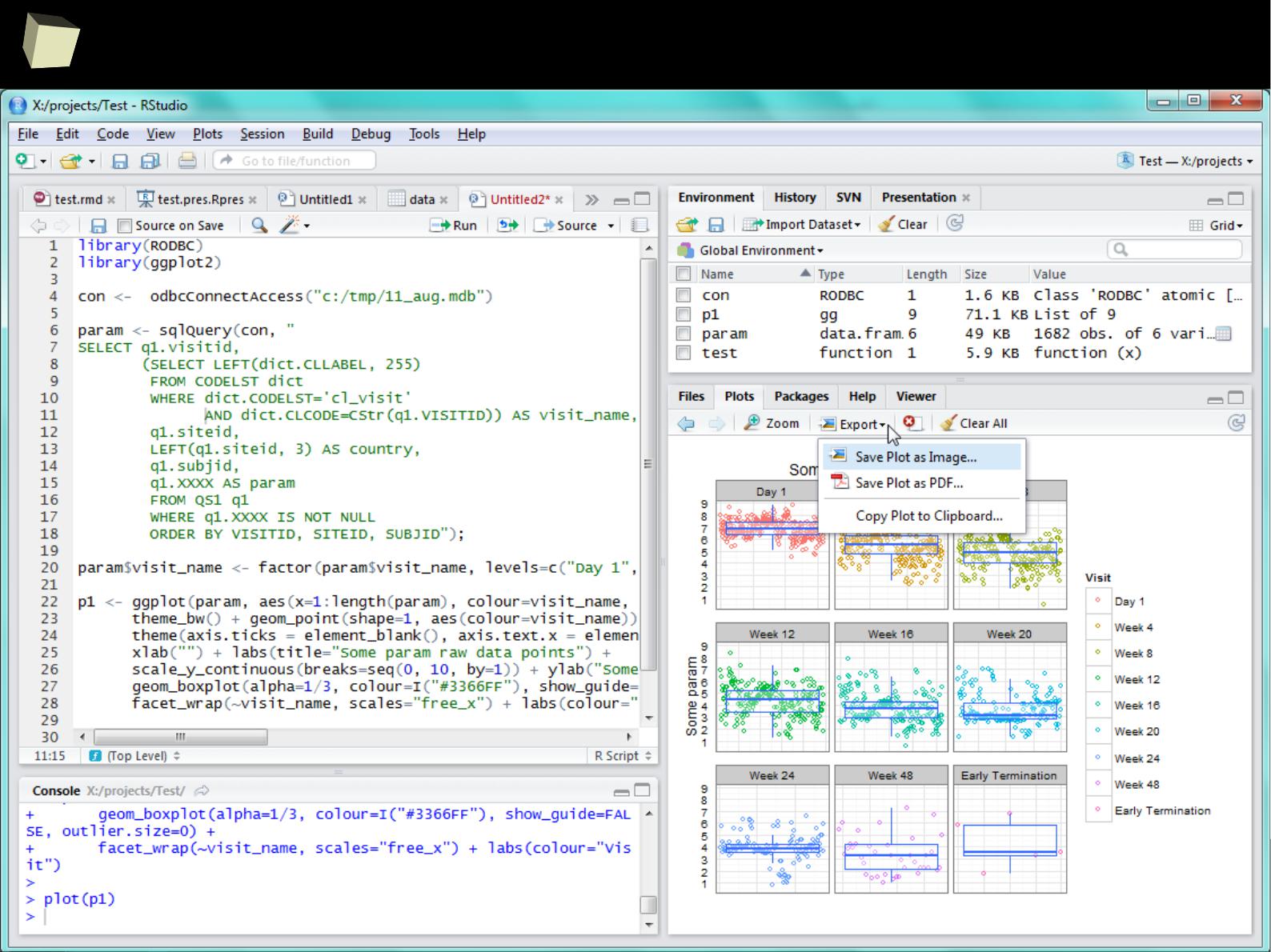

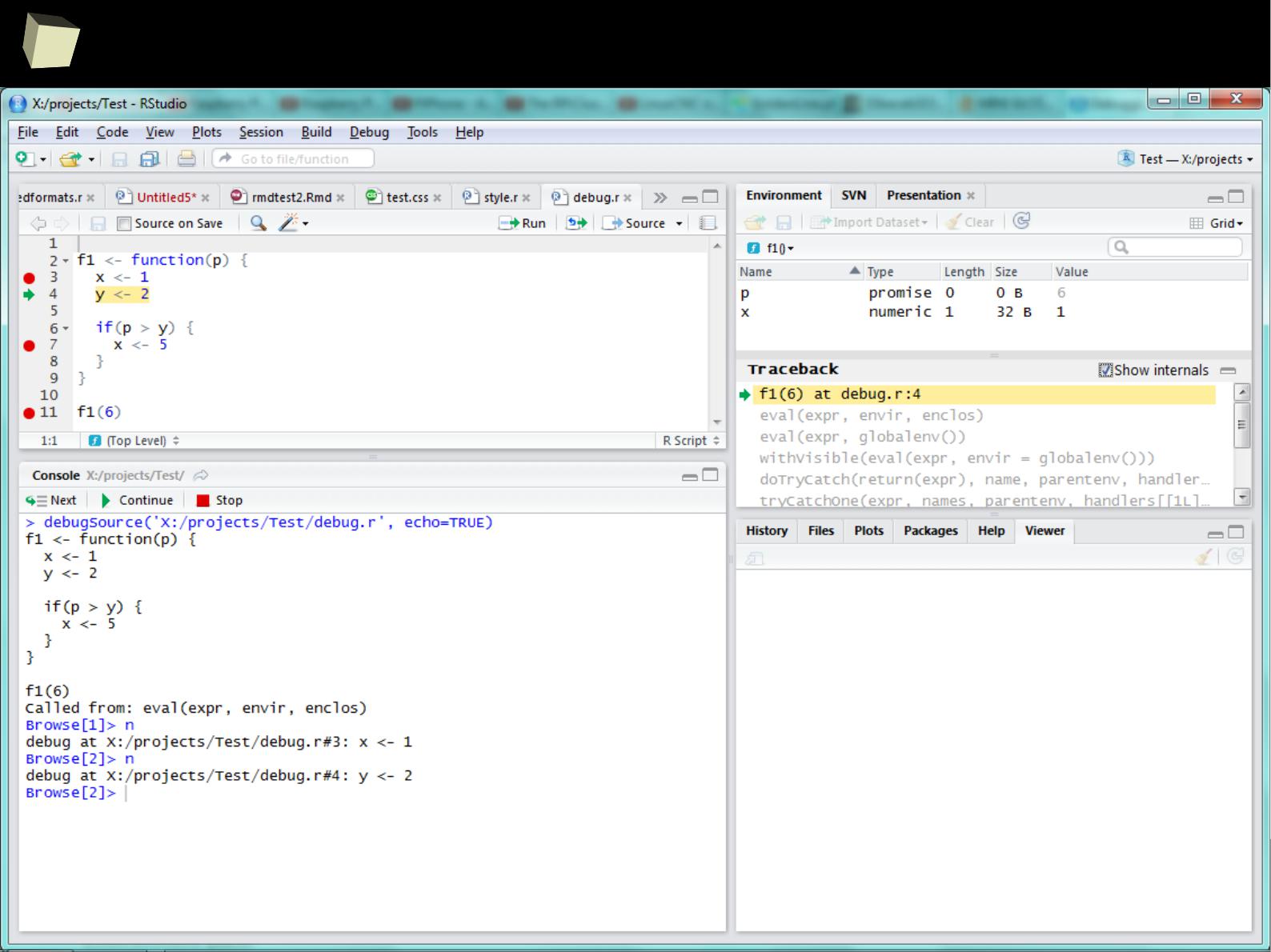

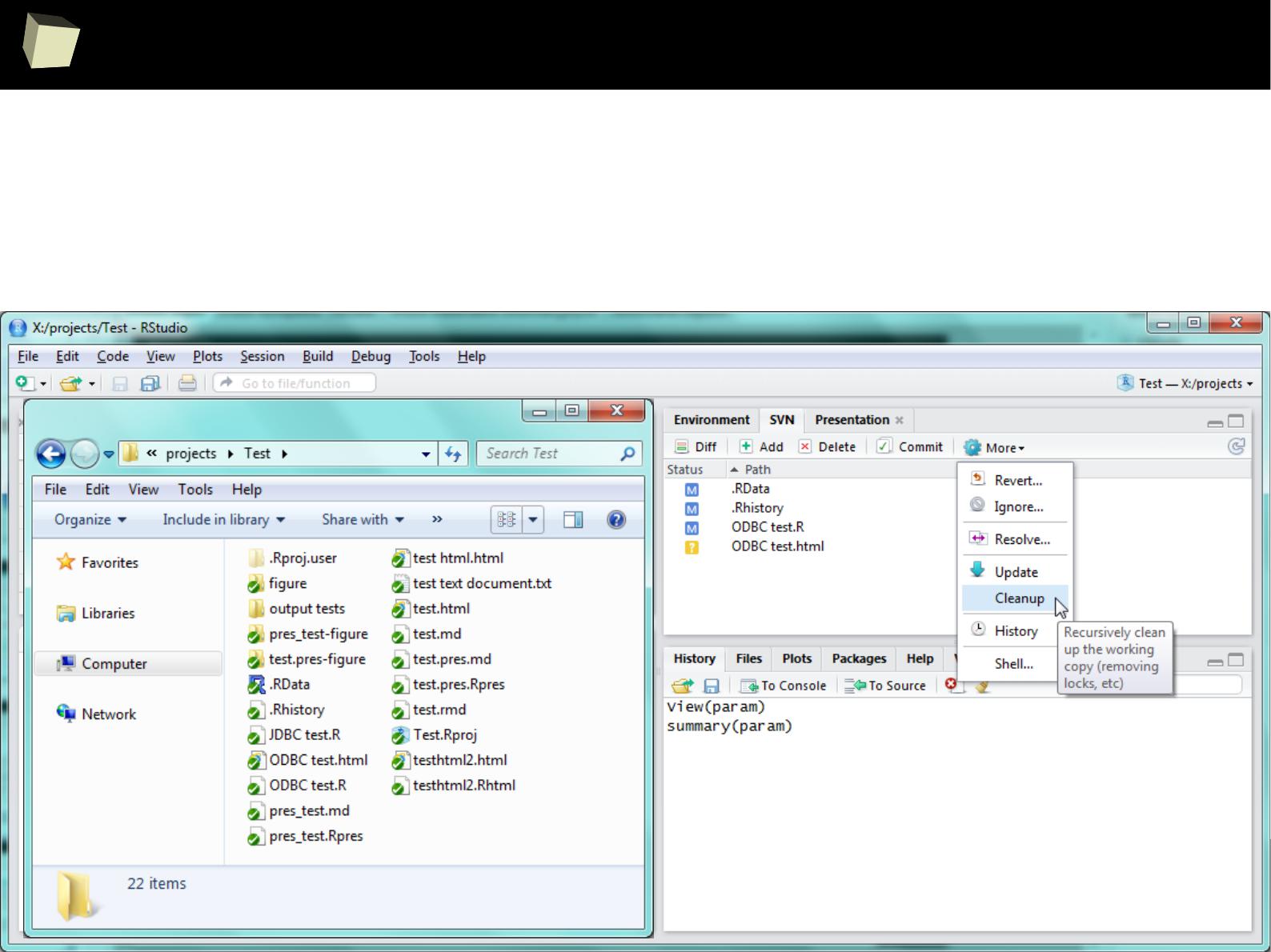

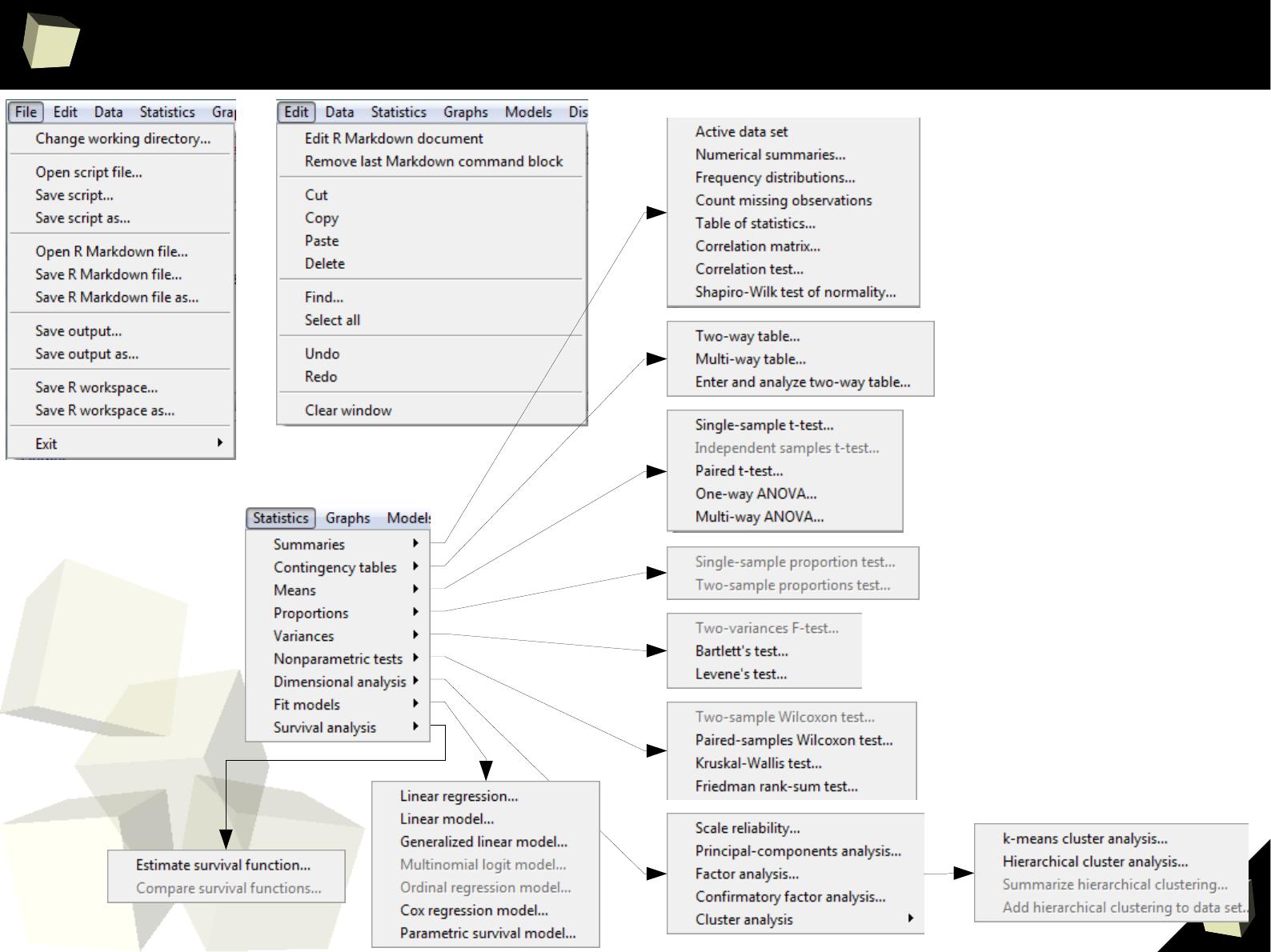

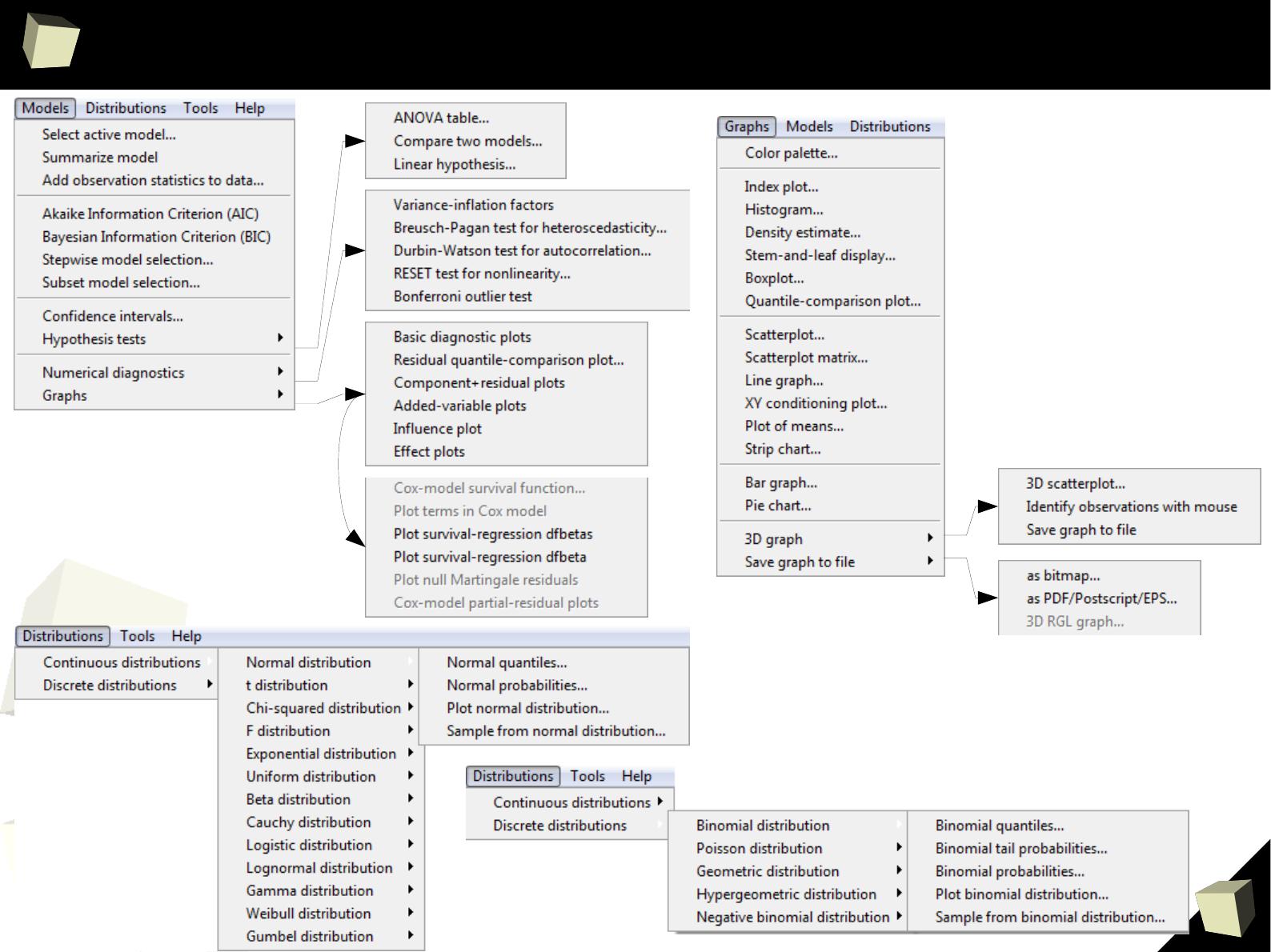

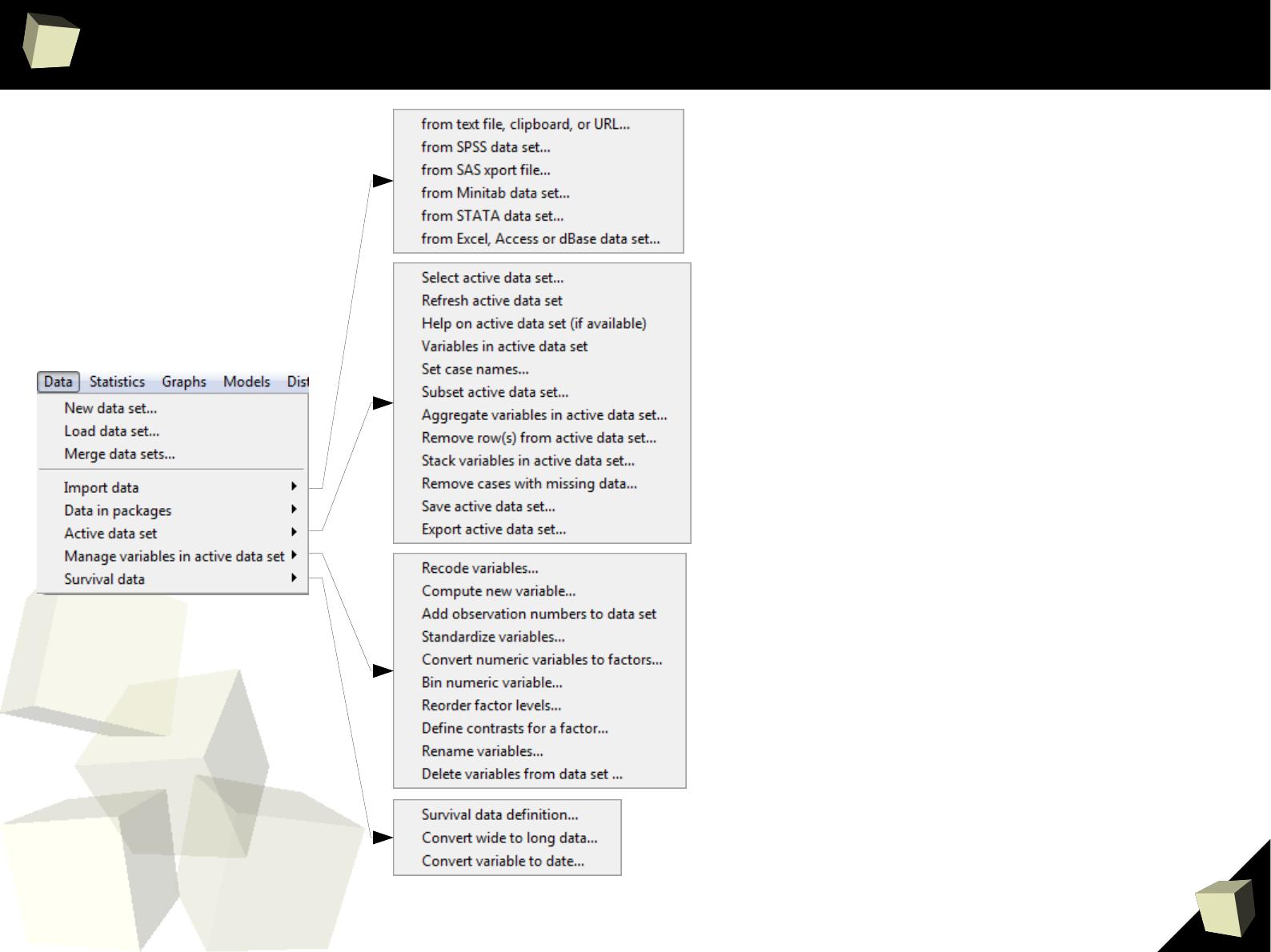

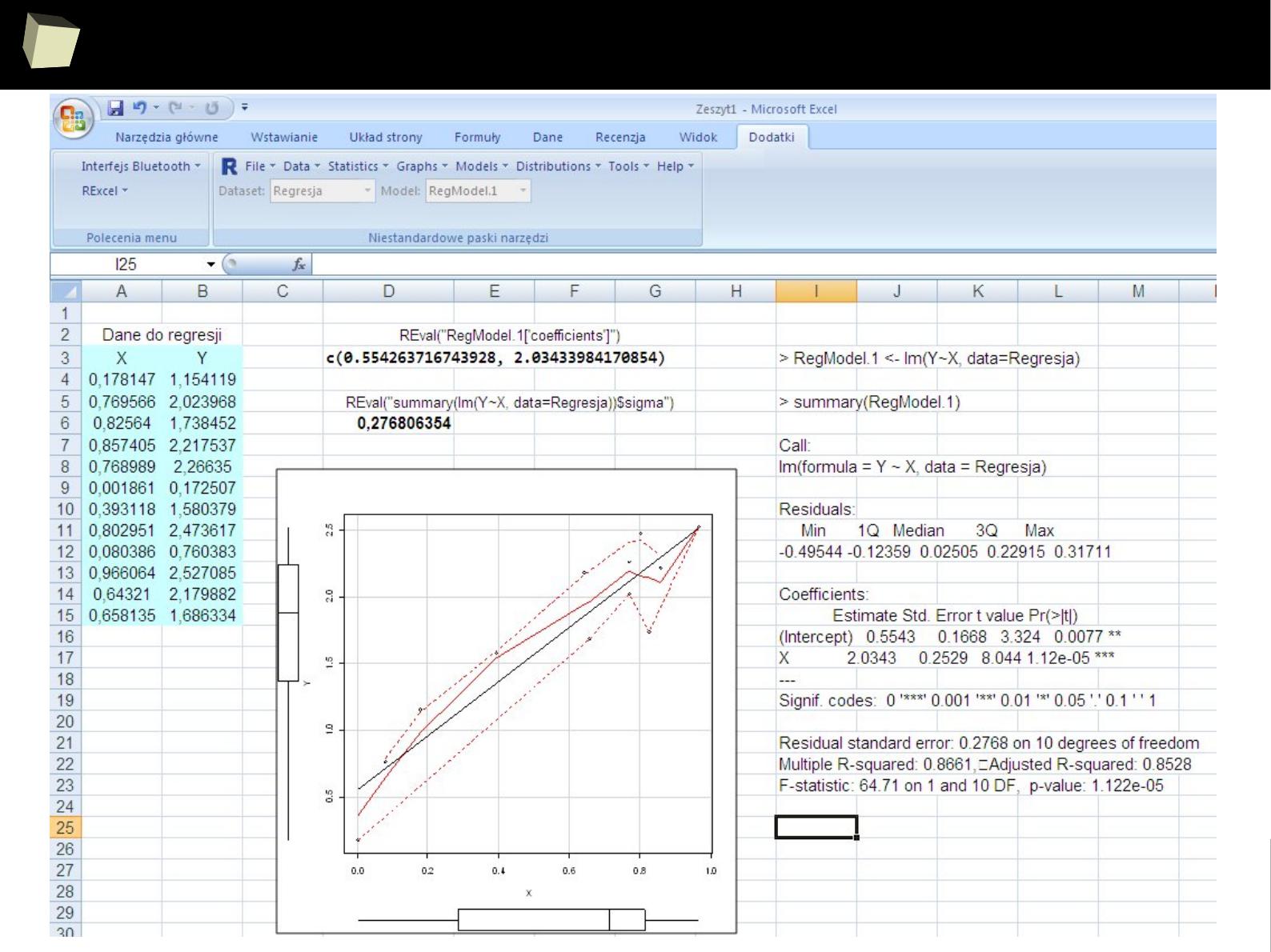

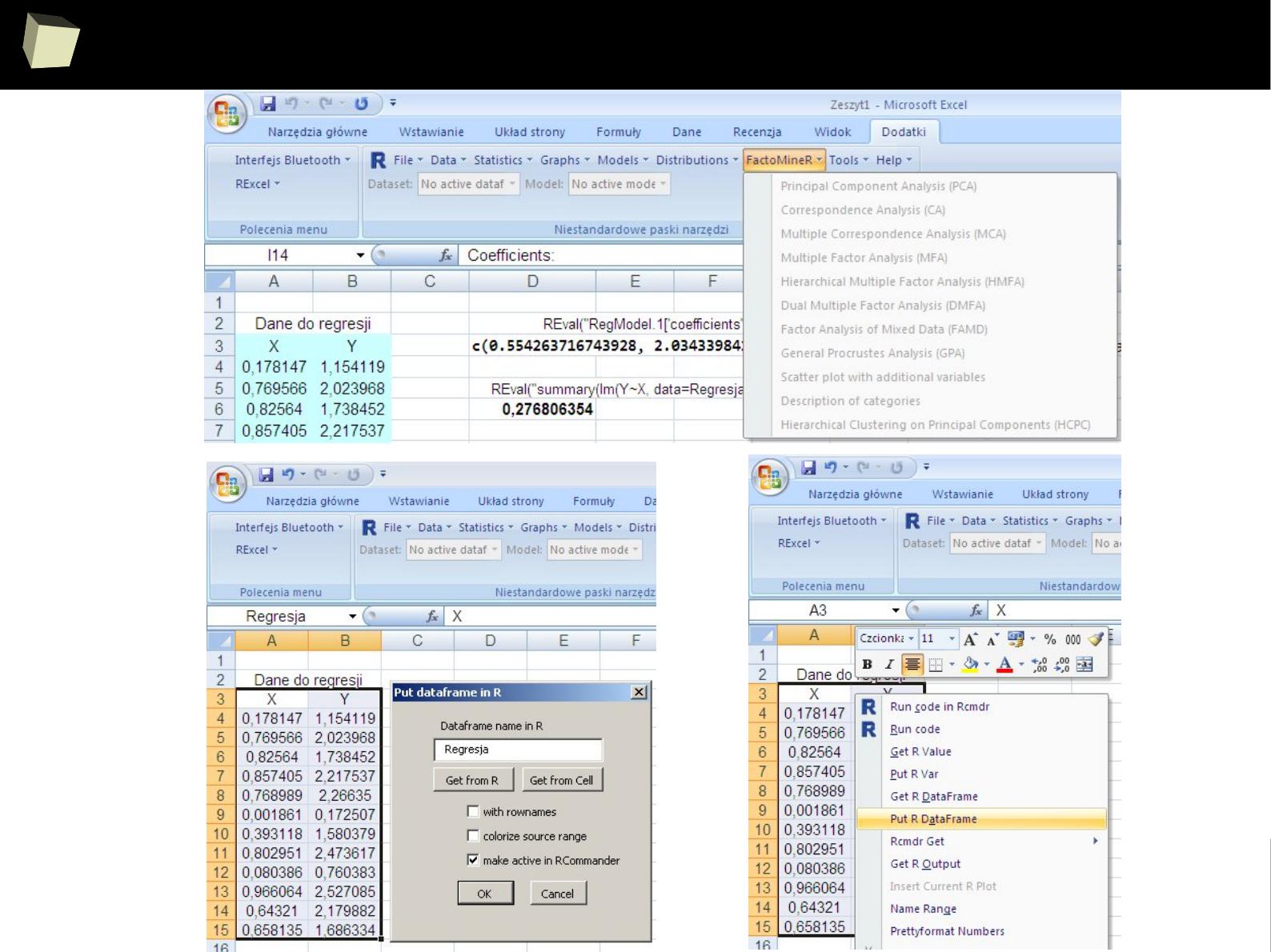

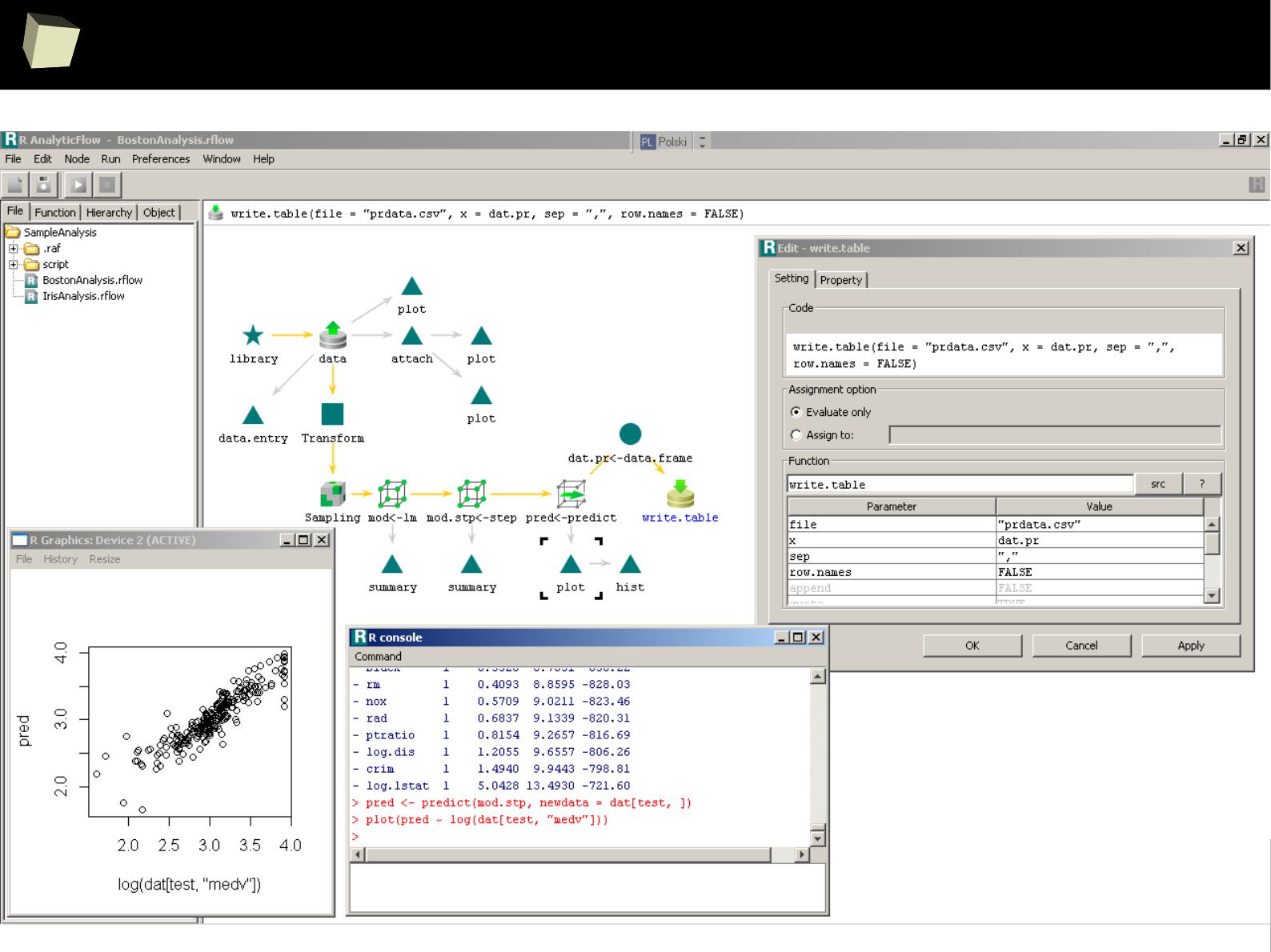

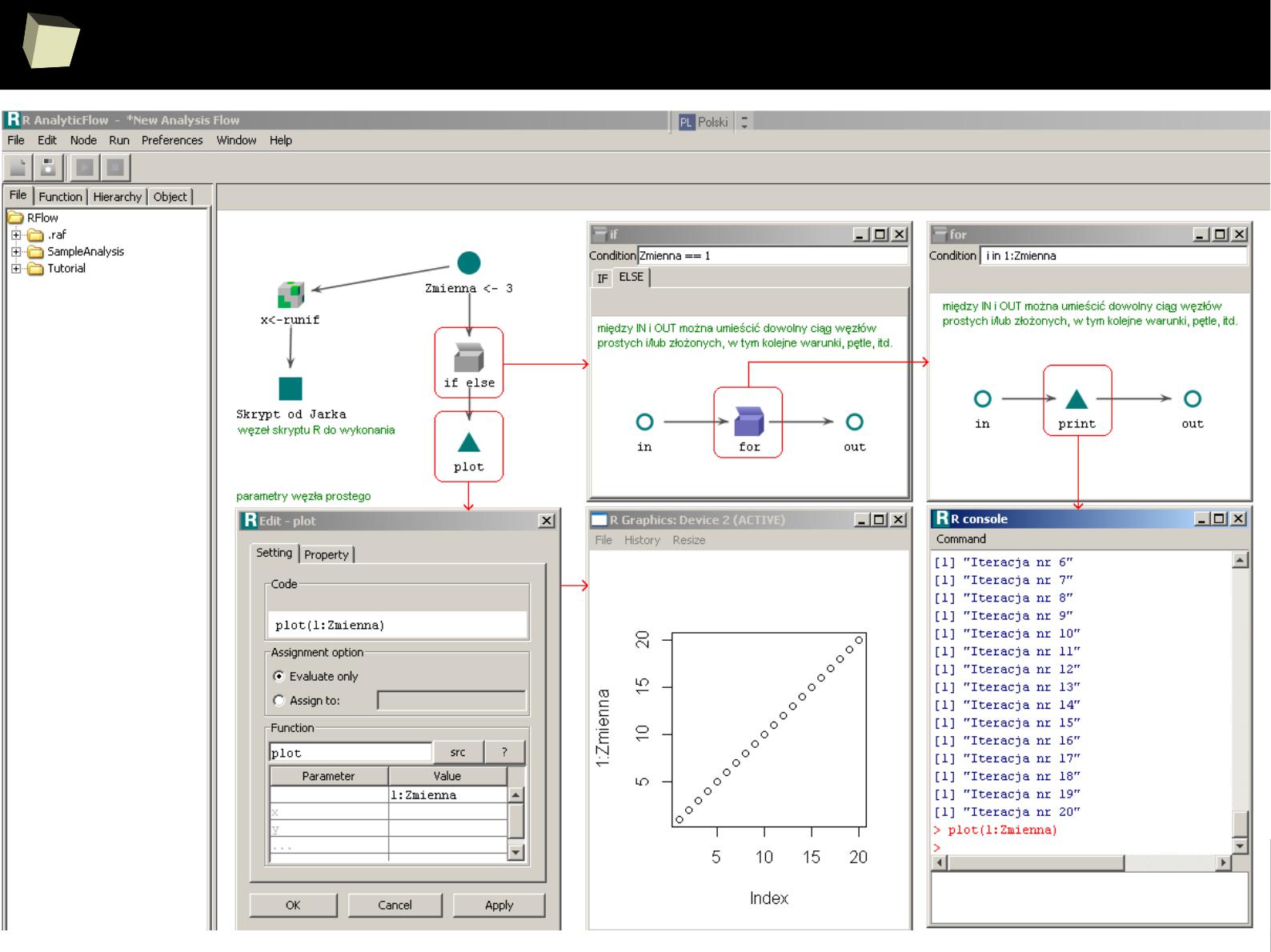

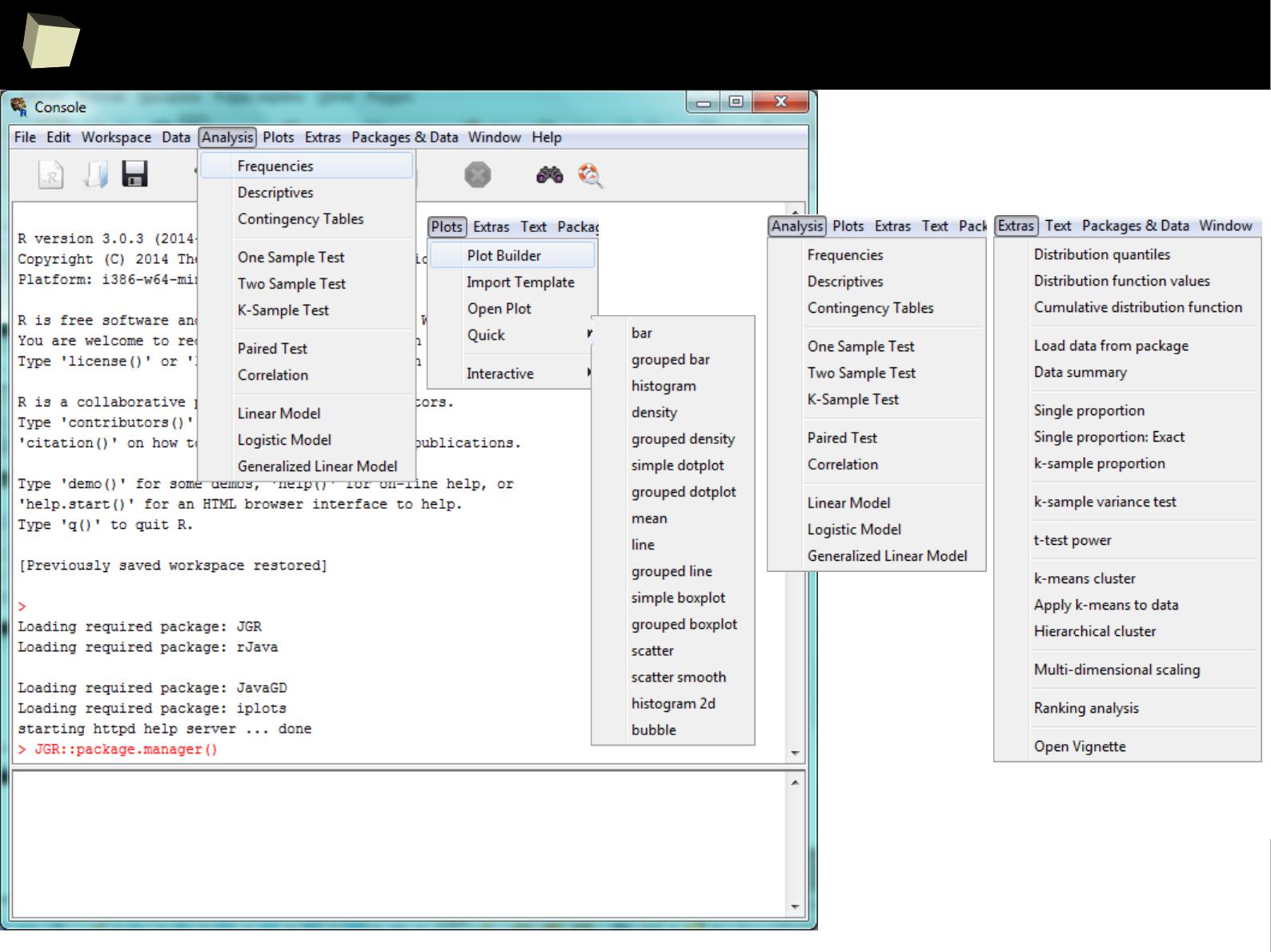

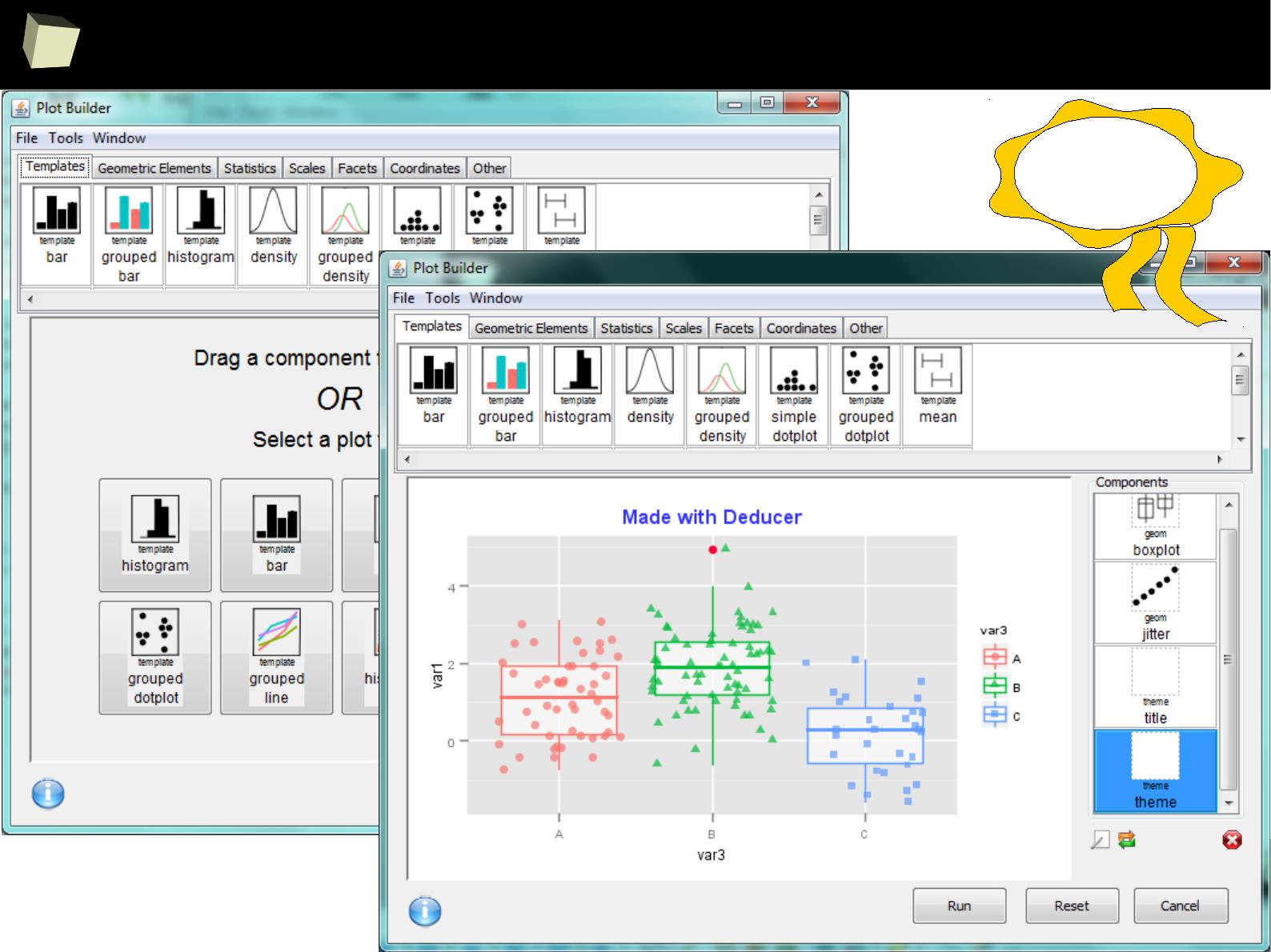

Demonstrative screenshots

3

4

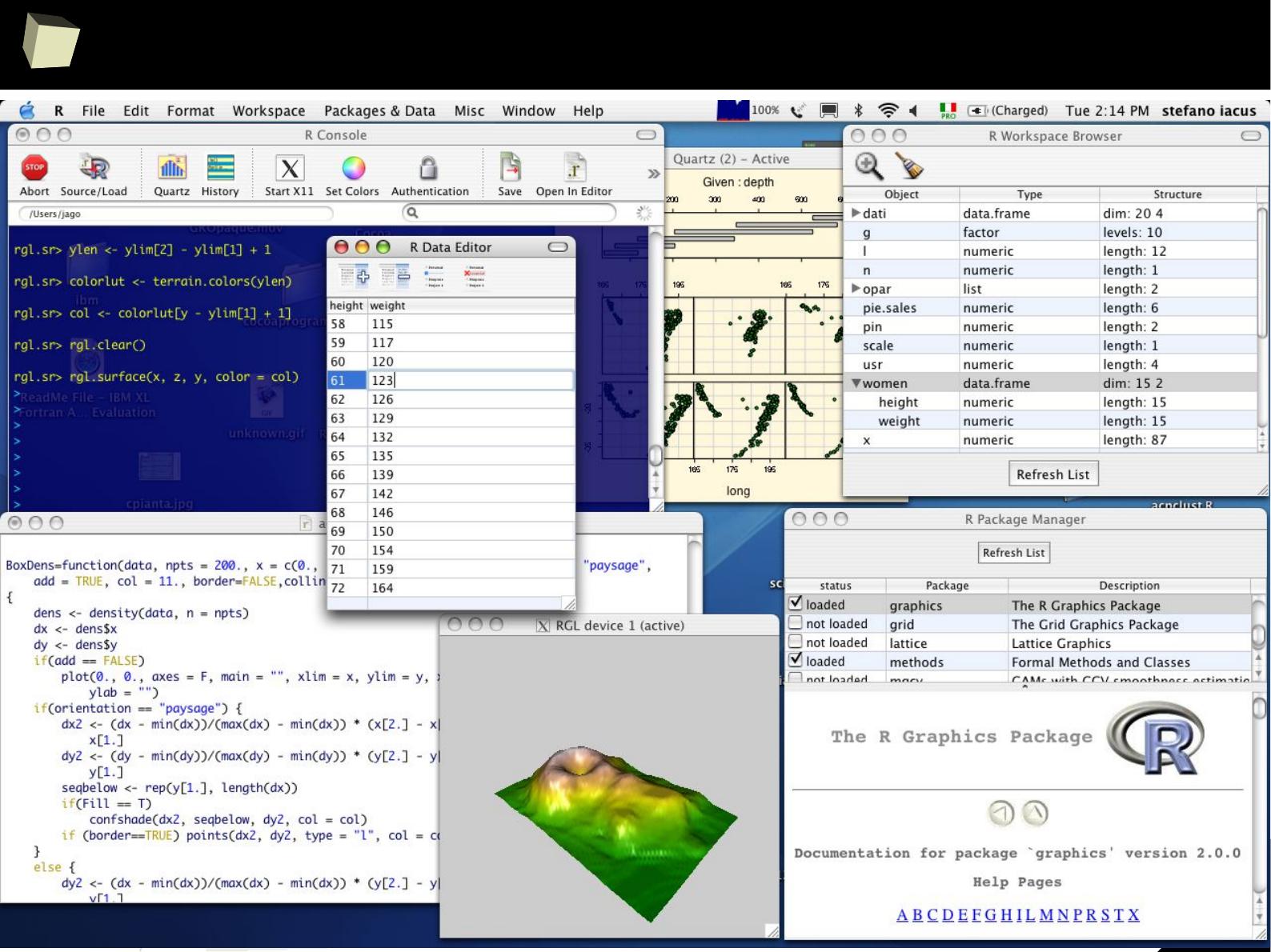

fiRst insight: the main program window

3

5

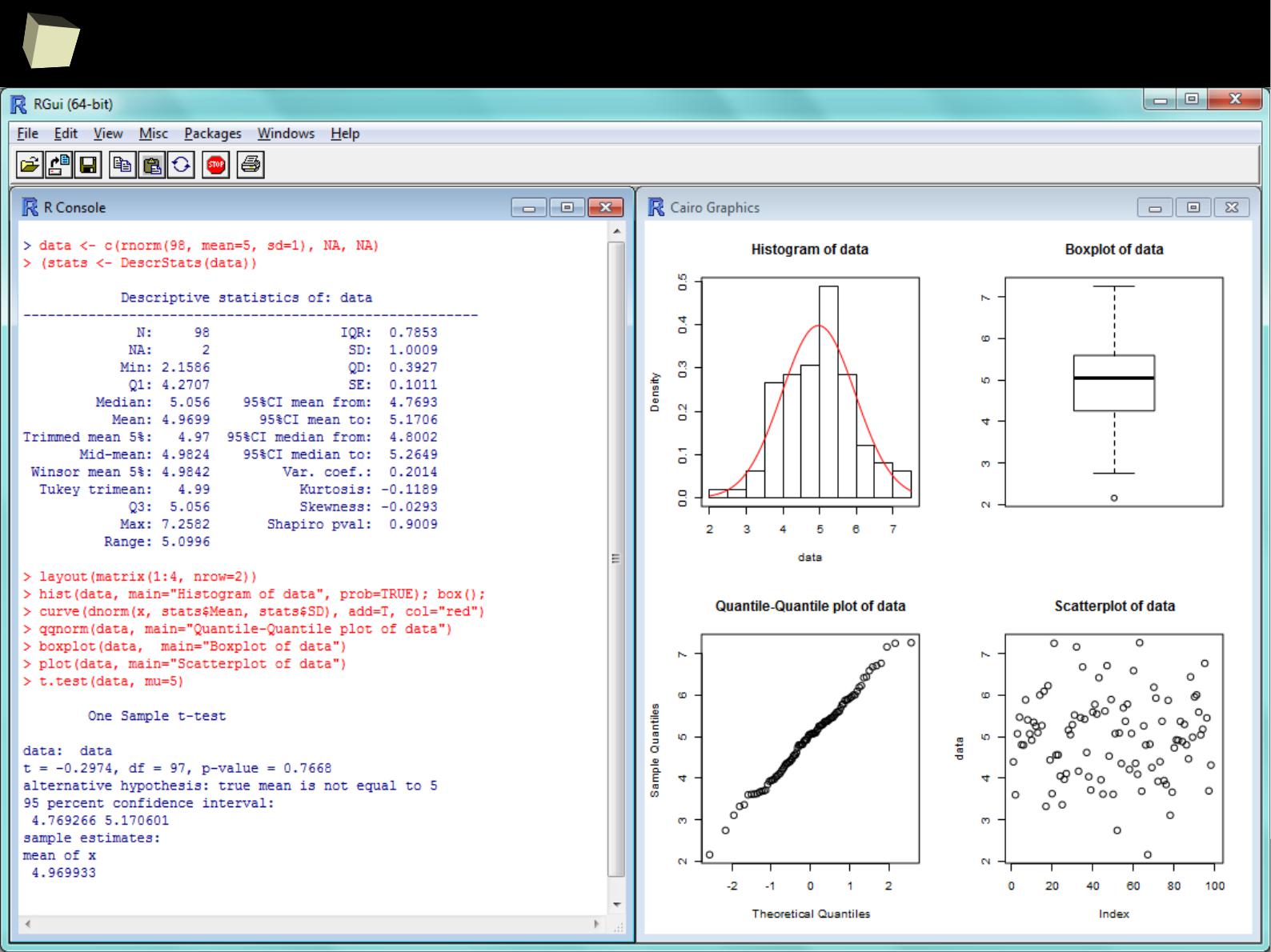

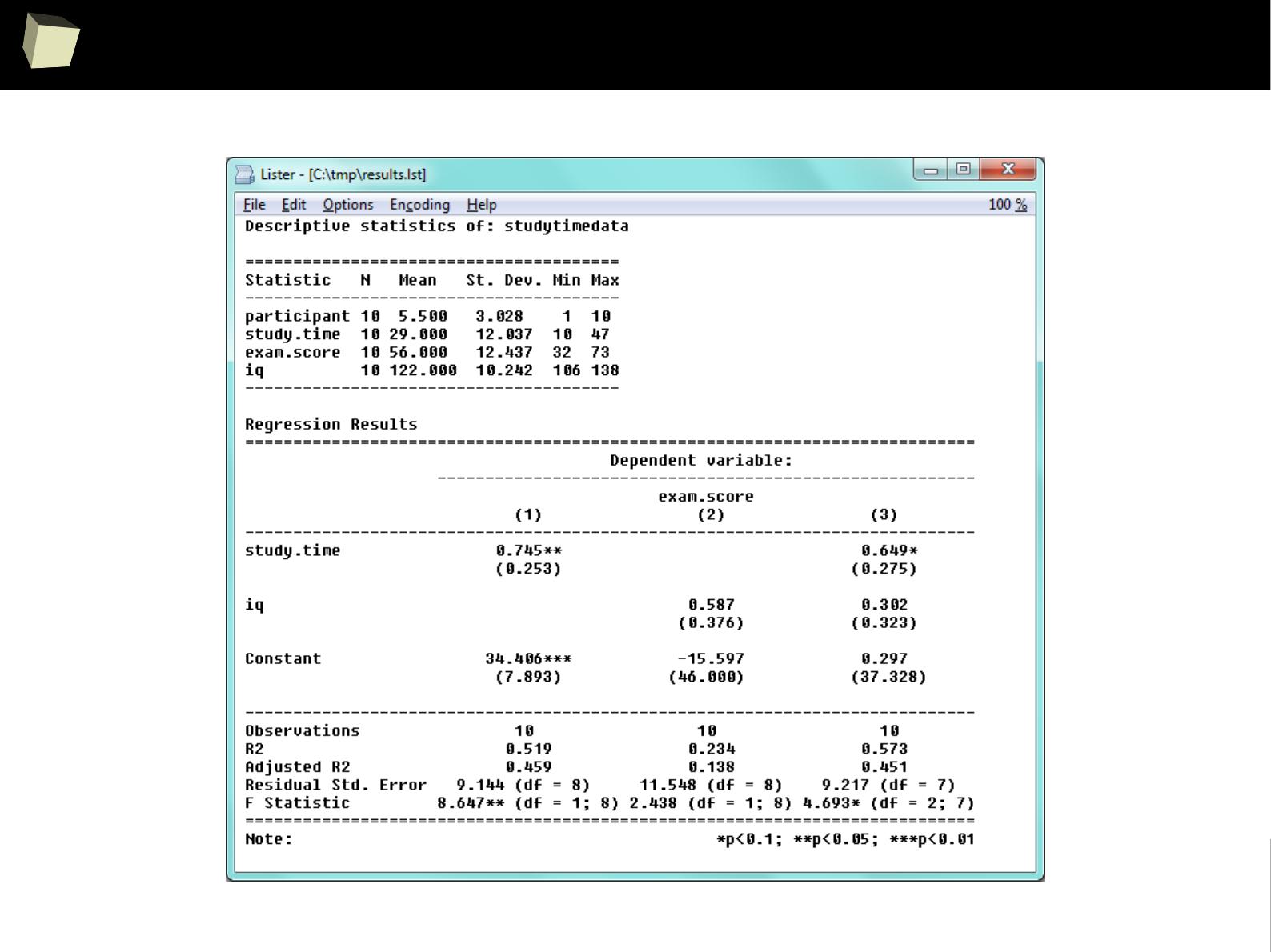

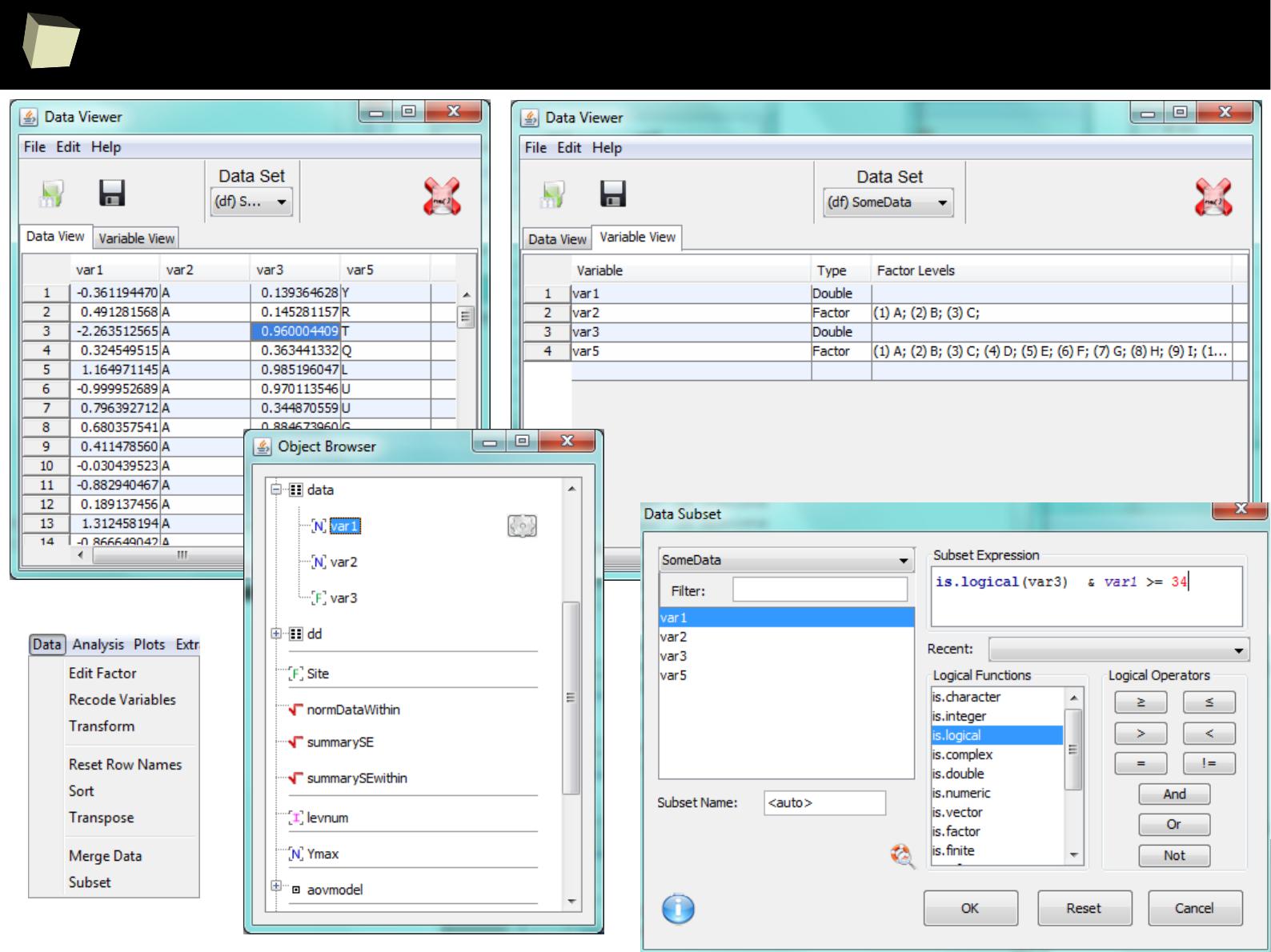

...some quick'n'dirty data inspection...

3

6

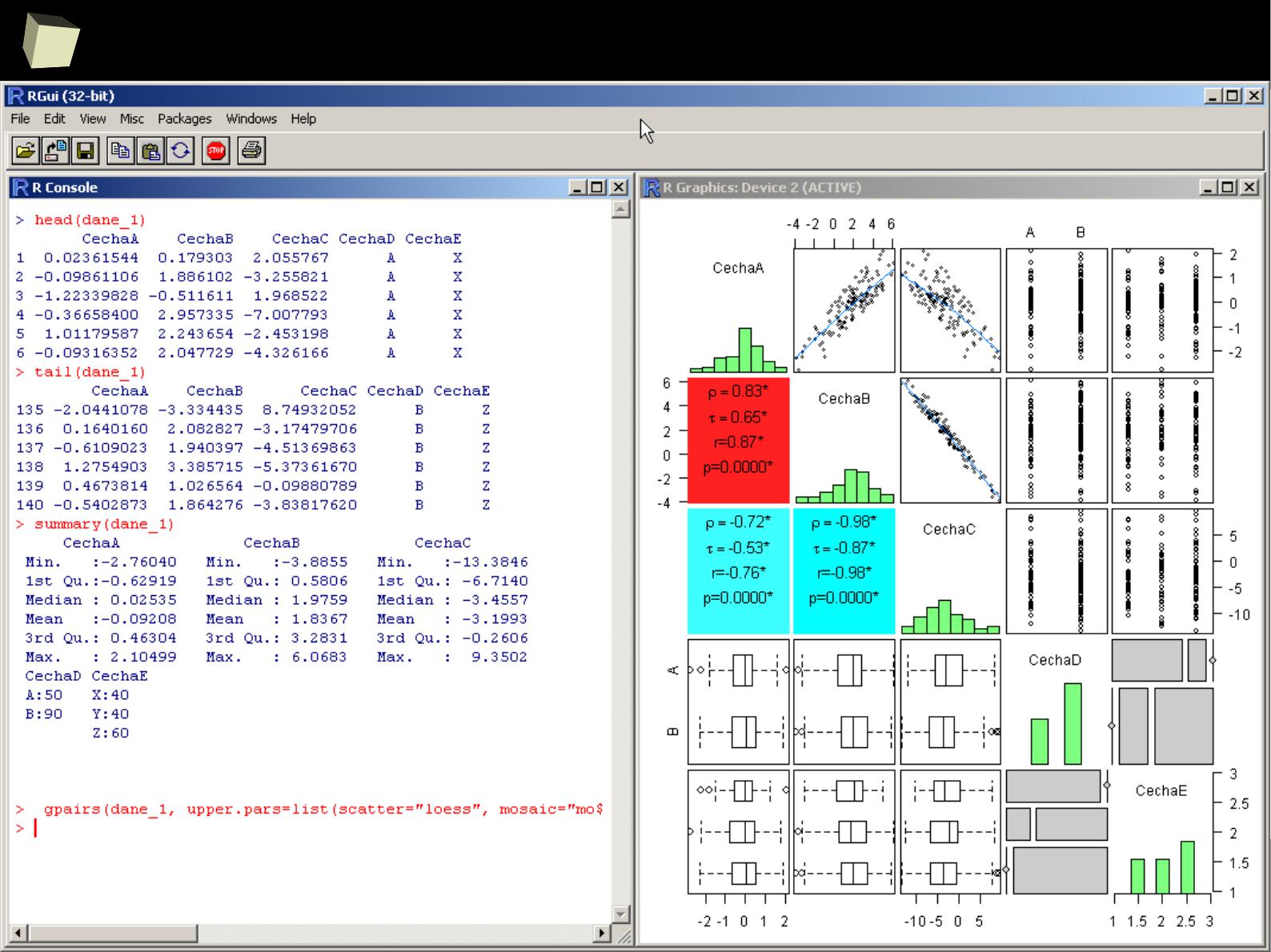

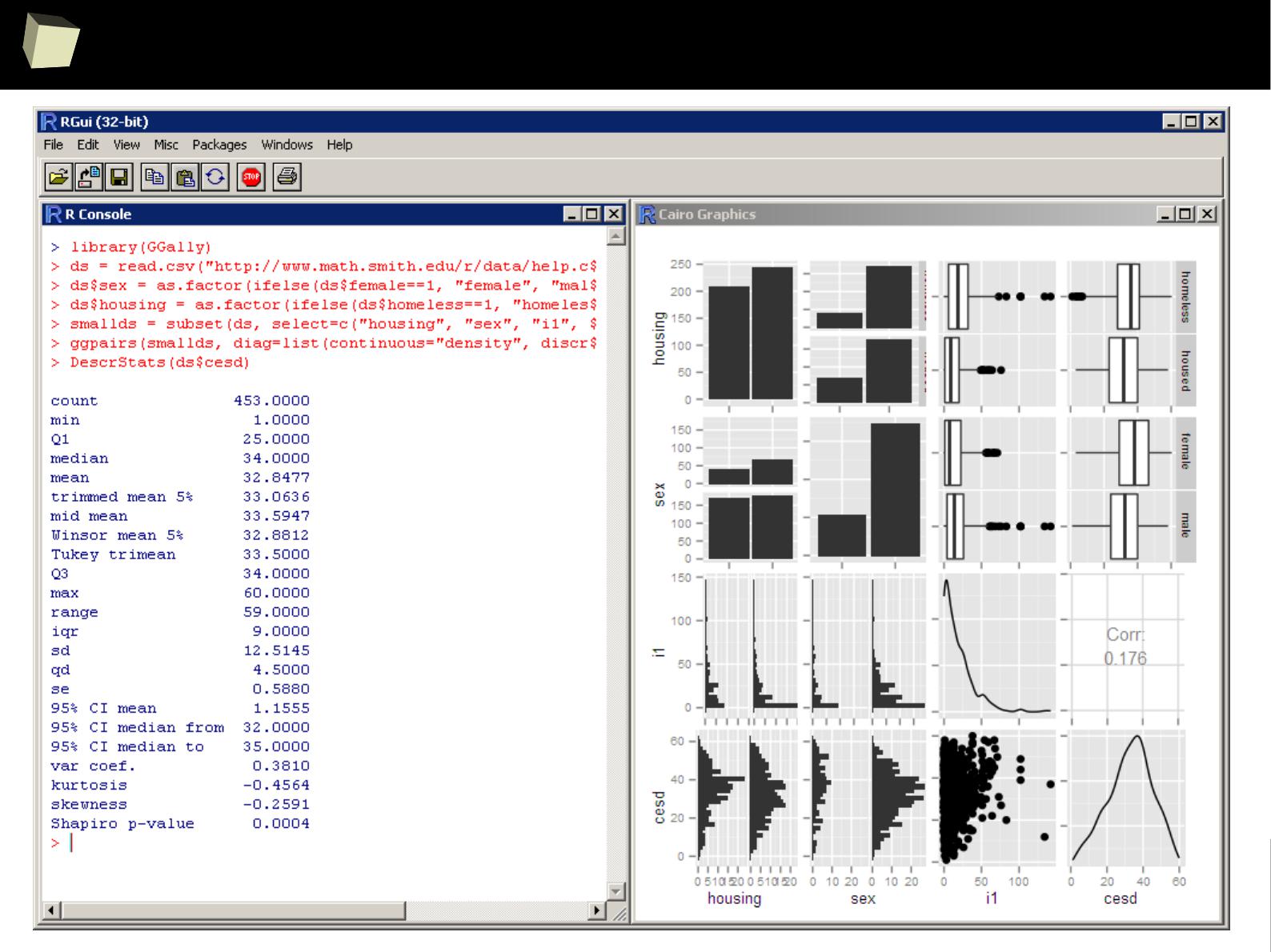

...quick'n'dirty data inspection other way...

3

7

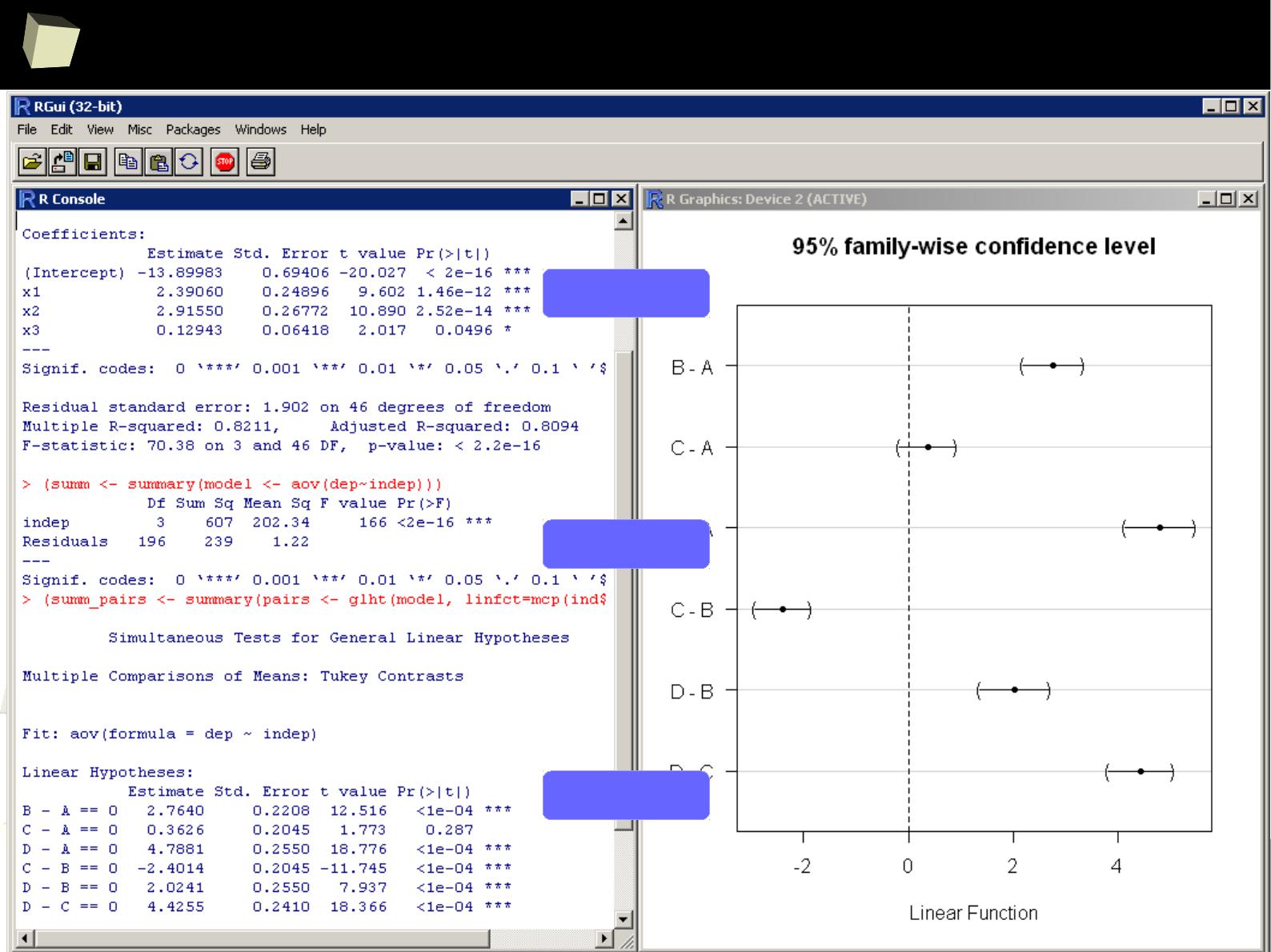

...some linear modeling...

< Linear regression

< ANOVA

< Post-hoc >

3

8

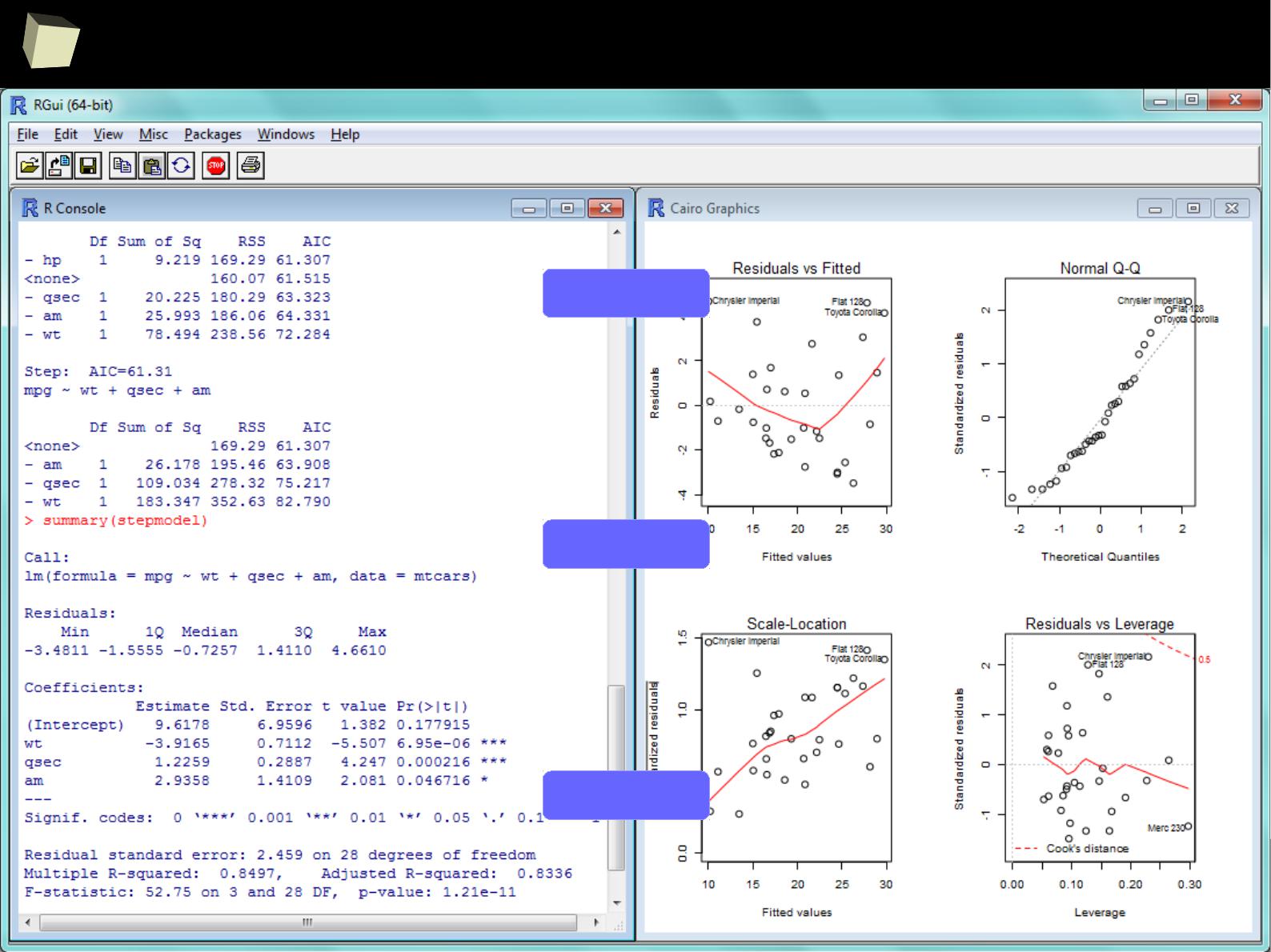

...some linear modeling...

< stepwise

regression

< best model

diagnostics >

3

9

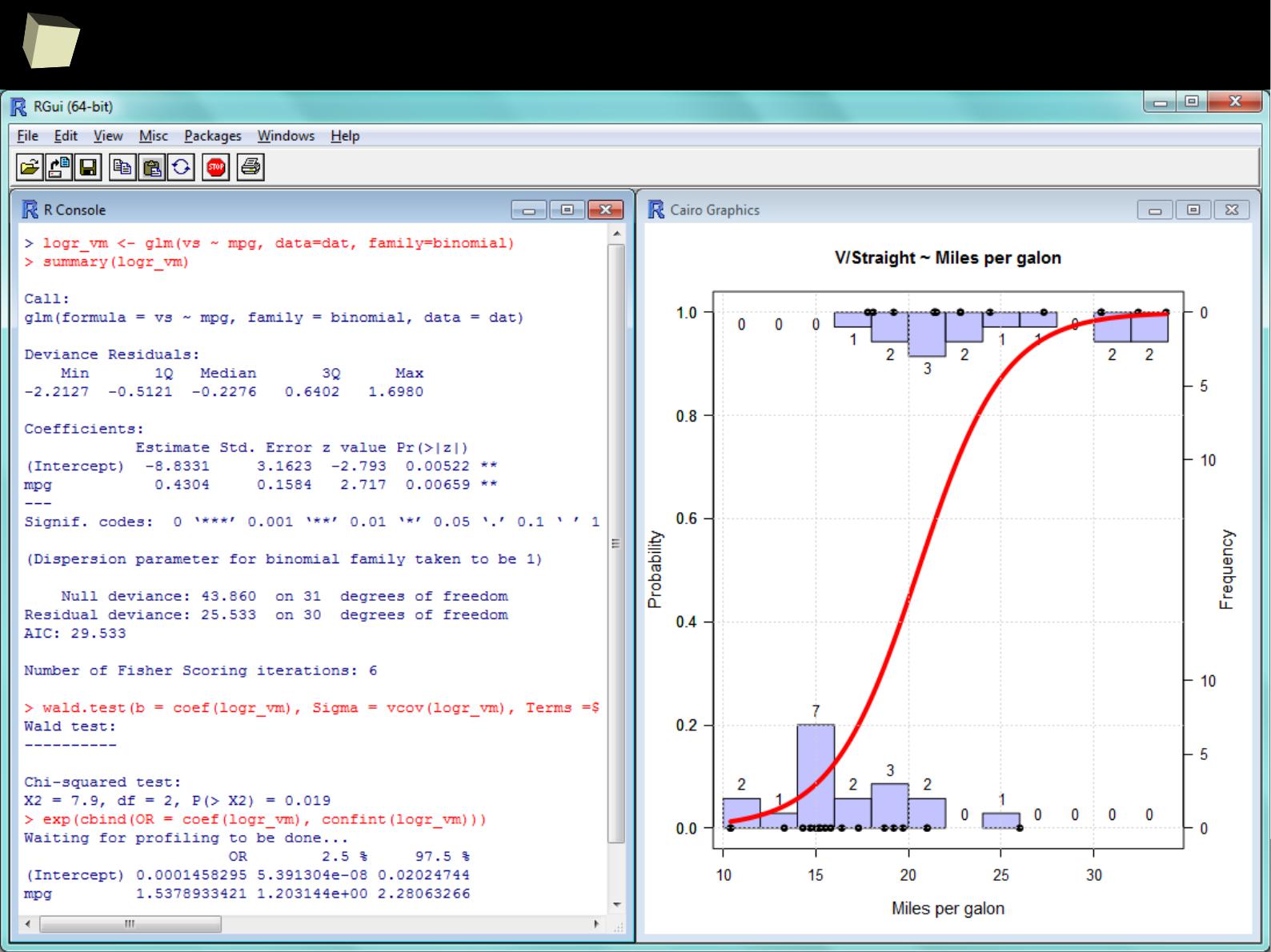

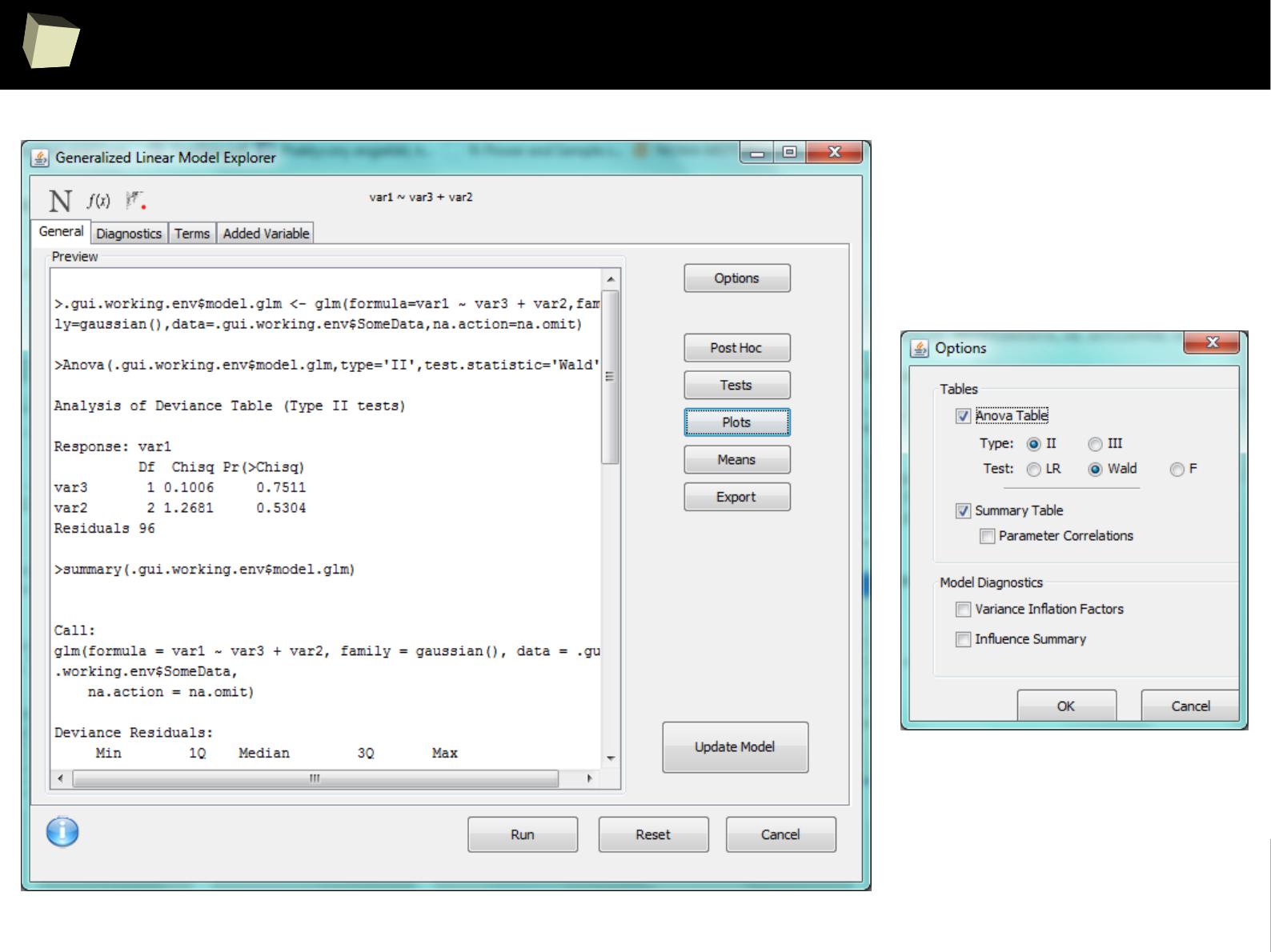

...some GLM modeling...

4

0

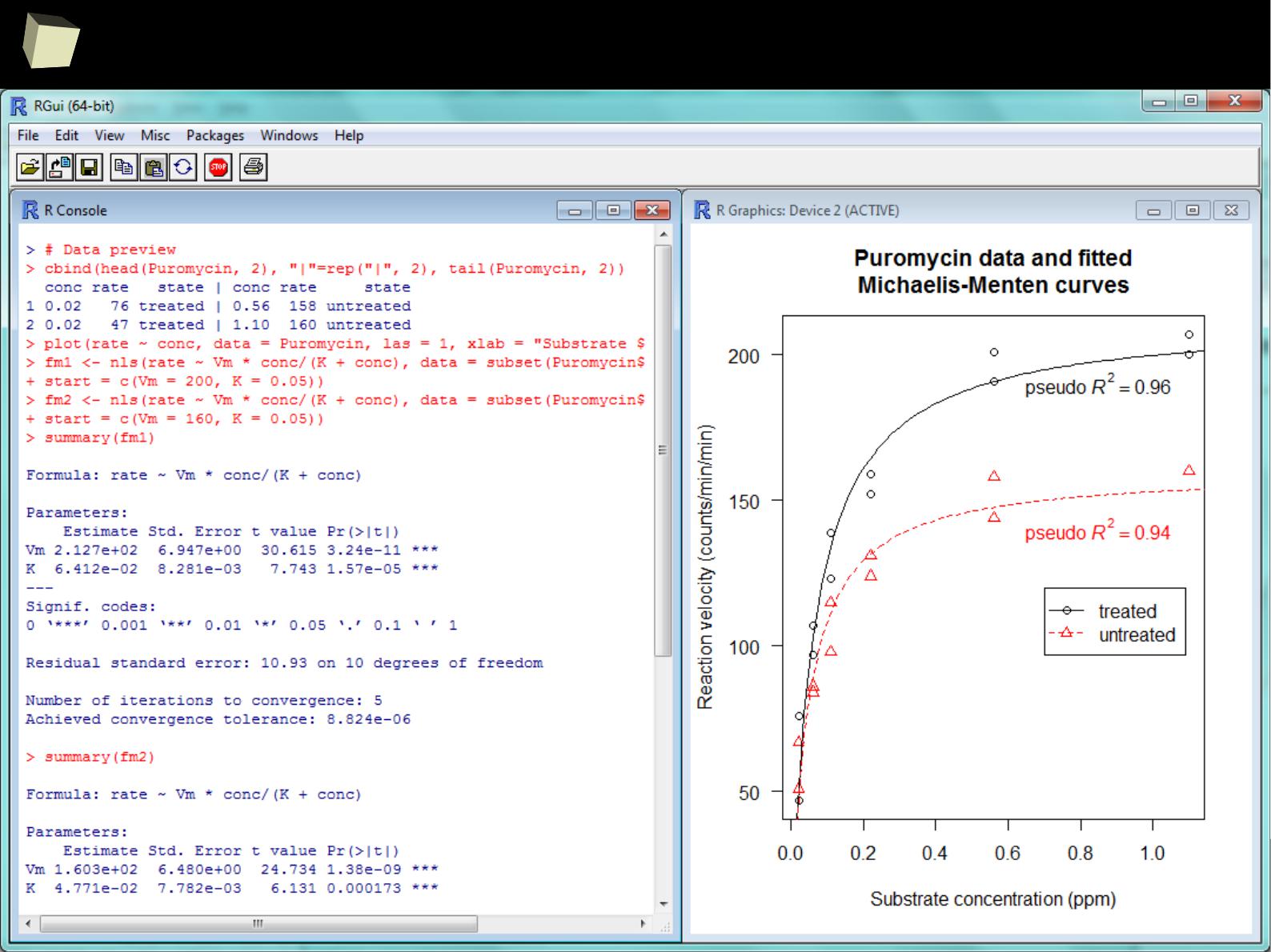

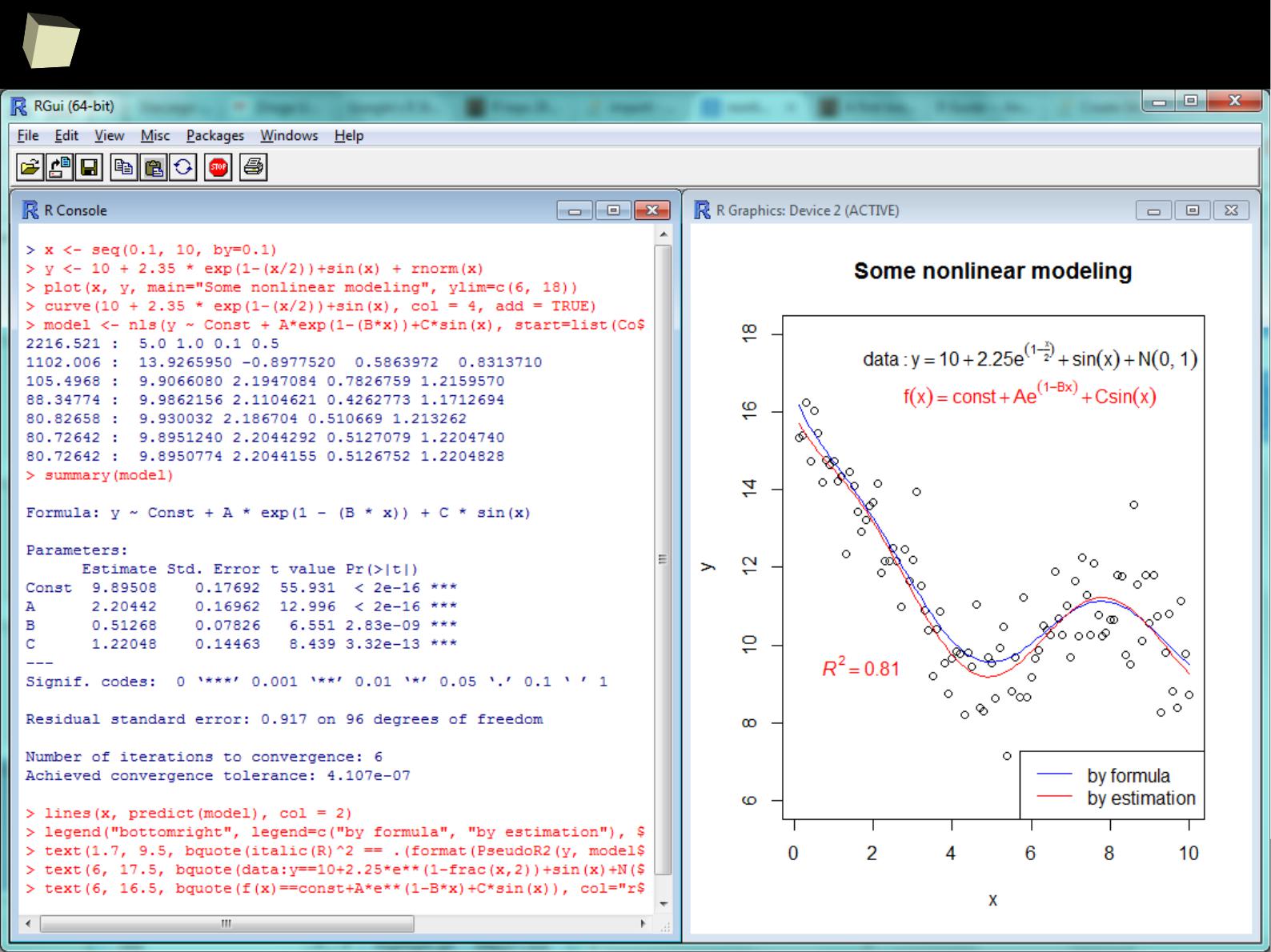

...and nonlinear modeling...

4

1

...and nonlinear modeling...

4

2

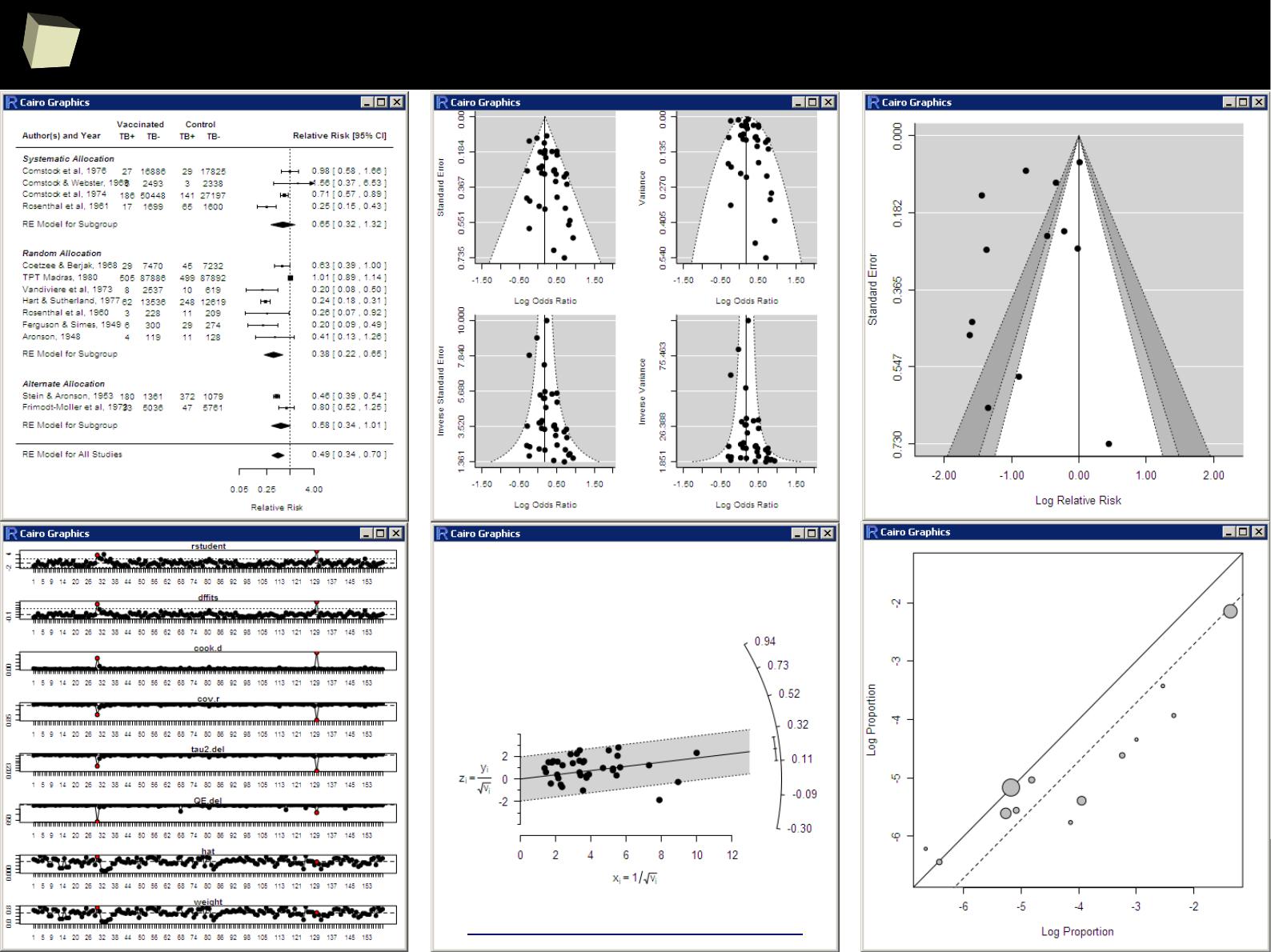

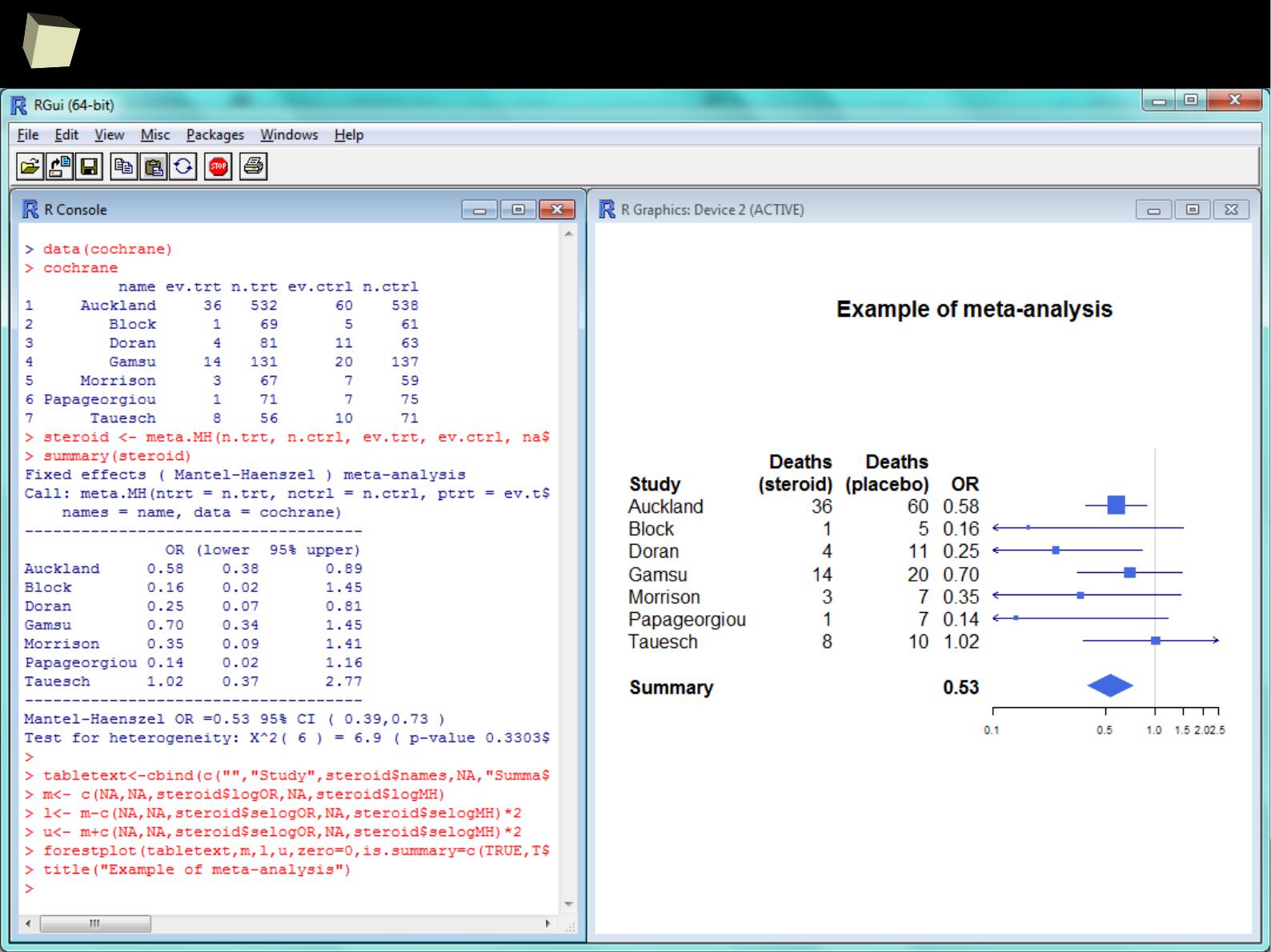

…and meta-analysis...

4

4

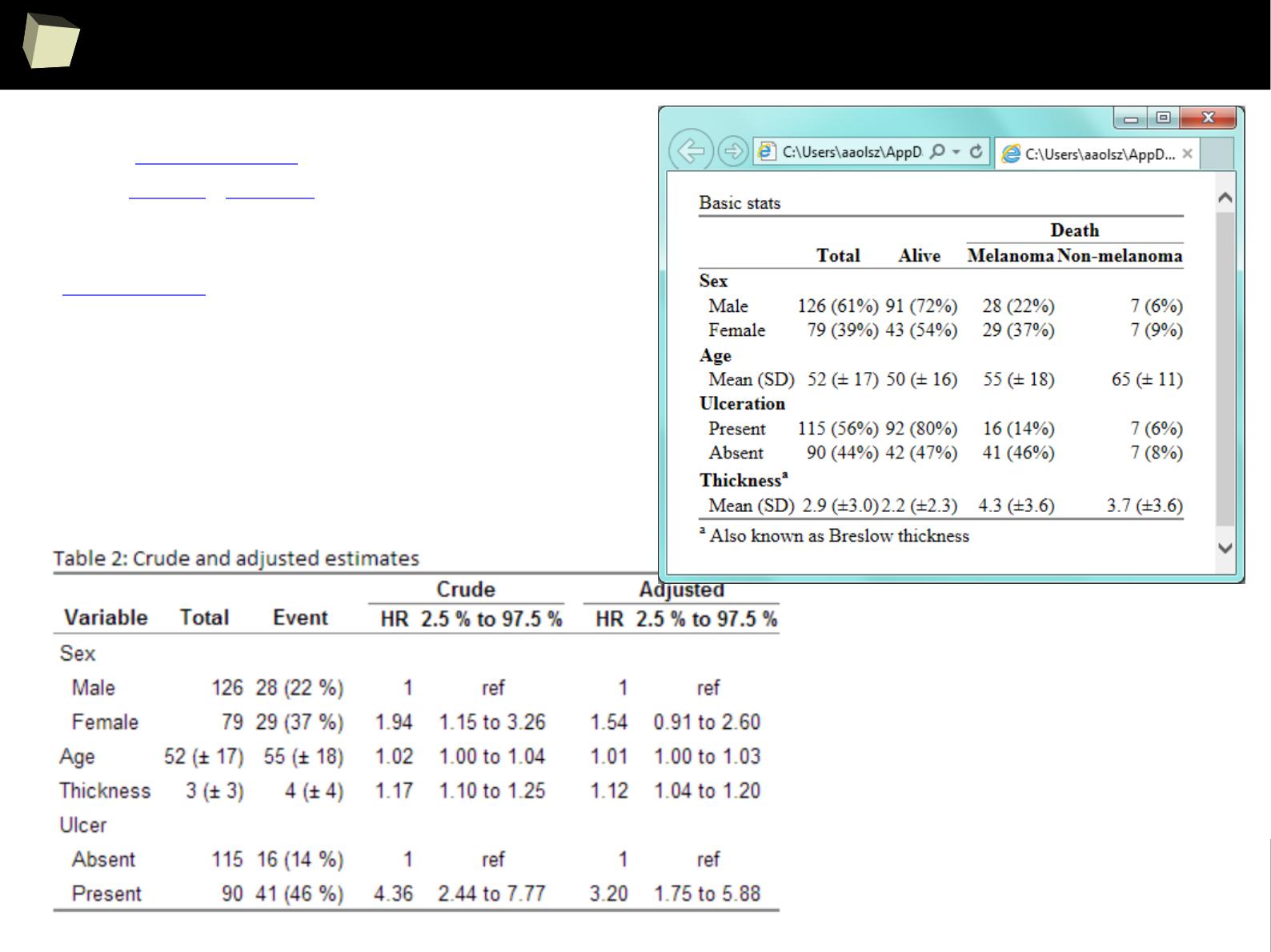

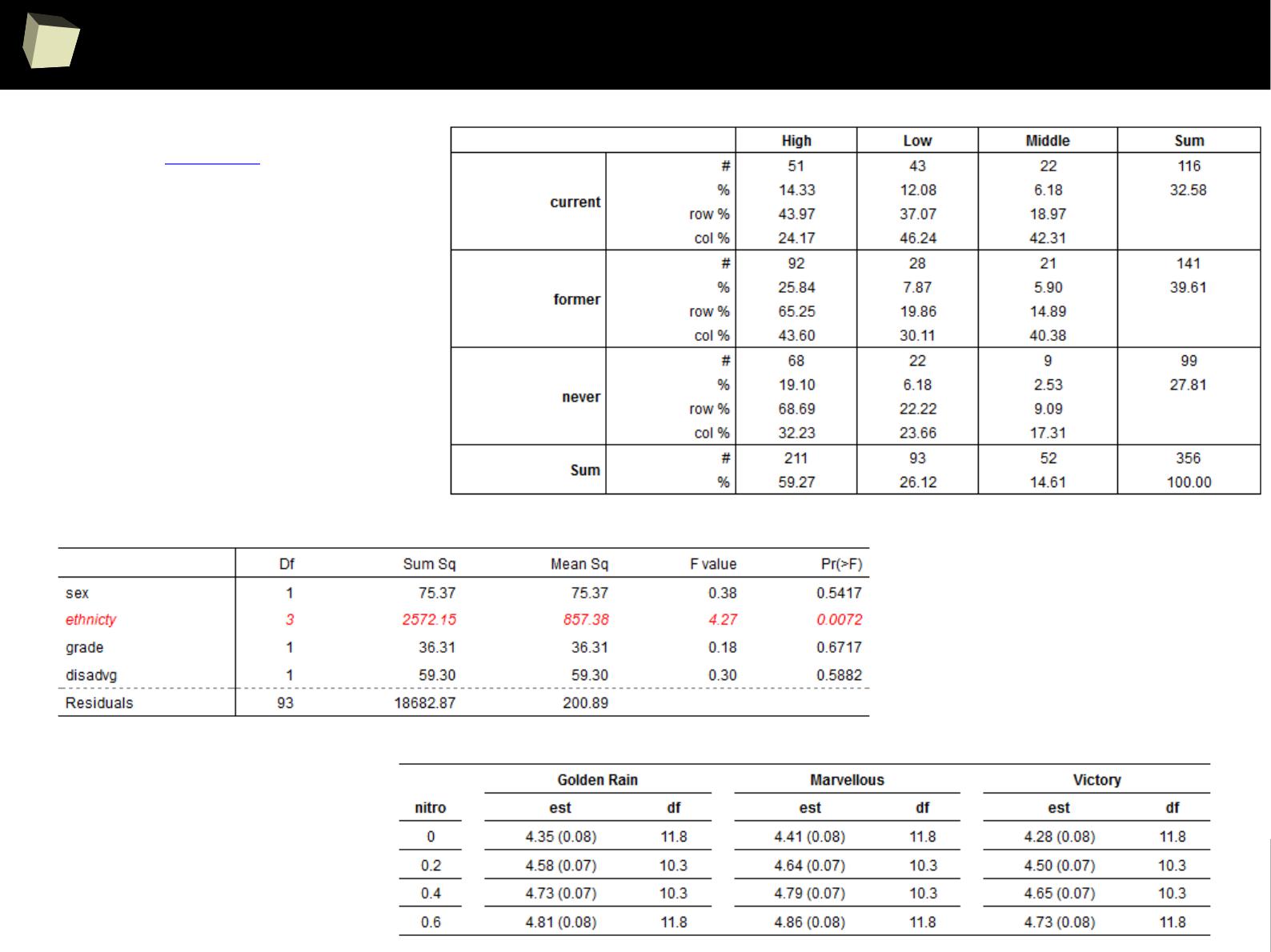

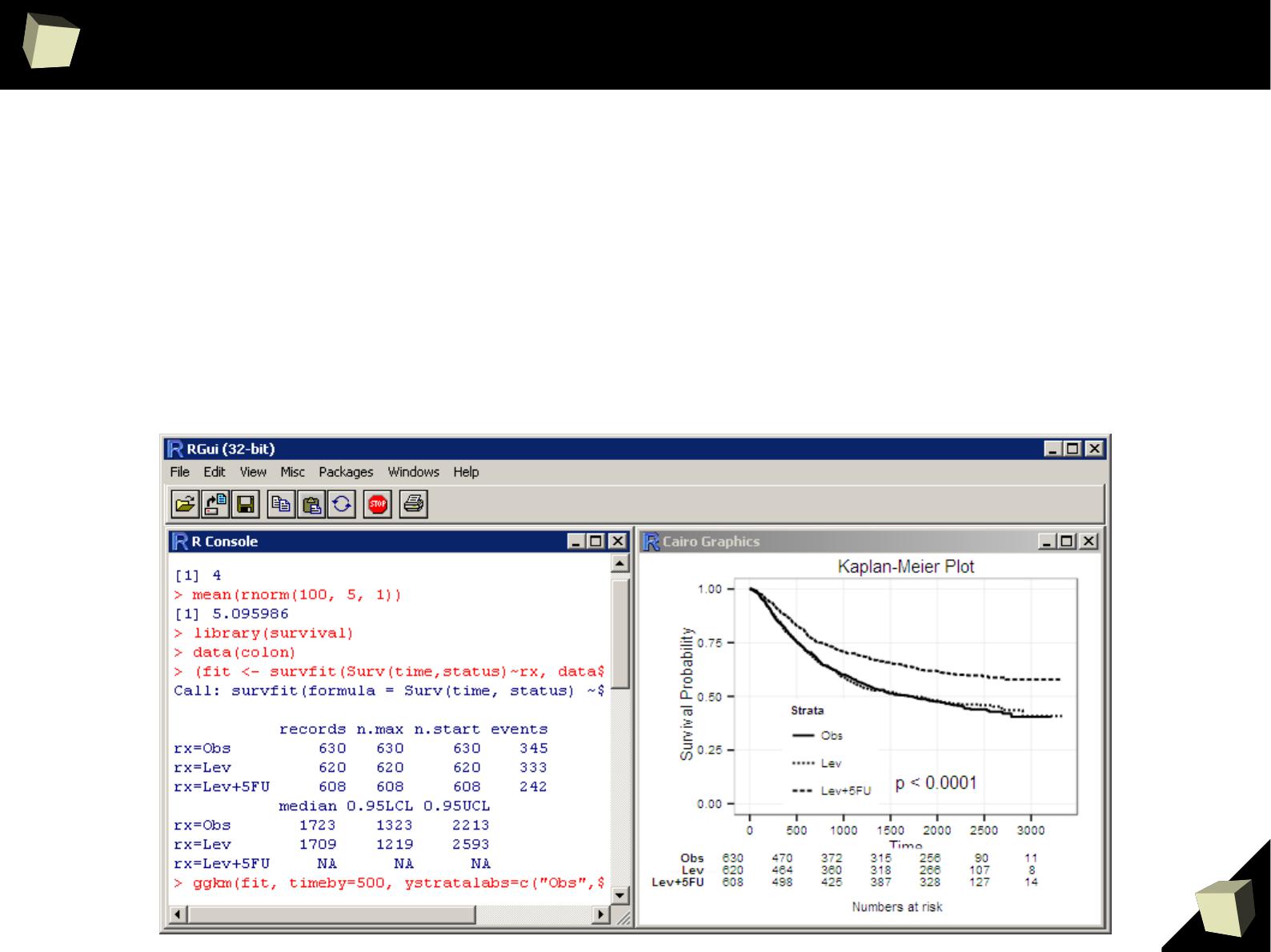

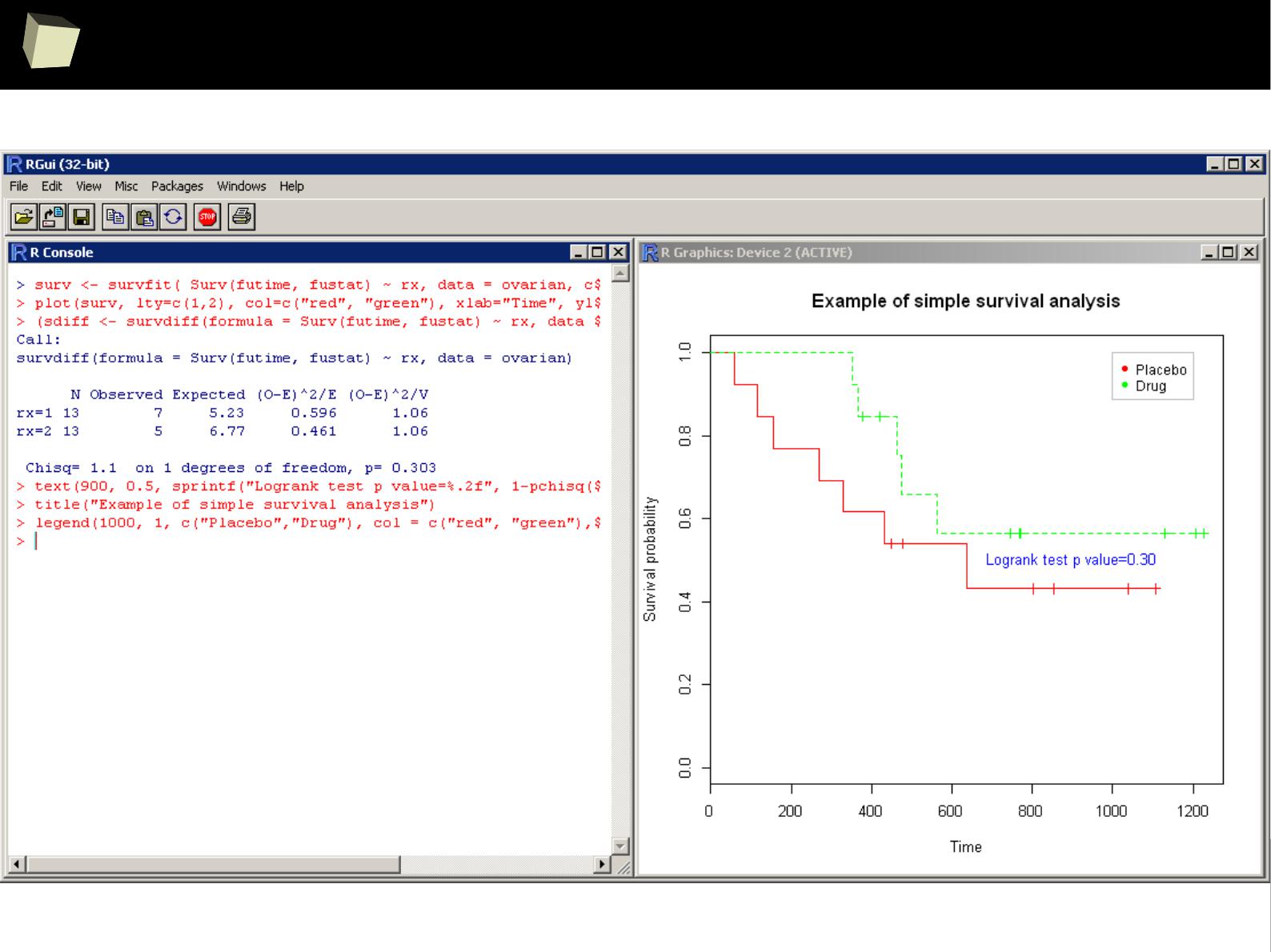

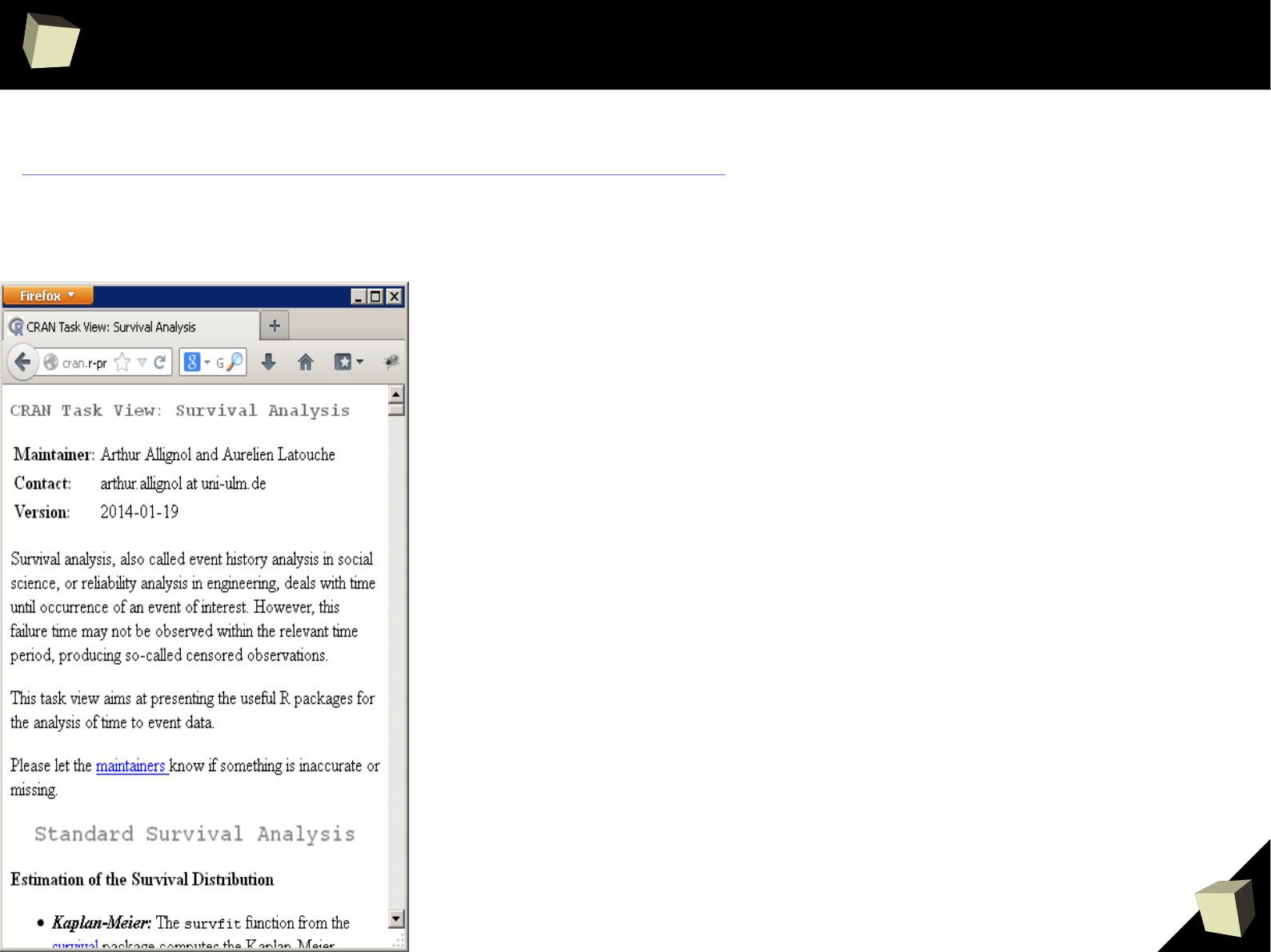

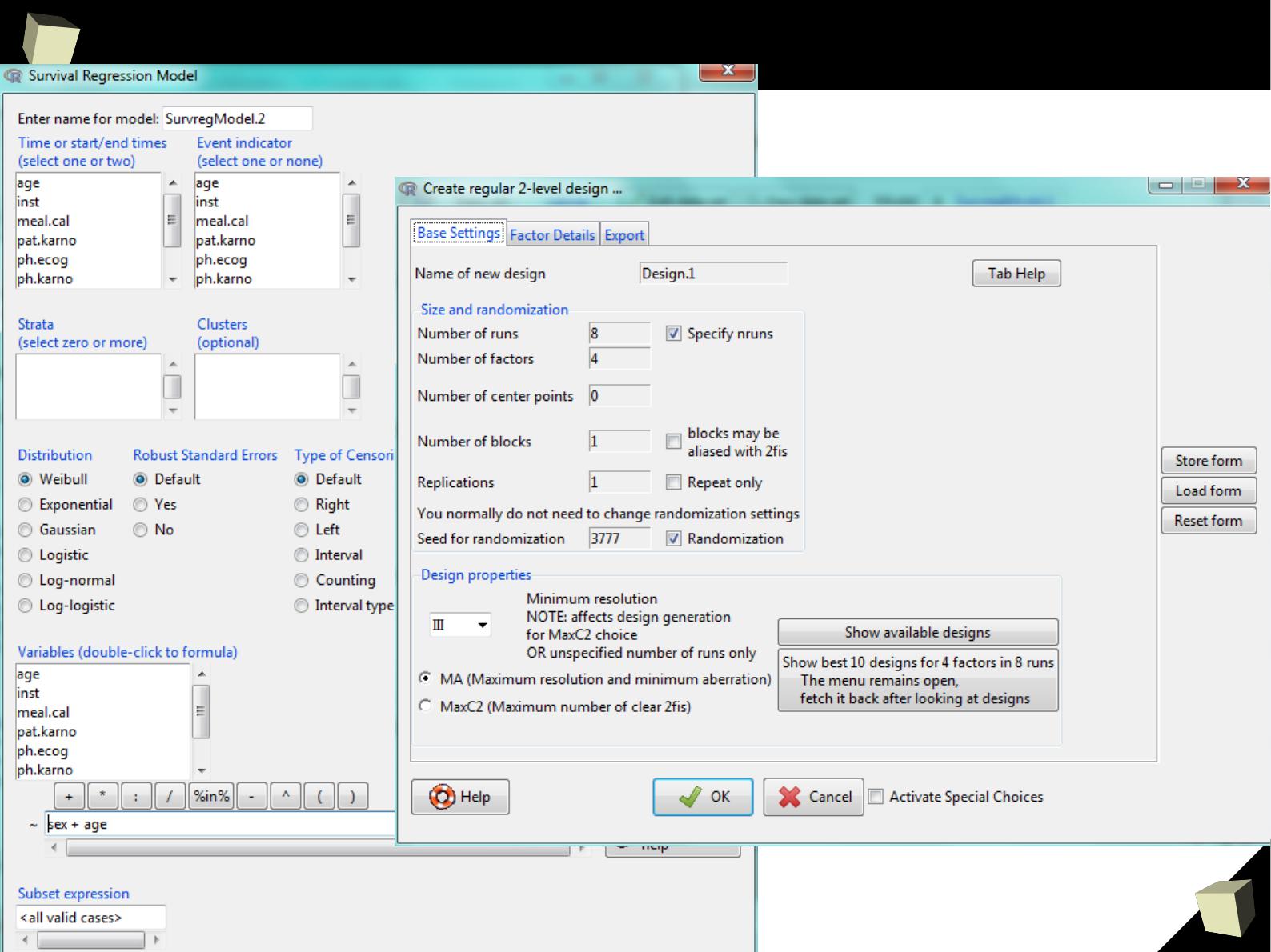

…and survival analysis...

A simple, ascetic solution...

4

5

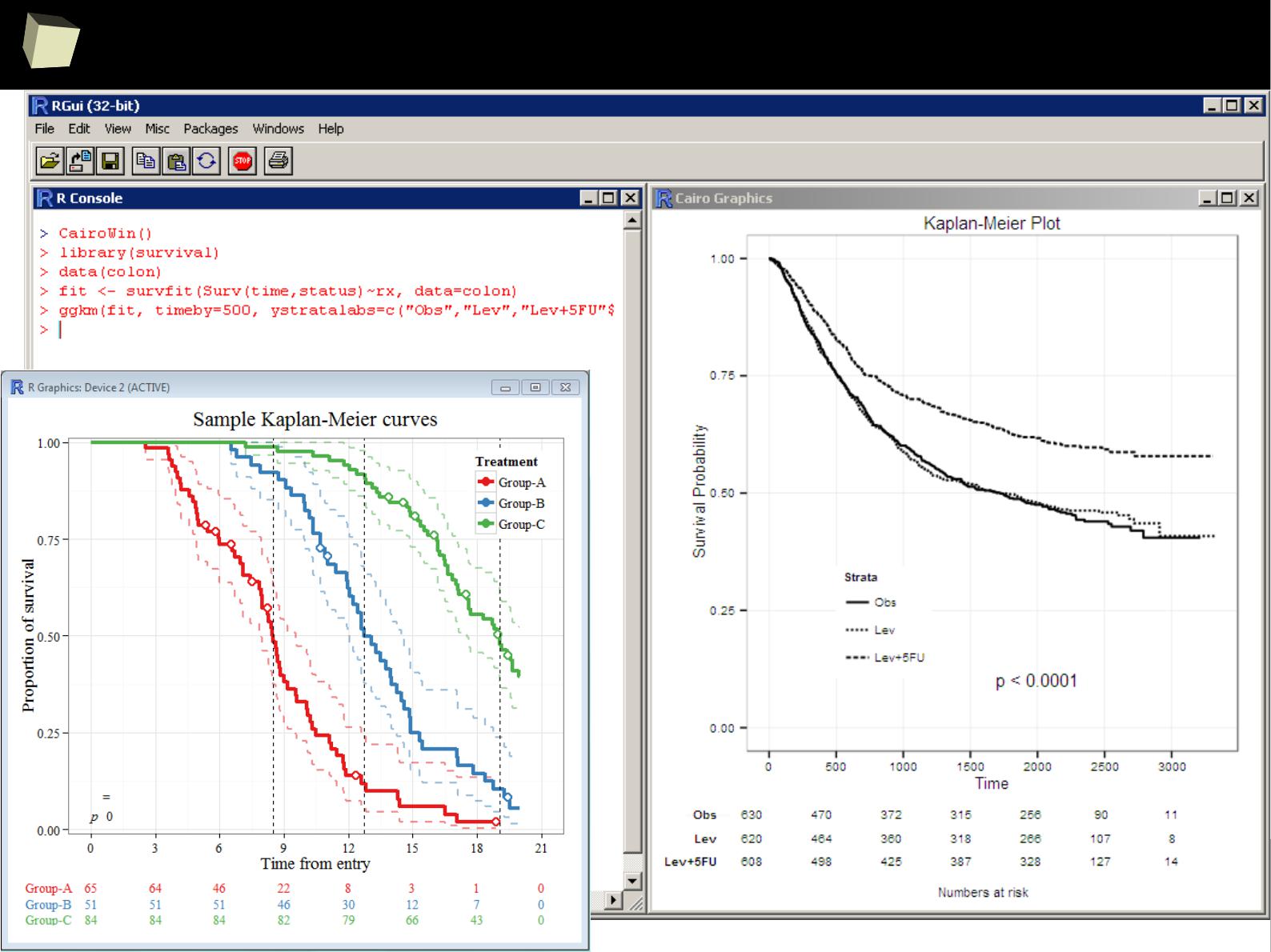

…and survival analysis...

4

6

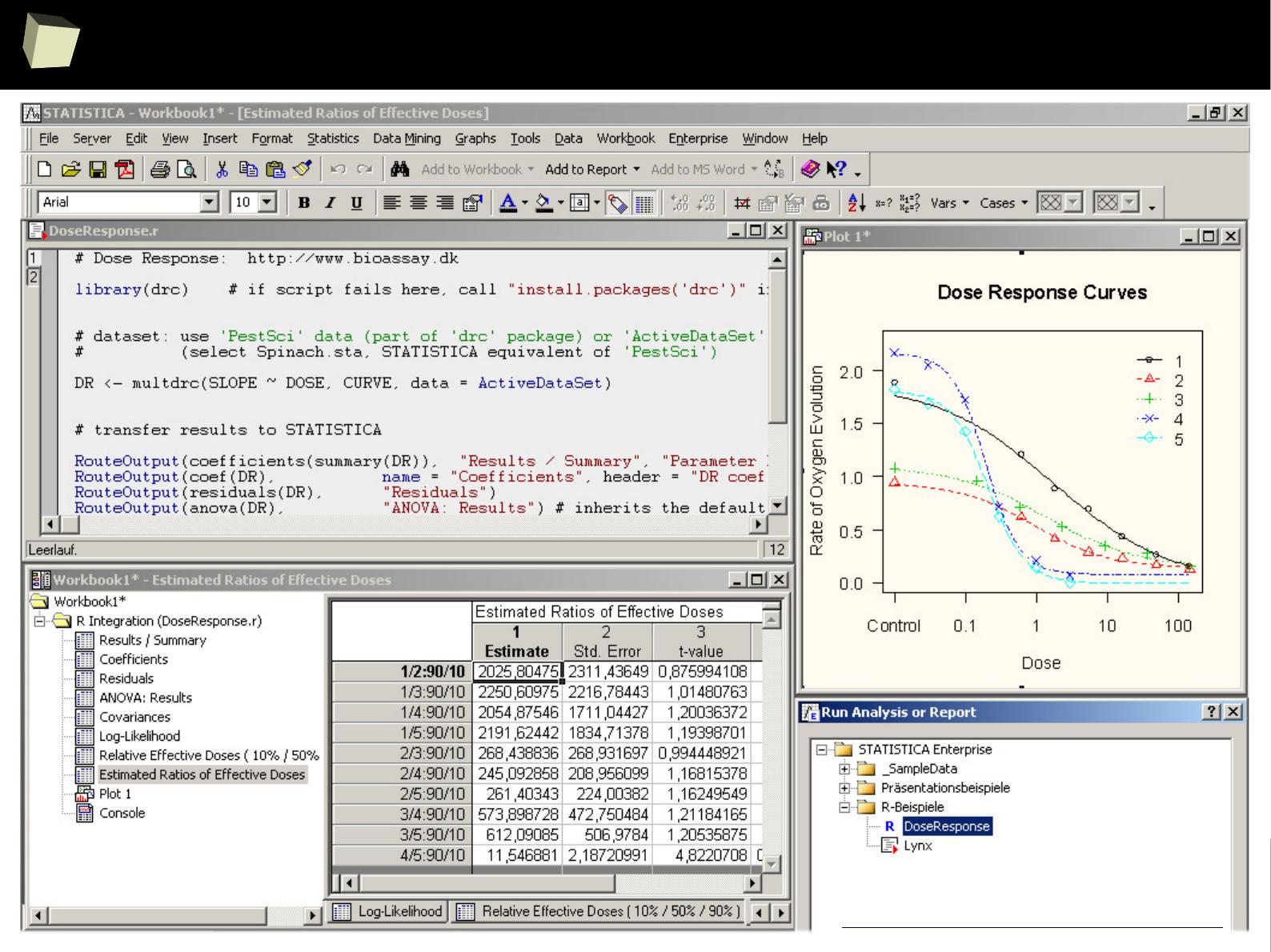

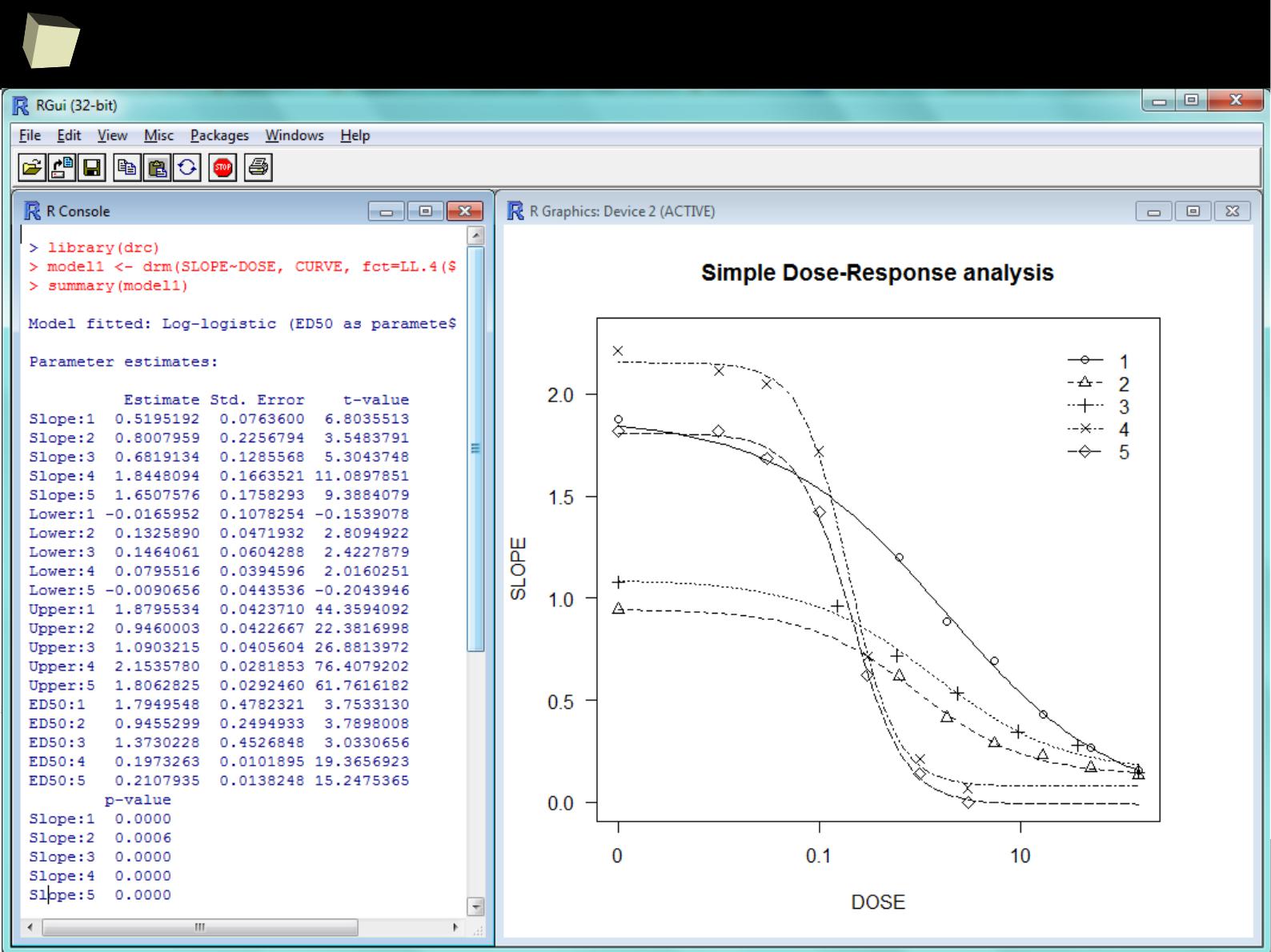

…and dose-response analysis...

4

7

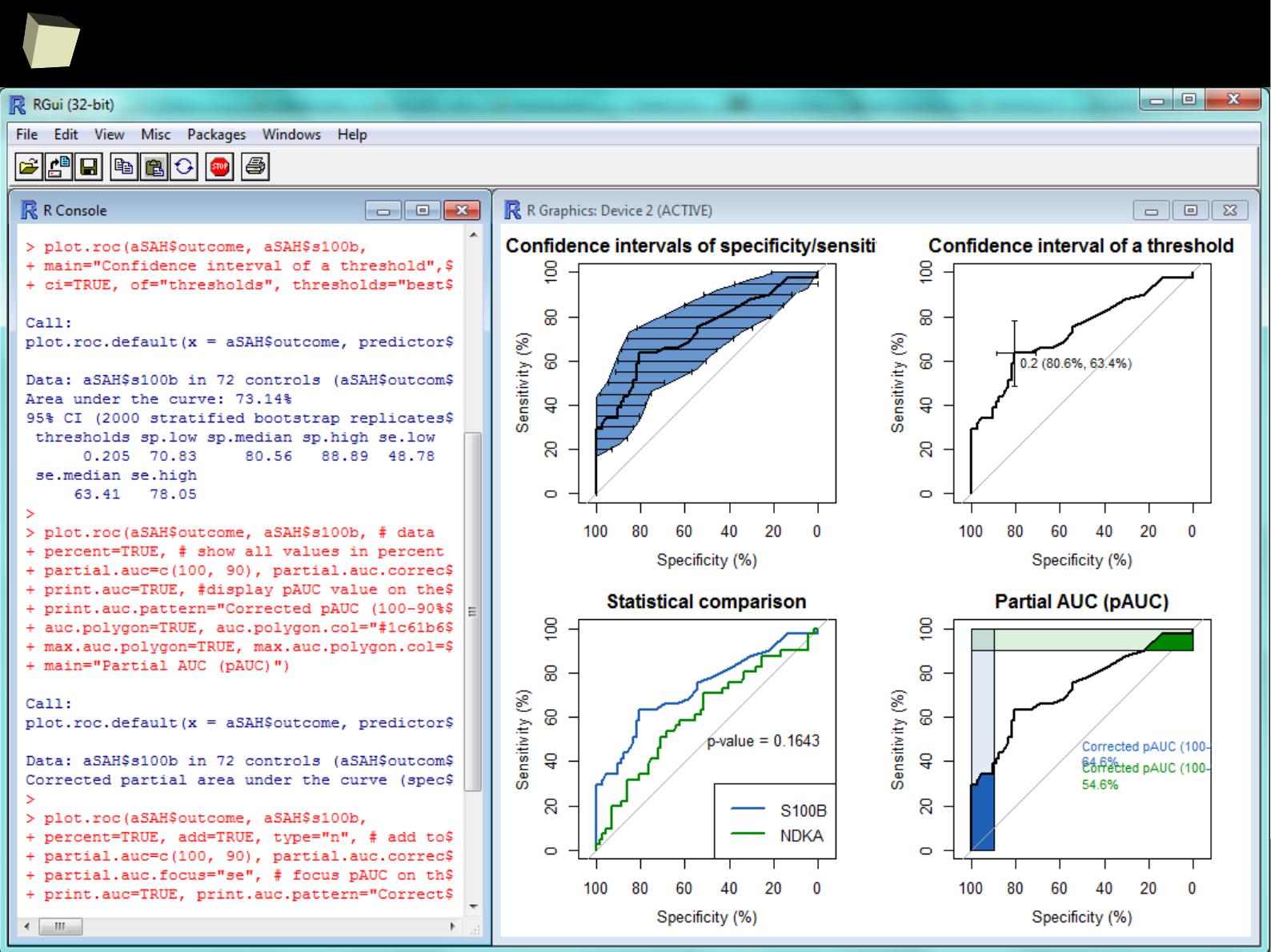

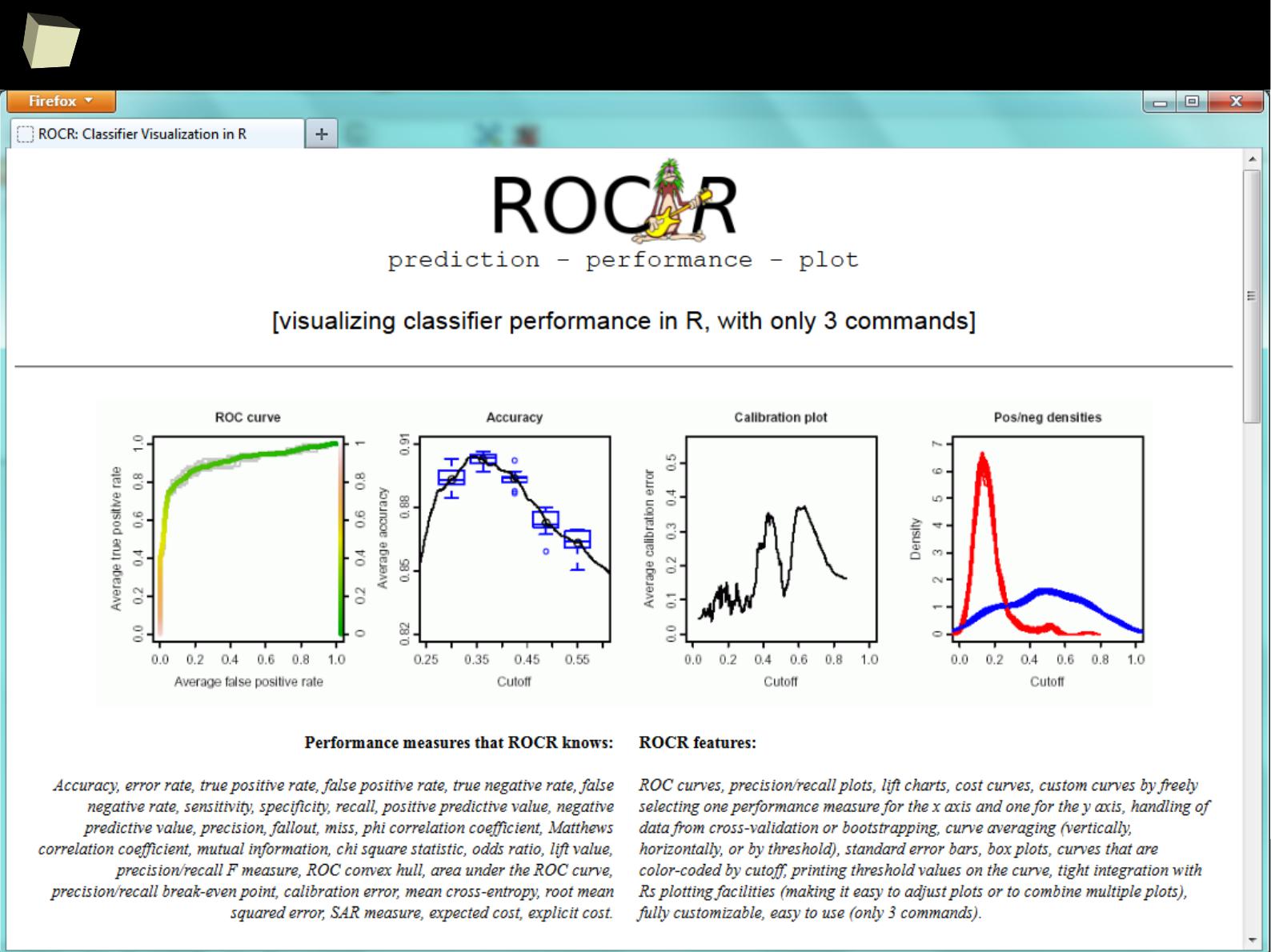

…and ROC curves...

4

8

13 reasons why you will love GNU R

4

9

13 reasons why you will love GNU R

I R is (extremely) cheap. In fact - it's free :)

II R has (extremely) wide range of capabilities

III R is supported by the world of science

IV R is supported by the community

V R is supported by the business

VI R is able to read data in many formats

VII Interoperability is easy to achieve

VIII R is truly cross-platform

IX R offers numerous ways of presenting data

X There are many options to optimize the code

XI R is able to handle large amount of data

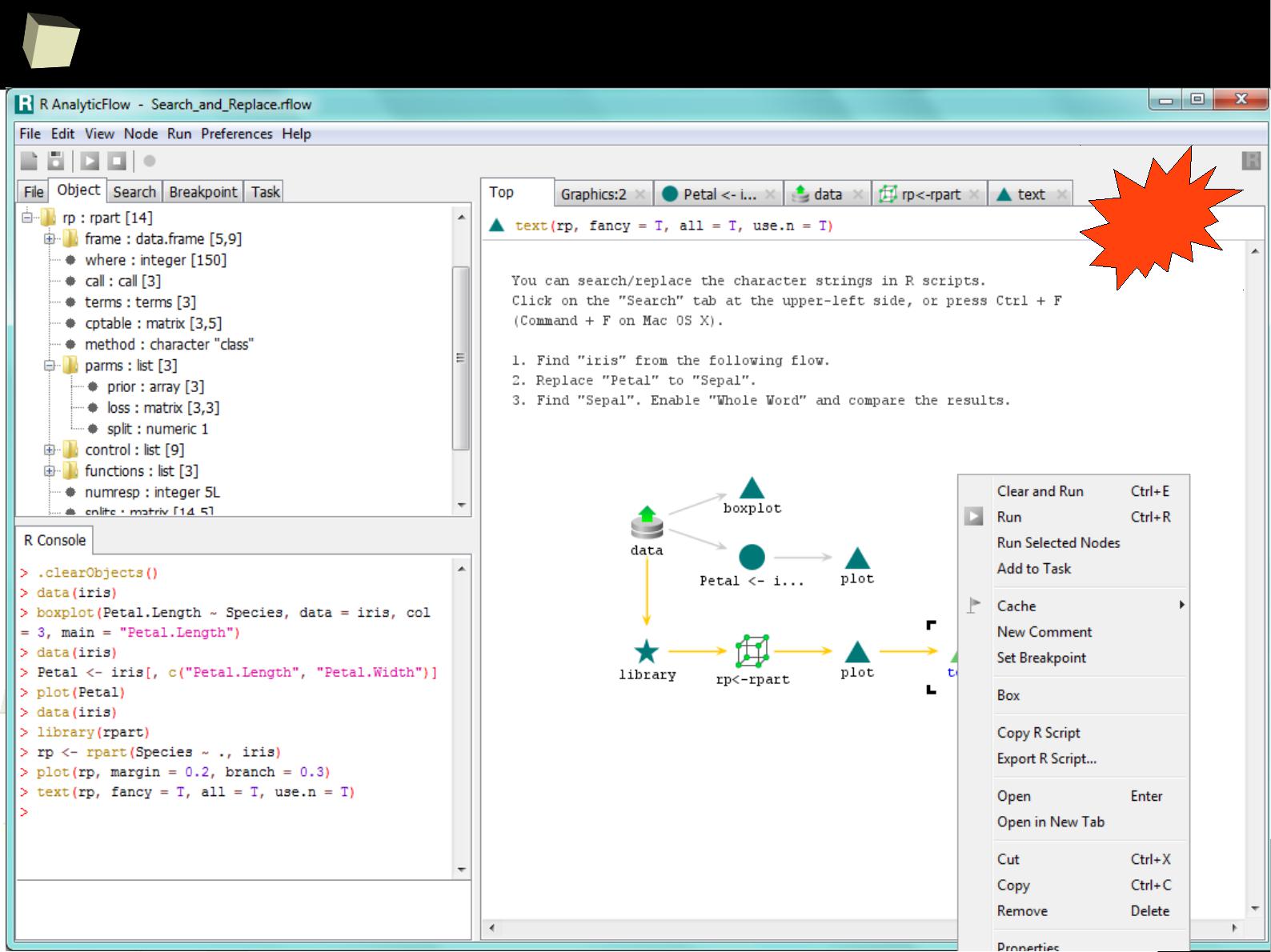

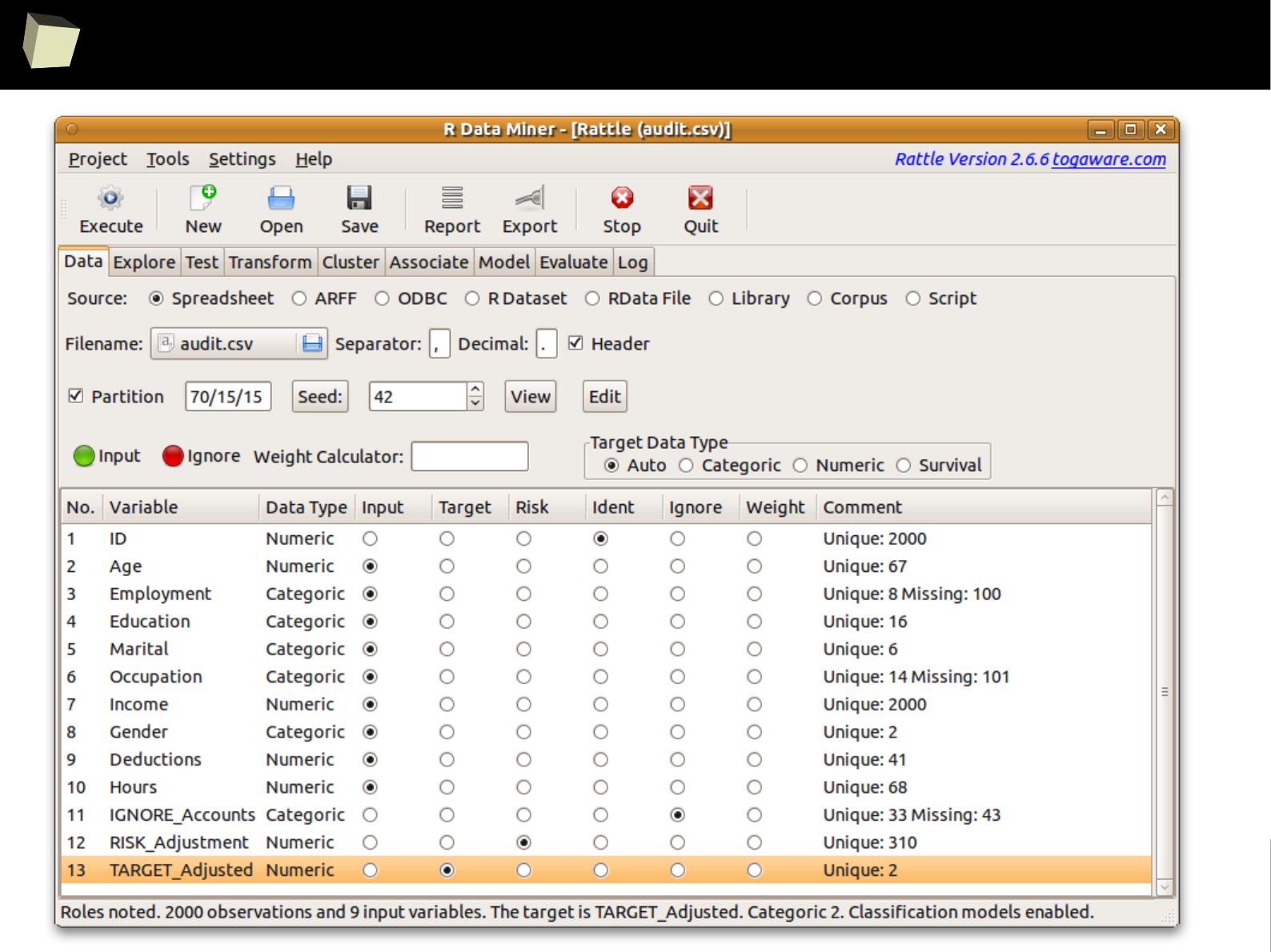

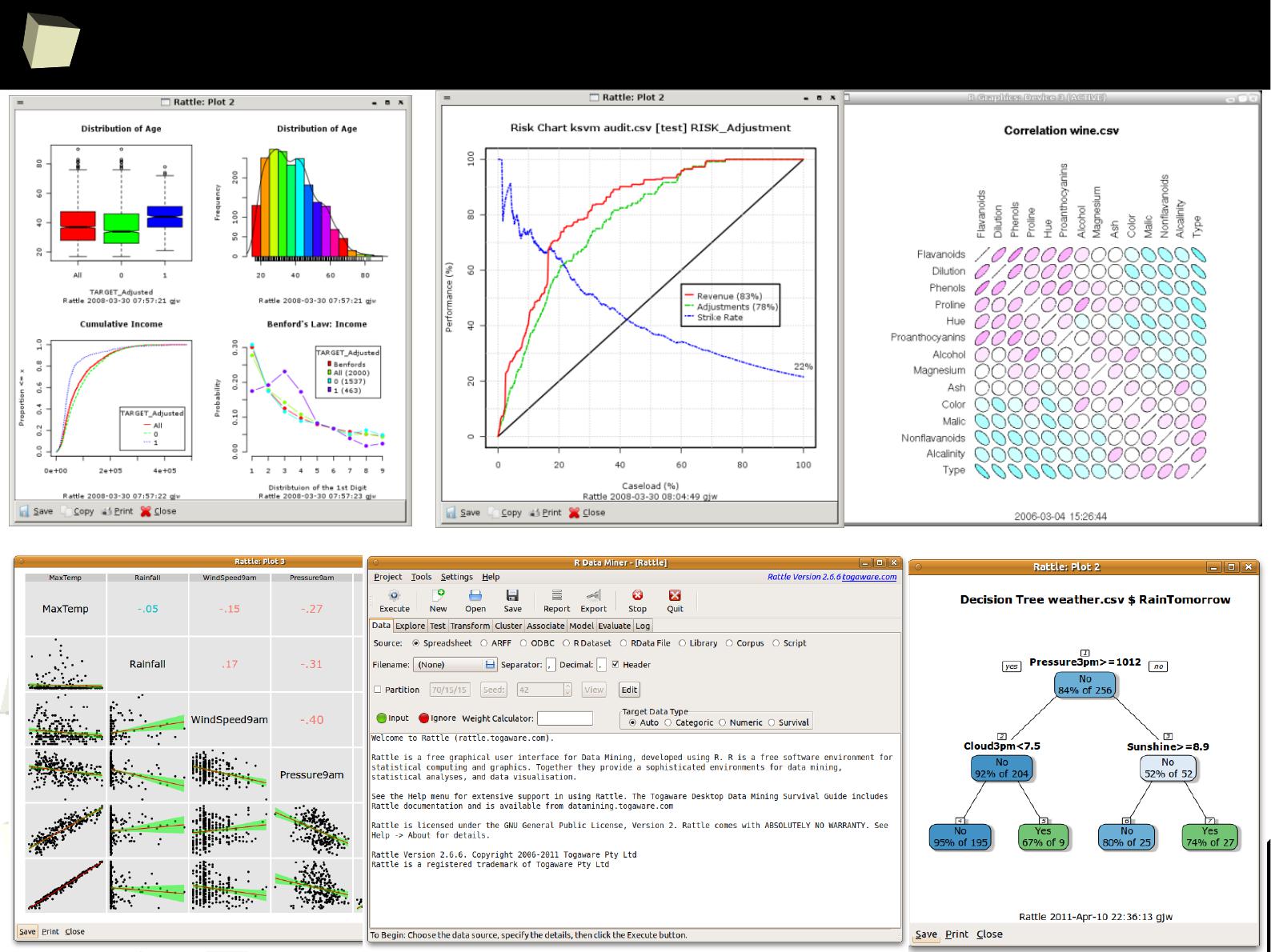

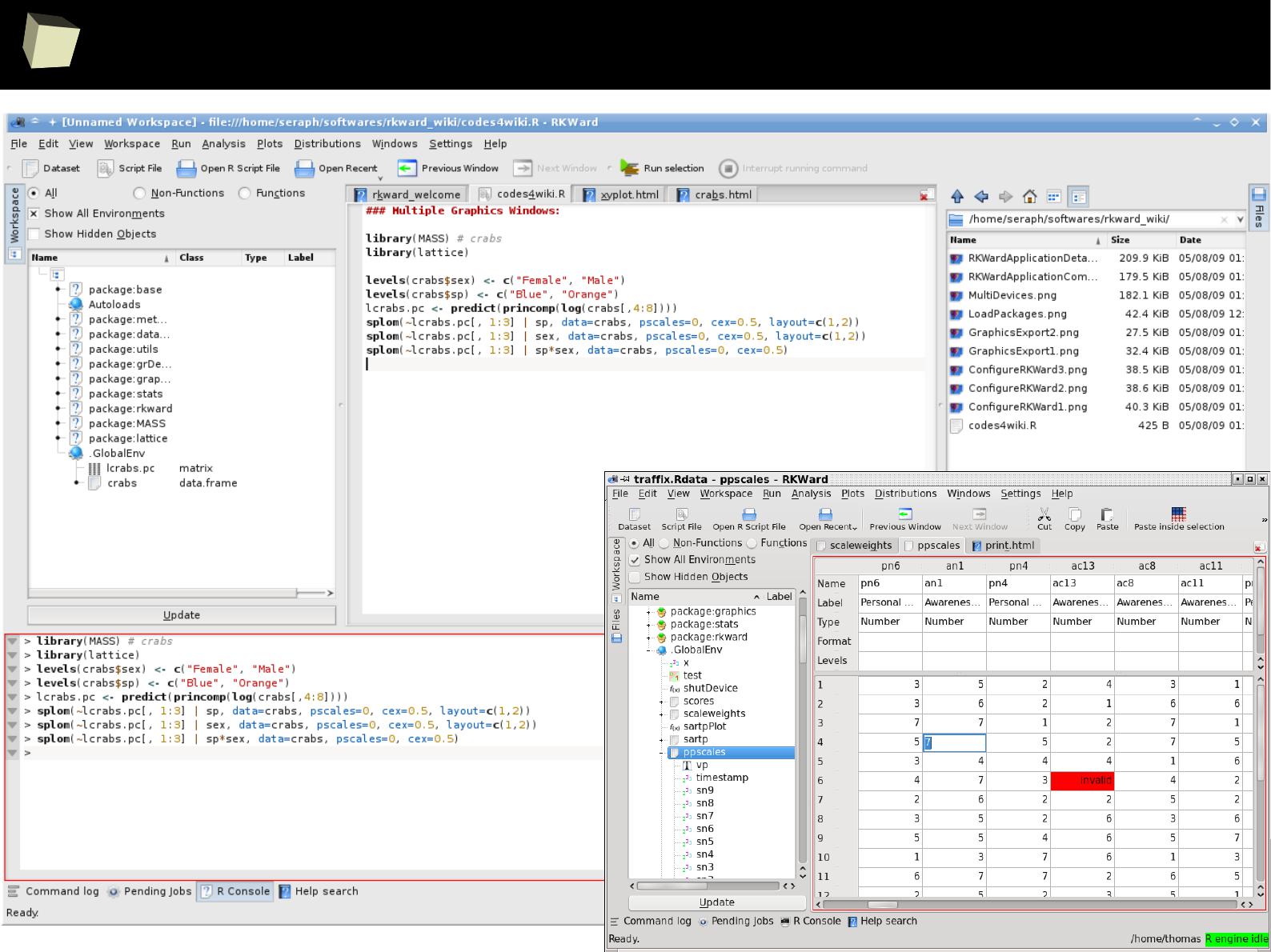

XII R has a set of fancy tools and IDEs

XIII

XIII

FDA: R is OK for drug trials!

FDA: R is OK for drug trials!

!

5

0

the pRice

GNU R is a free software.

One can legally use it, even commercially, at no cost.

http://buy.revolutionanalytics.com/

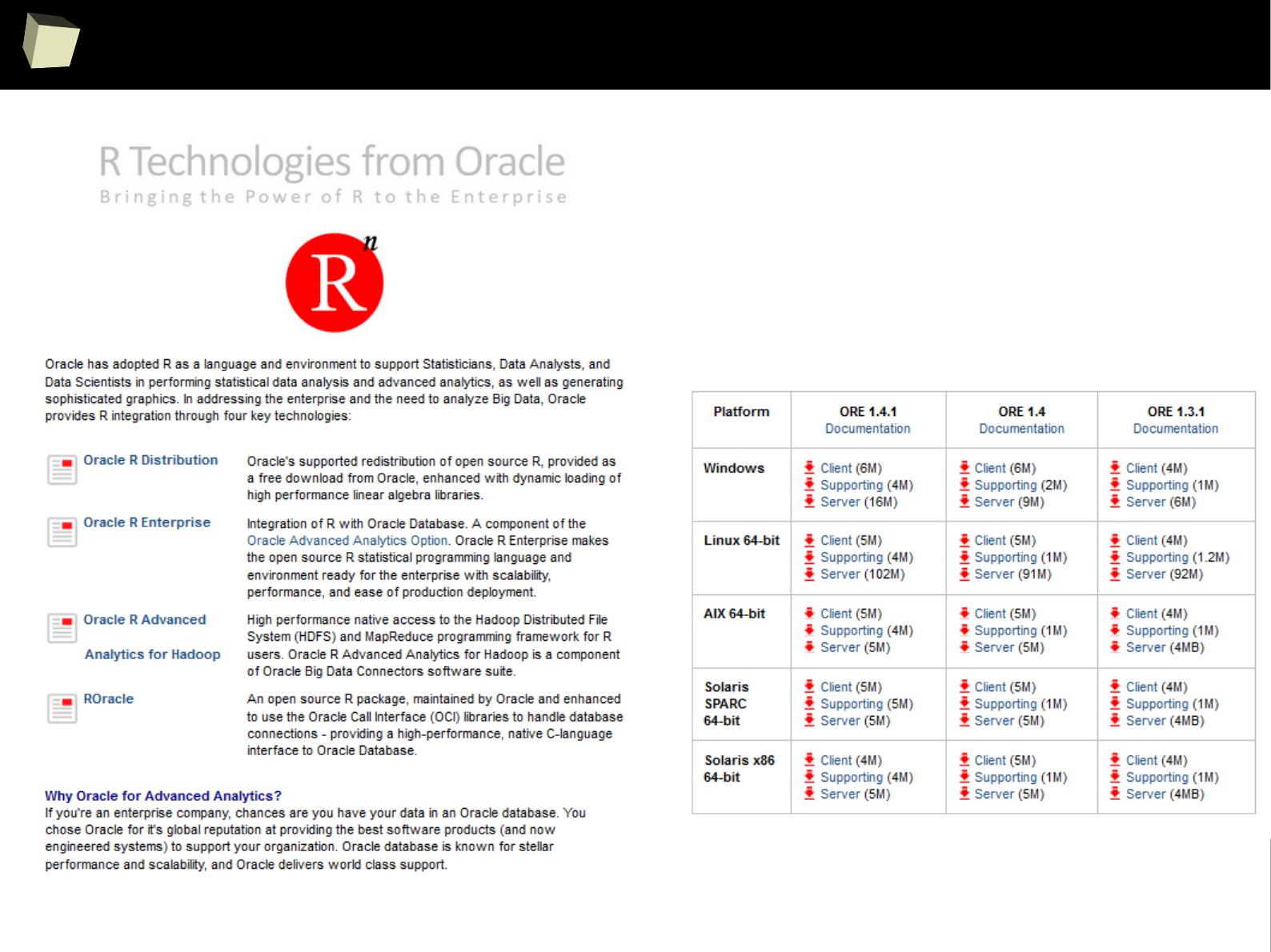

Some companies provide their own, both free and commercial, customized

versions of R along with support and integration services. Well known

providers are: Revolution (since 2015 part of Microsoft), RStudio and Oracle.

http://www.oracle.com/technetwork/...

http://www.rstudio.com/about/trademark/

http://www.rstudio.com...

http://blogs.microsoft.com/blog/2015/01/23/...

5

1

save your money with R!

Some

Some

Expensive

Expensive

Statistical

Statistical

Package

Package

Module

Module

#1

#1

Module

Module

#2

#2

Module

Module

#3

#3

an

an

expensive

expensive

Module

Module

#4

#4

call R

call R

from the

from the

Package

Package

Required

Required

algorithm or

algorithm or

functionality

functionality

bidirectional

data

exchange

No, thanks!

a “bridge”

between

the Package

and R

5

2

Licenses

The following licenses are in use for R and associated software:

●

GNU Affero GPL v.3

●

Artistic License" v.2.0

●

BSD 2-clause

●

BSD 3-clause

●

GNU GPL v.2

●

GNU GPL v.3

●

GNU Library GPL v.2

●

GNU Lesser GPL v.2.1

●

GNU Lesser GPL v.3

●

Massachusetts Institute of Technology (X11)

R as a package is licensed under GPLv2

5

3

13 reasons why you will love GNU R

I R is (extremely) cheap. In fact - it's free :)

II R has (extremely) wide range of capabilities

III R is supported by the world of science

IV R is supported by the community

V R is supported by the business

VI R is able to read data in many formats

VII Interoperability is easy to achieve

VIII R is truly cross-platform

IX R offers numerous ways of presenting data

X There are many options to optimize the code

XI R is able to handle large amount of data

XII R has a set of fancy tools and IDEs

XII FDA accepted using R for drug trials!

5

4

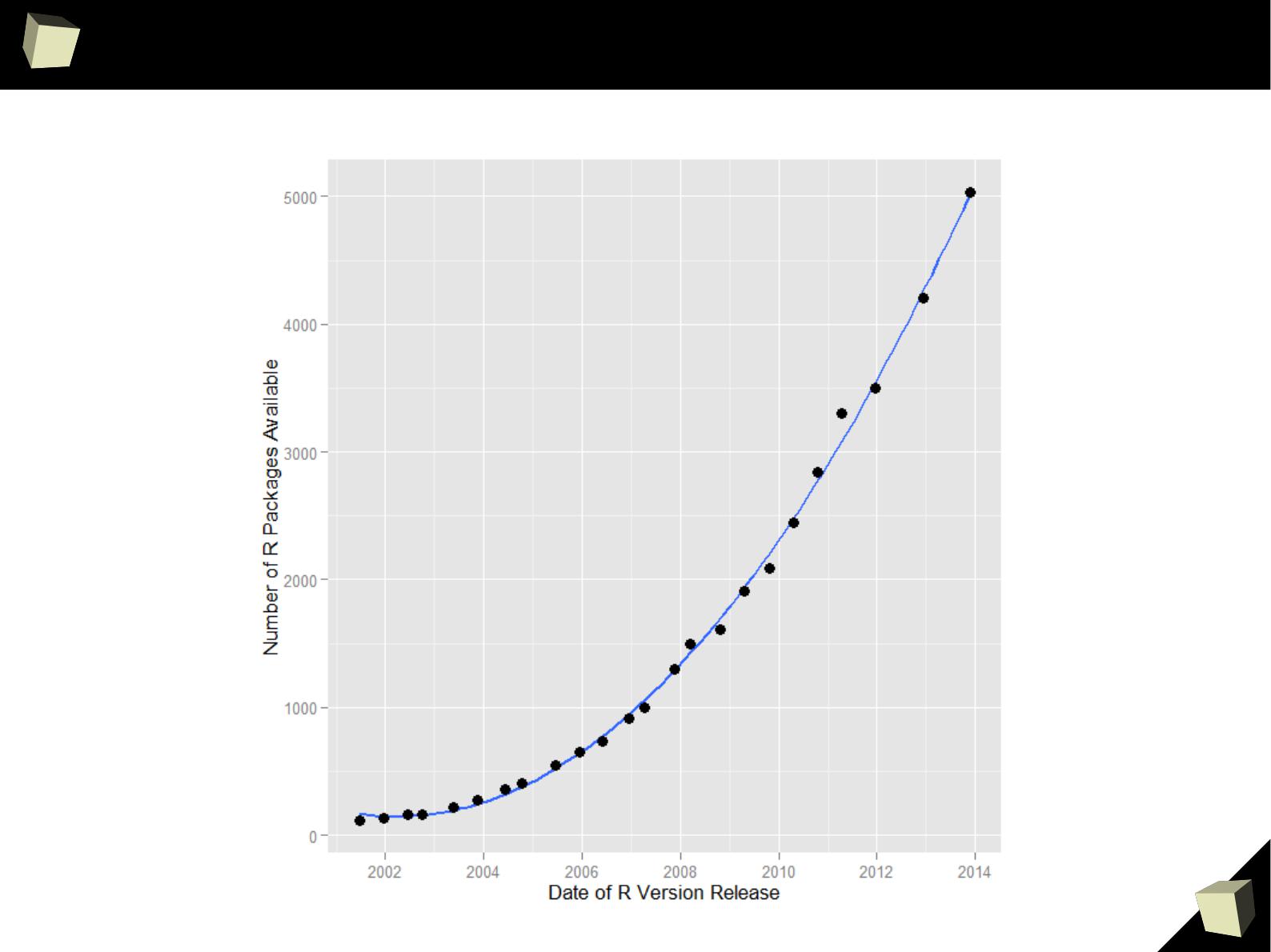

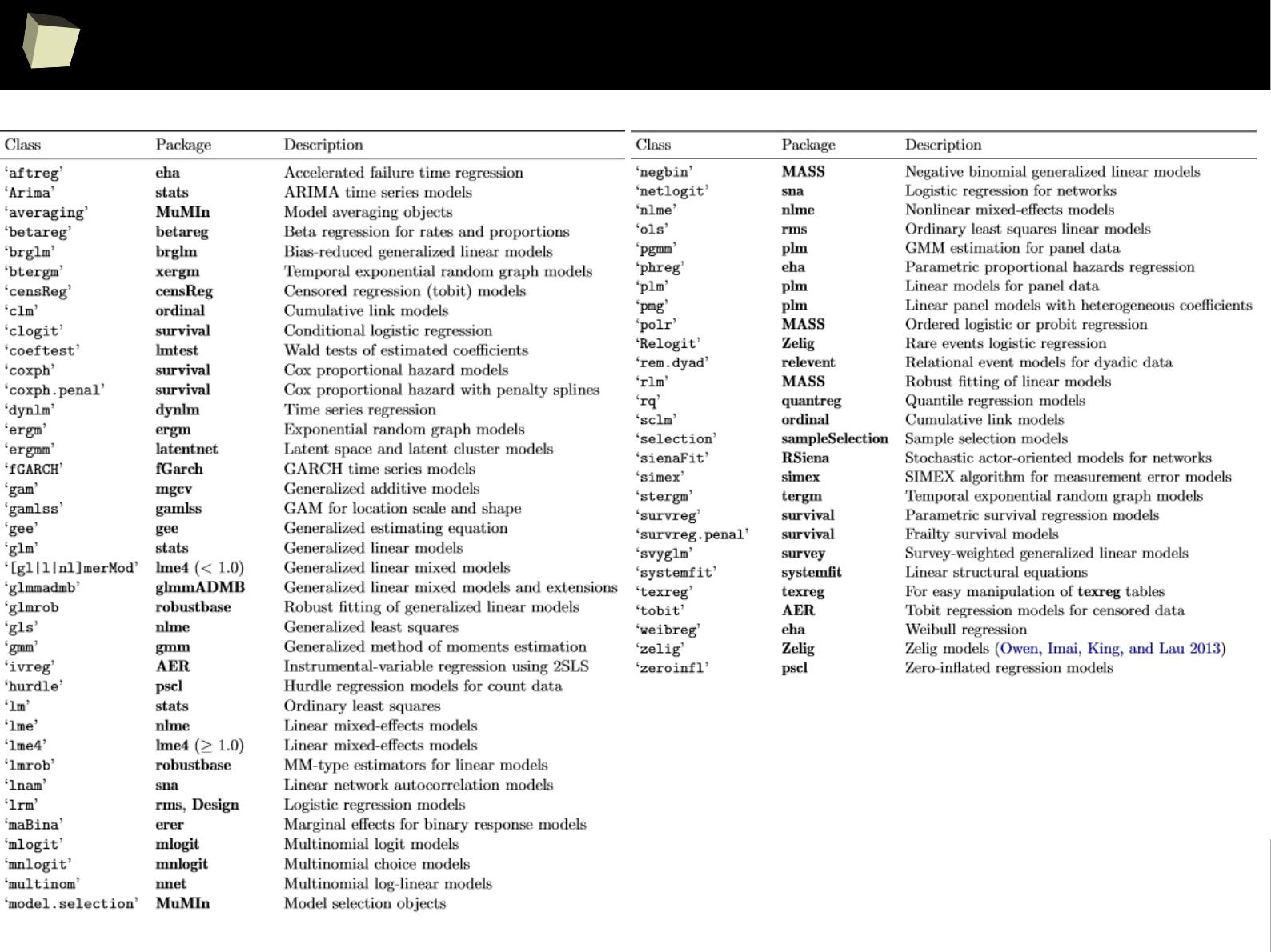

the Comprehensive R Archive Network

Sit down and hold tight! CRAN holds over 6660

nrow(available.packages()), 15.06.2015

packages.

Just describe your problem or ask me for a statistical test or procedure and

I will give you the right package(s):

●

Linear models of ANY kind. (M)AN(C)OVA, regression (linear, logistic, hierarchical, etc.)

●

Post-factum analysis and planned comparisons

●

Nonlinear models (NLS, Generalized Additive Models with a rich set of smoothers), trees

●

Robust methods: Regularized methods, M-estimators

●

Models with fixed and random effects (mixed models). Variance components

●

Monte Carlo methods (permutational, bootstrap). Exact methods.

●

Survival • PK/PD • Superior / Non inferior. / Equiv. / Bioequiv. trials • Meta-analysis

●

Design of experiments – including those applicable in clinical research

●

Structural equations. Time series. Forecasting.

●

Methods for analyzing multidimensional data: NN, SVM, LDA/QDA, PCA, FA, CC, MS, KLT,

CA, MART, POLYMARS, PINDIS, PPR, ACE, AVAS, K-means, KN, and lots of more!

●

Trees (CART, CHAID). Random forests. Aggregating (boosting, bagging)

●

Reproducible research. Graphical User Interfaces. Widely understood interoperability.

http://cran.r-project.org/web/views

5

5

Growth in the number of packages

5

6

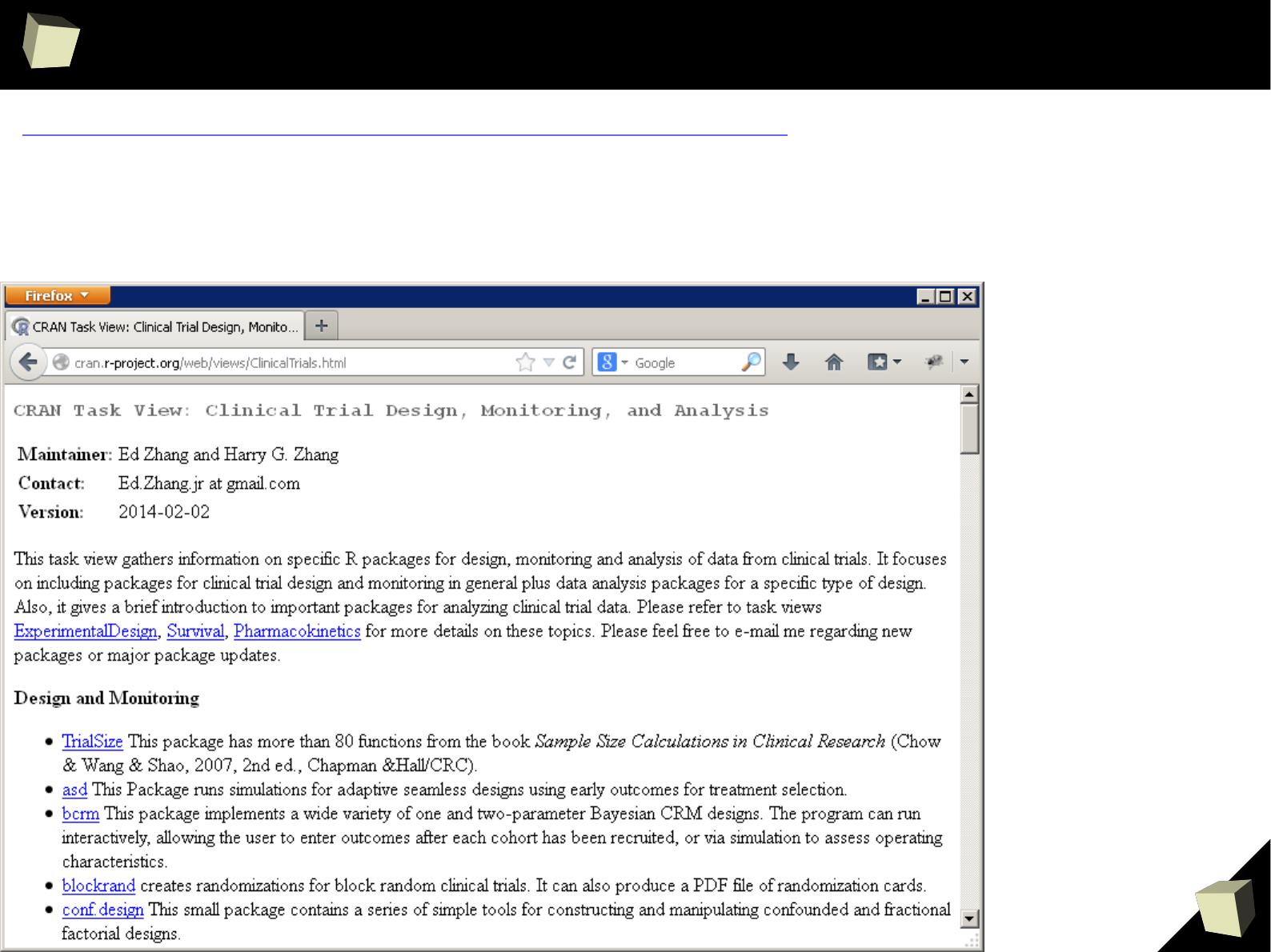

CRAN

[biostatistics]

A list of thematic sections covered by the CRAN repository:

●

Bayesian Inference

●

Chemometrics and Computational Physics

●

Clinical Trial Design, Monitoring, and Analysis

●

Cluster Analysis & Finite Mixture Models

●

Differential Equations

●

Probability Distributions

●

Computational Econometrics

●

Analysis of Ecological and Environmental Data

●

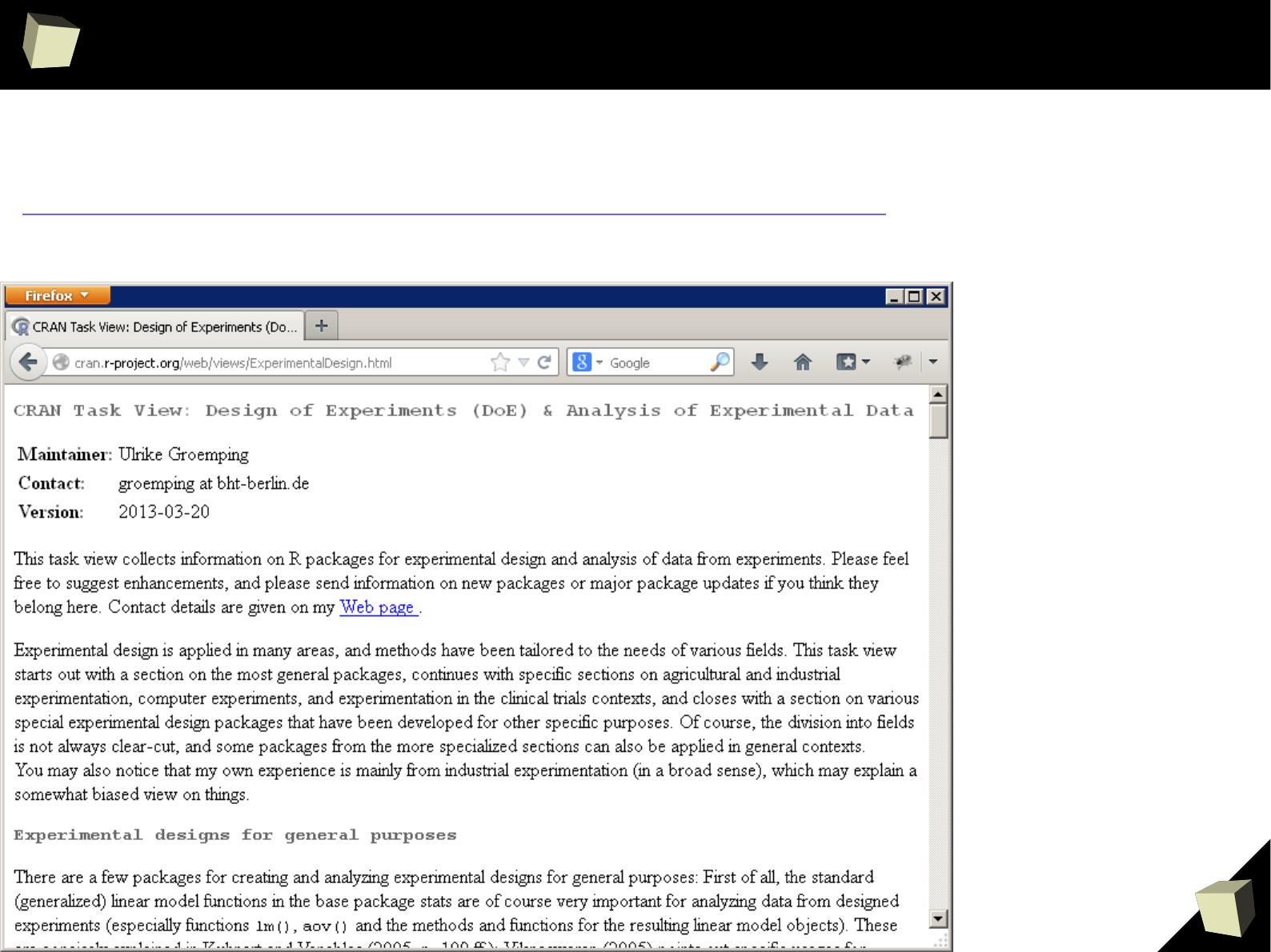

Design of Experiments (DoE) & Analysis of Experimental Data

●

Empirical Finance

●

Statistical Genetics

●

Graphic Displays & Dynamic Graphics & Graphic Devices &

Visualization

●

High-Performance and Parallel Computing with R

●

Machine Learning & Statistical Learning

●

Medical Image Analysis

●

Meta-Analysis

●

Multivariate Statistics

●

Natural Language Processing

●

Numerical Mathematics

●

Official Statistics & Survey Methodology

●

Optimization and Mathematical Programming

●

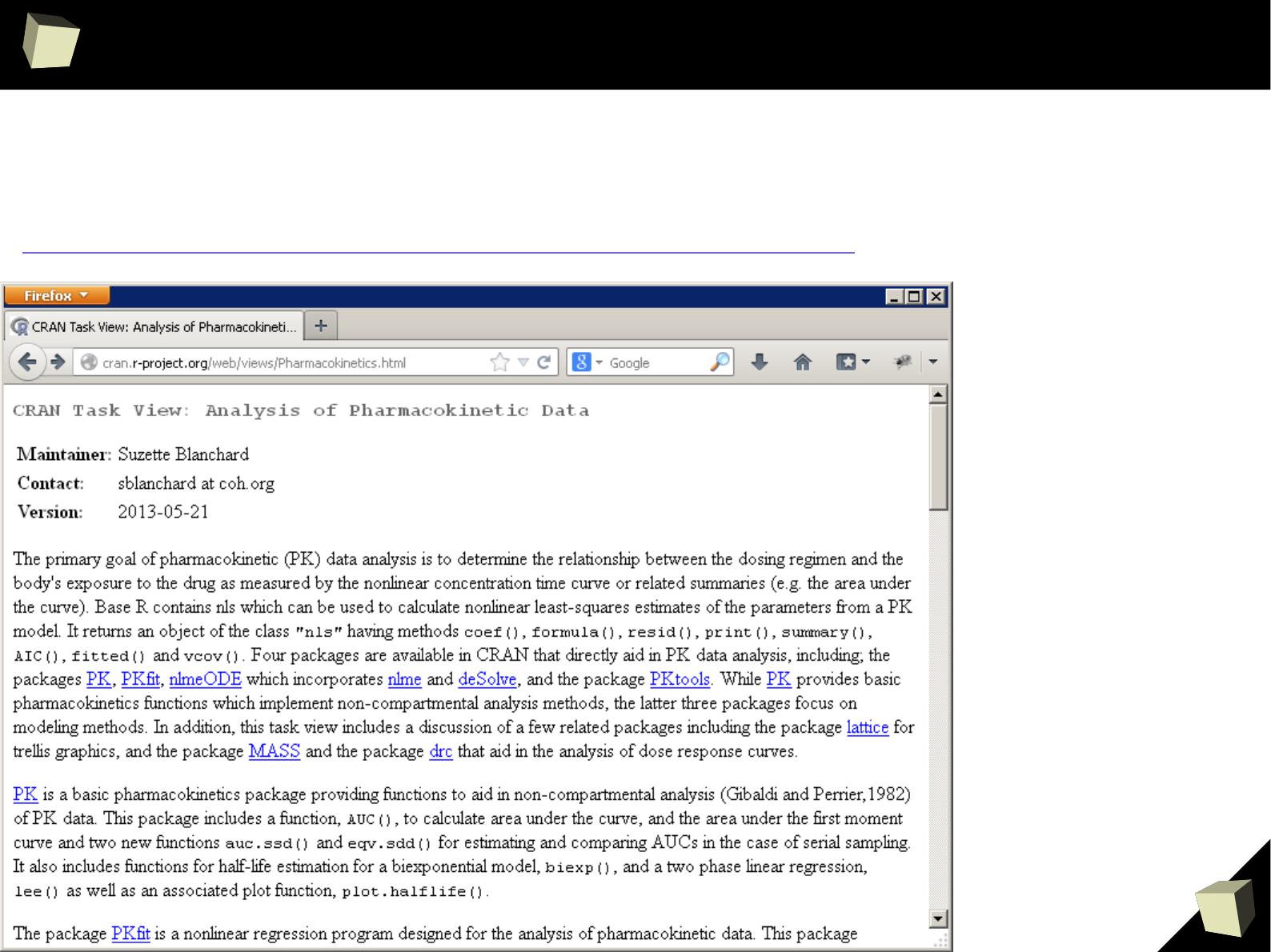

Analysis of Pharmacokinetic Data

●

Phylogenetics, Especially Comparative Methods

●

Psychometric Models and Methods

●

Reproducible Research

●

Robust Statistical Methods

●

Statistics for the Social Sciences

●

Analysis of Spatial Data

●

Handling and Analyzing Spatio-Temporal Data

●

Survival Analysis

●

Time Series Analysis

●

Web Technologies and Services

●

gRaphical Models in R

5

7

Clinical Research

What kind of analyses common in clinical research can be done in R?

●

Descriptive statistics, summaries (demographic, recruitment)

●

Advanced, linear and nonlinear modeling (models of any type)

●

Comparisons of treatments

●

PK / PD analysis

●

Analysis of bio-equivalence, non-inferiority, superiority

●

Design of experiments

●

Time-to-event analysis (survival analysis)

●

Analysis of data from longitudinal trials

●

Sample size determination and power analysis

●

Meta-analysis

●

Bayesian analyzes

●

Analysis of Adverse Events

●

Analysis of DNA micro-arrays

●

ROC curves

5

8

Clinical Research

http://cran.r-project.org/web/views/ClinicalTrials.html

●

adaptTest

●

AGSDest

●

asd

●

asypow

●

bcrm

●

bifactorial

●

blockrand

●

clinfun

●

clinsig

●

coin

●

conf.design

●

copas

●

CRM

●

CRTSize

●

dfcrm

●

DoseFinding

●

epibasix

●

epicalc

●

experiment

●

FrF2

●

GroupSeq

●

gsDesign

●

HH

●

Hmisc

●

ldbounds

●

longpower

●

MChtest

●

MCPMod

●

meta

●

metafor

●

metaLik

●

multcomp

●

nppbib

●

PIPS

●

PowerTOST

●

pwr

●

PwrGSD

●

qtlDesign

●

rmeta

●

samplesize

●

seqmon

●

speff2trial

●

ssanv

●

survival

●

tdm

●

TEQR

●

TrialSize

CRAN packages:

http://cran.r-project.org/web/views/Survival.html

http://cran.r-project.org/web/views/ExperimentalDesign.html

http://cran.r-project.org/web/views/Pharmacokinetics.html

5

9

Clinical Research

Package: TrialSize

“More than 80 functions in this package are widely used to

calculate sample size in clinical trial research studies.

This package covers the functions in Chapter

3,4,6,7,9,10,11,12,14,15 of the reference book”

AB.withDescalation

AB.withoutDescalation

ABE

ANOVA.Repeat.Measure

Carry.Over

Cochran.Armitage/Trend

Cox.Equality

Cox.Equivalence

Cox.NIS

CrossOver.ISV.Equality

CrossOver.ISV.Equivalence

CrossOver.ISV.NIS

Dose.MinE.ffect

Dose.Response.binary

Dose.Response.Linear

Dose.Response.time.to.event

gof.Pearson

gof.Pearson.twoway

IBE

InterSV.Equality

InterSV.NIS

ISCV.Equality

ISCV.Equivalence

ISCV.NIS

ISV.Equality

ISV.Equivalence

ISV.NIS

McNemar.Test

MeanWilliamsDesign.Equality

MeanWilliamsDesign.Equivalence

MeanWilliamsDesign.NIS

Multiple.Testing

Nonpara.Independ

Nonpara.One.Sample

Nonpara.Two.Sample

OneSampleMean.Equality

OneSampleMean.Equivalence

OneSampleMean.NIS

OneSampleProportion.Equality

OneSampleProportion.Equivalence

OneSampleProportion.NIS

OneSide.fixEffect

OneSide.varyEffect

OneWayANOVA.pairwise

OneWayANOVA.PairwiseComparison

PBE

Propensity.Score.nostrata

Propensity.Score.strata

QOL

QT.crossover

QT.parallel

QT.PK.crossover

QT.PK.parallel

RelativeRisk.Equality

RelativeRisk.Equivalence

RelativeRisk.NIS

RelativeRiskCrossOver.Equality

RelativeRiskCrossOver.Equivalence

RelativeRiskCrossOver.NIS

Sensitivity.Index

Stuart.Maxwell.Test

TwoSampleCrossOver.Equality

TwoSampleCrossOver.Equivalence

TwoSampleCrossOver.NIS

TwoSampleMean.Equality

TwoSampleMean.Equivalence

TwoSampleMean.NIS

TwoSampleProportion.Equality

TwoSampleProportion.Equivalence

TwoSampleProportion.NIS

TwoSampleSeqCrossOver.Equality

TwoSampleSeqCrossOver.Equivalence

TwoSampleSeqCrossOver.NIS

TwoSampleSurvival.Conditional

TwoSampleSurvival.Equality

TwoSampleSurvival.Equivalence

TwoSampleSurvival.NIS

TwoSide.fixEffect

TwoSide.varyEffect

Vaccine.CEM

Vaccine.ELDI

Vaccine.RDI

Vitro.BE

WilliamsDesign.Equality

WilliamsDesign.Equivalence

WilliamsDesign.NIS

6

0

Clinical Research

6

1

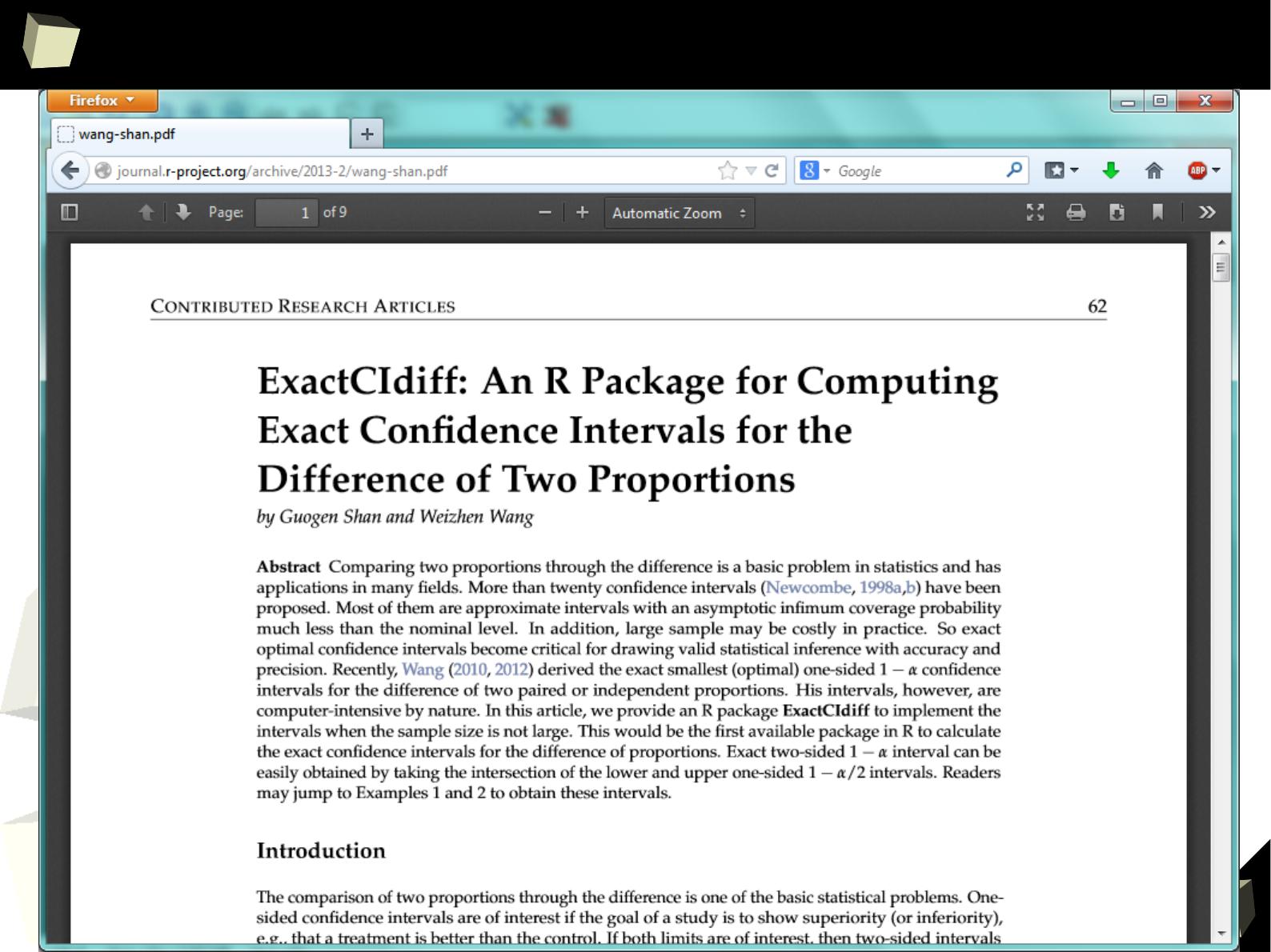

ROC I

6

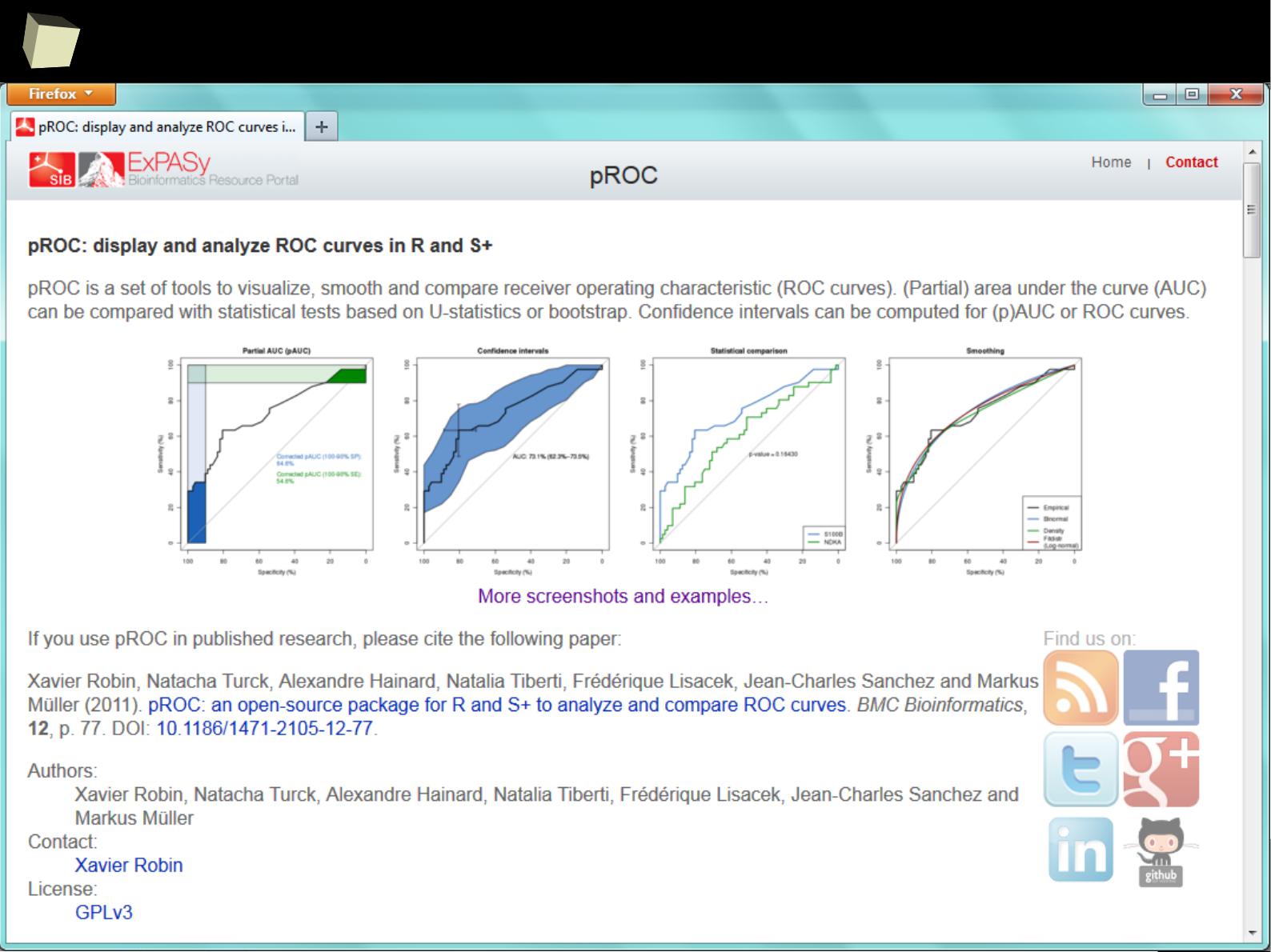

2

ROC II

6

3

Clinical Research

●

AdapEnetClass

●

AER

●

ahaz

●

AIM

●

asbio

●

aster

●

aster2

●

BaSTA

●

bayesSurv

●

BayHaz

●

BGPhazard

●

Biograph

●

BMA

●

boot

●

bpcp

●

censReg

●

changeLOS

●

clinfun

●

cmprsk

●

coarseDataTools

●

coin

●

compeir

●

complex.surv.dat.

sim

●

compound.Cox

●

concreg

●

condGEE

●

CoxBoost

●

coxme

●

coxphf

●

coxphw

●

CoxRidge

●

coxrobust

●

CPE

●

CPHshape

●

CR

●

crrSC

●

crrstep

●

currentSurvival

●

DAAG

●

dblcens

●

DPpackage

●

DTDA

●

dynpred

●

dynsurv

●

eha

●

emplik

●

Epi

●

epiR

●

etm

●

exactRankTests

●

fastcox

●

FHtest

●

fitdistrplus

●

flexsurv

●

frailtyHL

●

frailtypack

●

gamlss.cens

●

gbm

●

gems

●

genSurv

●

glmpath

●

globalboosttest

●

glrt

●

gof

●

gss

●

ICE

●

intcox

●

interval

●

ipdmeta

CRAN packages:

●

ipred

●

ipw

●

jackknifeKME

●

JM

●

JMbayes

●

jmec

●

JMLSD

●

joineR

●

JPSurv

●

kaps

●

km.ci

●

kmc

●

kmconfband

●

kmi

●

KMsurv

●

lava.tobit

●

lbiassurv

●

LearnBayes

●

lmec

●

locfit

●

logconcens

●

LogicReg

●

LogrankA

●

logspline

●

lpc

●

lss

●

MAMSE

●

maxstat

●

mboost

●

MCMCglmm

●

MCMCpack

●

Mets

●

mfp

●

mixAK

●

mixPHM

●

MLEcens

●

MRsurv

●

msm

●

msSurv

●

mstate

●

muhaz

●

multcomp

●

mvna

●

mvpart

●

NADA

●

NestedCohort

●

NPHMC

●

NPMLEcmprsk

●

OIsurv

●

OrdFacReg

●

OutlierDC

●

p3state.msm

●

pamr

●

parfm

●

party

●

pec

●

penalized

●

peperr

●

PermAlgo

●

phmm

●

plsRcox

●

polspline

●

powerSurvEpi

●

prodlim

●

psbcGroup

●

pseudo

●

quantreg

●

randomForestSRC

●

RandomSurvivalForest

●

rankhazard

●

relsurv

●

rhosp

●

riskRegression

●

risksetROC

●

rms

●

RobustAFT

●

ROCt

●

rpart

●

rsig

●

saws

●

SemiCompRisks

●

SemiMarkov

●

SGL

●

simexaft

●

simMSM

●

simPH

●

SMIR

●

SmoothHazard

●

smoothHR

●

smoothSurv

●

SMPracticals

●

spatstat

●

spatsurv

●

superpc

●

surv2sampleComp

●

survAUC

●

survBayes

●

survC1

●

survexp.fr

●

survey

●

Survgini

●

survIDINRI

●

survival

●

survivalBIV

●

survivalROC

●

survJamda

●

survMisc

●

survPresmooth

●

survrec

●

SurvRegCensCov

●

survsim

●

survSNP

●

SvyNom

●

TBSSurvival

●

TestSurvRec

●

timereg

●

timeROC

●

tlmec

●

TPmsm

●

tpr

●

TraMineR

●

TSHRC

●

uniCox

●

VGAM

●

wtcrsk

●

YPmodel

http://cran.r-project.org/web/views/ClinicalTrials.html

http://cran.r-project.org/web/views/ExperimentalDesign.html

http://cran.r-project.org/web/views/Pharmacokinetics.html

http://cran.r-project.org/web/views/Survival.html

6

4

Clinical Research

●

agricolae

●

AlgDesign

●

asd

●

BatchExperiments

●

BHH2

●

blockTools

●

BsMD

●

conf.design

●

crossdes

●

dae

●

desirability

●

DiceDesign

●

DiceEval

●

DiceKriging

●

DiceView

●

displayHTS

●

DoE.base

●

DoE.wrapper

●

DoseFinding

●

dynaTree

●

experiment

●

FrF2

●

FrF2.catlg128

●

GAD

●

granova

●

gsbDesign

●

gsDesign

●

ldDesign

●

lhs

●

mixexp

●

mkssd

●

mxkssd

●

odprism

●

osDesign

●

planor

●

plgp

●

qtlDesign

●

qualityTools

●

RcmdrPlugin.DoE

●

rsm

●

SensoMineR

●

support.CEs

●

TEQR

●

tgp

●

Vdgraph

CRAN packages:

http://cran.r-project.org/web/views/ClinicalTrials.html

http://cran.r-project.org/web/views/Pharmacokinetics.html

http://cran.r-project.org/web/views/Survival.html

http://cran.r-project.org/web/views/ExperimentalDesign.html

6

5

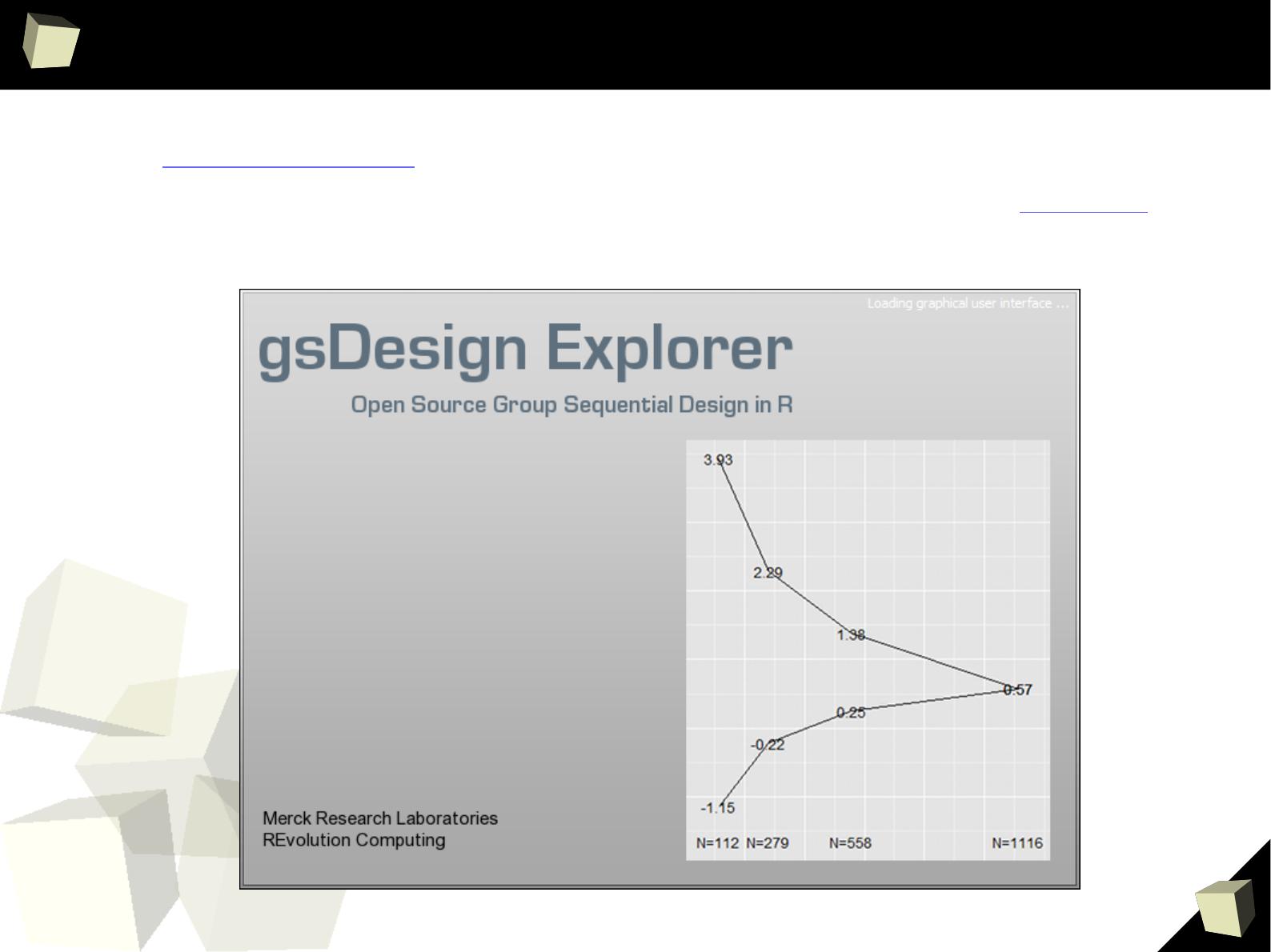

let's

gs

Design it!

Some R packages are real gems! Meet gsDesign, a package for deriving and

describing group sequential designs, created by Keaven Anderson (Merck) and

REVOlution Computing to optimize Merck's clinical trial process...

http://www.amstat.org/sections/sbiop/webinars/2011/AndersonWebinar2-23-2011.pdf

6

7

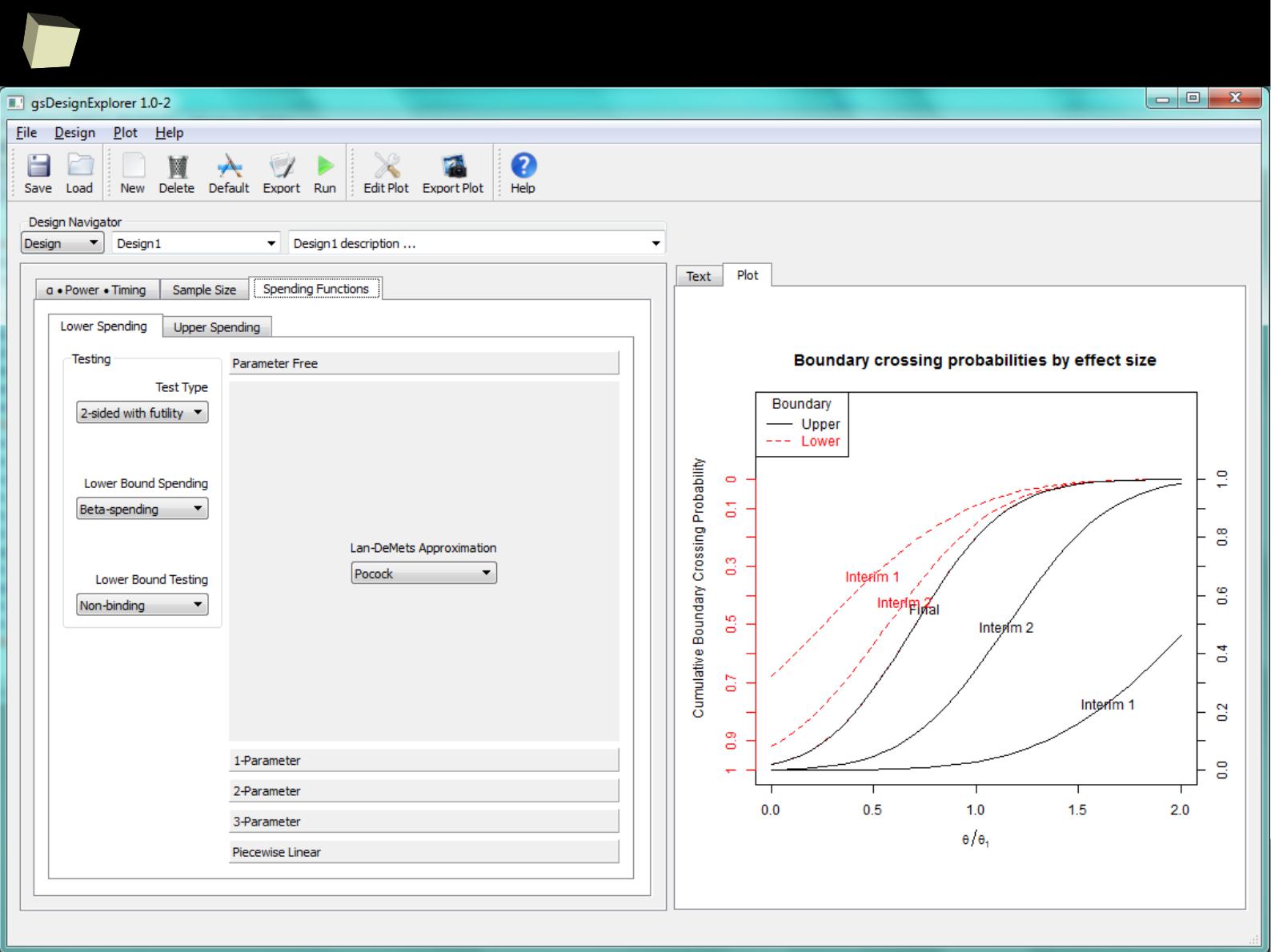

gsDesignExplorer

6

8

Clinical Research

http://cran.r-project.org/web/views/ClinicalTrials.html

http://cran.r-project.org/web/views/ExperimentalDesign.html

http://cran.r-project.org/web/views/Survival.html

http://cran.r-project.org/web/views/Pharmacokinetics.html

●

deSolve

●

drc

●

lattice

●

MASS

●

nlme

●

nlmeODE

●

PK

●

PKfit

●

PKPDmodels

●

PKtools

CRAN packages:

7

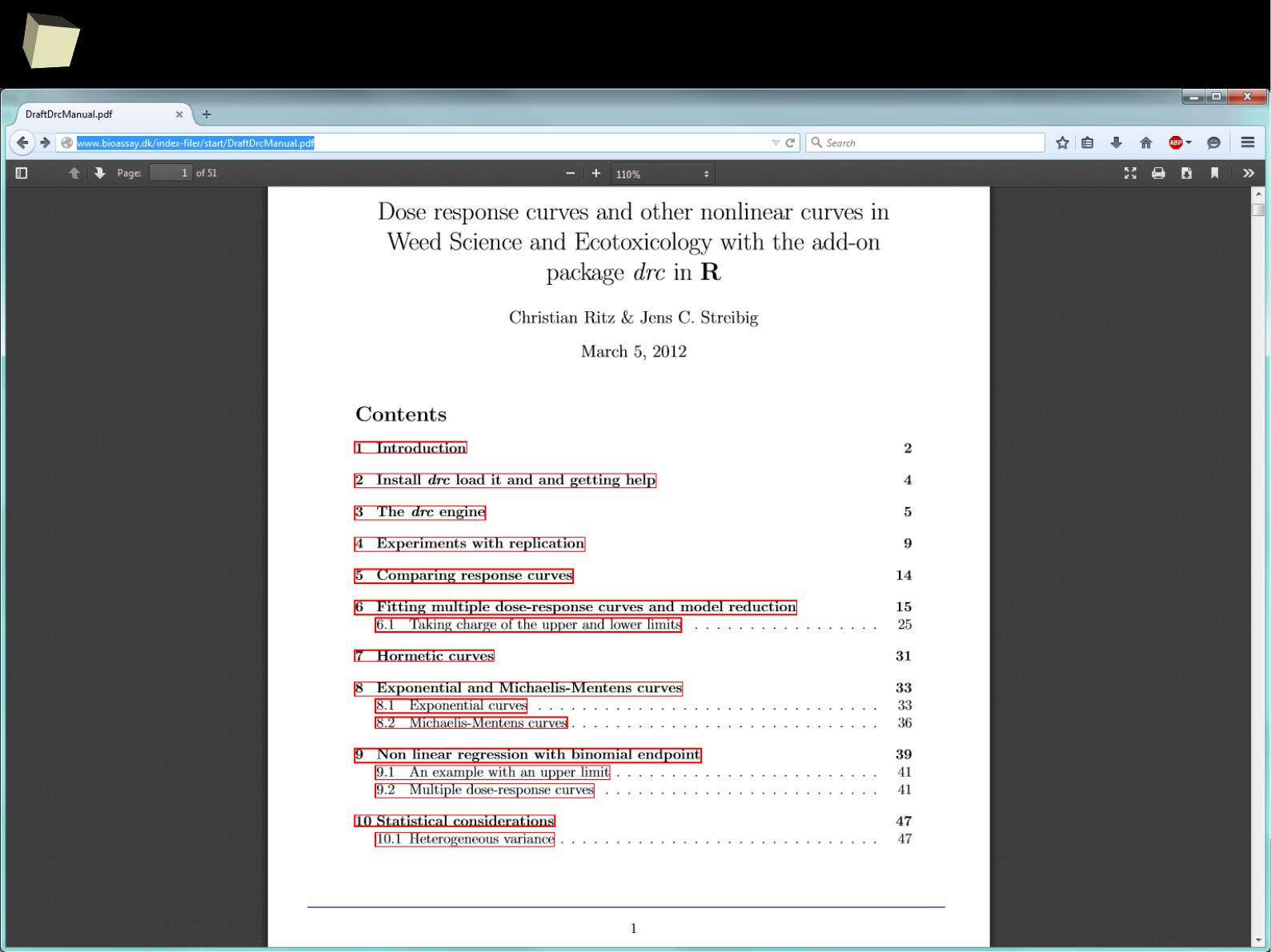

0

Analysis of dose-Response curves

●

actimL Model function for the universal response surface approach (URSA) for the

quantitative assessment of drug interaction

●

anova.drc ANOVA for dose-response model fits

●

AR.2 Asymptotic regression model

●

AR.3 Asymptotic regression model

●

baro5 The modified baro5 function

●

BC.4 The Brain-Cousens hormesis models

●

BC.5 The Brain-Cousens hormesis models

●

bcl3 The Brain-Cousens hormesis models

●

bcl4 The Brain-Cousens hormesis models

●

boxcox.drc Transform-both-sides Box-Cox transformation

●

braincousens The Brain-Cousens hormesis models

●

cedergreen The Cedergreen-Ritz-Streibig model

●

coef.drc Extract Model Coefficients

●

comped Comparison of effective dose values

●

compParm Comparison of parameters

●

confint.drc Confidence Intervals for model parameters

●

CRS.4a The Cedergreen-Ritz-Streibig model

●

CRS.4b The Cedergreen-Ritz-Streibig model

●

CRS.4c The Cedergreen-Ritz-Streibig model

●

CRS.5a Cedergreen-Ritz-Streibig dose-reponse model for describing hormesis

●

CRS.5b Cedergreen-Ritz-Streibig dose-reponse model for describing hormesis

●

CRS.5c Cedergreen-Ritz-Streibig dose-reponse model for describing hormesis

●

CRS.6 The Cedergreen-Ritz-Streibig model

●

diagnostics Information on estimation

●

drm Fitting dose-response models

●

drmc Sets control arguments

●

ED Estimating effective doses

●

ED.drc Estimating effective doses

●

ED.mrdrc Estimating effective doses

●

estfun.drc Bread and meat for the sandwich

●

EXD.2 Exponential decay model

●

EXD.3 Exponential decay model

●

fitted.drc Extract fitted values from model

●

FPL.4 Fractional polynomial-logistic dose-response models

●

fplogistic Fractional polynomial-logistic dose-response models

●

G.2 Mean function for the Gompertz dose-response or growth curve

●

G.3 Mean function for the Gompertz dose-response or growth curve

●

G.3u Mean function for the Gompertz dose-response or growth curve

●

G.4 Mean function for the Gompertz dose-response or growth curve

●

genBliss Model function for the universal response surface approach (URSA) for the

quantitative assessment of drug interaction

●

genBliss2 Model function for the universal response surface approach (URSA) for the

quantitative assessment of drug interaction

●

genLoewe Model function for the universal response surface approach (URSA) for the

quantitative assessment of drug interaction

●

genLoewe2 Model function for the universal response surface approach (URSA) for the

quantitative assessment of drug interaction

●

genursa Model function for the universal response surface approach (URSA) for the

quantitative assessment of drug interaction

●

getInitial Showing starting values used

●

getMeanFunctions Display available dose-response models

●

gompertz Mean function for the Gompertz dose-response or growth curve

●

gompertzd The derivative of the Gompertz function

●

gompGrowth.1 Gompertz growth models

●

gompGrowth.2 Gompertz growth models

●

gompGrowth.3 Gompertz growth models

●

iceLoewe.1 Model function for the universal response surface approach

(URSA) for the quantitative assessment of drug interaction

●

iceLoewe2.1 Model function for the universal response surface approach

(URSA) for the quantitative assessment of drug interaction

●

isobole Creating isobolograms

●

L.3 The logistic model

●

L.4 The logistic model

●

L.5 The logistic model

●

l2 The two-parameter log-logistic function

●

l3 The three-parameter log-logistic function

●

l3u The three-parameter log-logistic function

●

l4 The four-parameter log-logistic function

●

l5 The five-parameter log-logistic function

●

lin.test Lack-of-fit test for the mean structure based on cumulated

residuals

●

LL.2 The two-parameter log-logistic function

●

LL.3 The three-parameter log-logistic function

●

LL.3u The three-parameter log-logistic function

●

LL.4 The four-parameter log-logistic function

●

LL.5 The five-parameter log-logistic function

●

LL2.2 The two-parameter log-logistic function

●

LL2.3 The three-parameter log-logistic function

●

LL2.3u The three-parameter log-logistic function

●

LL2.4 The four-parameter log-logistic function

●

LL2.5 The five-parameter log-logistic function

●

llogistic The log-logistic function

●

llogistic2 The log-logistic function

●

LN.2 Log-normal dose-response model

●

LN.3 Log-normal dose-response model

●

LN.3u Log-normal dose-response model

●

LN.4 Log-normal dose-response model

●

lnormal Log-normal dose-response model

●

logistic The logistic model

●

logLik.drc Extracting the log likelihood

●

maED Estimation of ED values using model-averaging

●

MAX Maximum mean response

●

mixture Fitting binary mixture models

●

ml3a The Cedergreen-Ritz-Streibig model

●

ml3b The Cedergreen-Ritz-Streibig model

●

ml3c The Cedergreen-Ritz-Streibig model

●

ml4a Cedergreen-Ritz-Streibig dose-reponse model for describing

hormesis

●

ml4b Cedergreen-Ritz-Streibig dose-reponse model for describing

hormesis

●

ml4c Cedergreen-Ritz-Streibig dose-reponse model for describing

hormesis

●

MM.2 Michaelis-Menten model

●

MM.3 Michaelis-Menten model

●

modelFit Assessing the model fit

7

1

Analysis of dose-Response curves [cont'd]

●

mr.test Mizon-Richard test for dose-response models

●

mrdrm Model-robust dose-response modelling

●

mselect Model selection

●

NEC Dose-response model for estimation of no effect concentration (NEC).

●

NEC.2 Dose-response model for estimation of no effect concentration (NEC).

●

NEC.3 Dose-response model for estimation of no effect concentration (NEC).

●

NEC.4 Dose-response model for estimation of no effect concentration (NEC).

●

neill.test Neill's lack-of-fit test for dose-response models

●

plot.drc Plotting fitted curves for a 'drc' or 'mrdrc' object

●

plot.mrdrc Plotting fitted curves for a 'drc' or 'mrdrc' object

●

PR Expected or predicted response

●

predict.drc Prediction

●

predict.mrdrc Prediction

●

print.drc Printing key features

●

print.mrdrc Printing key features

●

print.summary.drc Printing summary of non-linear model fits

●

rdrm Simulating a dose-response curve

●

relpot Comparing selectivity indices across curves

●

residuals.drc Extracting residuals from model

●

ryegrass Effect of ferulic acid on growth of ryegrass

●

S.capricornutum Effect of cadmium on growth of green alga

●

searchdrc Searching through a range of initial parameter values to obtain

convergence

●

secalonic Root length measurements

●

SI Comparing selectivity indices across curves

●

simDR Simulating ED values under various scenarios

●

summary.drc Summarising non-linear model fits

●

twophase Two-phase dose-response model

●

ucedergreen The Cedergreen-Ritz-Streibig model

●

UCRS.4a The Cedergreen-Ritz-Streibig model

●

UCRS.4b The Cedergreen-Ritz-Streibig model

●

UCRS.4c The Cedergreen-Ritz-Streibig model

●

UCRS.5a Cedergreen-Ritz-Streibig dose-reponse model for describing hormesis

●

UCRS.5b Cedergreen-Ritz-Streibig dose-reponse model for describing hormesis

●

UCRS.5c Cedergreen-Ritz-Streibig dose-reponse model for describing hormesis

●

uml3a The Cedergreen-Ritz-Streibig model

●

uml3b The Cedergreen-Ritz-Streibig model

●

uml3c The Cedergreen-Ritz-Streibig model

●

uml4a Cedergreen-Ritz-Streibig dose-reponse model for describing hormesis

●

uml4b Cedergreen-Ritz-Streibig dose-reponse model for describing hormesis

●

uml4c Cedergreen-Ritz-Streibig dose-reponse model for describing hormesis

●

update.drc Updating and re-fitting a model

●

ursa Model function for the universal response surface approach (URSA) for the

quantitative assessment of drug interaction

●

vcov.drc Calculating variance-covariance matrix for objects of class 'drc'

●

W1.2 The two-parameter Weibull functions

●

W1.3 The three-parameter Weibull functions

●

W1.3u The three-parameter Weibull functions

●

W1.4 The four-parameter Weibull functions

●

w2 The two-parameter Weibull functions

●

W2.2 The two-parameter Weibull functions

●

W2.3 The three-parameter Weibull functions

●

W2.3u The three-parameter Weibull functions

●

W2.4 The four-parameter Weibull functions

●

W2x.3 The three-parameter Weibull functions

●

W2x.4 The four-parameter Weibull functions

●

w3 The three-parameter Weibull functions

●

w4 The four-parameter Weibull functions

●

weibull1 Weibull model functions

●

weibull2 Weibull model functions

●

weibull2x Weibull model functions

●

yieldLoss Calculating yield loss parameters

7

4

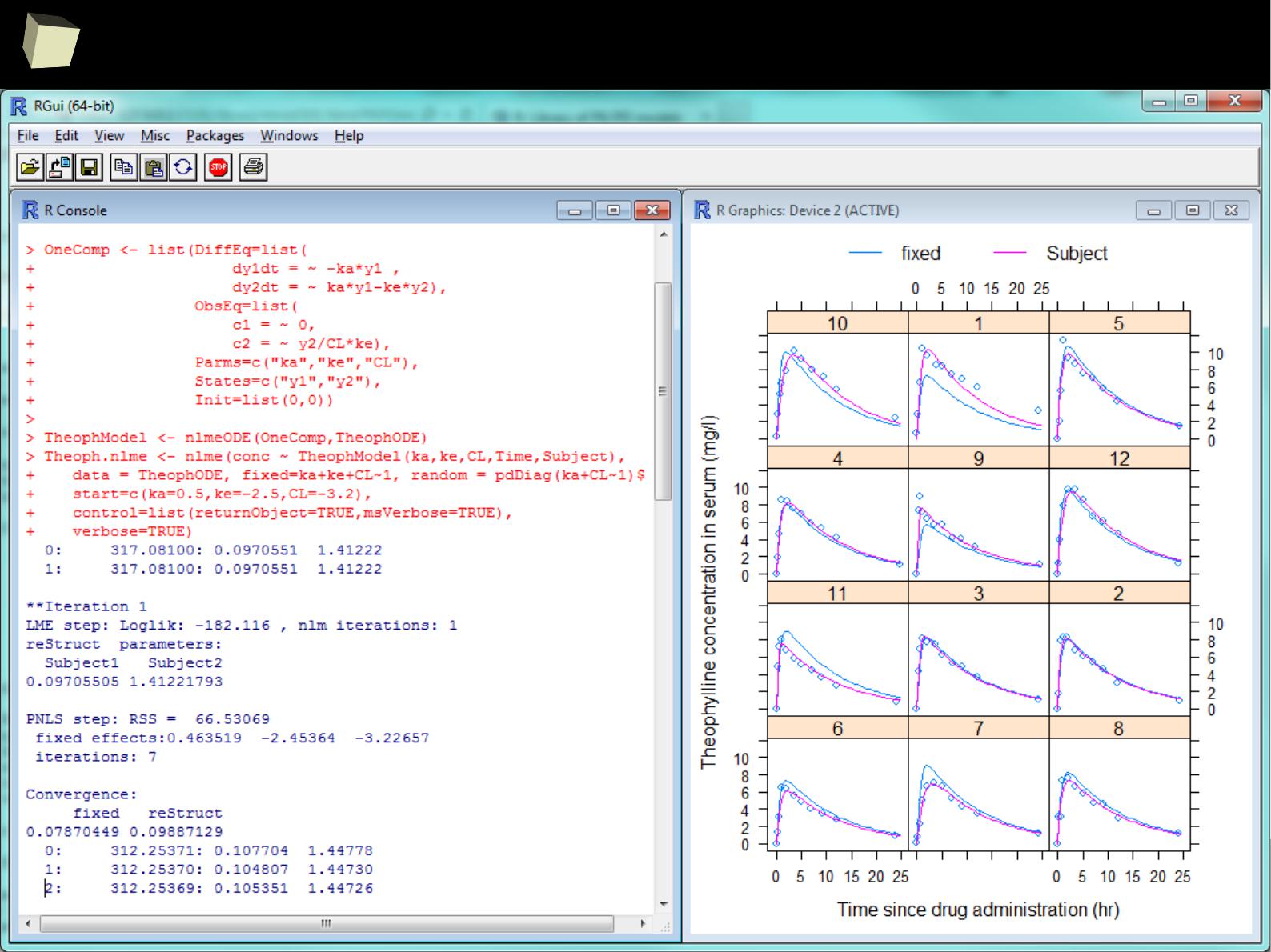

Advanced PK modeling with mixed effects

nlmeODE is a powerful combination of the famous nlme package with deSolve

(an ordinary differential equations solver) for non-linear, mixed-effects

modelling, designed especially for pharmaceutical research.

The package also includes several PK examples such as one- and two-

compartment models with multiple doses and infusions.

●

Pharmacokinetics of Theophylline

●

Pharmacokinetics of Indomethacine

●

Absorption model with estimation of time/rate of infusion

●

Simulation and simultaneous estimation of PK/PD data

●

Minimal Model of Glucose and Insulin

●

Minimal Model of Glucose using observed insulin as forcing function

http://www.inside-r.org/packages/cran/nlmeODE/docs/PKPDmodels

7

5

Advanced PK modeling with mixed effects

7

6

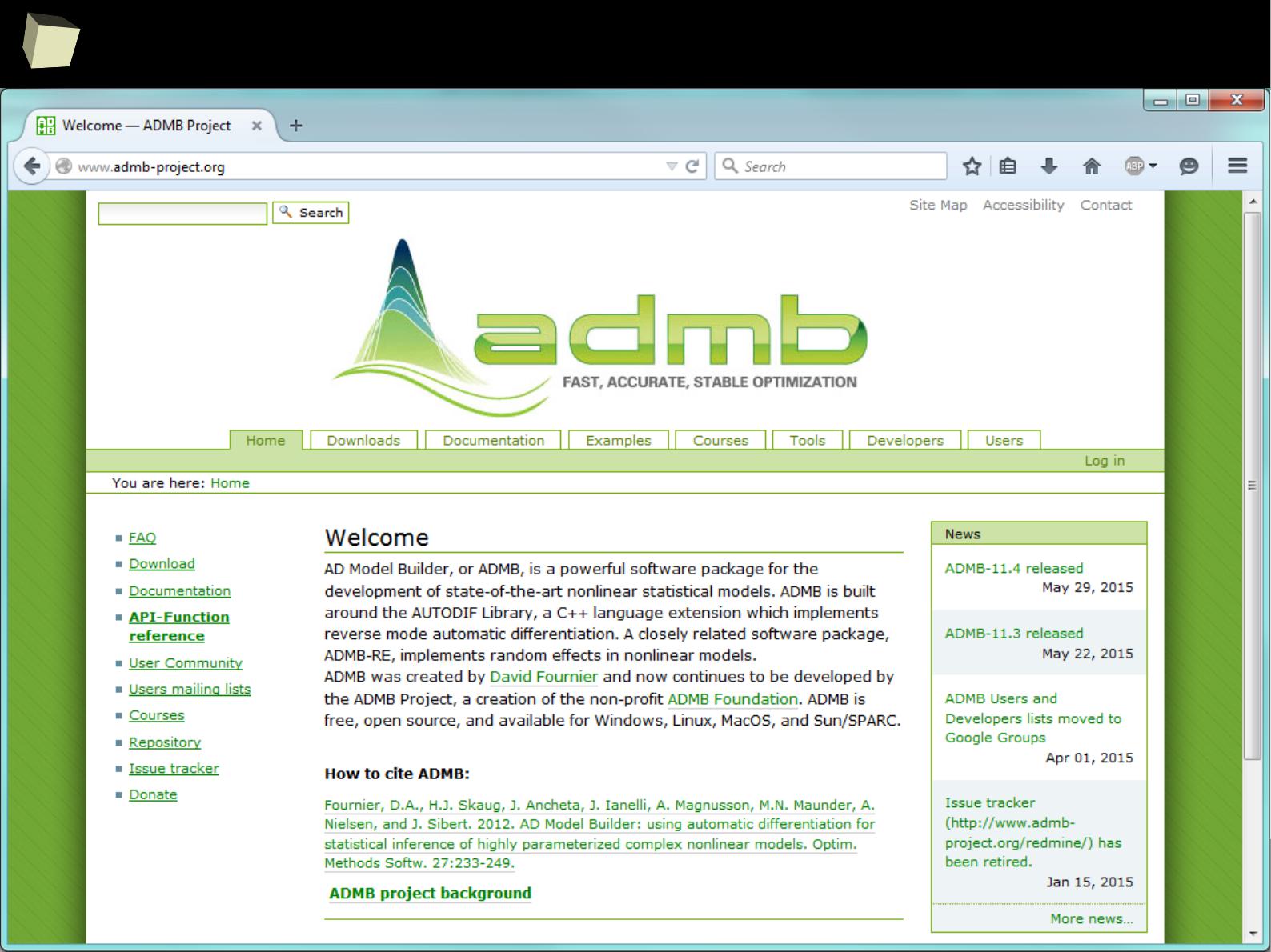

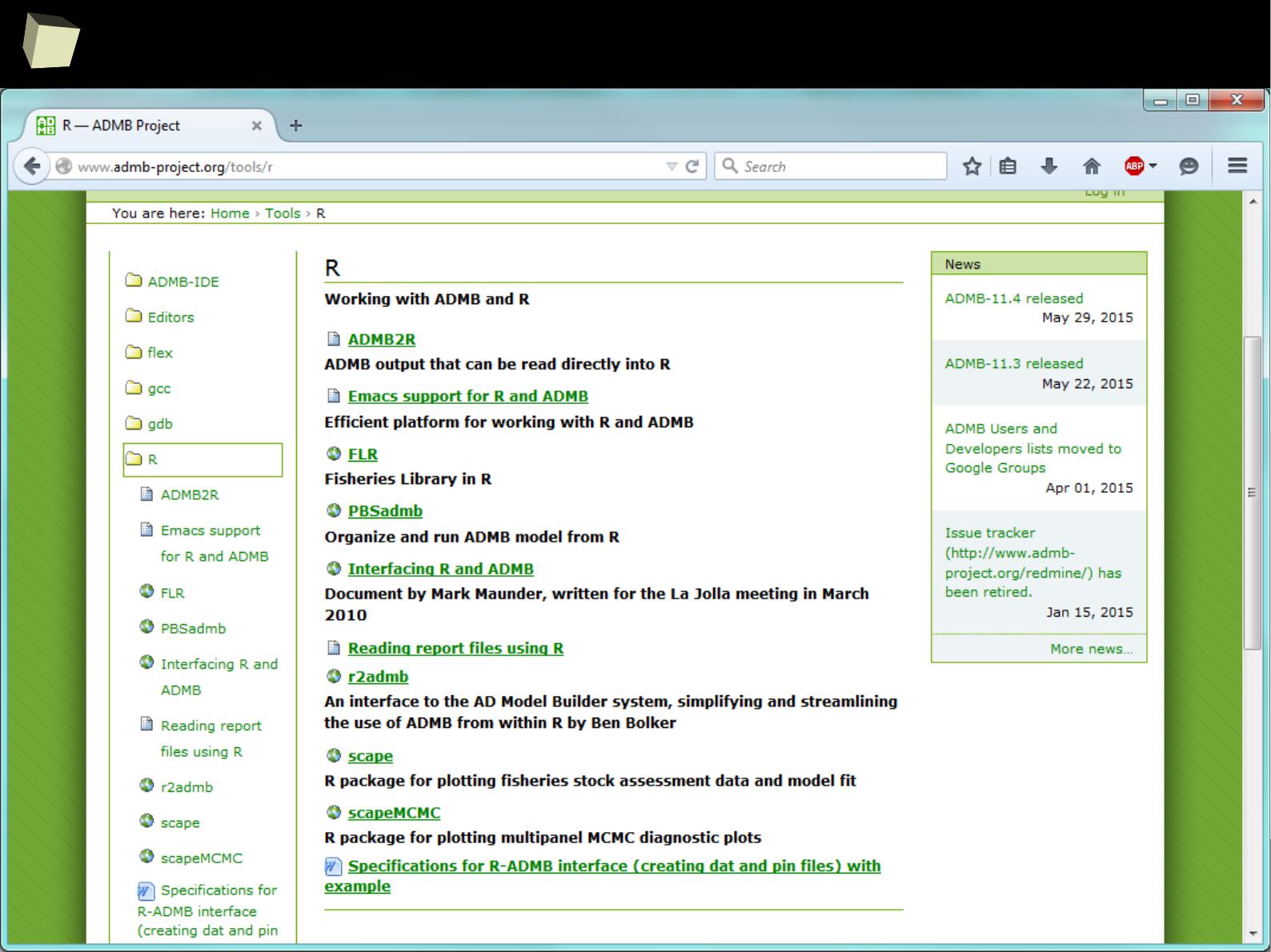

R + ADMB (automatic diffeRentiation!)

7

7

R + ADMB (automatic diffeRentiation!)

8

2

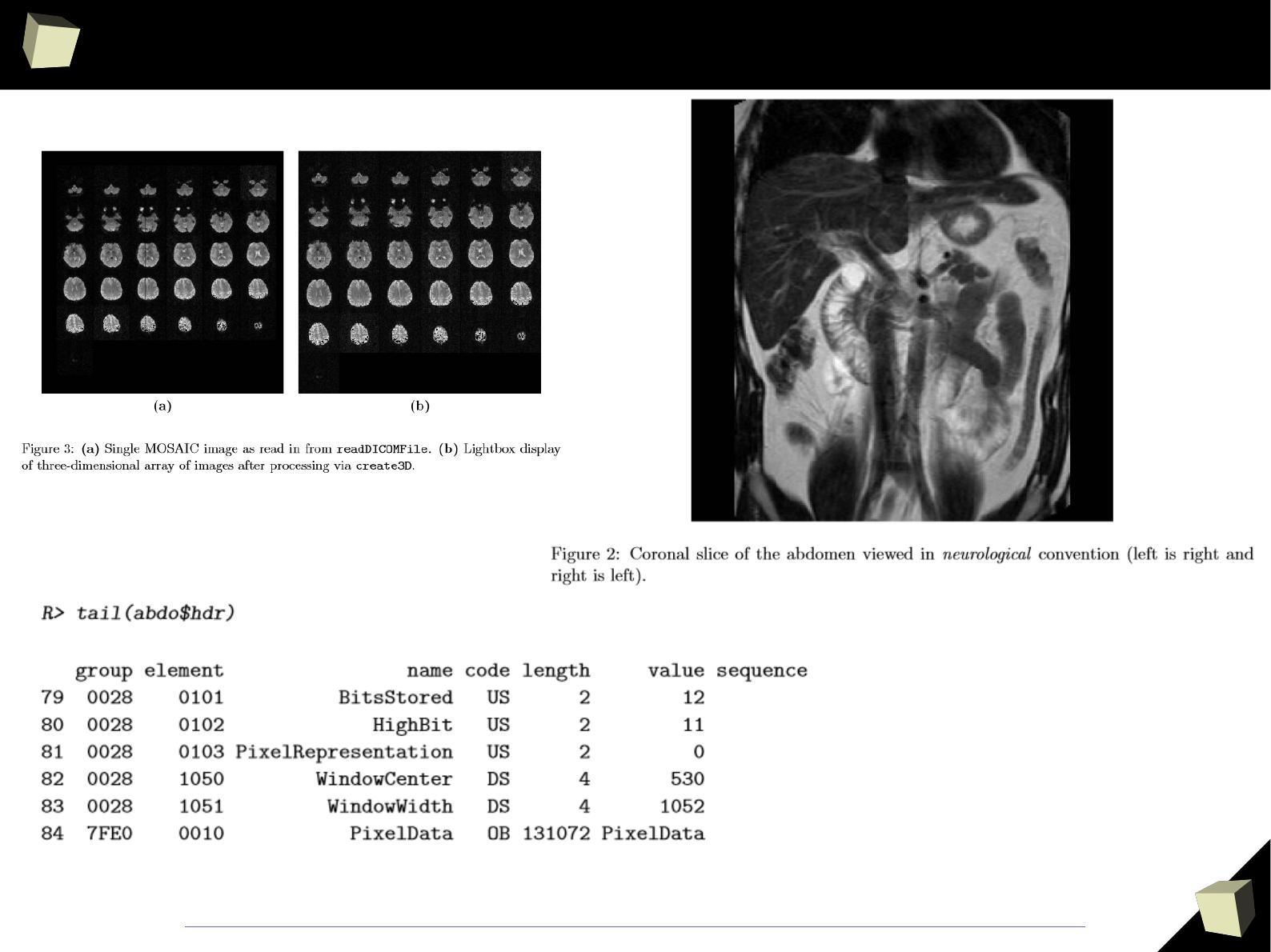

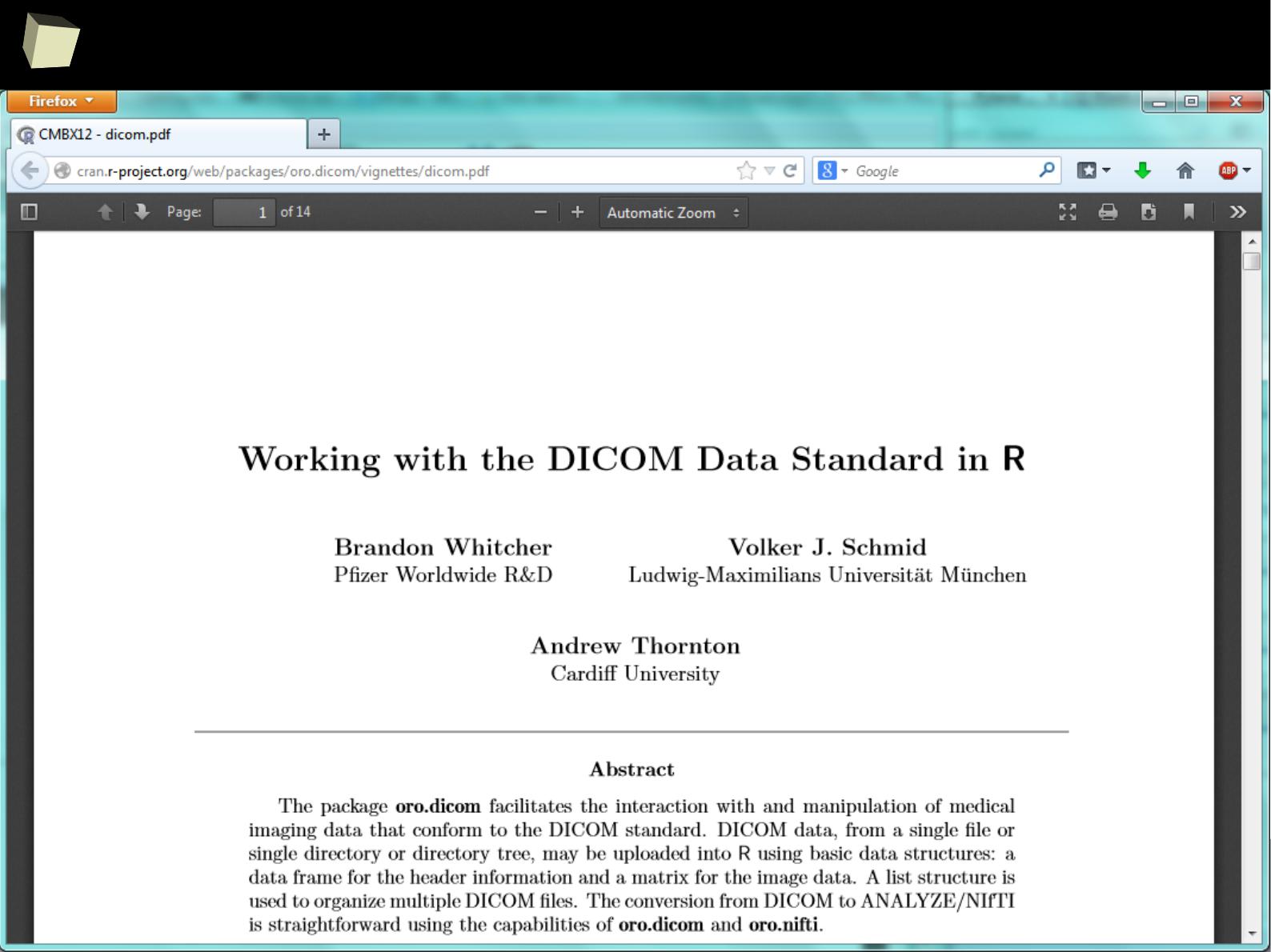

Process DICOM data with oro.dicom

8

4

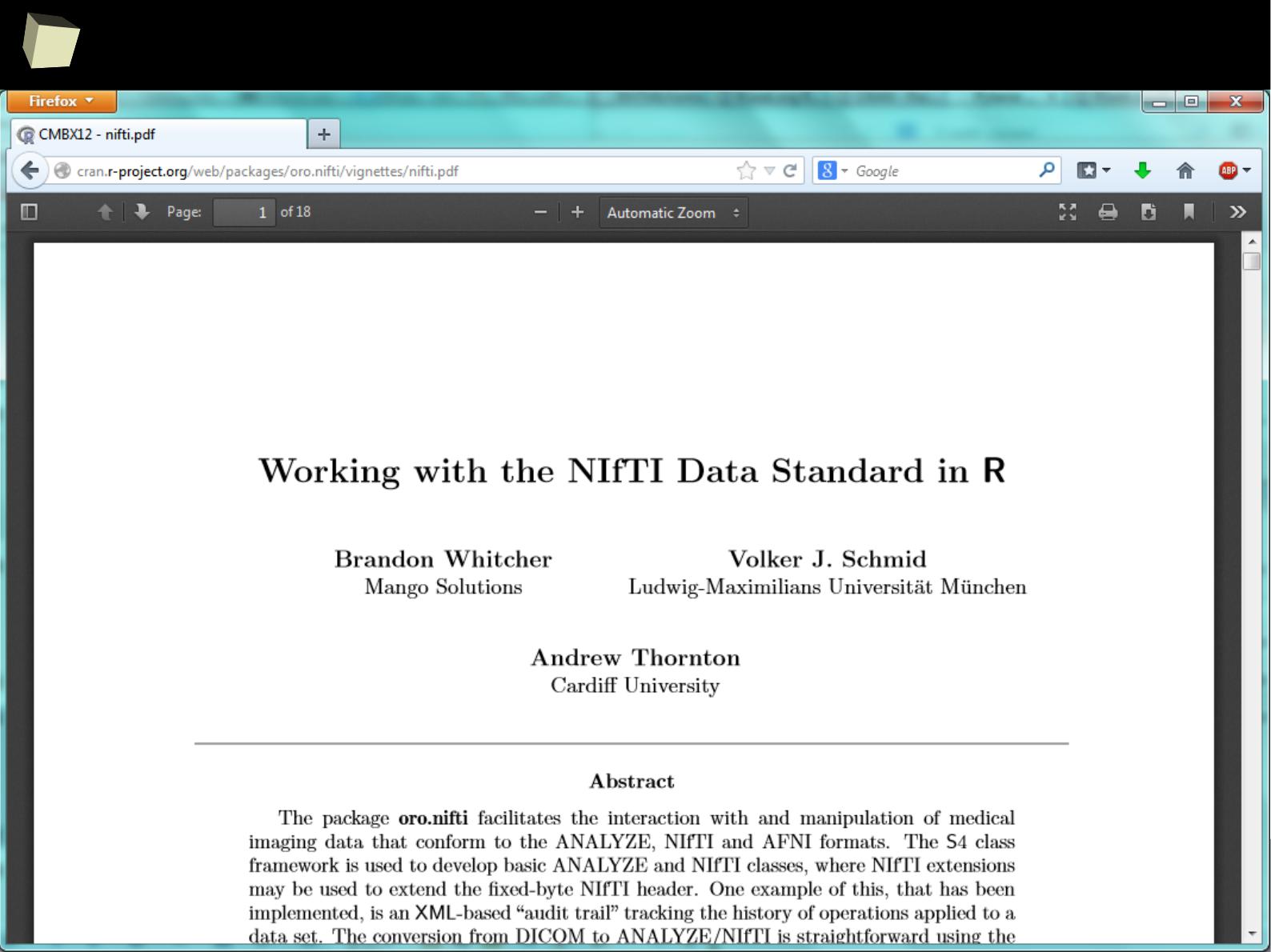

Process NIfTI data with oro.nifti

8

5

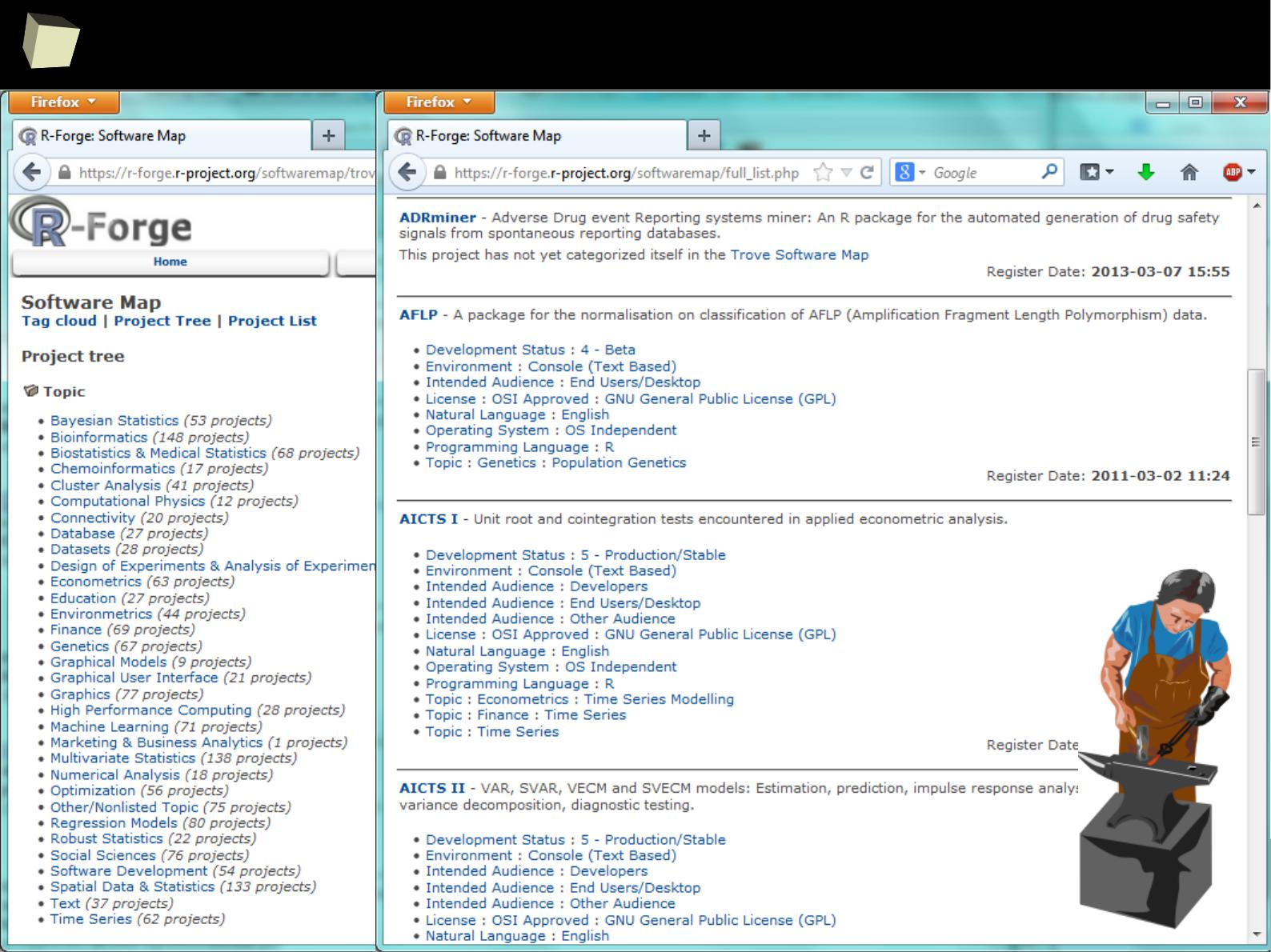

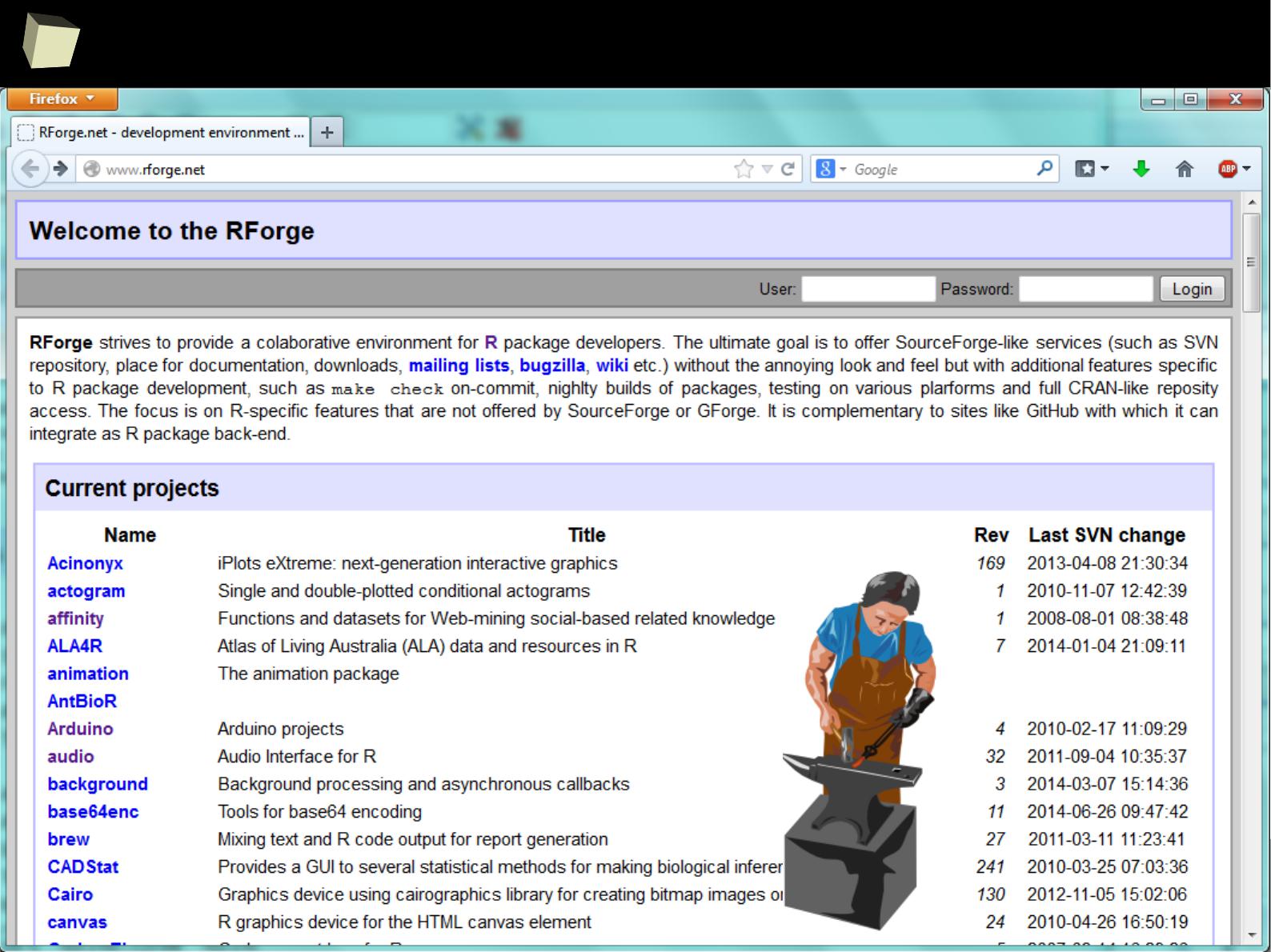

The Origin Of Things - RForge

8

6

The Origin Of Things - RForge

8

7

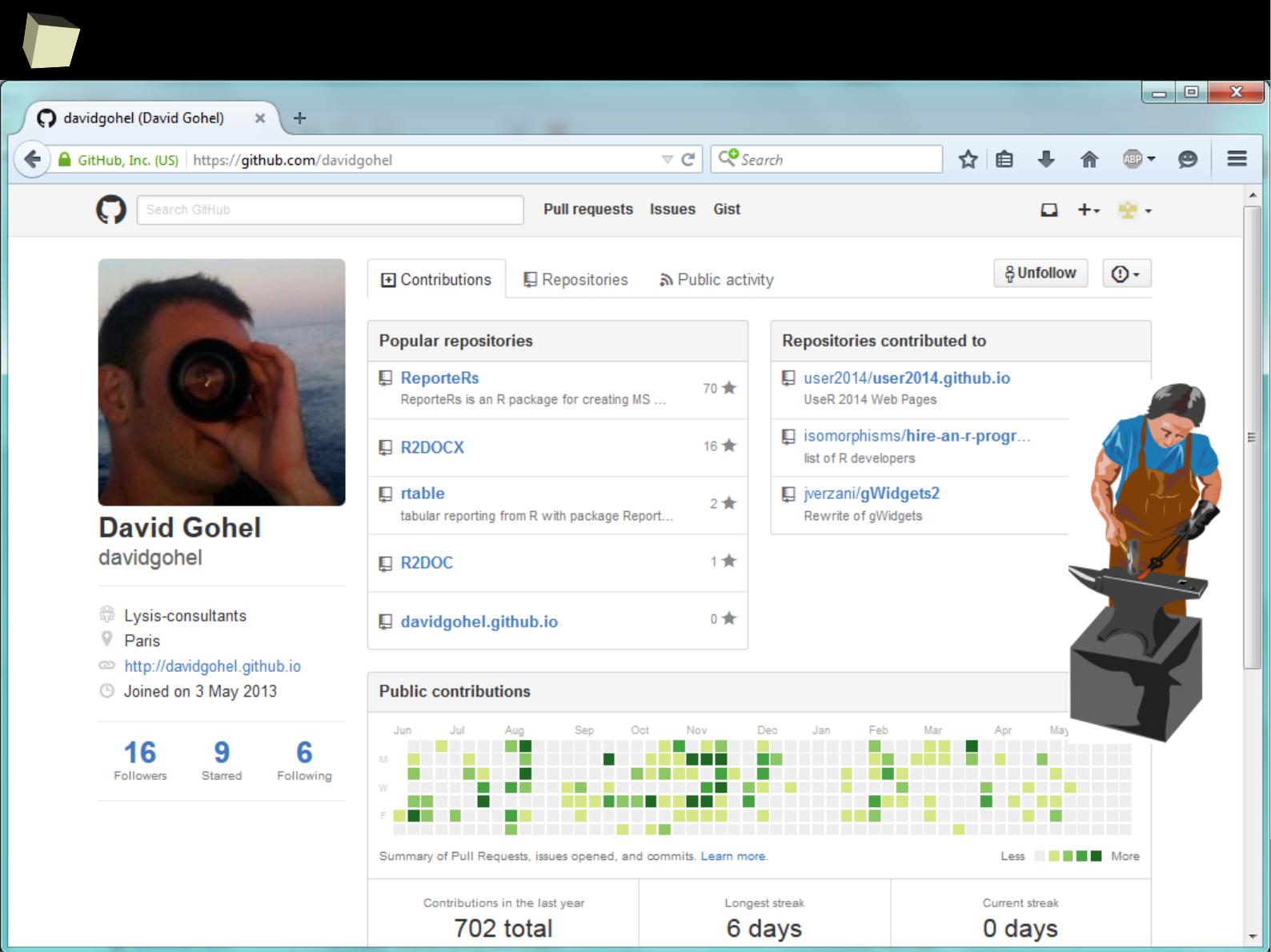

The Origin Of Things - GitHub

8

9

13 reasons why you will love GNU R

I R is (extremely) cheap. In fact - it's free :)

II R has (extremely) wide range of capabilities

II 1/3 :) R is easy to maintain!

IV R is supported by the community

V R is supported by the business

VI R is able to read data in many formats

VII Interoperability is easy to achieve

VIII R is truly cross-platform

IX R offers numerous ways of presenting data

X There are many options to optimize the code

XI R is able to handle large amount of data

XII R has a set of fancy tools and IDEs

XII FDA accepted using R for drug trials!

9

0

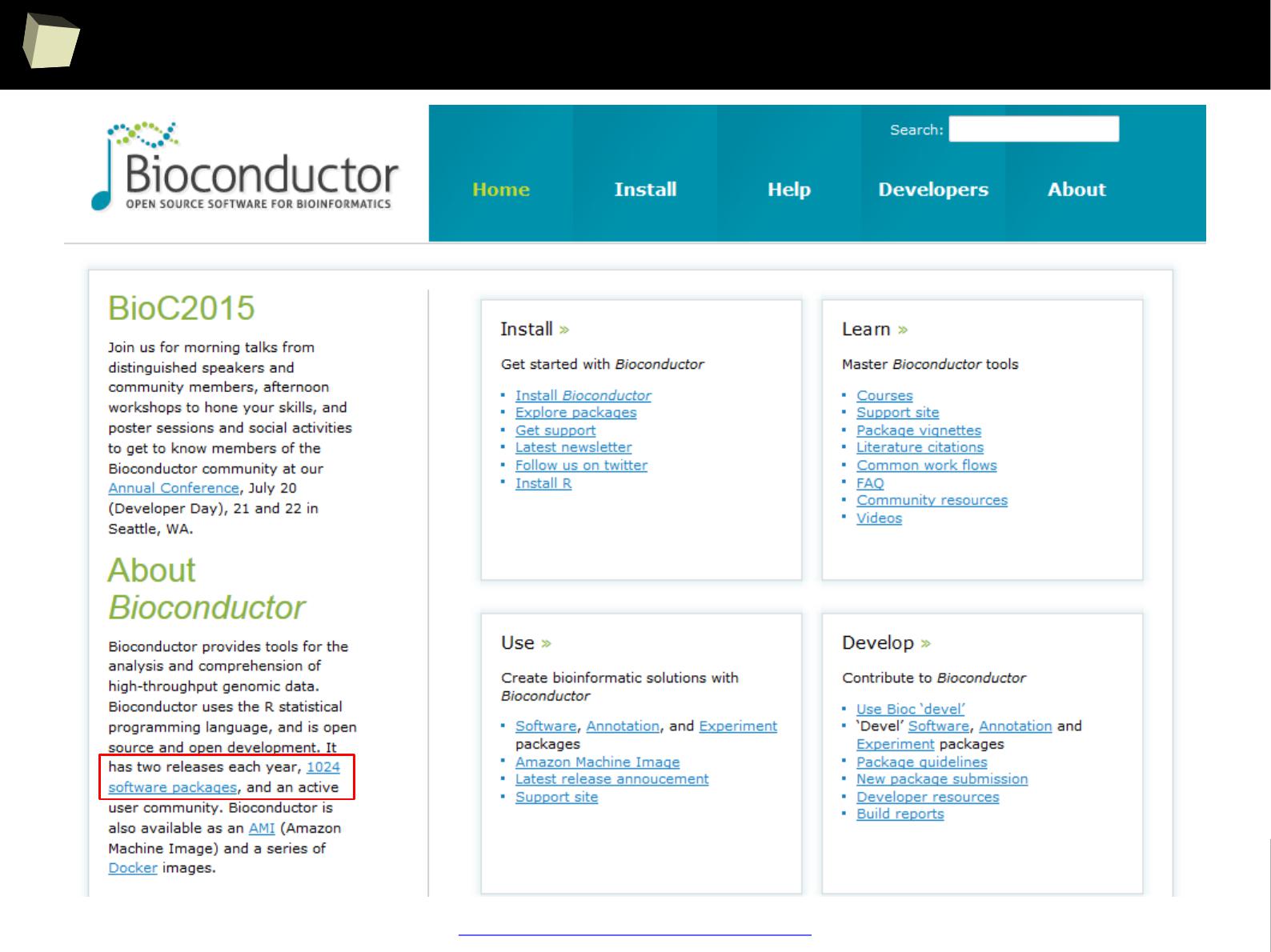

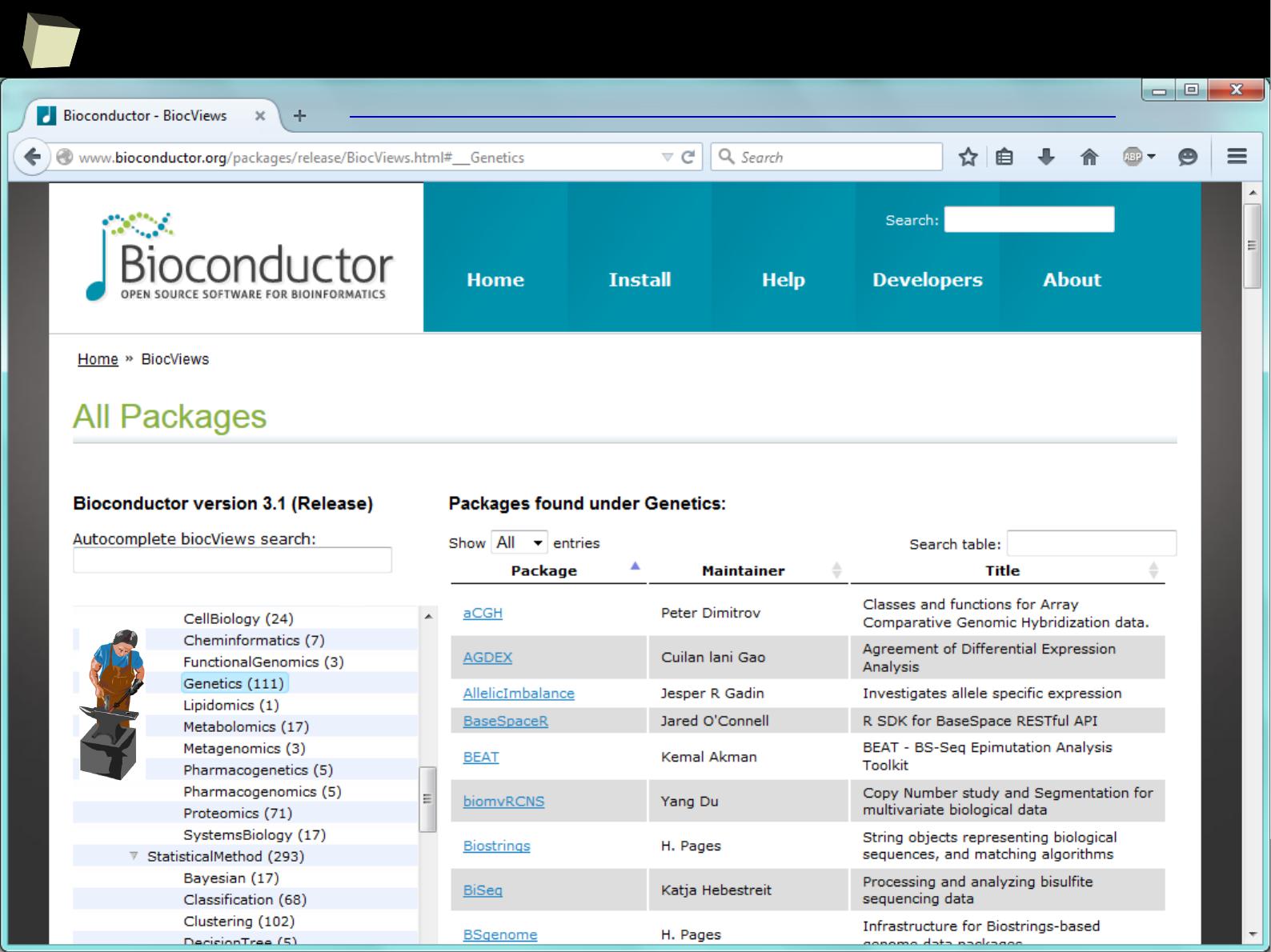

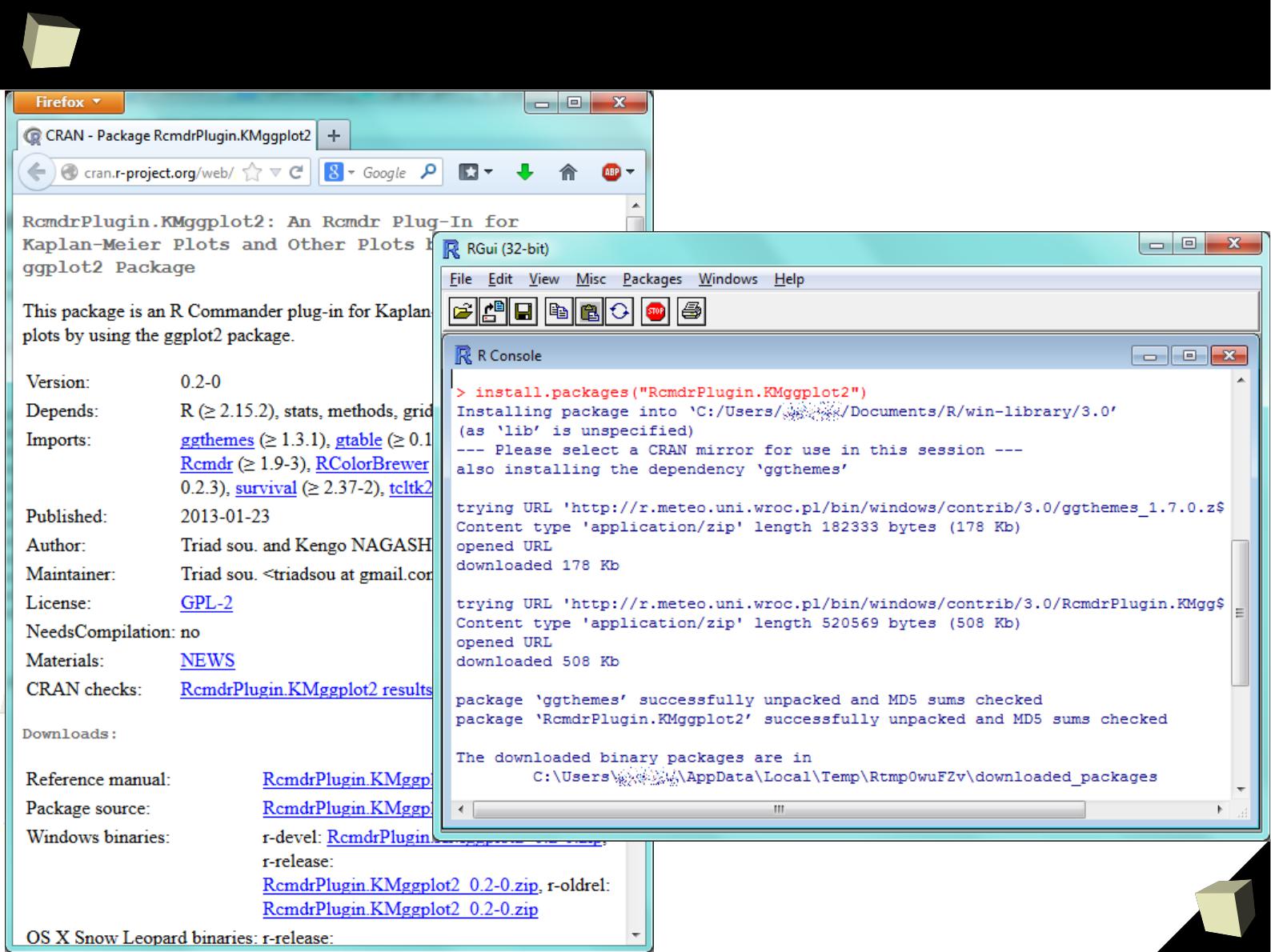

R is easy to maintain!

R is easy to maintain. Managing installed packages and keeping them up-to-

date becomes a piece of cake:

●

Packages are available in CRAN, GitHub, BioConductor and Omegahat

repositories. CRAN Repositories are mirrored in more than 50 countries.

●

Dependencies between packages are resolved automatically.

Just type install.packages(“package_name”) in the console and R will

download necessary packages, validate

MD5

and install them.

●

Packages in a binary form can be installed from local .zip archives

●

Packages can be also built from sources (common approach on Linux)

●

Stay up-to-date with update.packages(). This could not be easier.

●

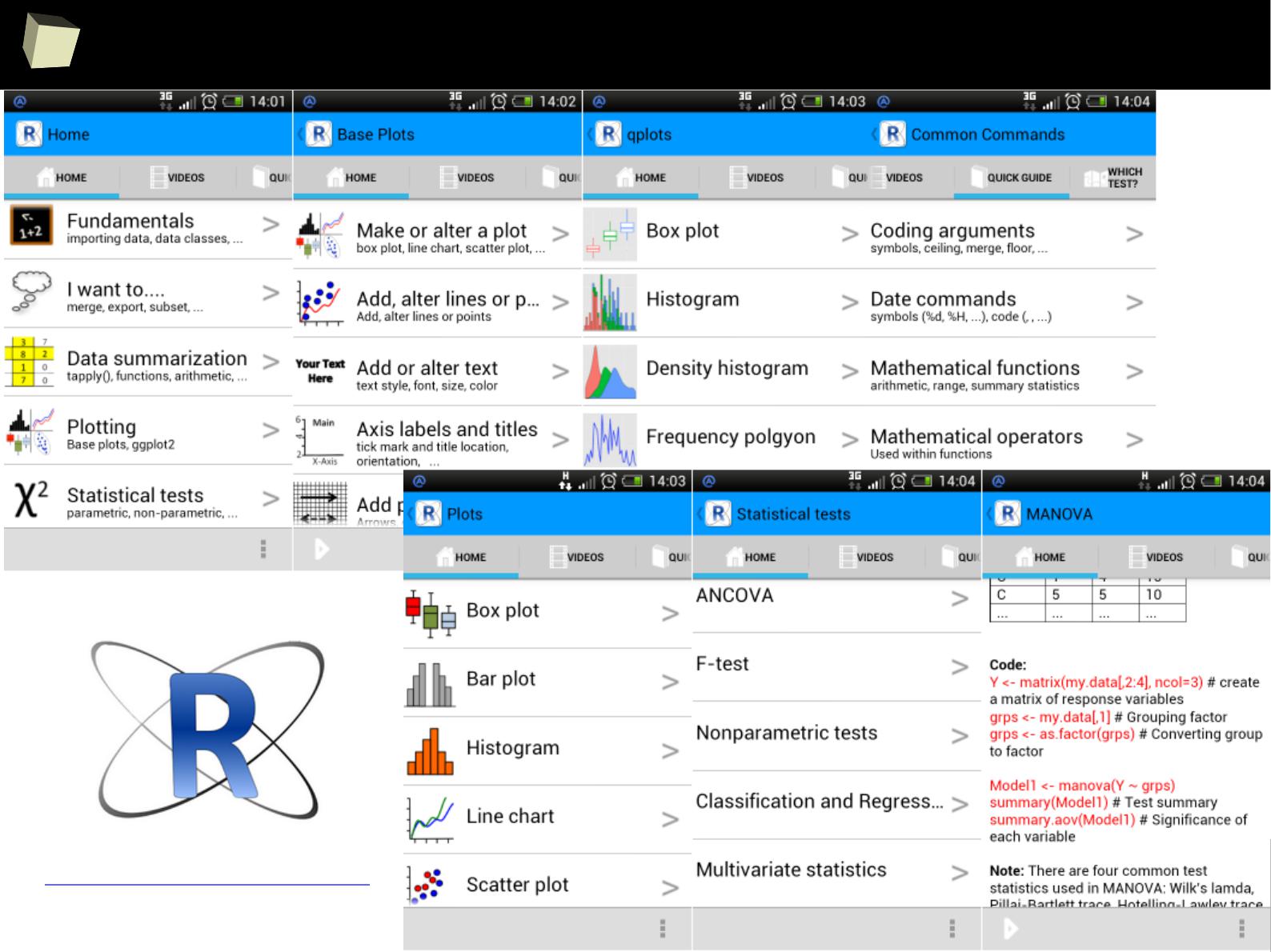

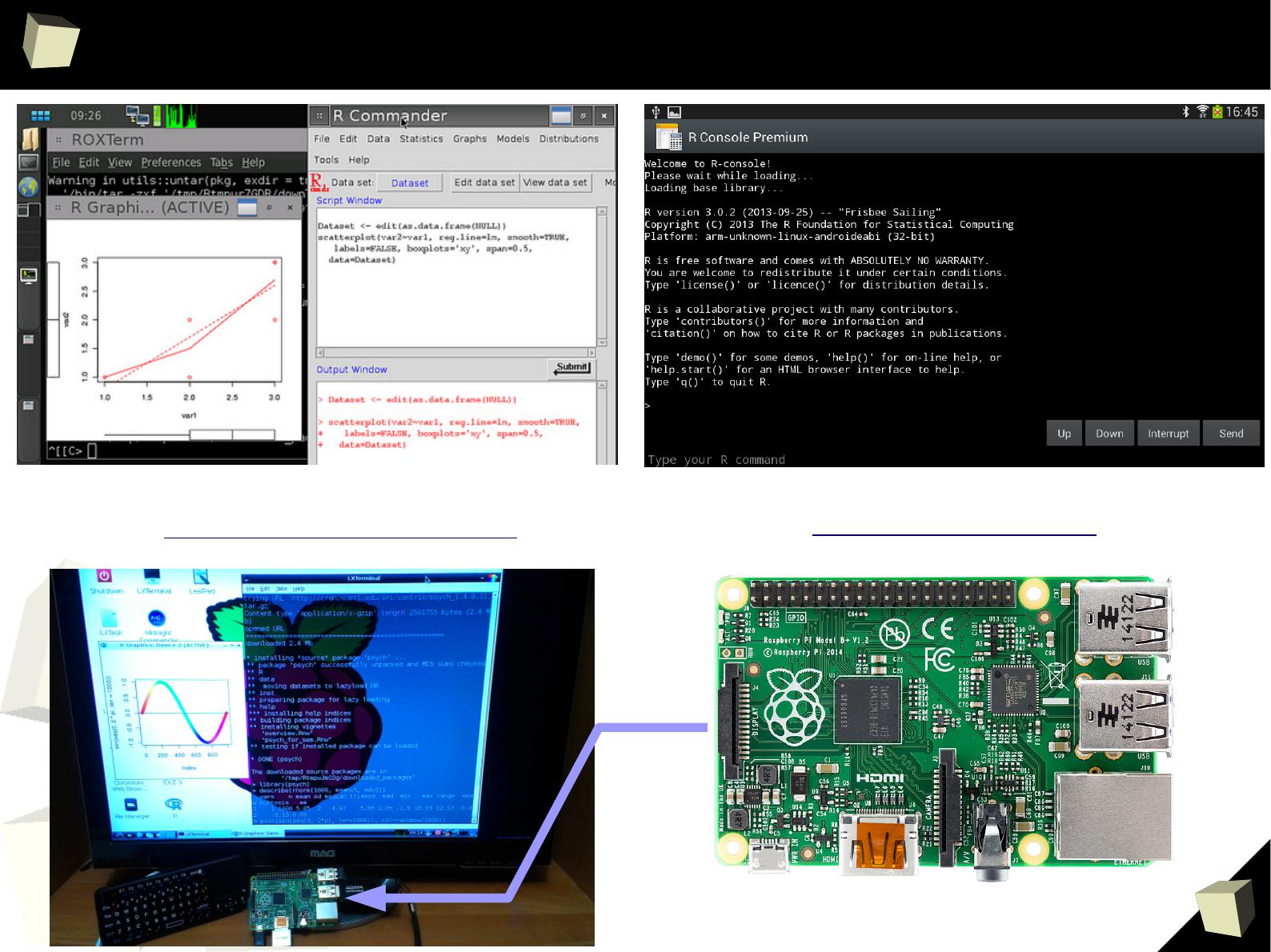

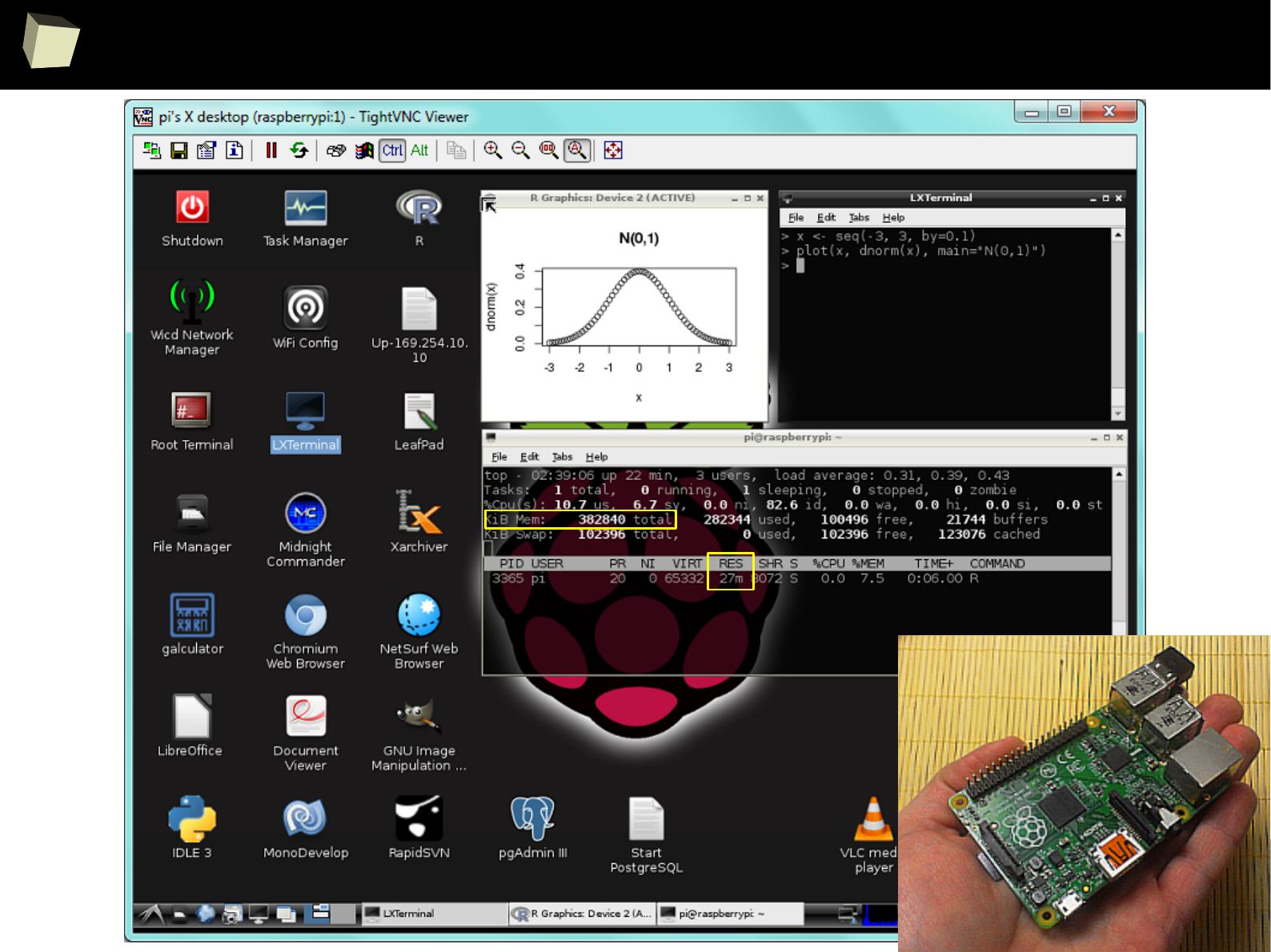

By the use of installr package entire environment can be updated at once.

●

One can have multiple libraries of packages in order to organize them

9

1

R is easy to maintain!

9

2

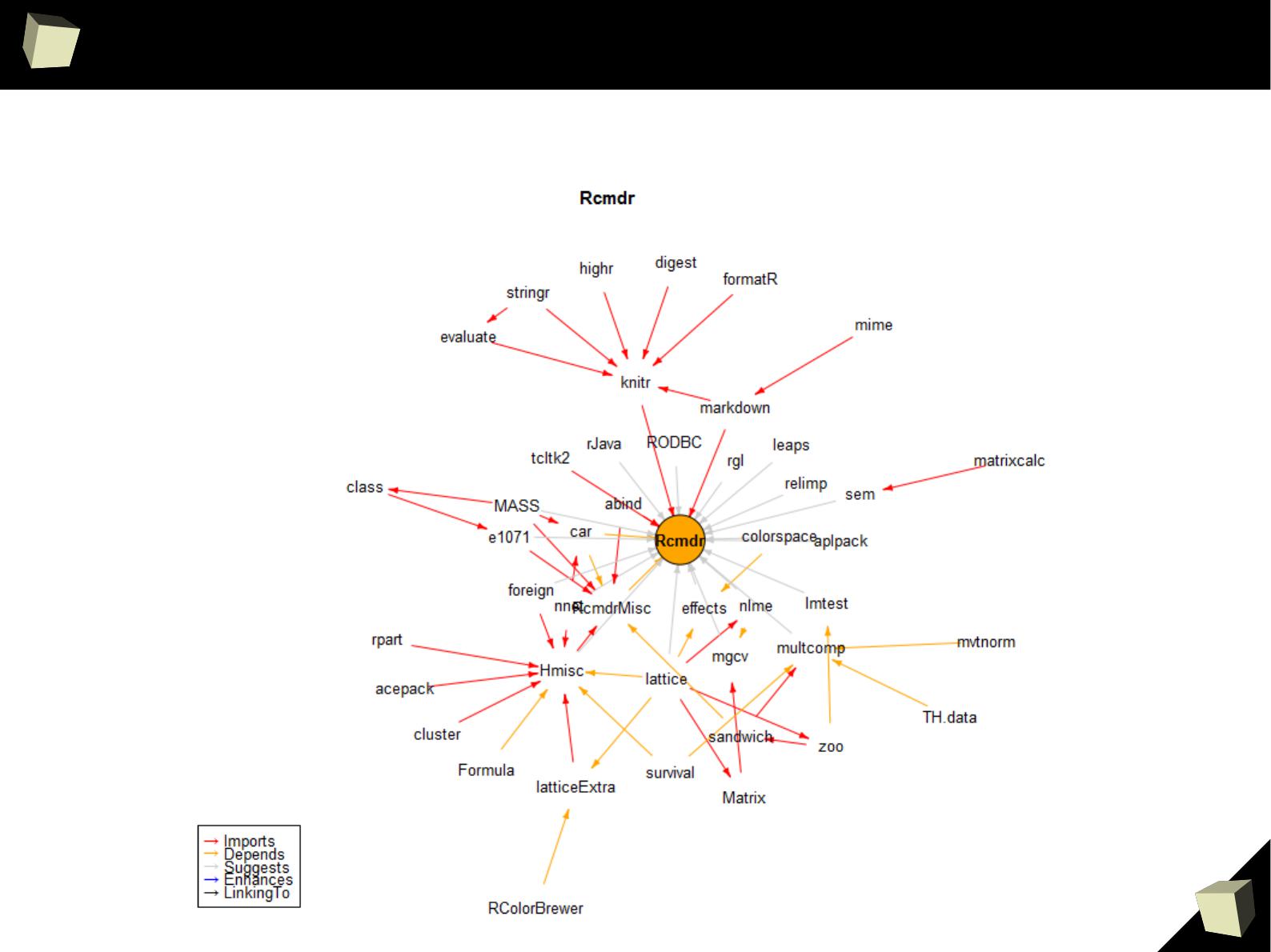

CRAN D.I.Y.

With the miniCRAN package one can build his own, private mini repository of

R packages. This is a perfect solution for creating dedicated, in-house

production environments for the following reasons:

●

You may wish to mirror only a subset of CRAN, for security, legal

compliance or any other in-house reason

●

You may wish to restrict internal package use to a subset of public

packages, to minimize package duplication, or other reasons of coding

standards

●

You may wish to make packages available from public repositories other

than CRAN, e.g. BioConductor, r-forge, OmegaHat, etc.

●

You may wish to add custom in-house packages to your repository

www.r-bloggers.com/introducing-minicran-an-r-package-to-create-a-private-cran-repository/

Do not forget to visit the quick introduction to miniCRAN.

9

3

CRAN D.I.Y.

MiniCRAN also helps you to track dependencies between packages.

9

4

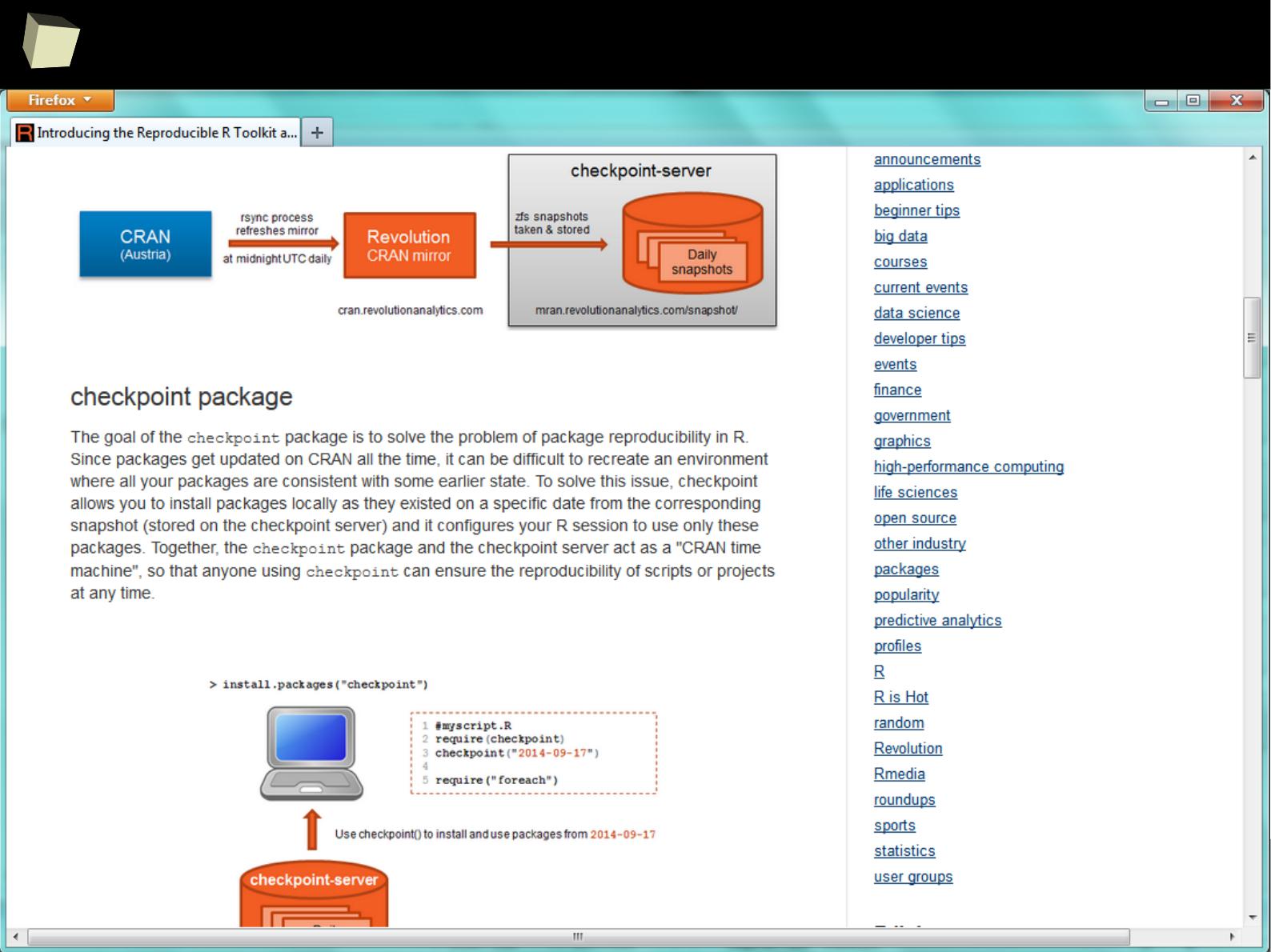

Versioned CRAN – meet Revolution::checkpoint

9

5

13 reasons why you will love GNU R

I R is (extremely) cheap. In fact - it's free :)

II R has (extremely) wide range of capabilities

II 2/3 :) R is not resource consuming!

IV R is supported by the community

V R is supported by the business

VI R is able to read data in many formats

VII Interoperability is easy to achieve

VIII R is truly cross-platform

IX R offers numerous ways of presenting data

X There are many options to optimize the code

XI R is able to handle large amount of data

XII R has a set of fancy tools and IDEs

XII FDA accepted using R for drug trials!

9

6

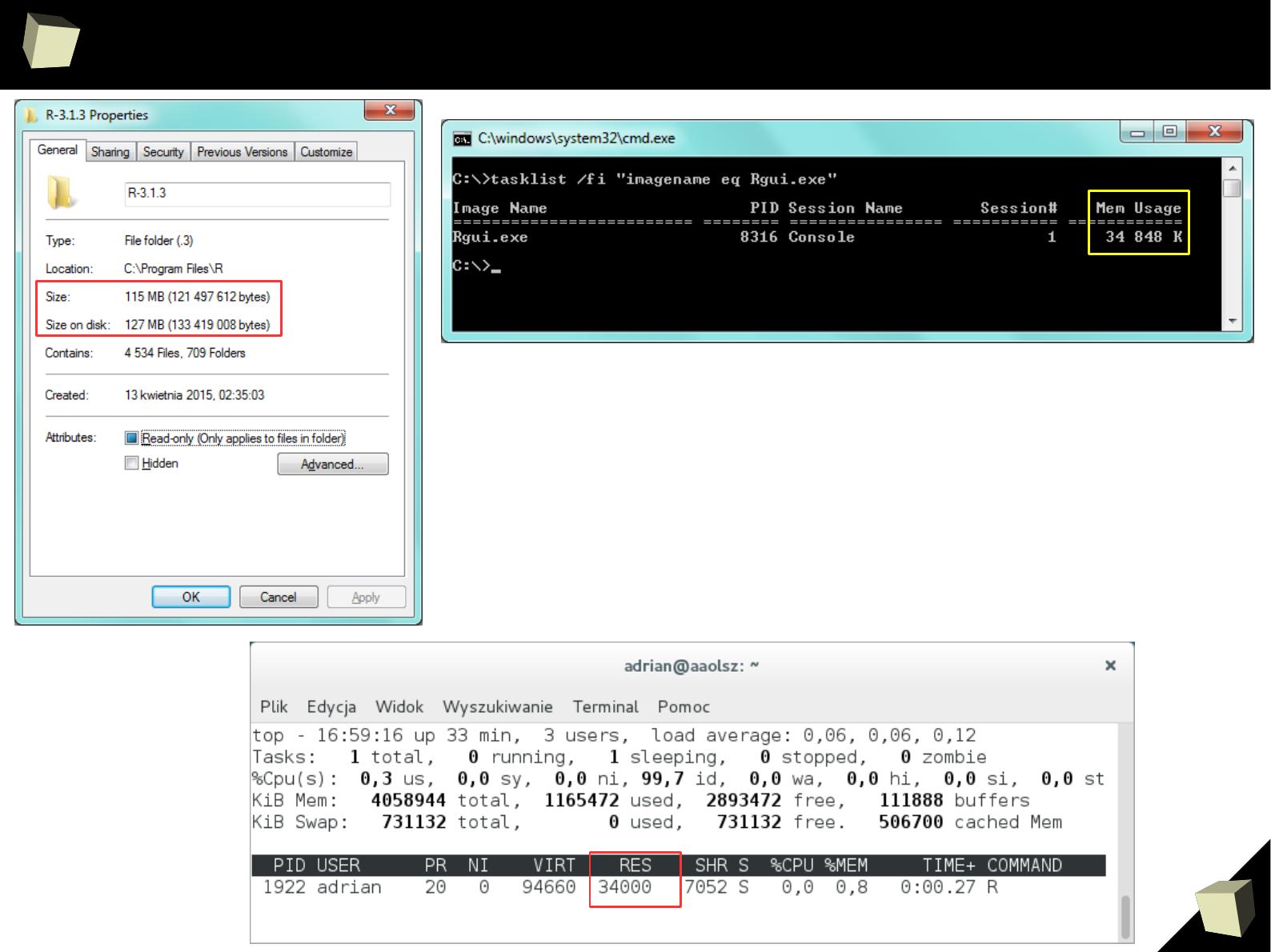

R is not resouRce consuming!

Fresh installation:

●

Disk space: ~ 130MiB

●

RAM: ~ 35MiB

9

7

R is not resouRce consuming!

9

8

13 reasons why you will love GNU R

I R is (extremely) cheap. In fact - it's free :)

II R has (extremely) wide range of capabilities

III R is supported by the world of science

IV R is supported by the community

V R is supported by the business

VI R is able to read data in many formats

VII Interoperability is easy to achieve

VIII R is truly cross-platform

IX R offers numerous ways of presenting data

X There are many options to optimize the code

XI R is able to handle large amount of data

XII R has a set of fancy tools and IDEs

XII FDA accepted using R for drug trials!

9

9

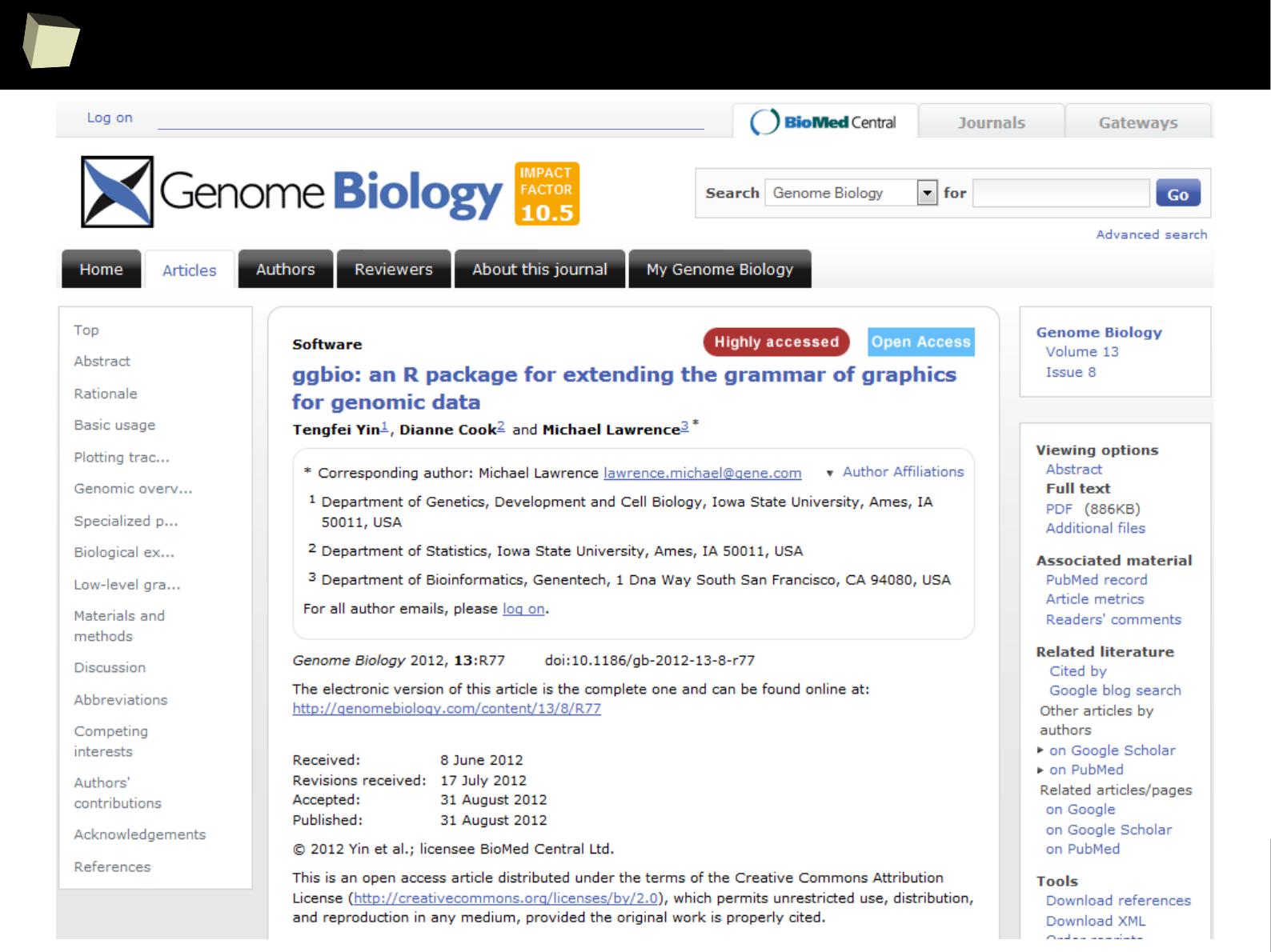

...supported by the science

At the center of the R Community is the R Core Group of approximately 20

developers who maintain R and guide its evolution. They are experienced

statisticians, well-known in the world of science, with significant achievements.

Each thematic section has its own academic supervisor.

Robert Gentleman's articles about R and Bioconductor are among the most cited

in bioinformatics: over 5200 citations according to Google Scholar

April 2014

There are numerous e-learning materials on the Internet which provide valuable

assistance in data analysis with R. These are often provided by prestigious

academic centers like Pricenton, Stanford or Hopkins.

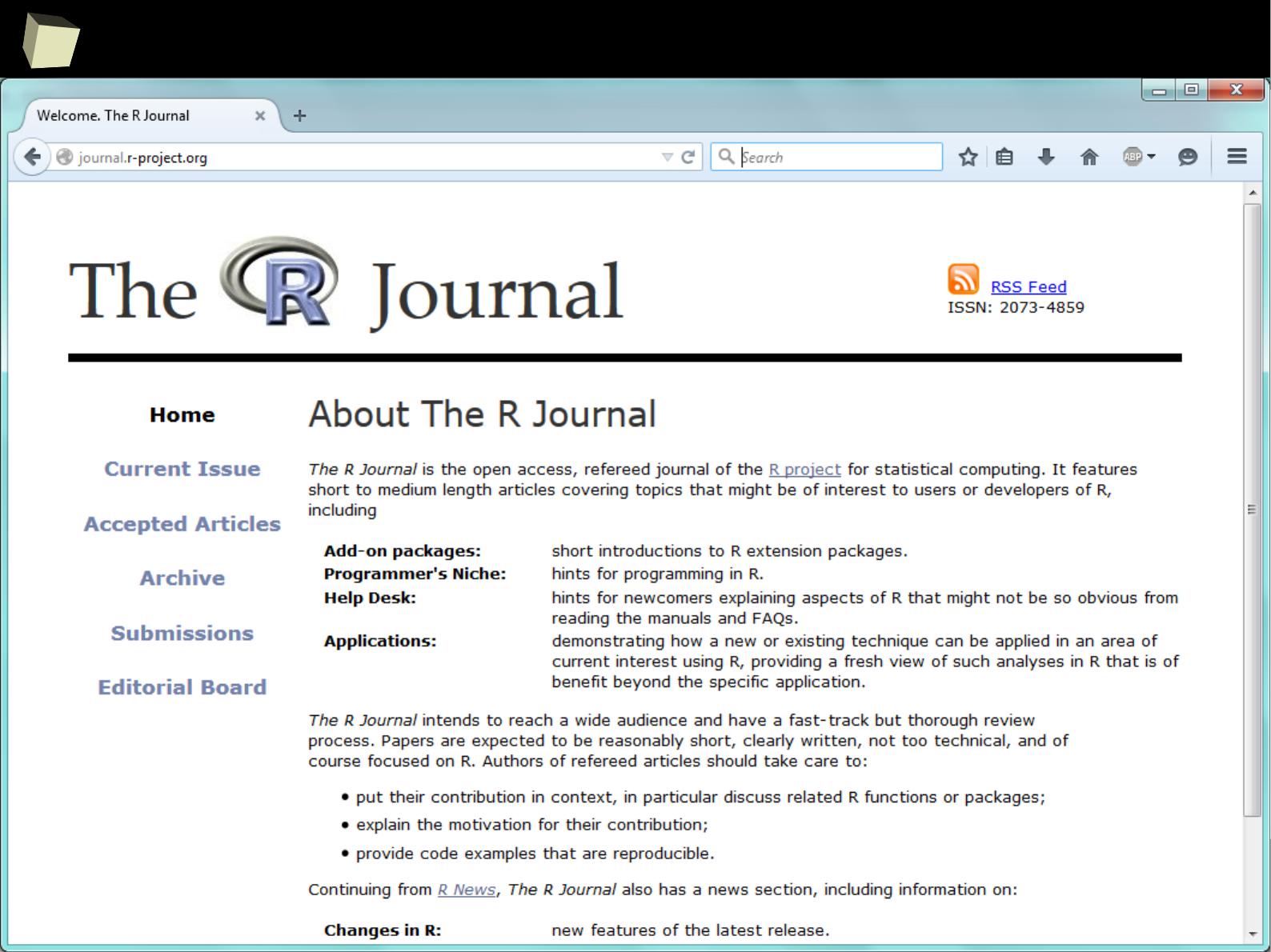

The R project is closely linked with “the R Journal” which is a mine of scientific

knowledge of using R for professional data analysis.

1

0

0

...supported by the science

1

0

1

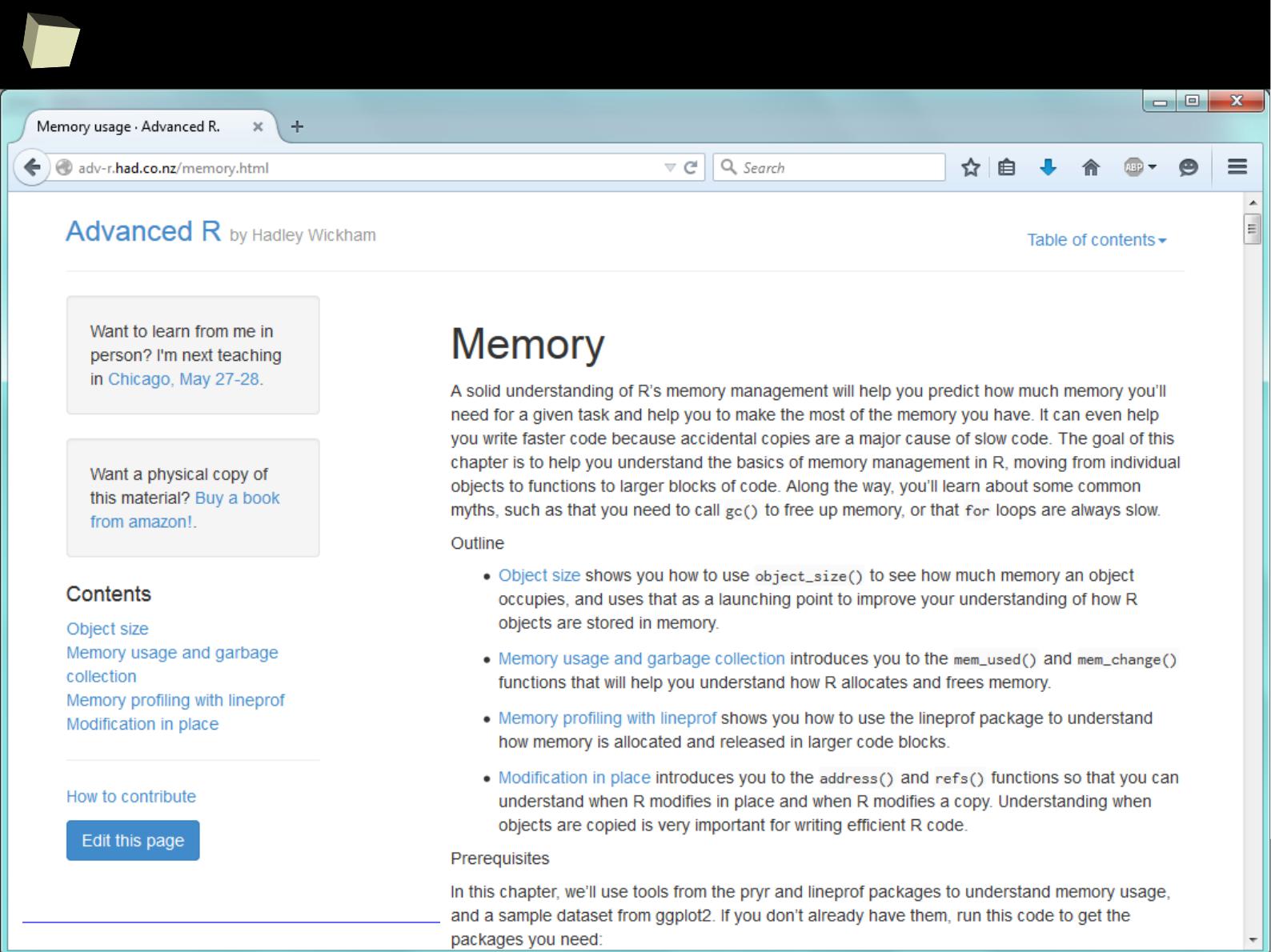

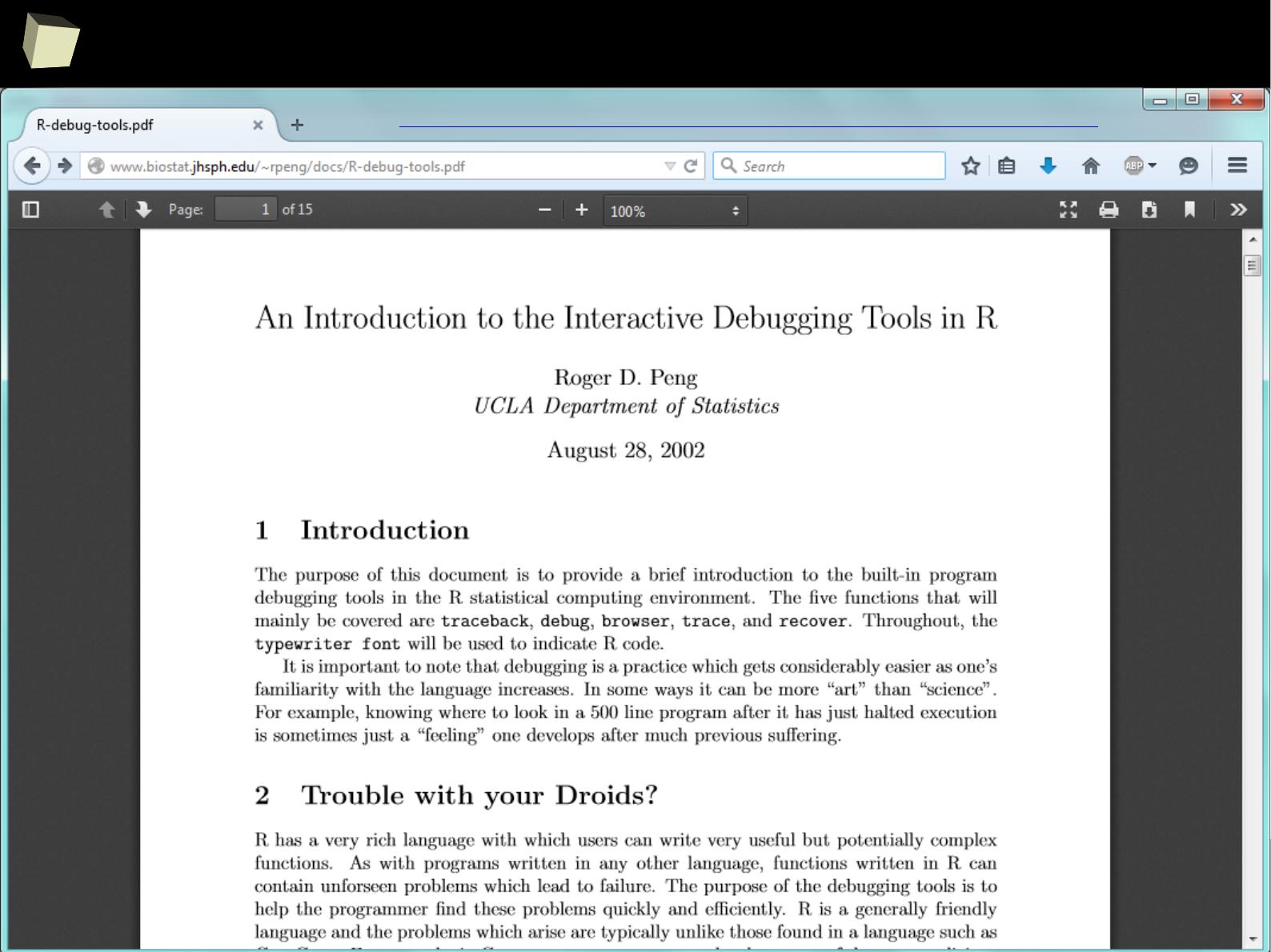

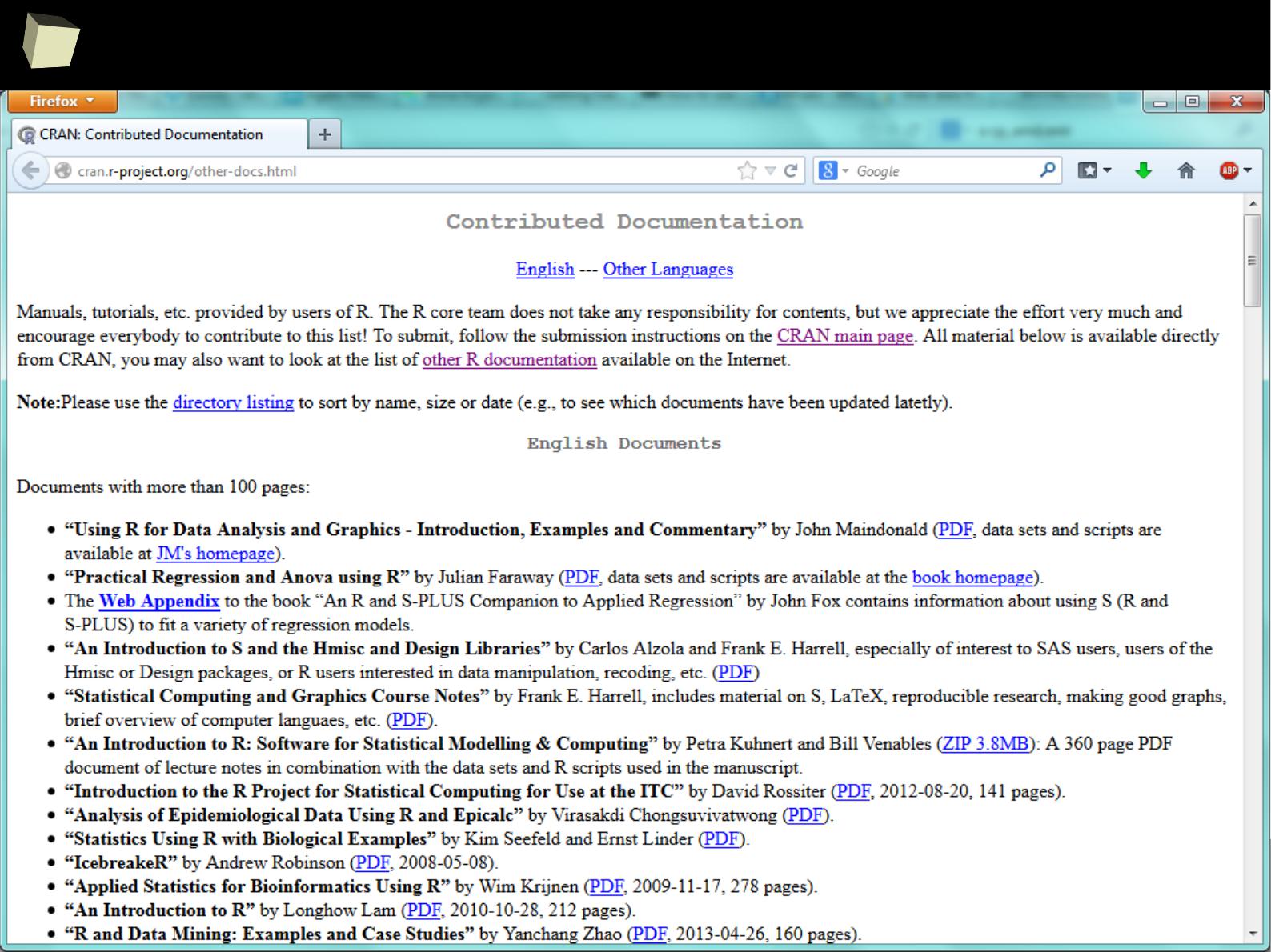

where to leaRn?

1. http://cran.r-project.org/manuals.html

2. http://cran.r-project.org/other-docs.html

3. http://cran.r-project.org/doc/manuals/r-patched/R-intro.html

4. http://stats.stackexchange.com/questions/138/resources-for-learning-r

5. http://www.youtube.com/results?search_query=R+learn+statistics

6. http://www.statmethods.net - Quick R

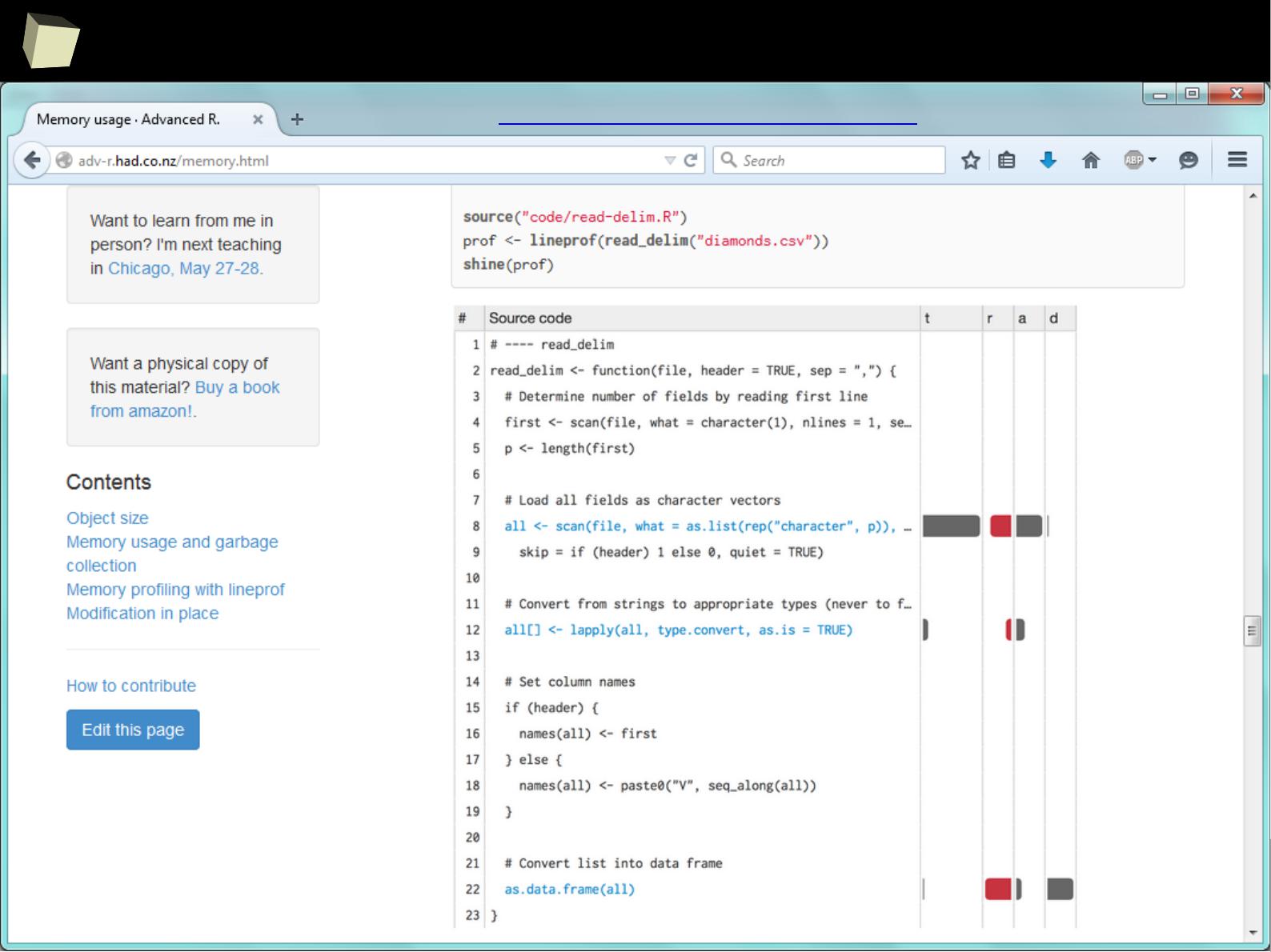

7. http://adv-r.had.co.nz - Advanced R by Hadley Wickham

8. http://www.cookbook-r.com

9. http://rseek.org/?q=learn+R

10. http://www.google.pl/search?q=learn+R

There are hundreds of places containing e-learning materials devoted to R.

Good places to start learning R:

1

0

2

Take a couRse!

1

0

3

Take a couRse!

1

0

4

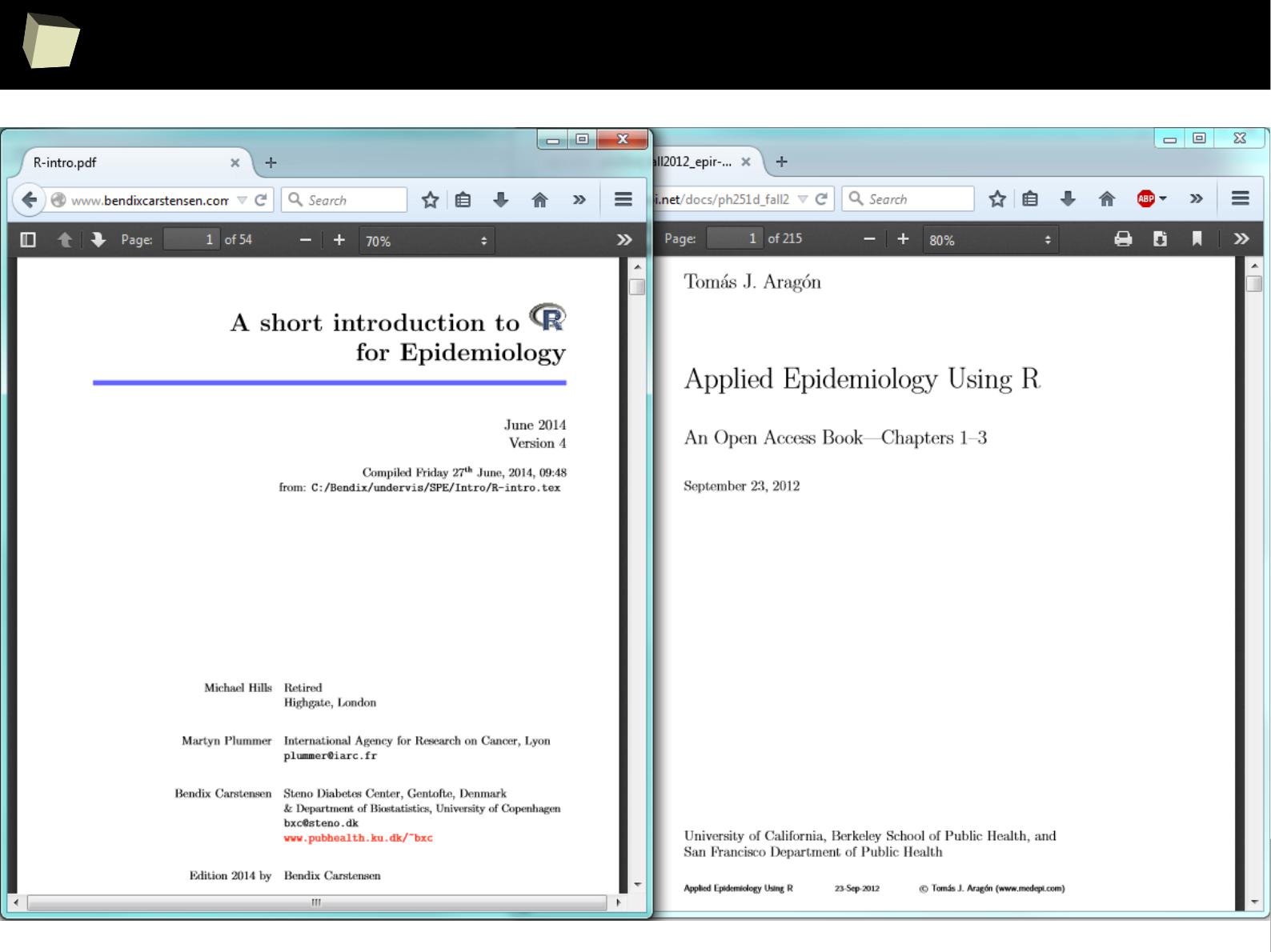

Look! This library contains pRiceless resources!

1

0

5

...thousands of valuable papeRs at our fingertips

1

0

6

...thousands of valuable papeRs at our fingertips

1

0

7

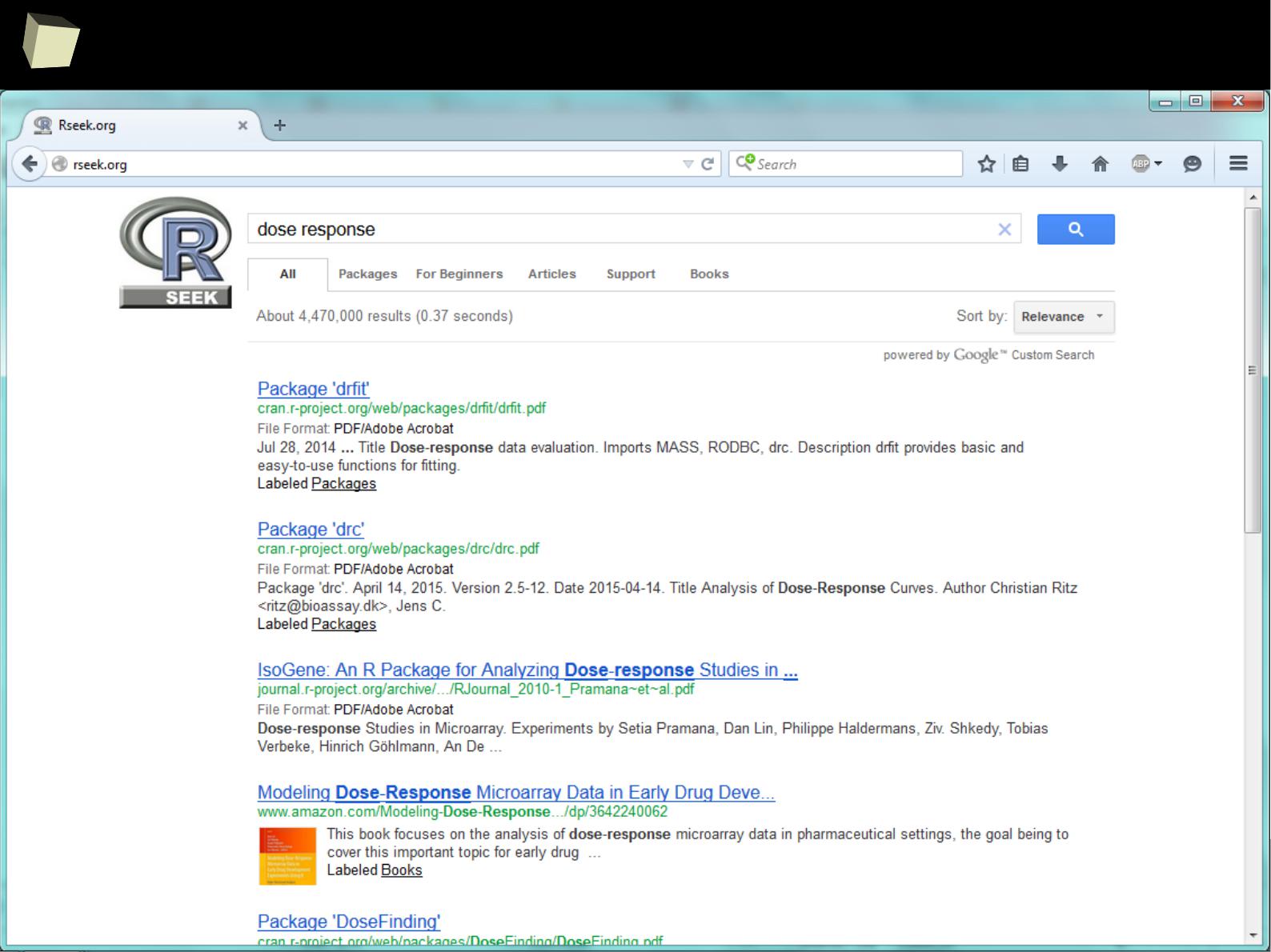

Where to seaRch? → Rseek.org

1

0

8

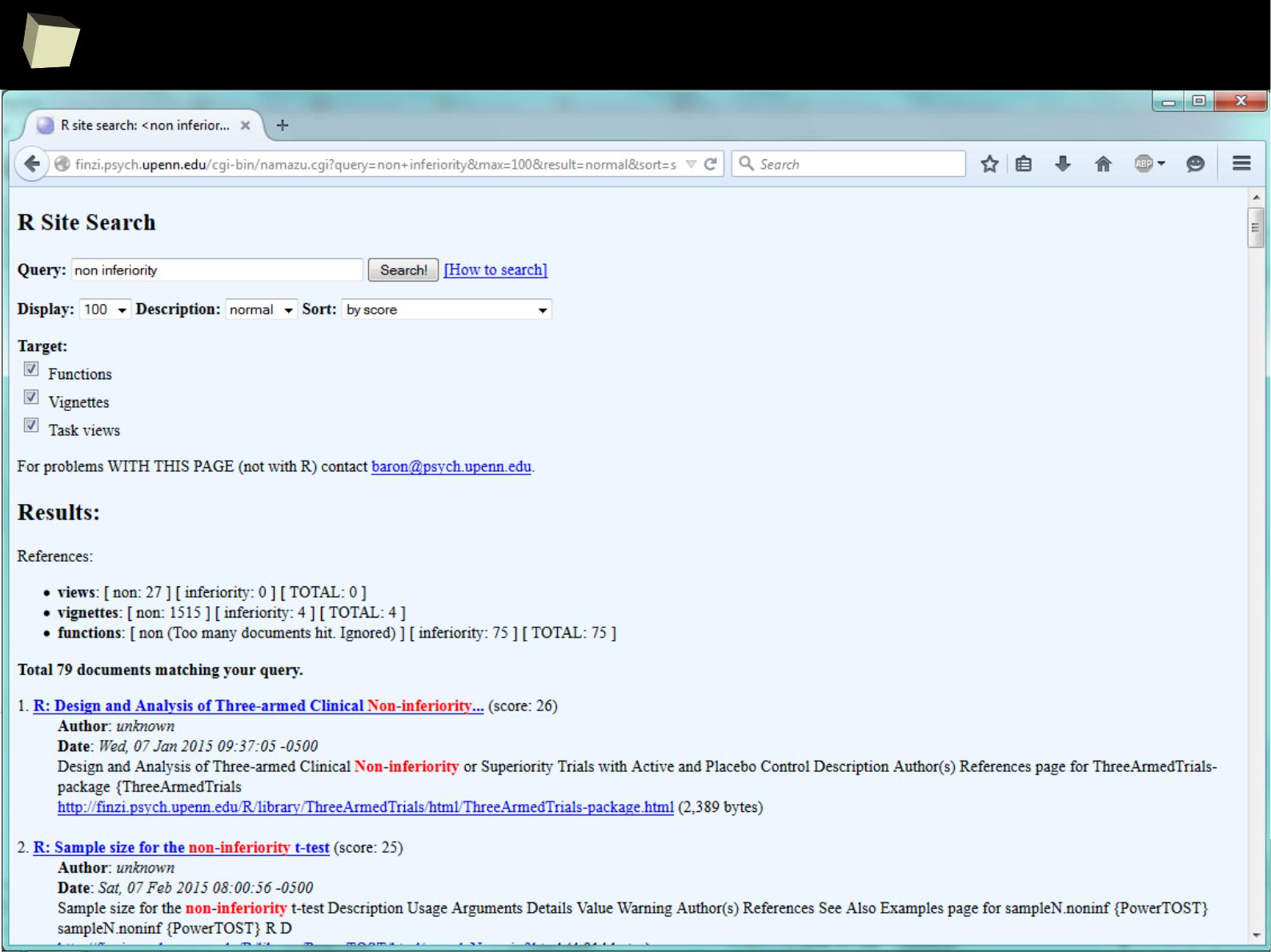

Where to seaRch? → R Site Search

1

0

9

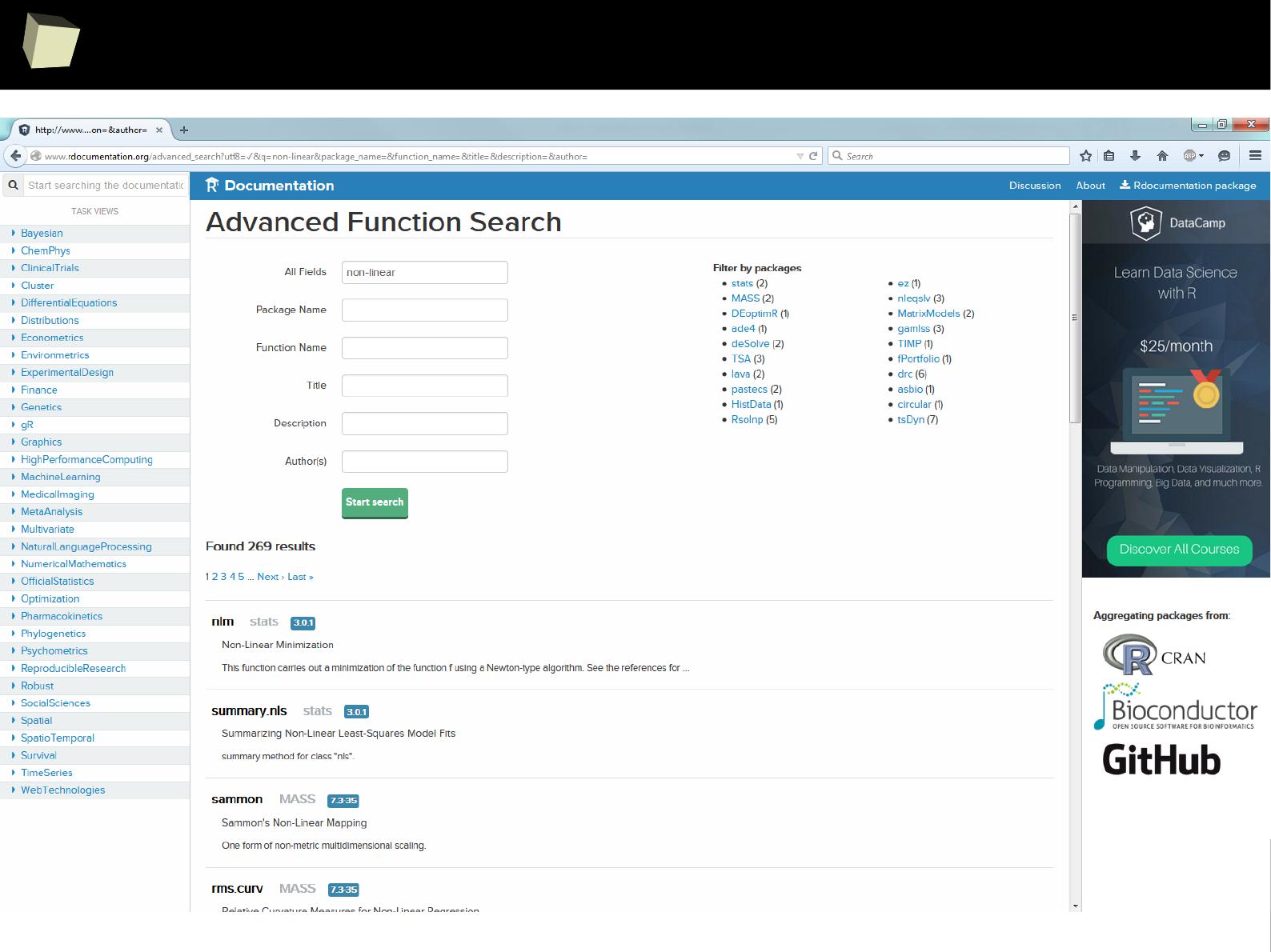

Where to seaRch? → R Documentation

1

1

0

13 reasons why you will love GNU R

I R is (extremely) cheap. In fact - it's free :)

II R has (extremely) wide range of capabilities

III R is supported by the world of science

IV R is supported by the community

V R is supported by the business

VI R is able to read data in many formats

VII Interoperability is easy to achieve

VIII R is truly cross-platform

IX R offers numerous ways of presenting data

X There are many options to optimize the code

XI R is able to handle large amount of data

XII R has a set of fancy tools and IDEs

XII FDA accepted using R for drug trials!

1

1

1

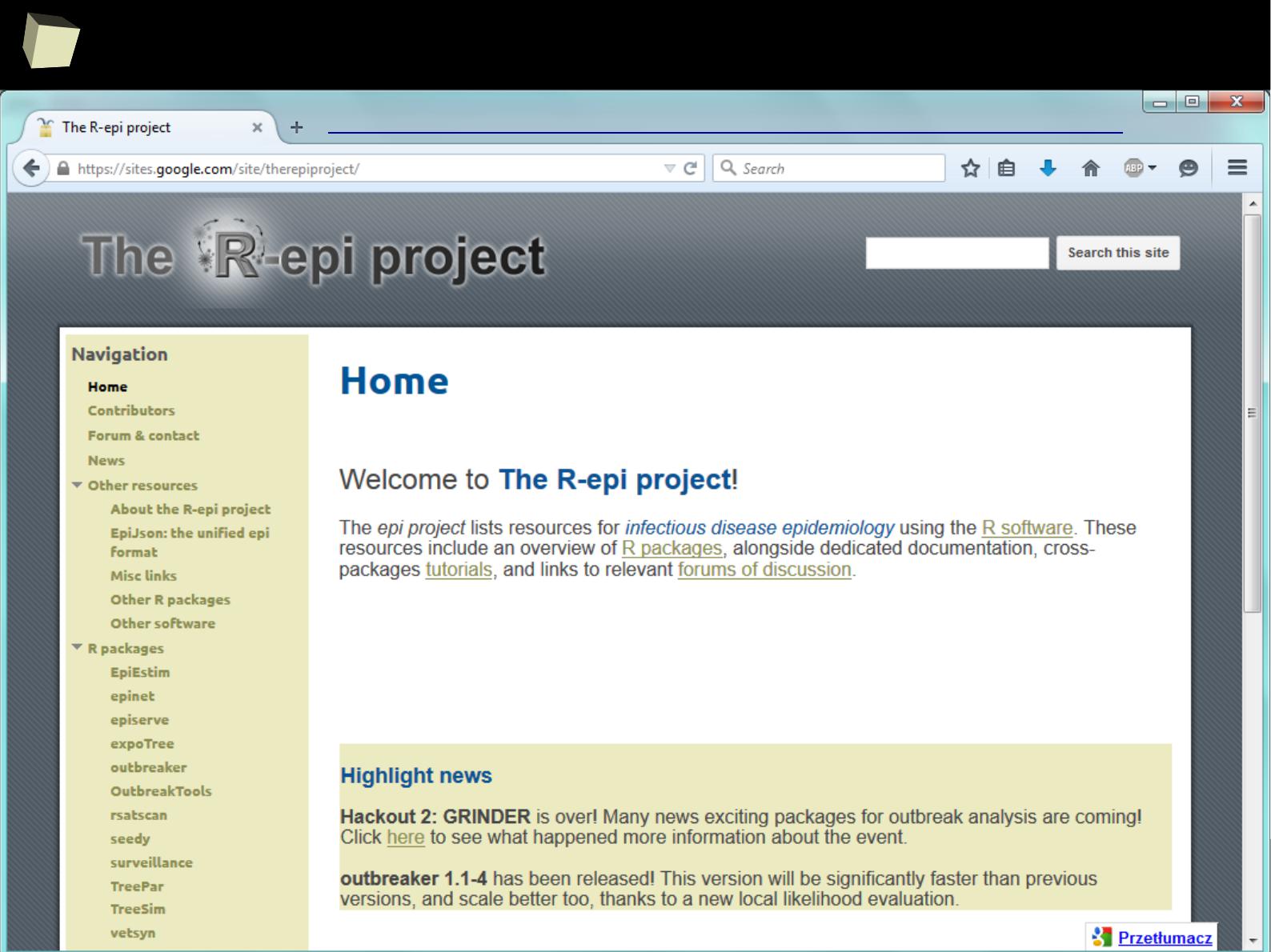

...supported by the community

R is a global community of more than 2 million

and still growing!

users

(2012, Oracle)

and

developers who voluntarily contribute their time and technical expertise to

maintain, support, test and extend the R language and its environment, tools

and infrastructure (e.g. CRAN). Among them are experienced statisticians, often

employed in the well-known pharmaceutical companies like Merck or Amgen.

Hundreds of bloggers maintain their webpages, take active part in communities

both online and offline through hundreds of forums and mailing lists, building

knowledge base (visit rseek.org). UseRs also organize meetings and conferences.

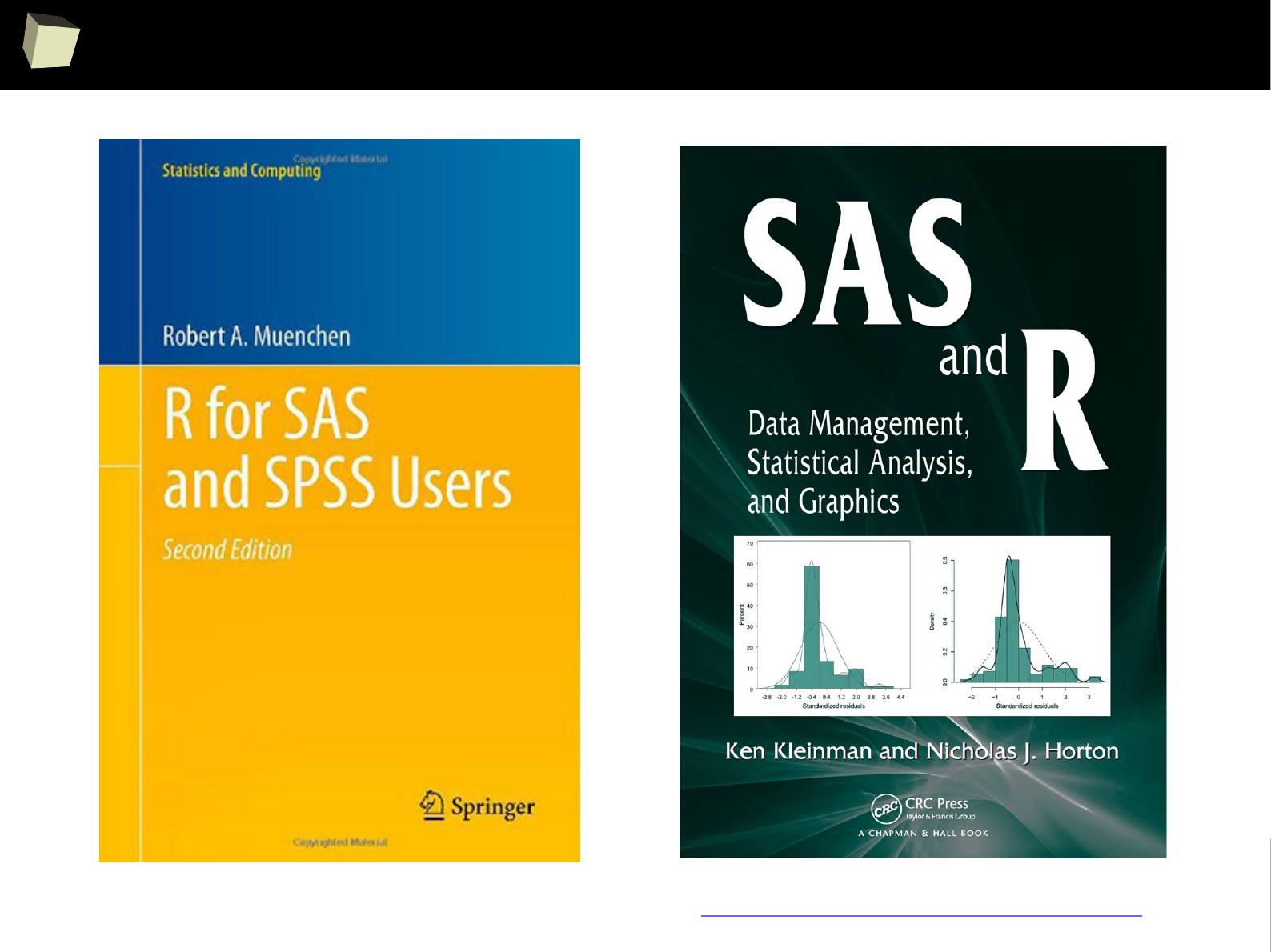

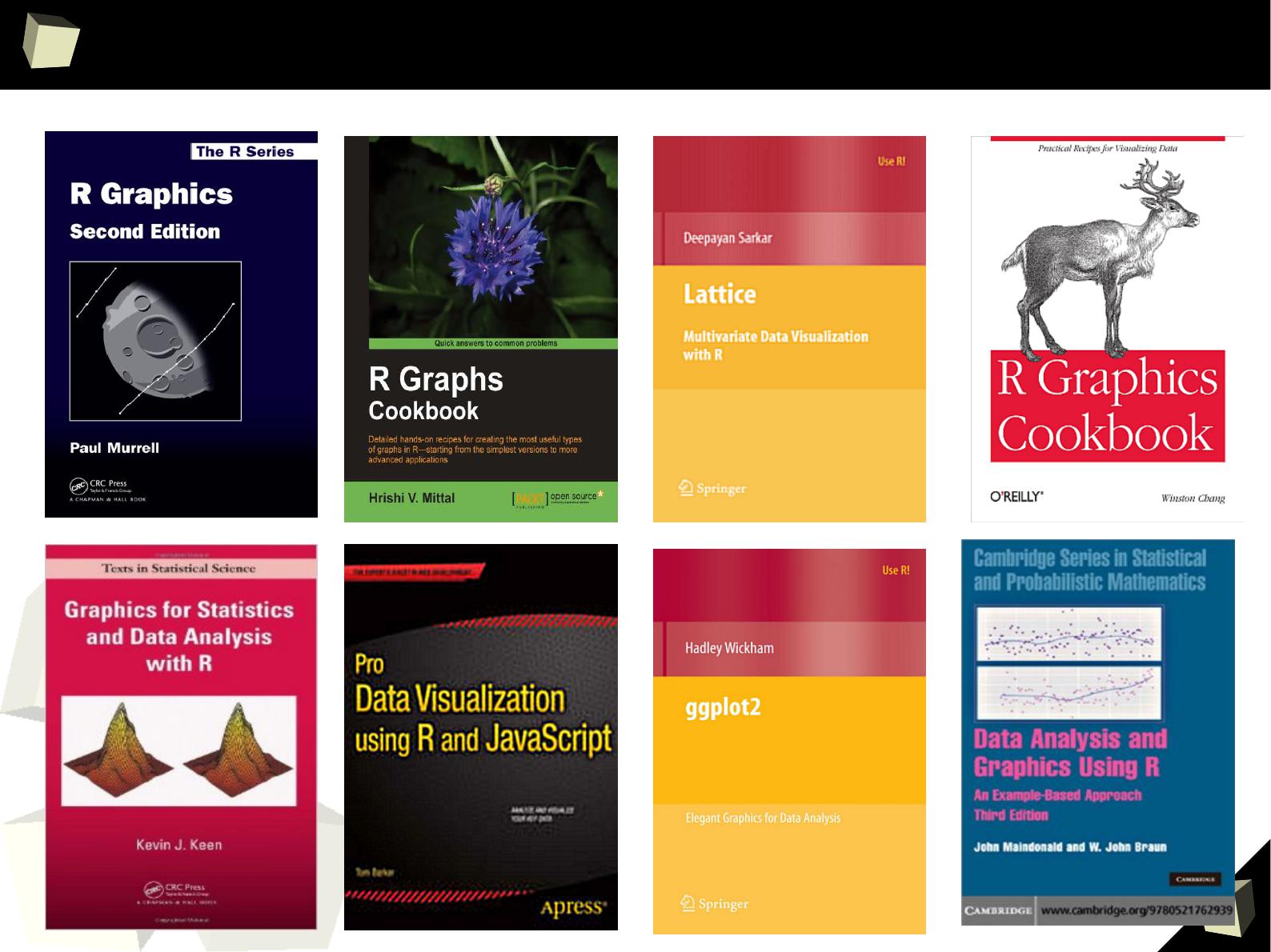

There are dozens of valuable books written both by academics, researchers and

“regular” R users. These books are issued by the prestigious publishing houses

like Springer Verlag and Wiley & Sons.

1

1

2

...supported by the community

The size of the R user community (diffcult to define precisely, because

there are no sales transactions, but conservatively estimated as being in

the tens of thousands, with some independent estimates in the hundreds of

thousands), provides for extensive review of source code and testing in

“real world" settings outside the connes of the formalized testing performed

by R Core.

This is a key distinction, related to product quality, between R and

similar software that is only available to end users in a binary, executable

format. In conjunction with detailed documentation and references

provided to end users, the size of the R user community, all having full

access to the source code, enables a superior ability to anticipate and verify

R's performance and the results produced by R.

http://www.r-project.org/doc/R-FDA.pdf

1

1

3

...supported by the community

1

1

4

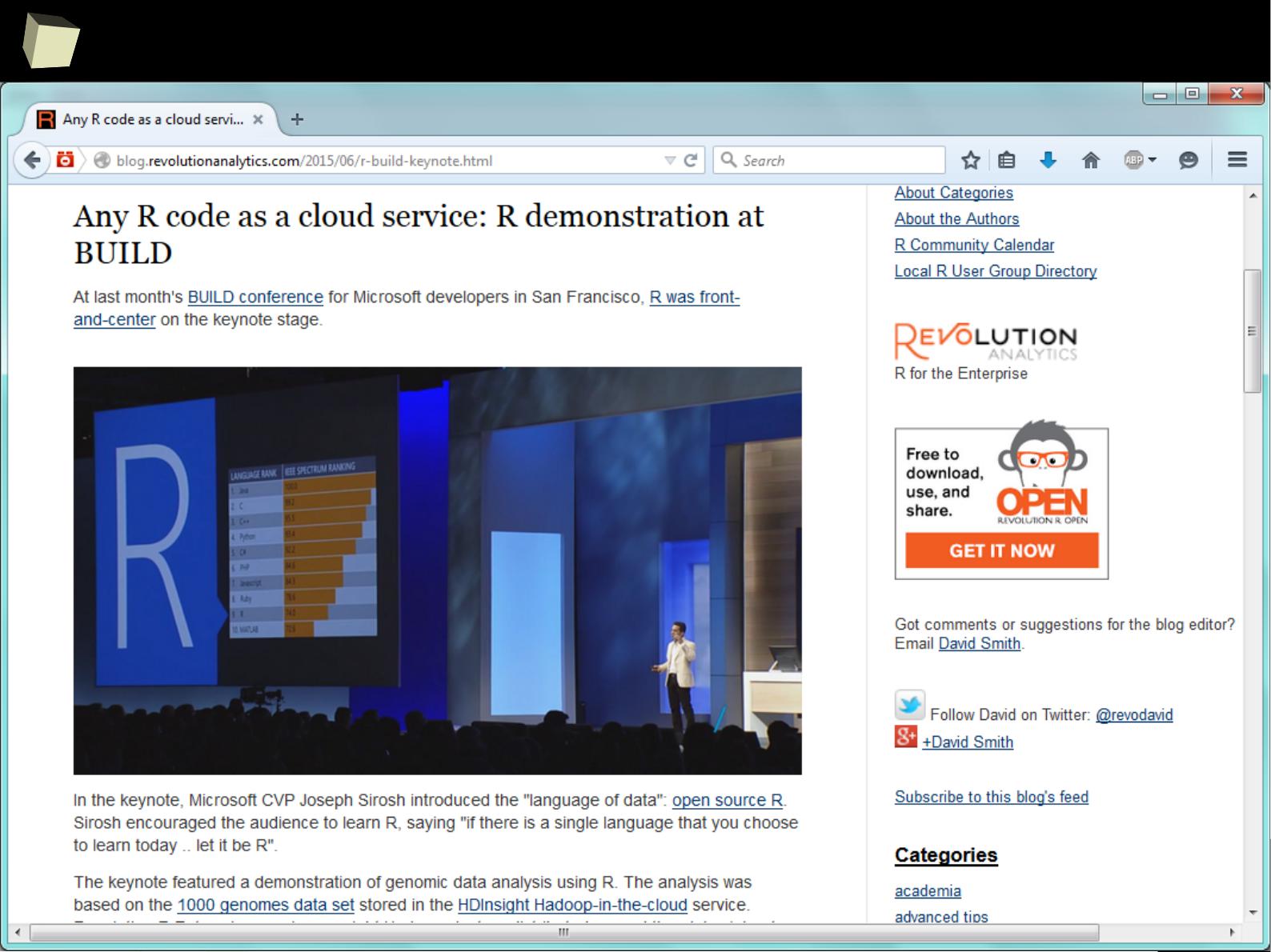

R at Microsoft's BUILD 2015 conference

say “wow!”

1

1

5

...supported by the community

1

1

6

13 reasons why you will love GNU R

I R is (extremely) cheap. In fact - it's free :)

II R has (extremely) wide range of capabilities

III R is supported by the world of science

IV ½ :) Books

V R is supported by the business

VI R is able to read data in many formats

VII Interoperability is easy to achieve

VIII R is truly cross-platform

IX R offers numerous ways of presenting data

X There are many options to optimize the code

XI R is able to handle large amount of data

XII R has a set of fancy tools and IDEs

XII FDA accepted using R for drug trials!

1

1

7

...some Polish books

1

1

8

...some foReign books...

1

1

9

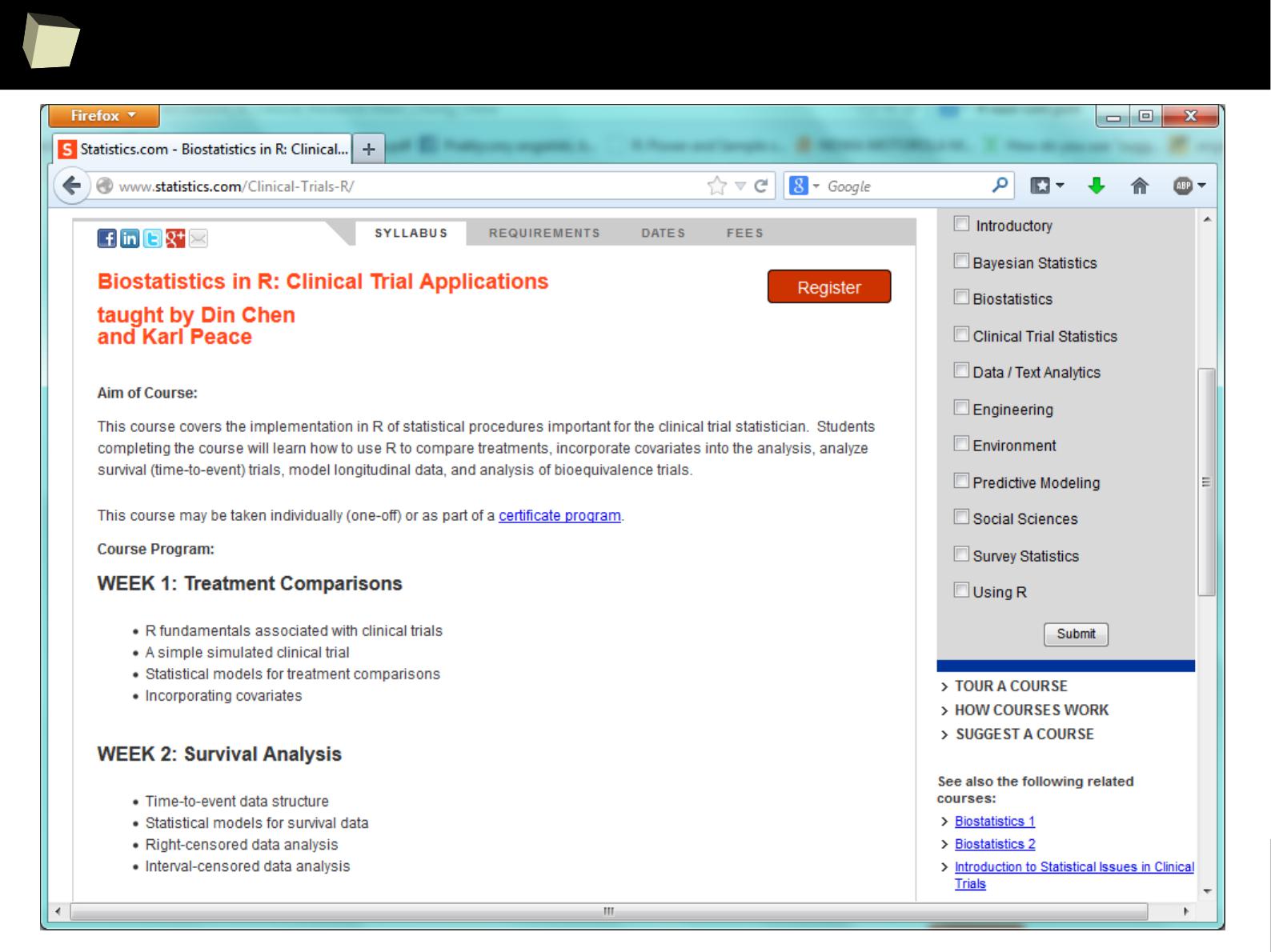

...and courses provided by their authors...

1

2

0

...more foreign books...

1

2

1

...and more foreign books...

1

2

2

...and more foreign books...

1

2

5

13 reasons why you will love GNU R

I R is (extremely) cheap. In fact - it's free :)

II R has (extremely) wide range of capabilities

III R is (widely) supported by the world of science

IV R is supported by the community

V R is (increasingly) supported by the business

VI R is able to read data in many formats

VII Interoperability is easy to achieve

VIII R is truly cross-platform

IX R offers numerous ways of presenting data

X There are many options to optimize the code

XI R is able to handle large amount of data

XII R has a set of fancy tools and IDEs

XII FDA accepted using R for drug trials!

1

2

6

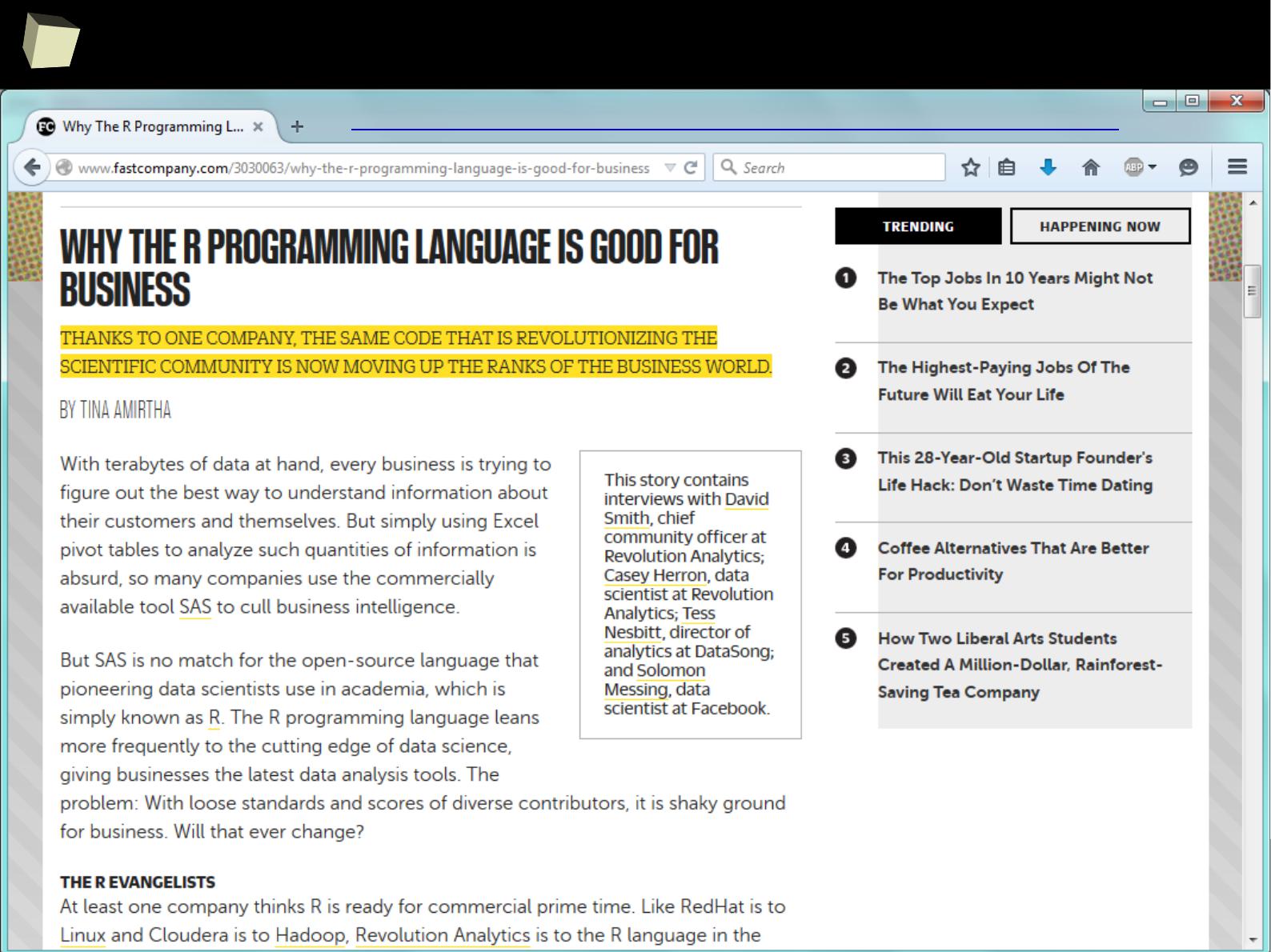

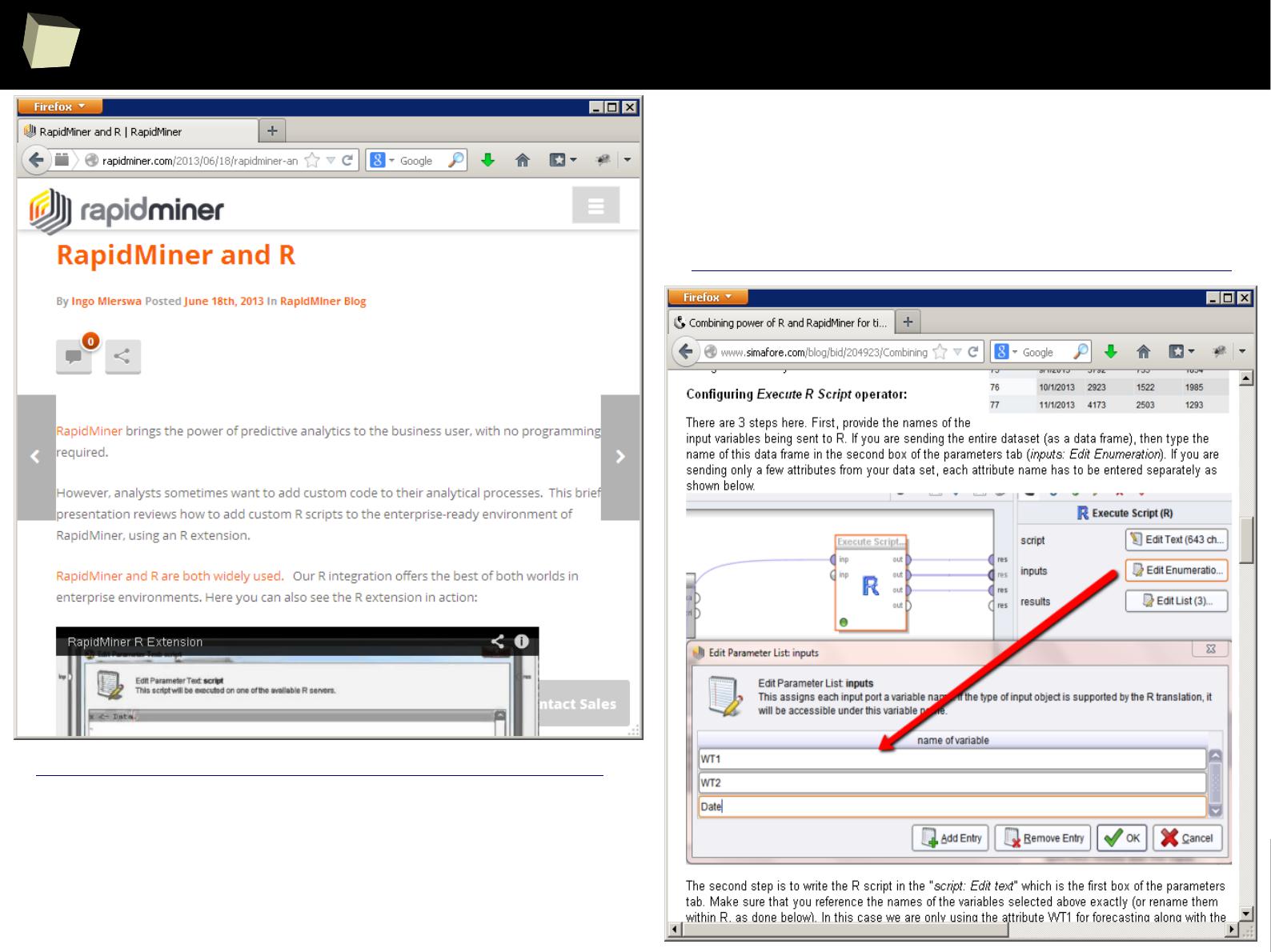

...supported by the business

The biggest tycoons in the market of statistical analysis and data mining

recognize the potential of the R and develop specialistic packages as well as

provide methods of easy integration between their products and R. These include

companies such as Microsoft, Oracle, StatSoft, IBM (SPSS), Teradata, Merck,

Tibco, Sybase, RapidMiner, SAS and others.

The situation is well described by the two following articles:

1. Adoption of R by large Enterprise Software Vendors

2. R integrated throughout the enterprise analytics stack

1

2

8

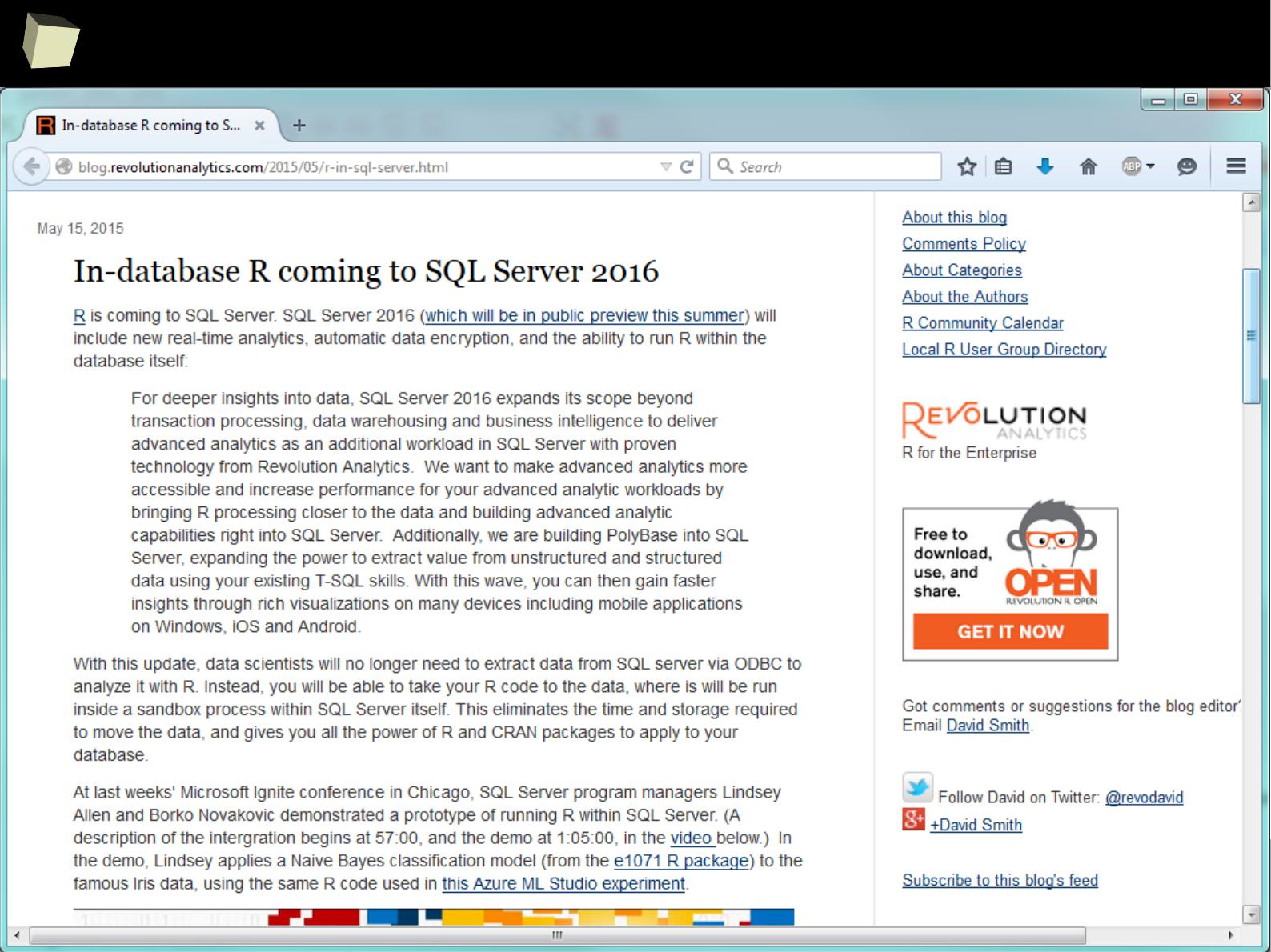

R feat. Microsoft

1

2

9

R feat. Microsoft

1

3

3

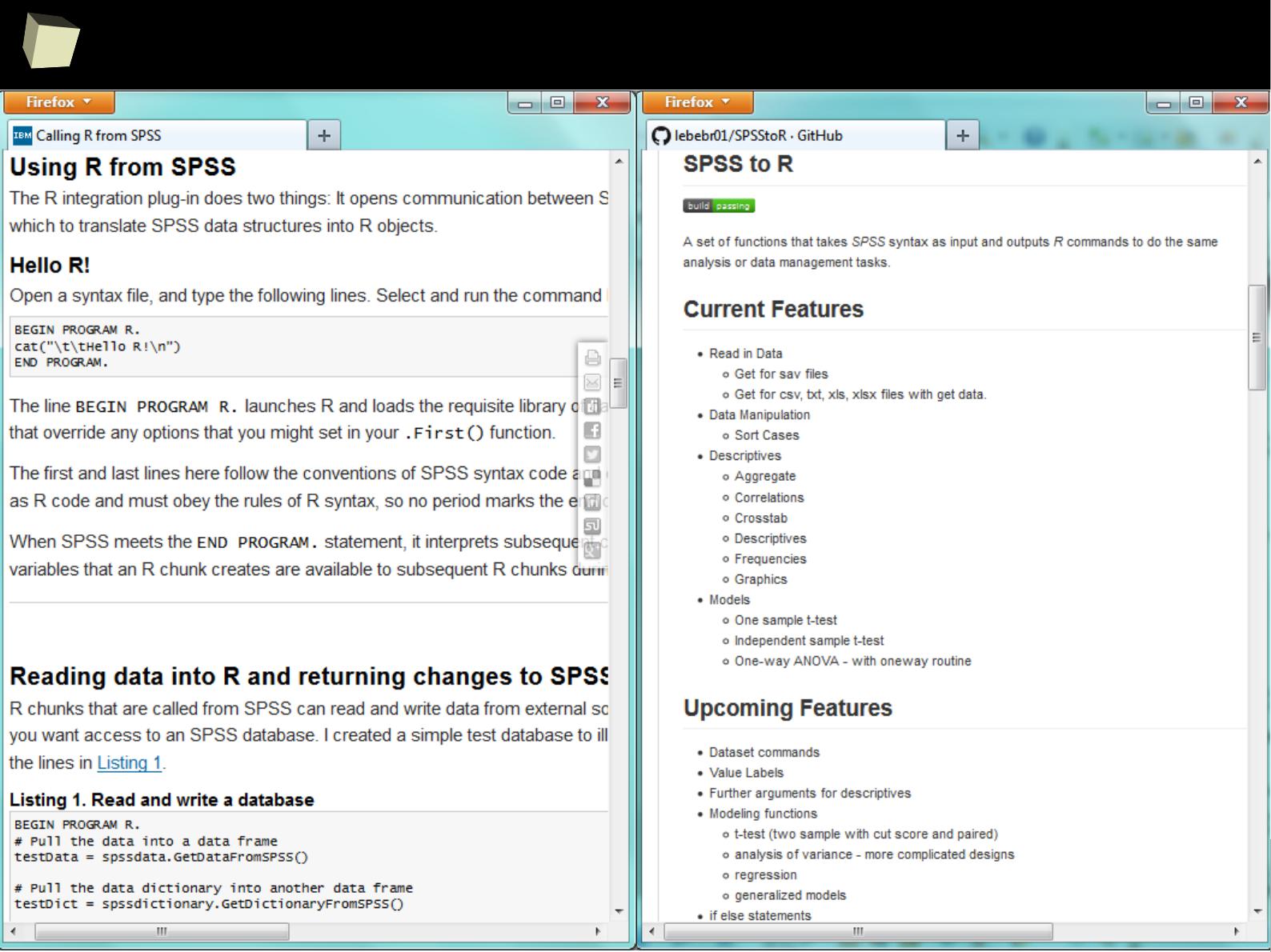

R feat. SPSS

1

3

4

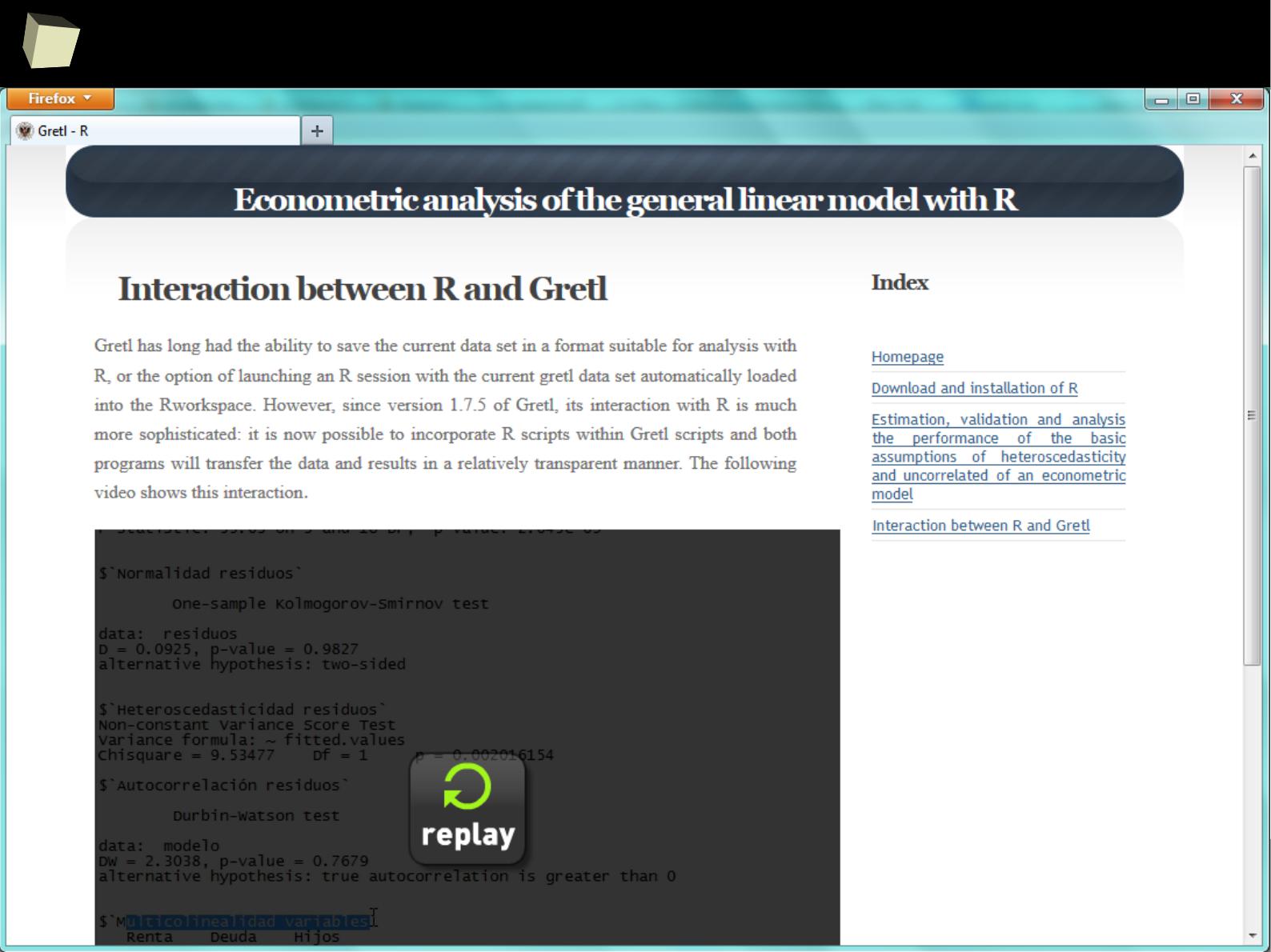

R feat. Gretl

1

3

5

13 reasons why you will love GNU R

I R is (extremely) cheap. In fact - it's free :)

II R has (extremely) wide range of capabilities

III R is (widely) supported by the world of science

IV R is supported by the community

V ½ :) R and SAS

VI R is able to read data in many formats

VII Interoperability is easy to achieve

VIII R is truly cross-platform

IX R offers numerous ways of presenting data

X There are many options to optimize the code

XI R is able to handle large amount of data

XII R has a set of fancy tools and IDEs

XII FDA accepted using R for drug trials!

1

3

6

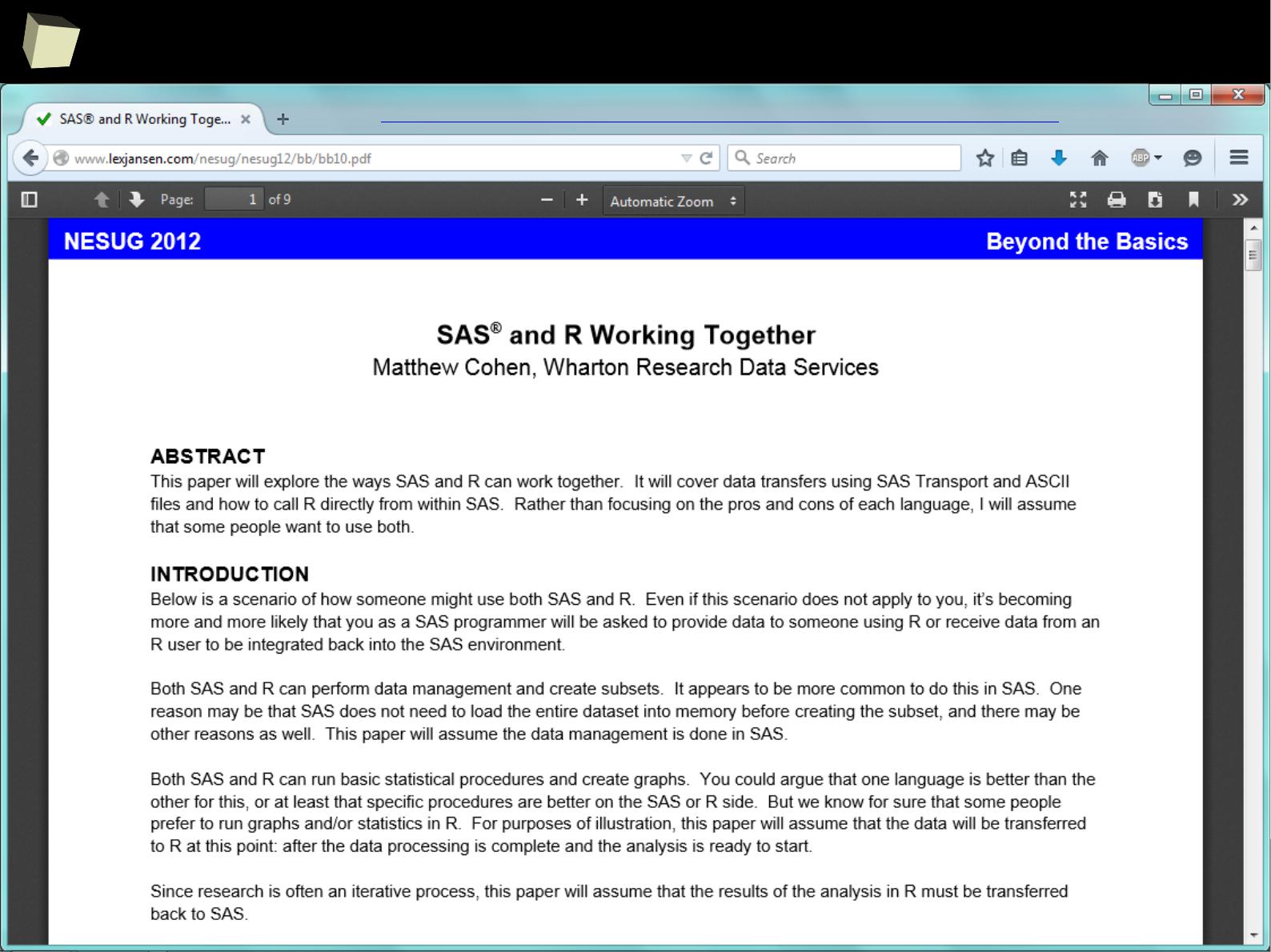

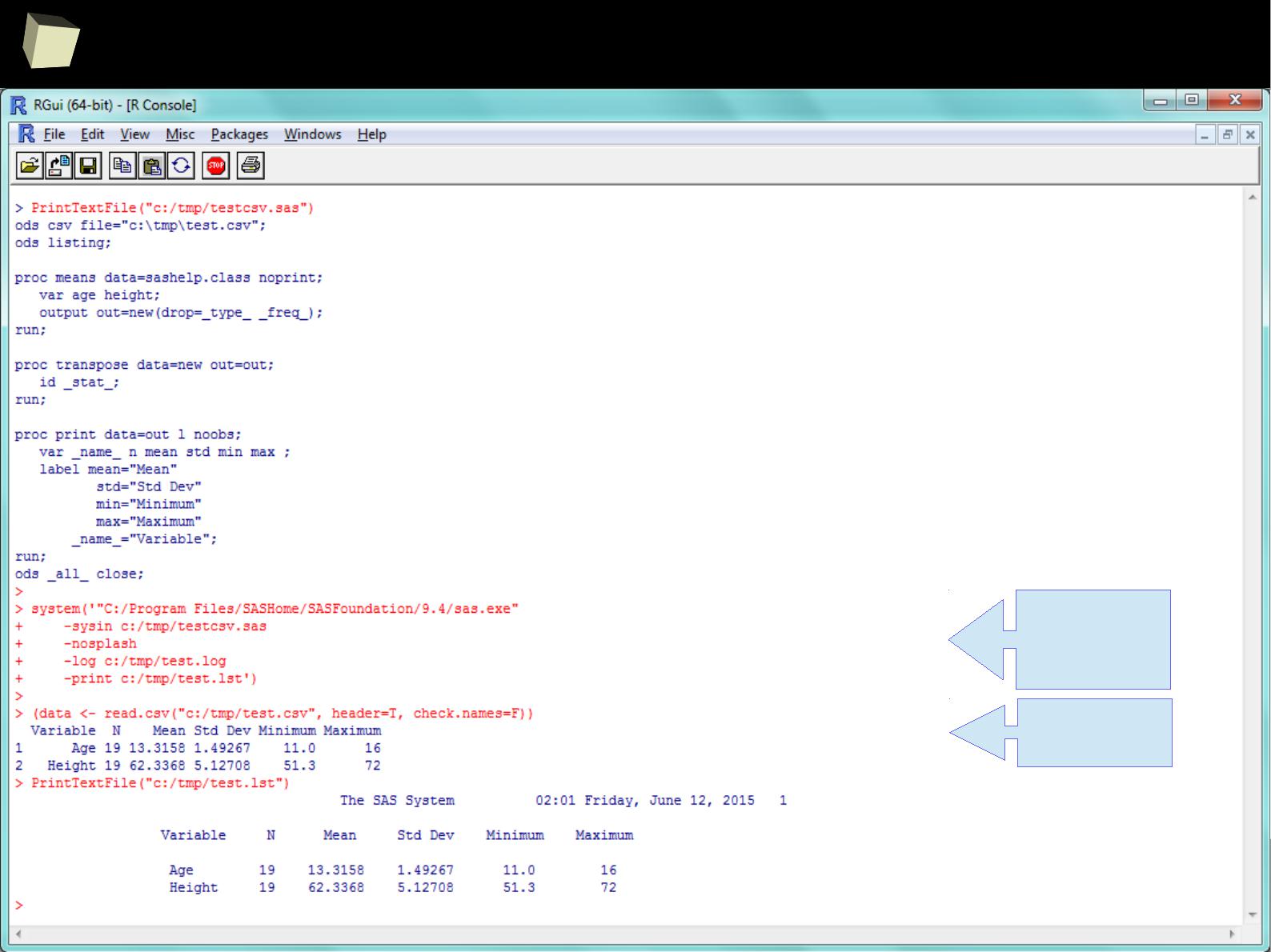

R feat. SAS

1

3

7

R feat. SAS

calling SAS

in batch

mode

reading

created CSV

1

3

8

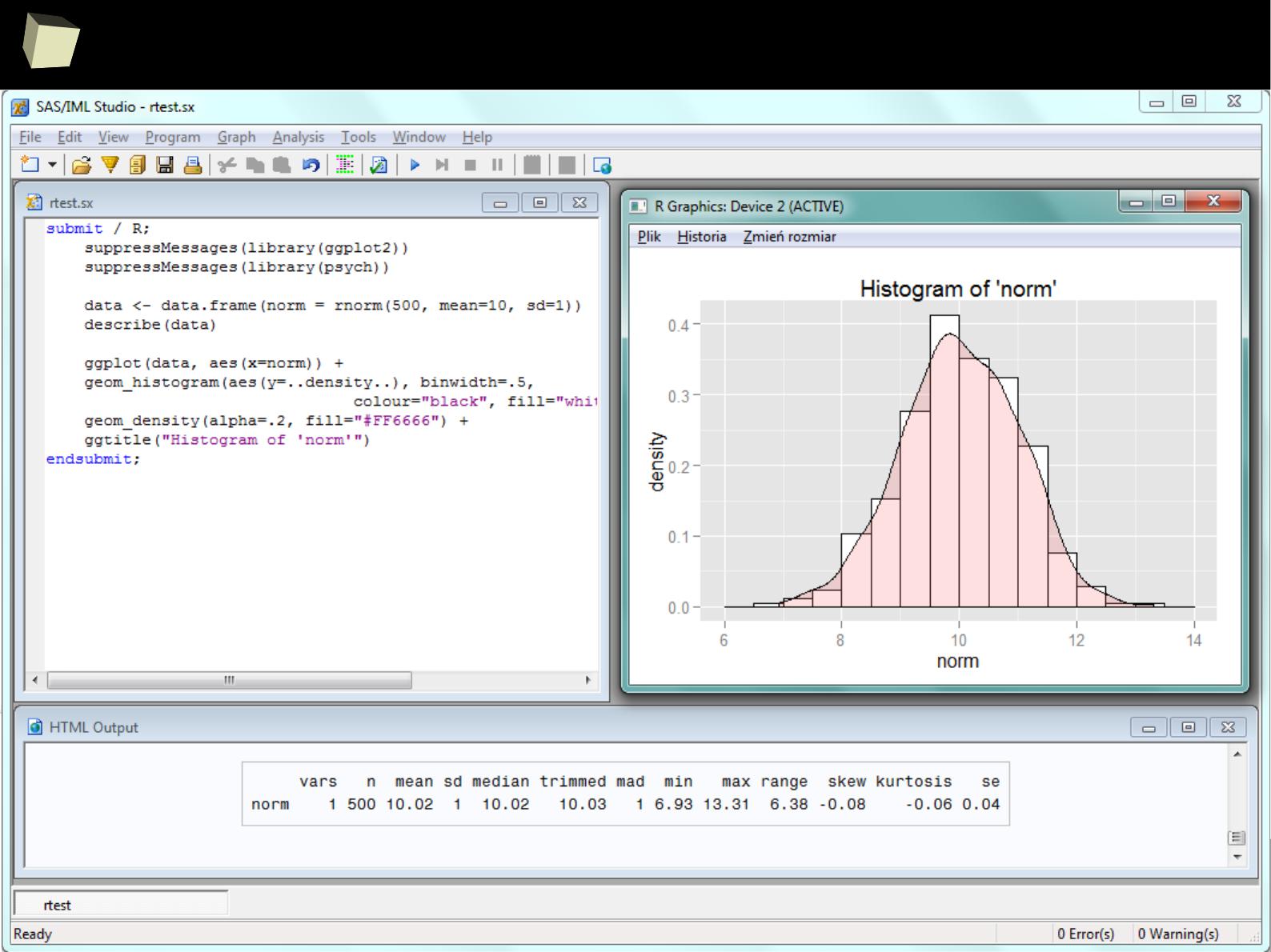

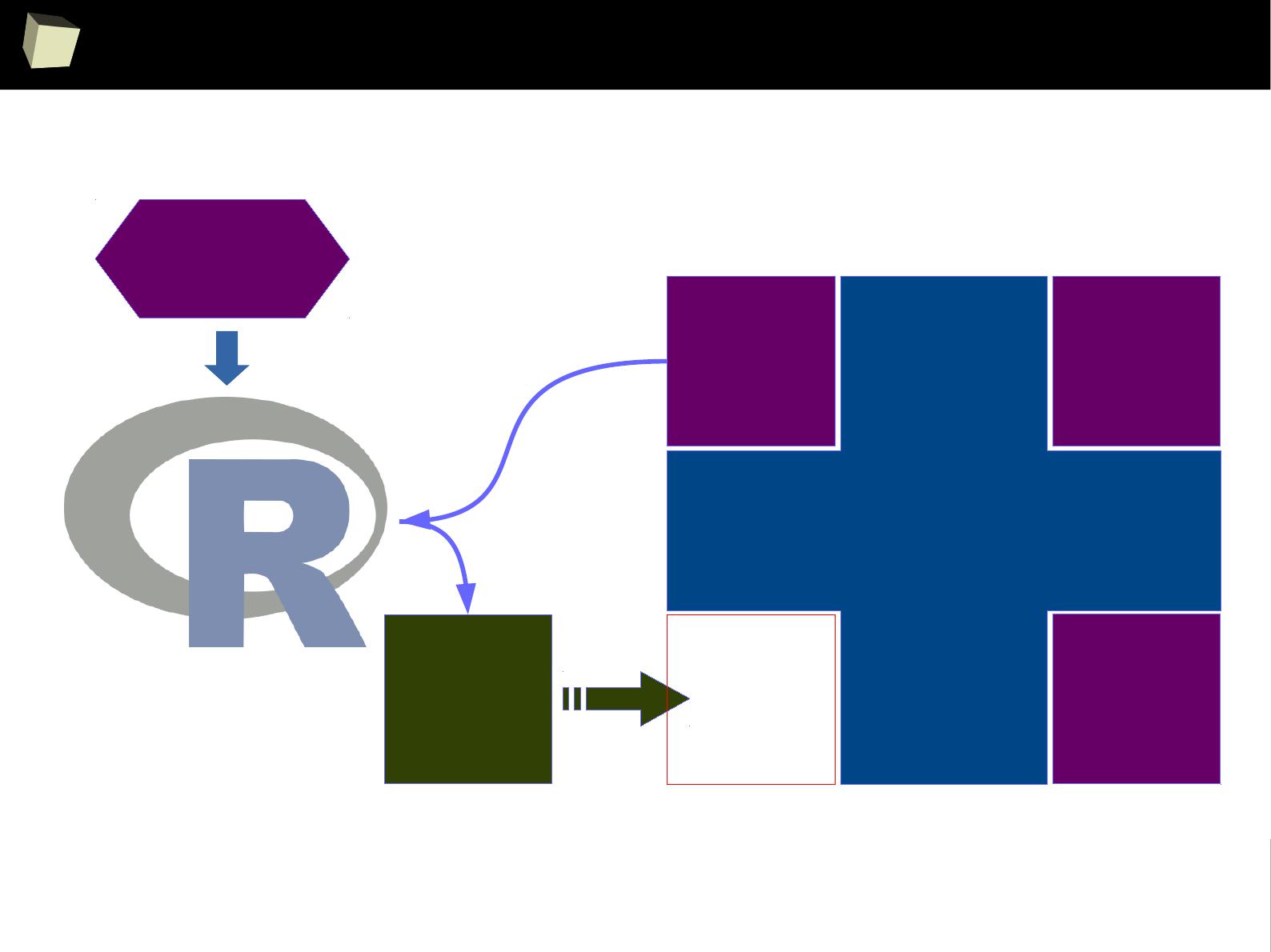

Enhance SAS with R via IML

SAS BASE

SAS BASE

SAS

SAS

IML

IML

SAS

SAS

module

module

#1

#1

SAS

SAS

module

module

#2

#2

Missing

Missing

functio-

functio-

nality

nality

Call R

Call R

from

from

SAS

SAS

/via IML/

/via IML/

Required

Required

algorithm or

algorithm or

functionality

functionality

bidirectional

data

exchange

1

3

9

SAS – R companion

A set of factors makes the cooperation between SAS and R easier:

●

Package sas7bdat enables R reading SAS SAS7BDAT datasets without

having SAS installed

●

Package SASxport enables R both reading and writing SAS Transport files

(XPT) without having SAS installed.

It is removed from CRAN yet still available in the CRAN Archive.

●

Package foreign is another option for exchanging data with SAS

●

For several statistical methods SAS-compliant variants are created:

●

Contrast: contr.SAS

●

Quantiles: type 3 → nearest even order statistic

●

Type-III Sum of Square is available in R

●

Both SAS and R share the same numbers rounding algorithm

●

Both R and SAS can call each other in batch mode from a command line

●

R can also be accessed conveniently from SAS via IML module

●

With certain packages both SAS "logs" and "listings" can be imitated.

1

4

1

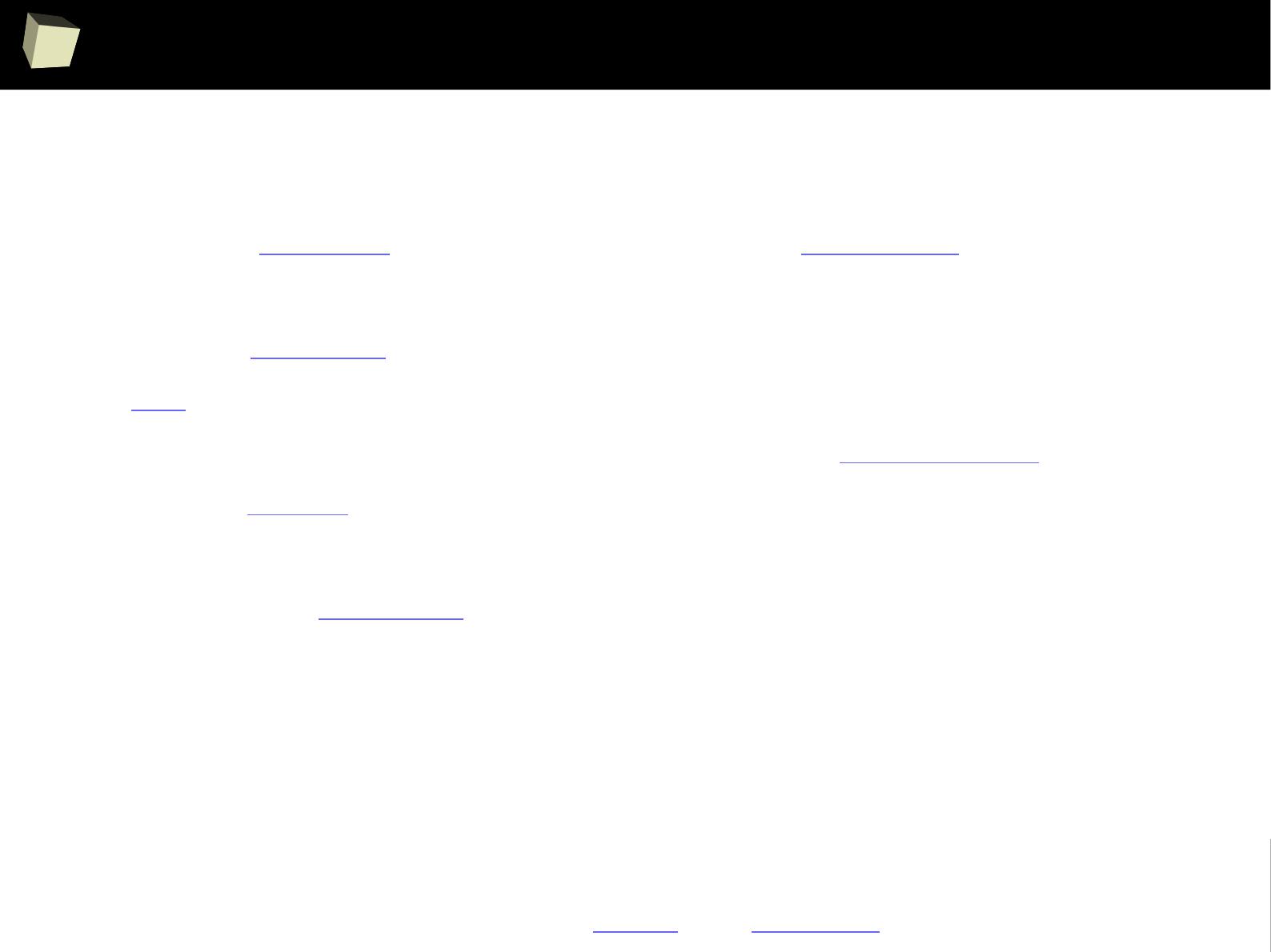

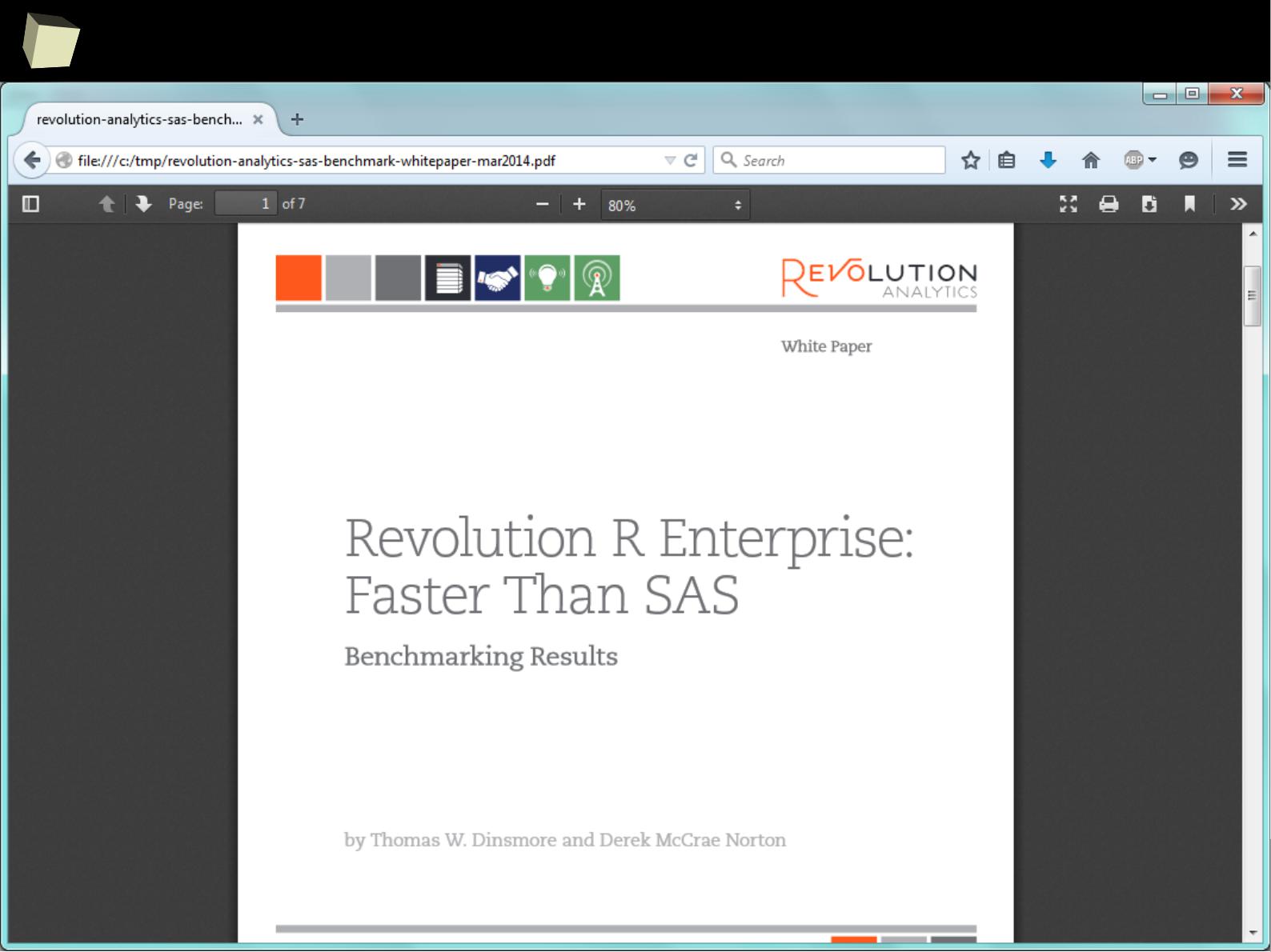

Let's MoRtal Kombat begin!

1

4

2

Let's MoRtal Kombat begin!

1

4

3

Let's MoRtal Kombat begin!

1

4

4

13 reasons why you will love GNU R

I R is (extremely) cheap. In fact - it's free :)

II R has (extremely) wide range of capabilities

III R is (widely) supported by the world of science

IV R is supported by the community

V R is (increasingly) supported by the business

VI R is able to read data in many formats

VII Interoperability is easy to achieve

VIII R is truly cross-platform

IX R offers numerous ways of presenting data

X There are many options to optimize the code

XI R is able to handle large amount of data

XII R has a set of fancy tools and IDEs

XII FDA accepted using R for drug trials!

1

4

5

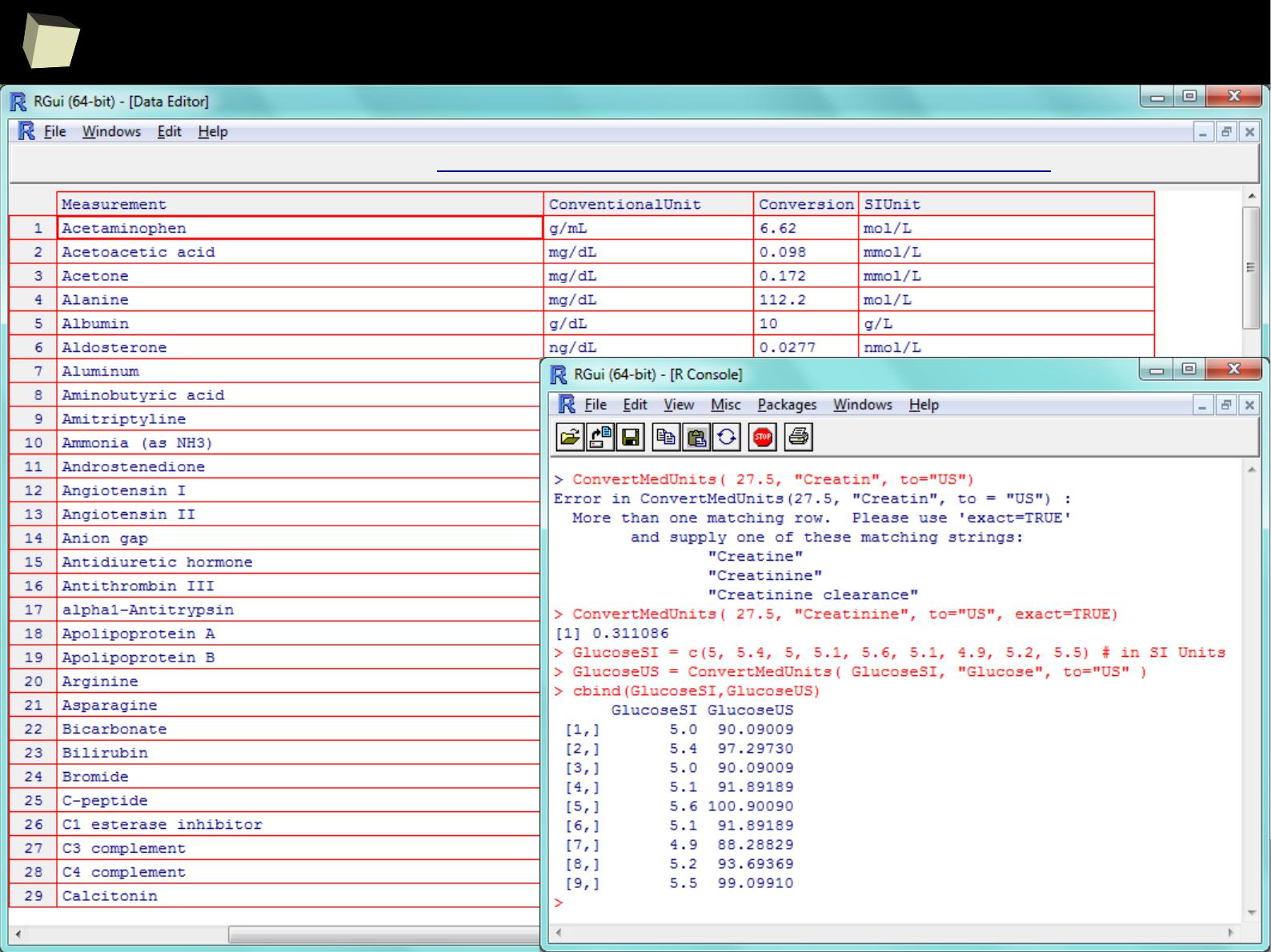

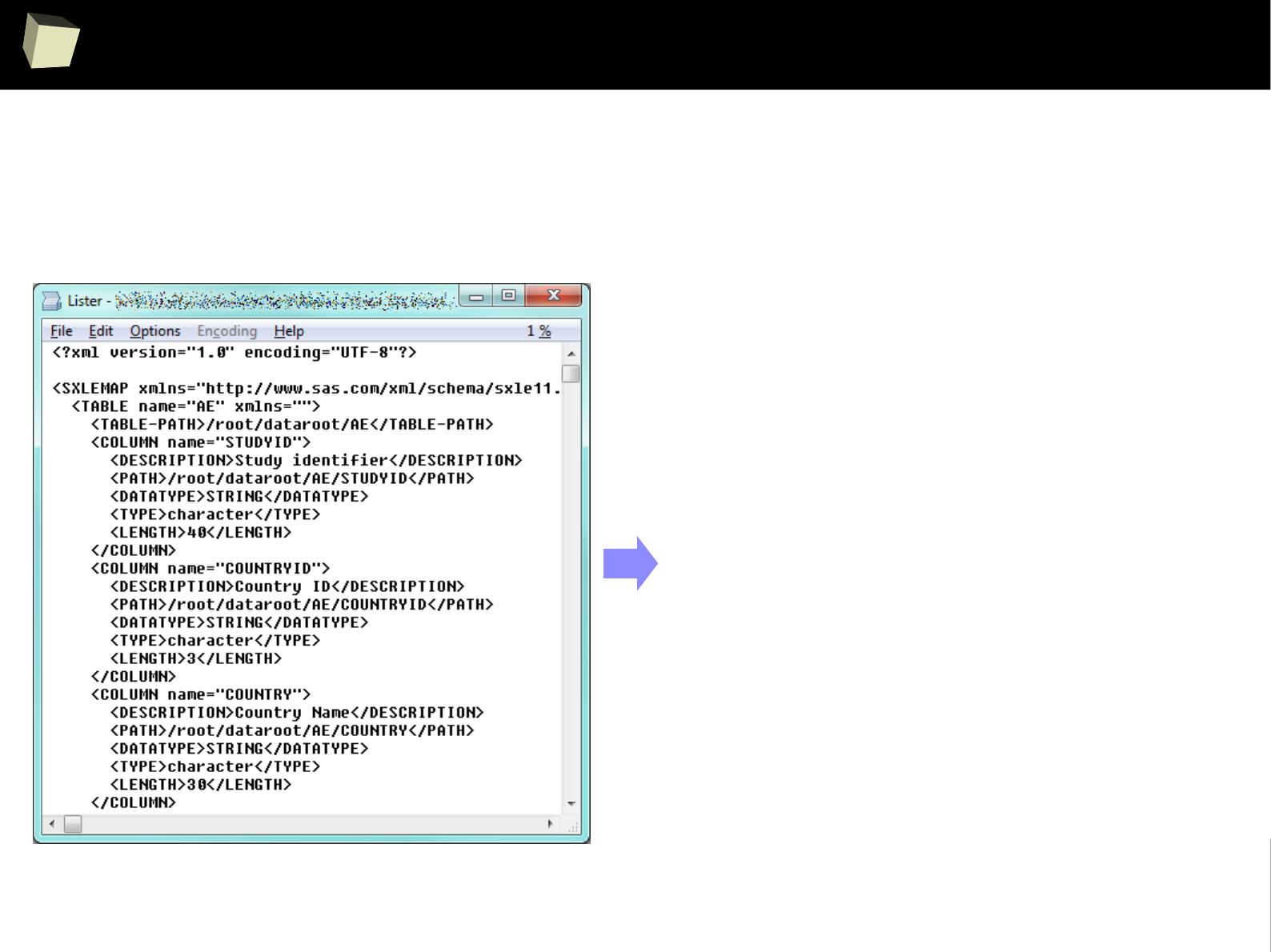

Does R speak XYZ ?

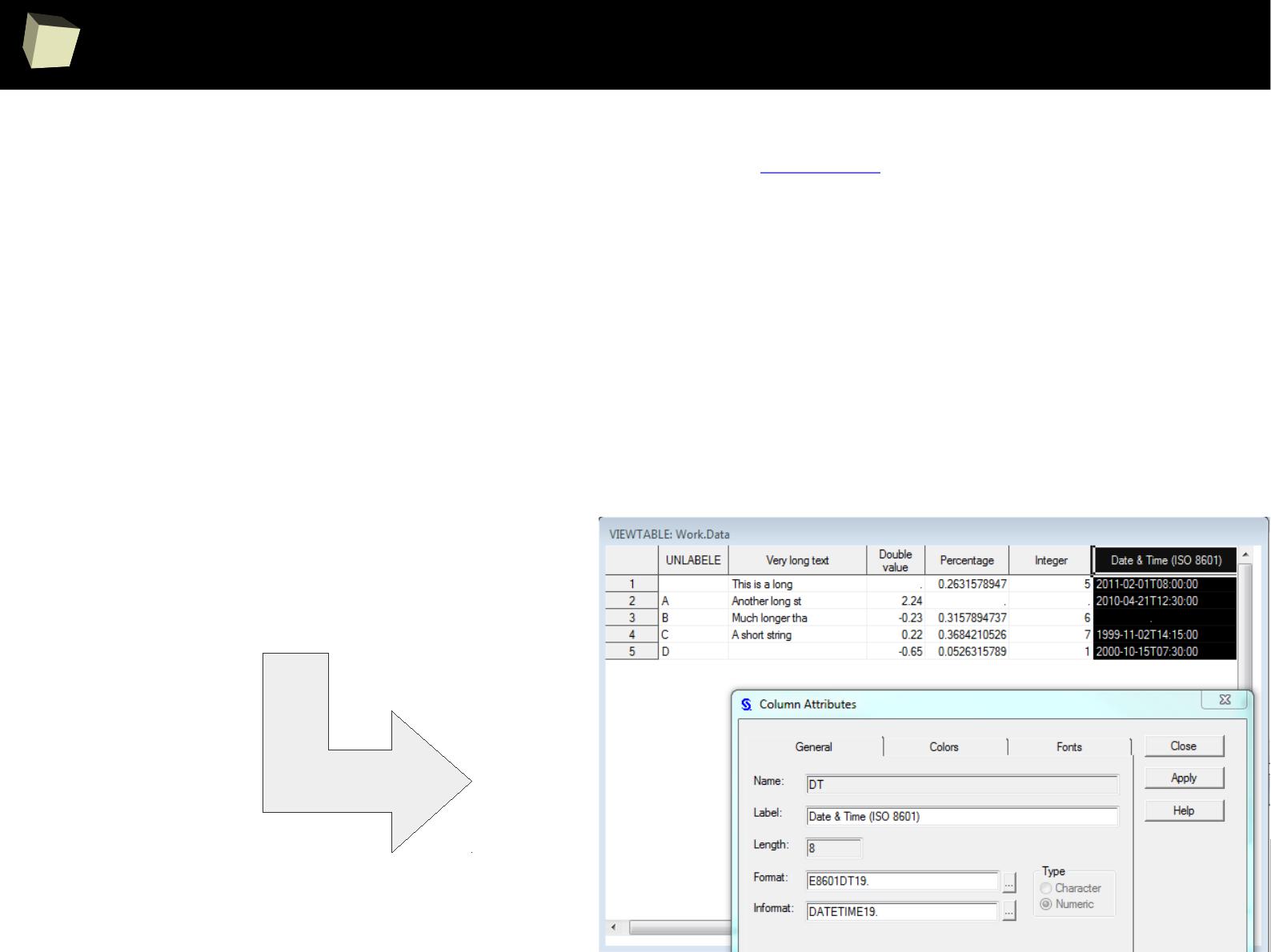

R is able to read data in many formats: MS Excel, OpenOffice Calc, Gnumeric,

DICOM, SPSS, Weka, Systat, Stata, EpiInfo, SAS datasets, SAS Transport, Systat,

Minitab, Octave, Matlab, DBF (dBase, FoxPro), CSV/TSV, XML, HTML, JSON and...

One can access data stored in various databases via native drivers (MySQL,

PostgreSQL, SQLite, MS Access) as well as ODBC and JDBC interfaces. This gives

easy access to any database for which appropriate drivers are available: Oracle,

SQL Server, MySQL, dBase, PostgreSQL, SQLite, DB/2, Informix, Firebird, etc.

1

4

6

Does R speak XYZ ?

> read.csv("c:/tmp/data.csv", header=TRUE, sep=";")

Column.1 Column.2 Column.3

1 1 First 2011-01-03

2 2 Second 2011-01-04

3 3 Third 2011-01-05

4 4 Fourth 2011-01-06

5 5 Fifth 2011-01-07

> sqldf::read.csv.sql("c:/tmp/data.csv", sql="SELECT * FROM file f WHERE f.`Column.1` BETWEEN 2 AND 3", sep=";")

Column.1 Column.2 Column.3

1 2 Second 2011-01-04

2 3 Third 2011-01-05

> openxlsx::read.xlsx("c:/tmp/data.xlsx", sheet=1, colNames=TRUE, detectDates = TRUE)

Column.1 Column.2 Column.3

1 1 First 2011-01-03

2 2 Second 2011-01-04

3 3 Third 2011-01-05

4 4 Fourth 2011-01-06

5 5 Fifth 2011-01-07

> data <- read.ods("c:/tmp/data.ods", sheet=1)

> colnames(data) <- data[1, ]

> data[-1, ]

Column 1 Column 2 Column 3

2 1 First 2011-01-03

3 2 Second 2011-01-04

4 3 Third 2011-01-05

5 4 Fourth 2011-01-06

6 5 Fifth 2011-01-0

CSV

CSV

SQL

Excel

Calc

1

4

7

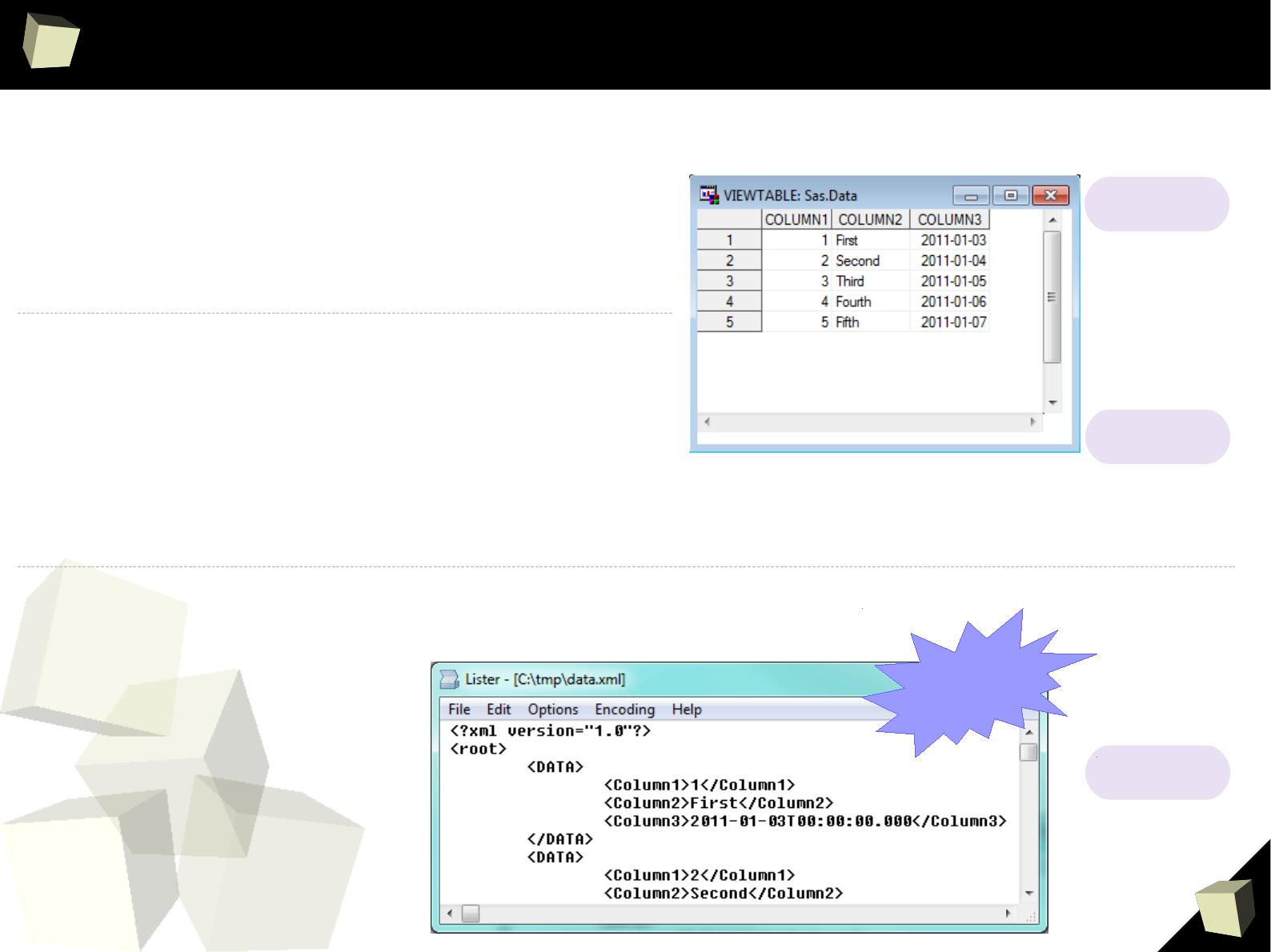

Does R speak XYZ ?

> read.xport("c:/tmp/data.xpt")

COLUMN1 COLUMN2 COLUMN3

1 1 First 2011-01-03

2 2 Second 2011-01-04

3 3 Third 2011-01-05

4 4 Fourth 2011-01-06

5 5 Fifth 2011-01-07

> data=read.sas7bdat("c:/tmp/data.sas7bdat")

> data$COLUMN4 <- as.Date(data$COLUMN3, origin = "1960-01-01")

> data

COLUMN1 COLUMN2 COLUMN3

1 1 First 2011-01-03

2 2 Second 2011-01-04

3 3 Third 2011-01-05

4 4 Fourth 2011-01-06

5 5 Fifth 2011-01-07

> xml <- xmlParse("c:/tmp/data.xml")

> xmlToDataFrame(xml, homogeneous = TRUE, collectNames = TRUE, colClasses = c("integer", "character", "character"))

> data$Column3 <- as.Date(data$Column3)

> data

Column1 Column2 Column3

1 1 First 2011-01-03

2 2 Second 2011-01-04

3 3 Third 2011-01-05

4 4 Fourth 2011-06-03

5 5 Fifth 2011-01-07

XML

SAS

Transport

SAS 7

XPATH,

DTD & XSD

supported

1

4

8

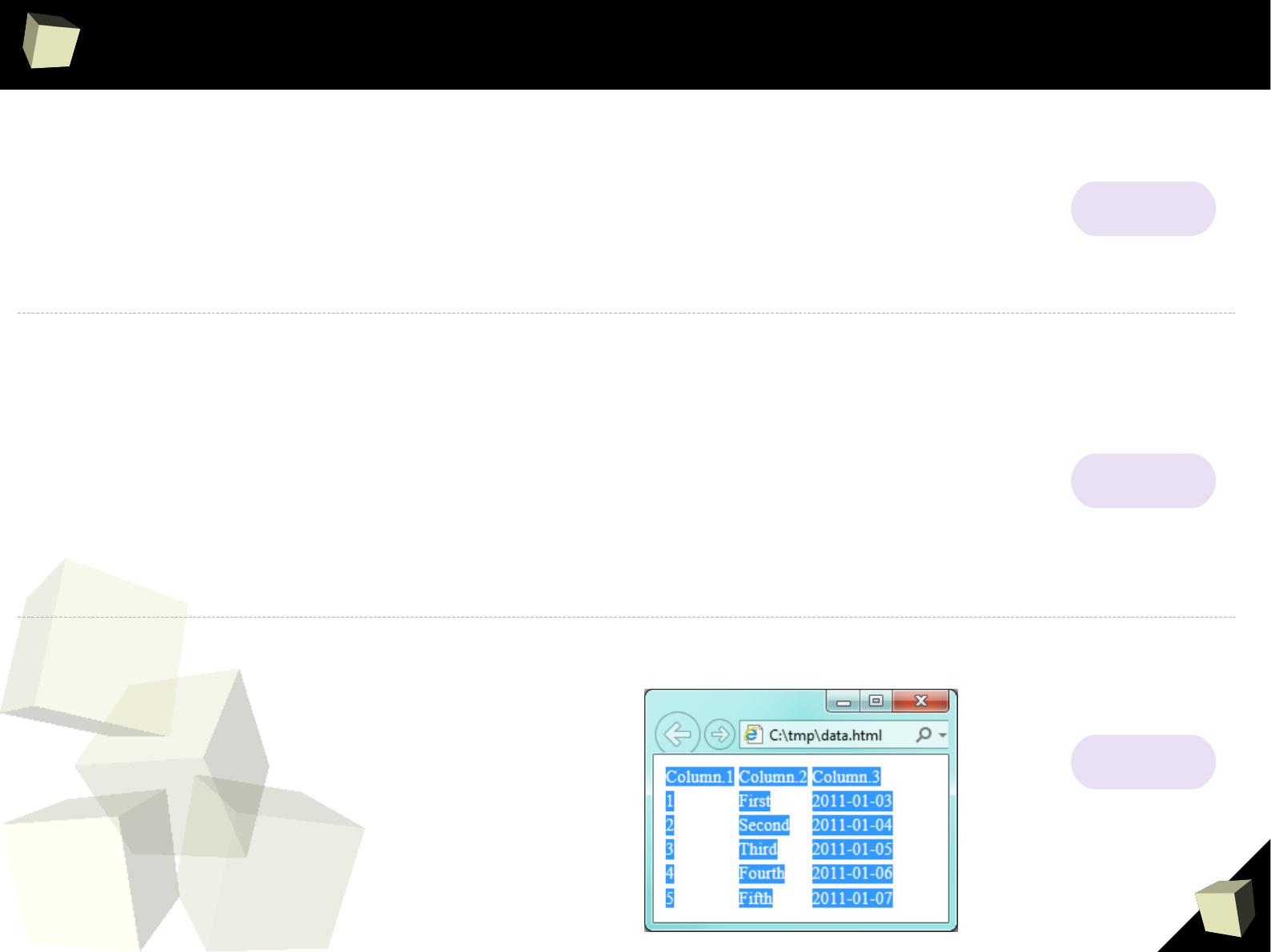

Does R speak XYZ ?

> readHTMLTable("c:/tmp/data.html", header =T, which = 1)

Column 1 Column 2 Column 3

1 1 first 2011-01-03

2 2 second 2011-01-04

3 3 third 2011-01-05

4 4 fourth 2011-01-06

5 5 fifth 2011-01-07

> json

[1] "{\"Column 1\":[\"1\",\"2\",\"3\",\"4\",\"5\"],\"Column 2\":

[\"First\",\"Second\",\"Third\",\"Fourth\",\"Fifth\"],\"Column 3\":[\"2011-01-03\",\"2011-01-04\",\"2011-01-05\",\"2011-01-

06\",\"2011-01-07\"]}"

> as.data.frame(fromJSON(json))

Column.1 Column.2 Column.3

1 1 First 2011-01-03

2 2 Second 2011-01-04

3 3 Third 2011-01-05

4 4 Fourth 2011-01-06

5 5 Fifth 2011-01-07

> read.table(file = "clipboard", sep = " ", header=TRUE) (# with some artefacts)

Column.1 Column.2 Column.3 X

1 1 First 2011-01-03 NA

2 2 Second 2011-01-04 NA

3 3 Third 2011-01-05 NA

4 4 Fourth 2011-01-06 NA

5 5 Fifth 2011-01-07 NA

HTML

JSON

Clipboard

1

5

0

13 reasons why you will love GNU R

I R is (extremely) cheap. In fact - it's free :)

II R has (extremely) wide range of capabilities

III R is (widely) supported by the world of science

IV R is supported by the community

V R is (increasingly) supported by the business

VI 1/3 :) R and relational databases

VII Interoperability is easy to achieve

VIII R is truly cross-platform

IX R offers numerous ways of presenting data

X There are many options to optimize the code

XI R is able to handle large amount of data

XII R has a set of fancy tools and IDEs

XII FDA accepted using R for drug trials!

1

5

1

The R and SQL companion

1

5

2

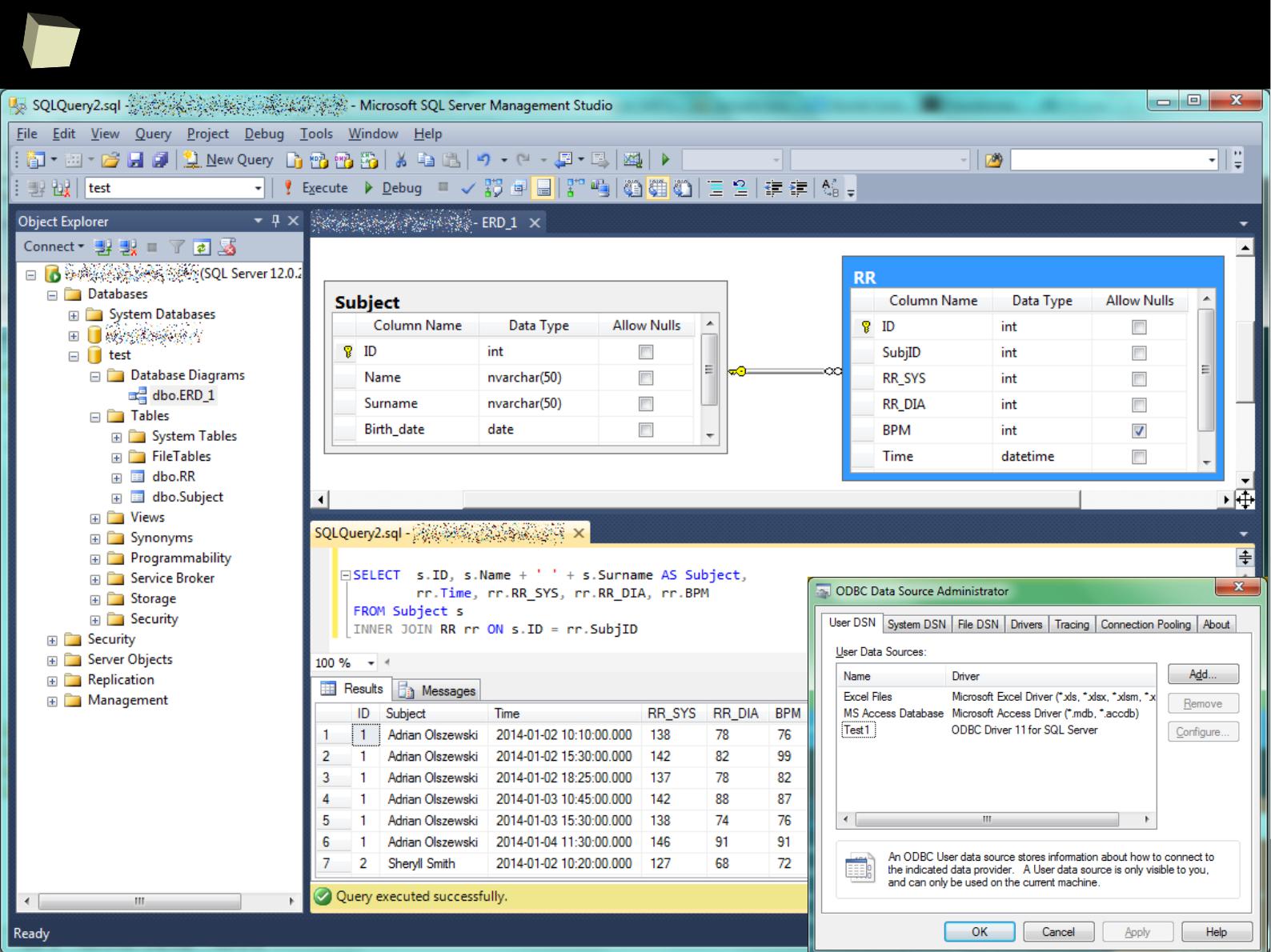

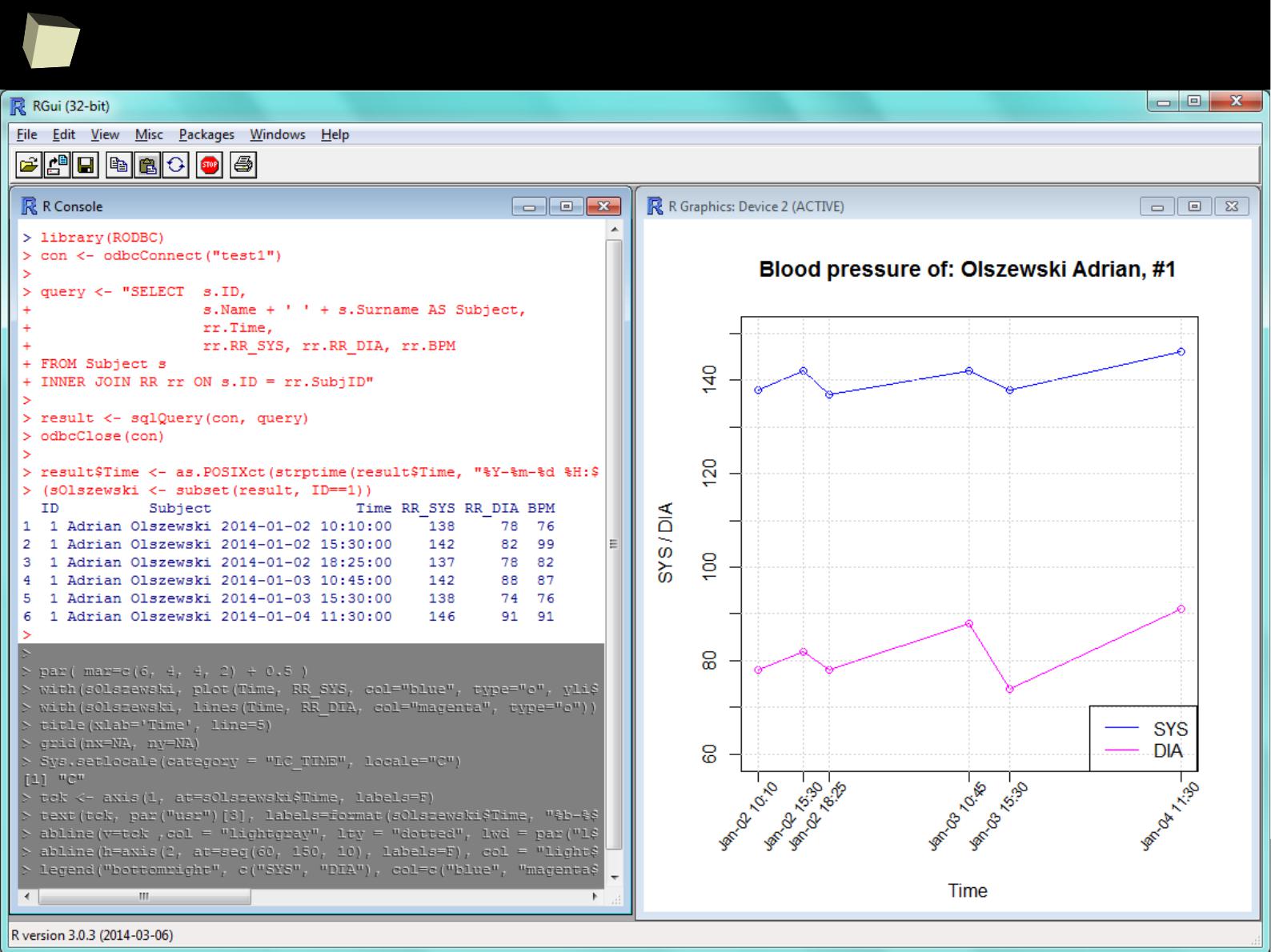

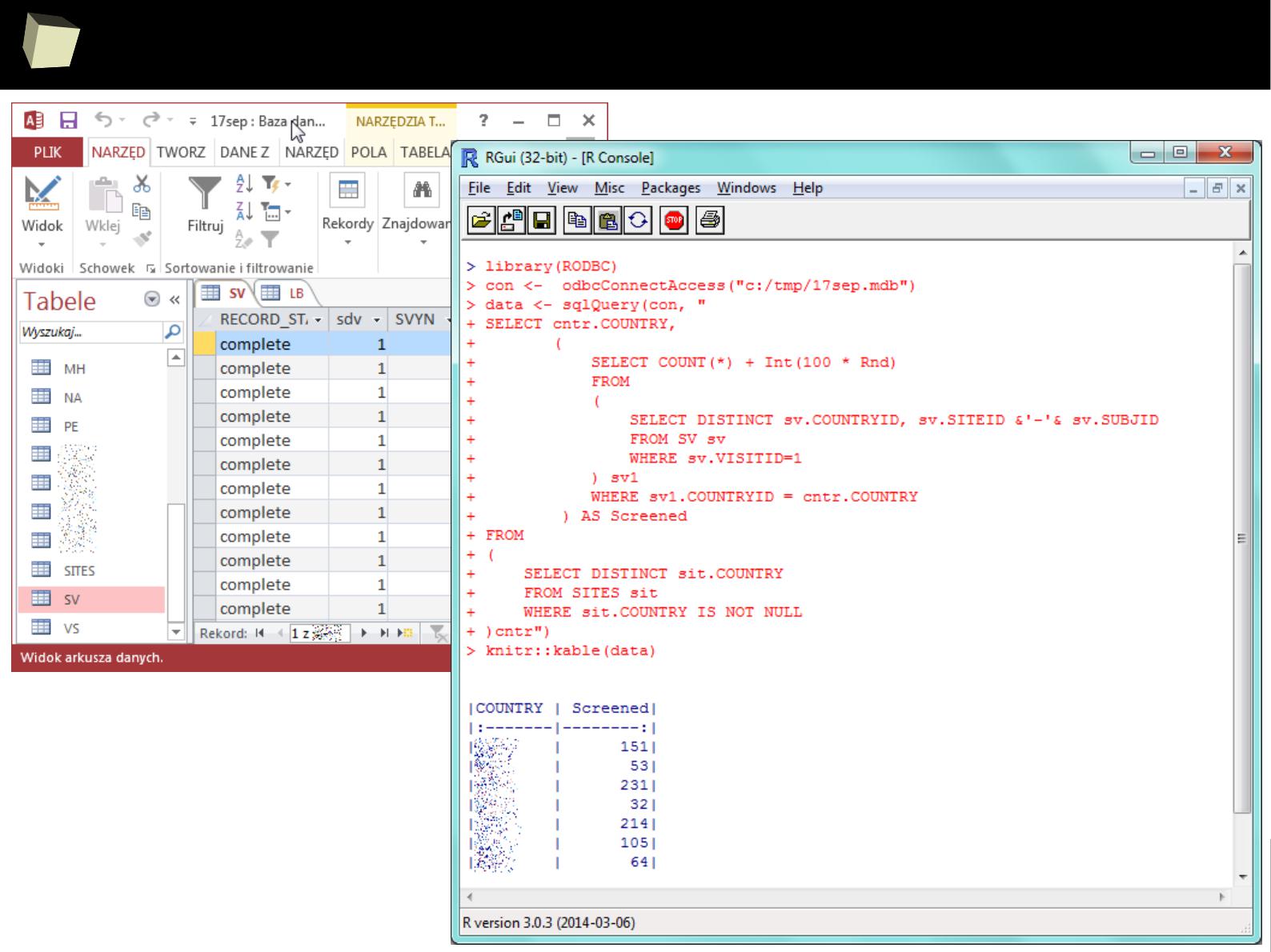

Querying data sources via ODBC ...

1

5

3

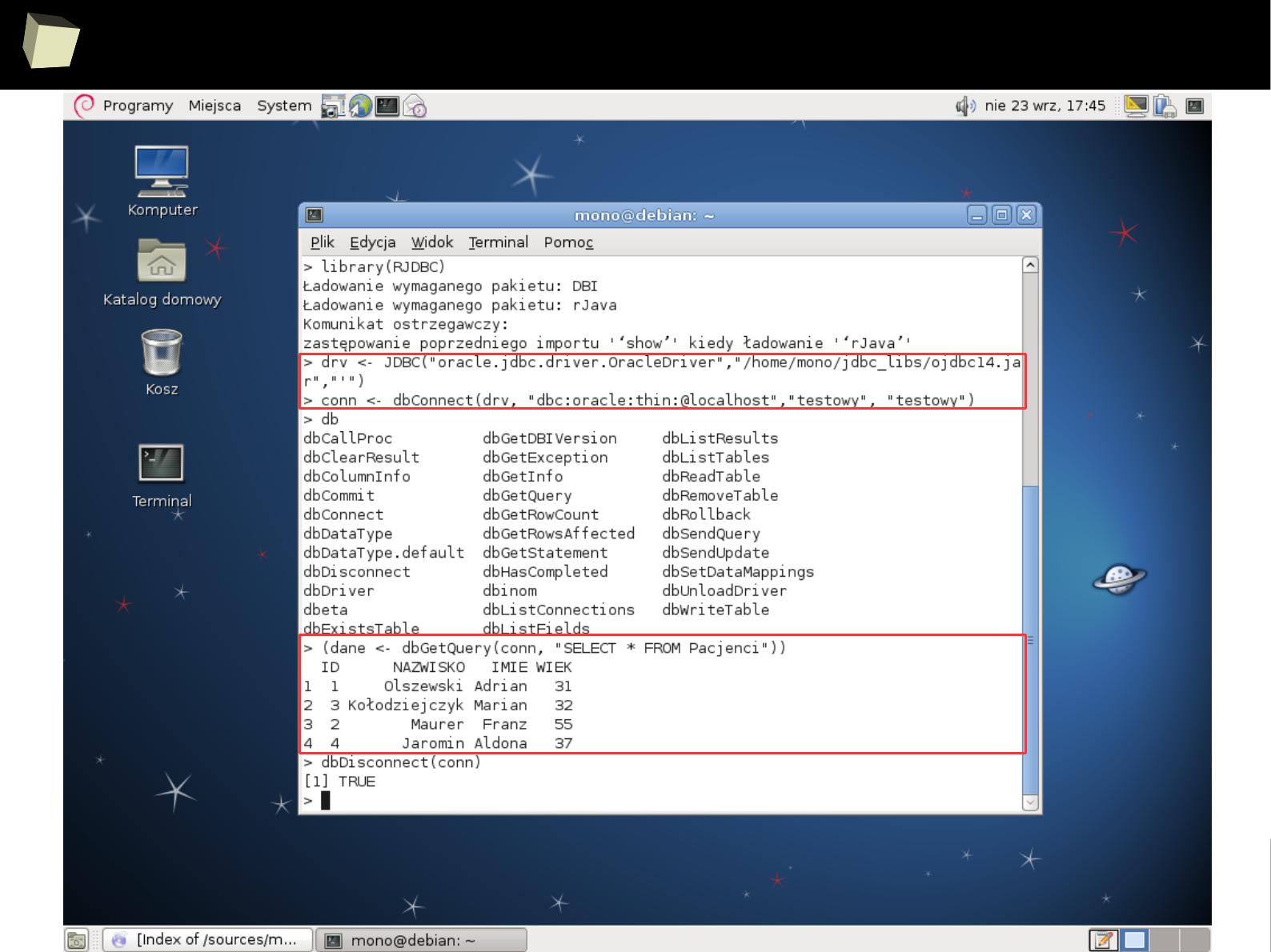

… or JDBC ...

1

5

4

… or diRect ...

1

5

6

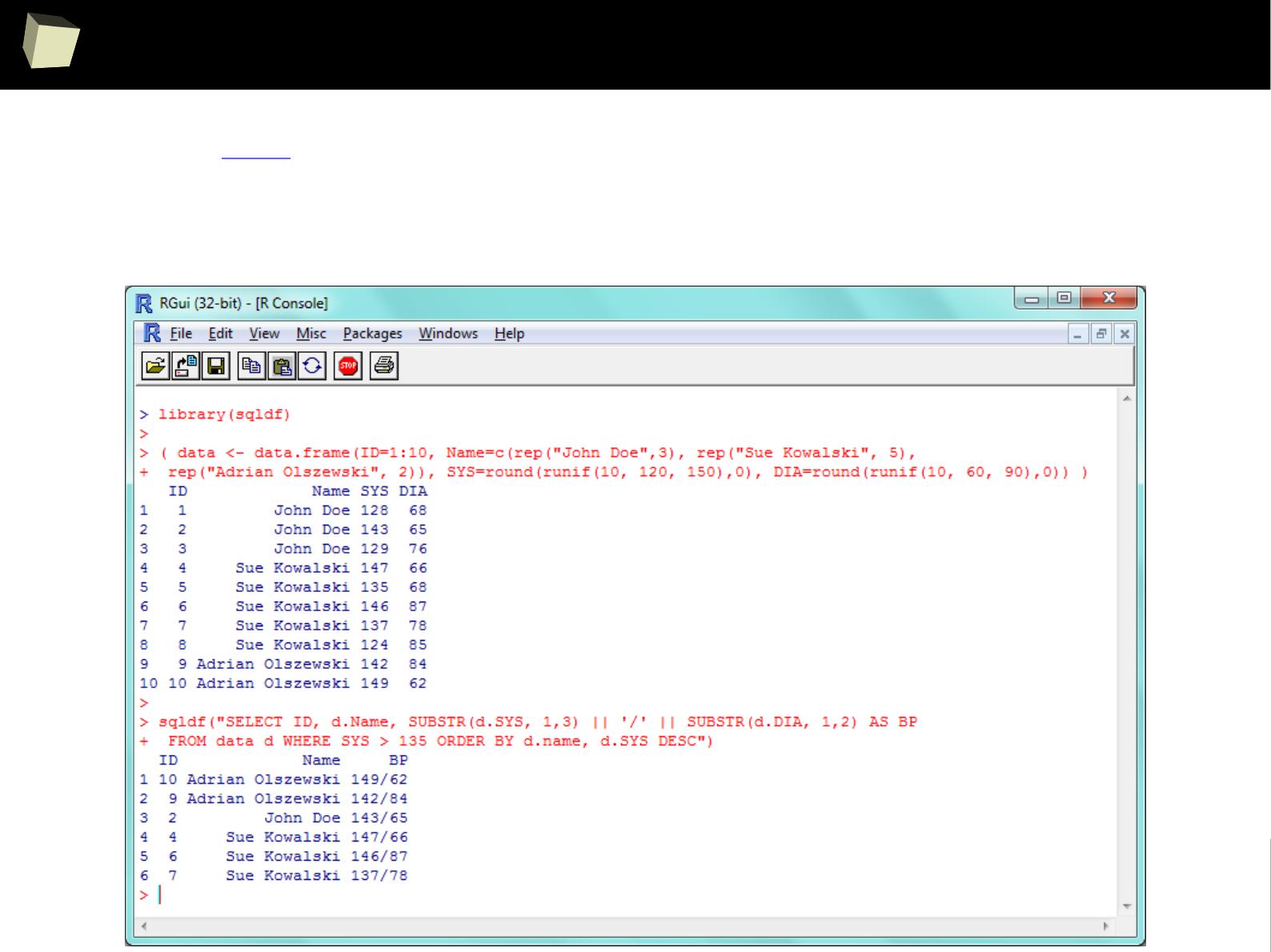

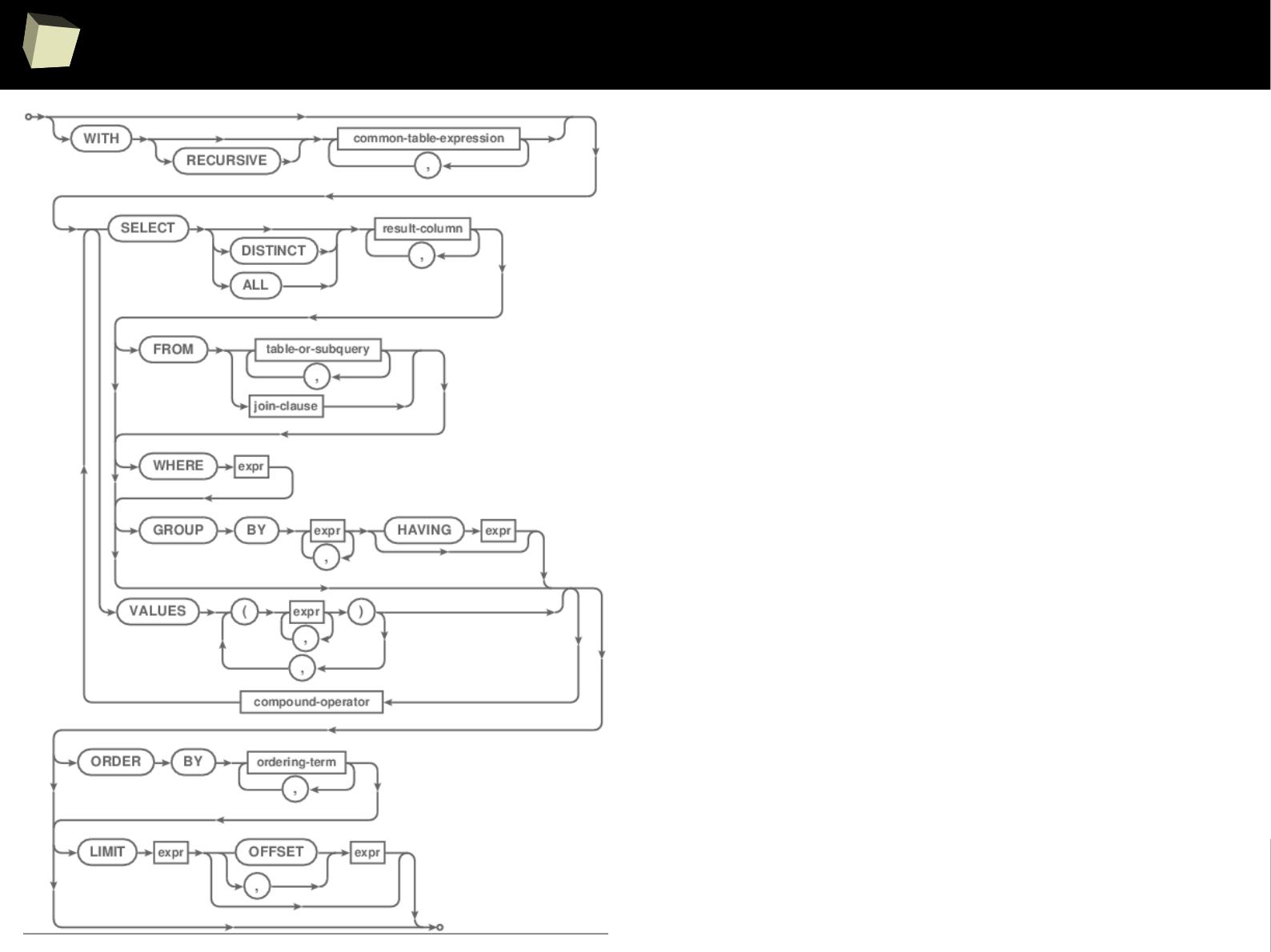

sqldf – a full blown SQL at your fingertips!

✔

Write any complex SQL queries

using your favourite syntax and engine

✔

Use complex sub-queries in:

✔

SELECT

✔

FROM / JOIN

✔

WHERE

✔

Nest up to 255 levels of sub-queries

✔

Use lots of engine-specific functions

✔

Limit results with LIMIT / OFFSET

✔

Query R data frames with spaces

and dots in column names

✔

… and much more!

1

5

7

Do I really need SQL for querying data.frames?

> attach(mydata)

> fit <- aov(y~A*B)

> summary(fit)

> layout(matrix(c(1:4), 2))

> plot(fit)

> TukeyHSD(fit)

> interaction.plot(A, B, y)

Why would one bother using SQL when R is capable itself of sub-setting

(filtering) data.frames, merging them, ordering, summarizing in sub-

groups and reshaping? There are various packages that make it easy!

SELECT Grp, Sex, COUNT(*)

FROM Table1 t1

INNER JOIN Table2 t2

ON t1.ID=t2.ID

WHERE t1.Age >=

(SELECT AVG(t.Age)

FROM Table1 t

WHERE t.ID=t1.ID)

GROUP BY Grp, Sex

HAVING COUNT(*) > 1

R is perfect for analysing data

SQL is ideal for querying data

Let everyone do what he does best!

1

5

8

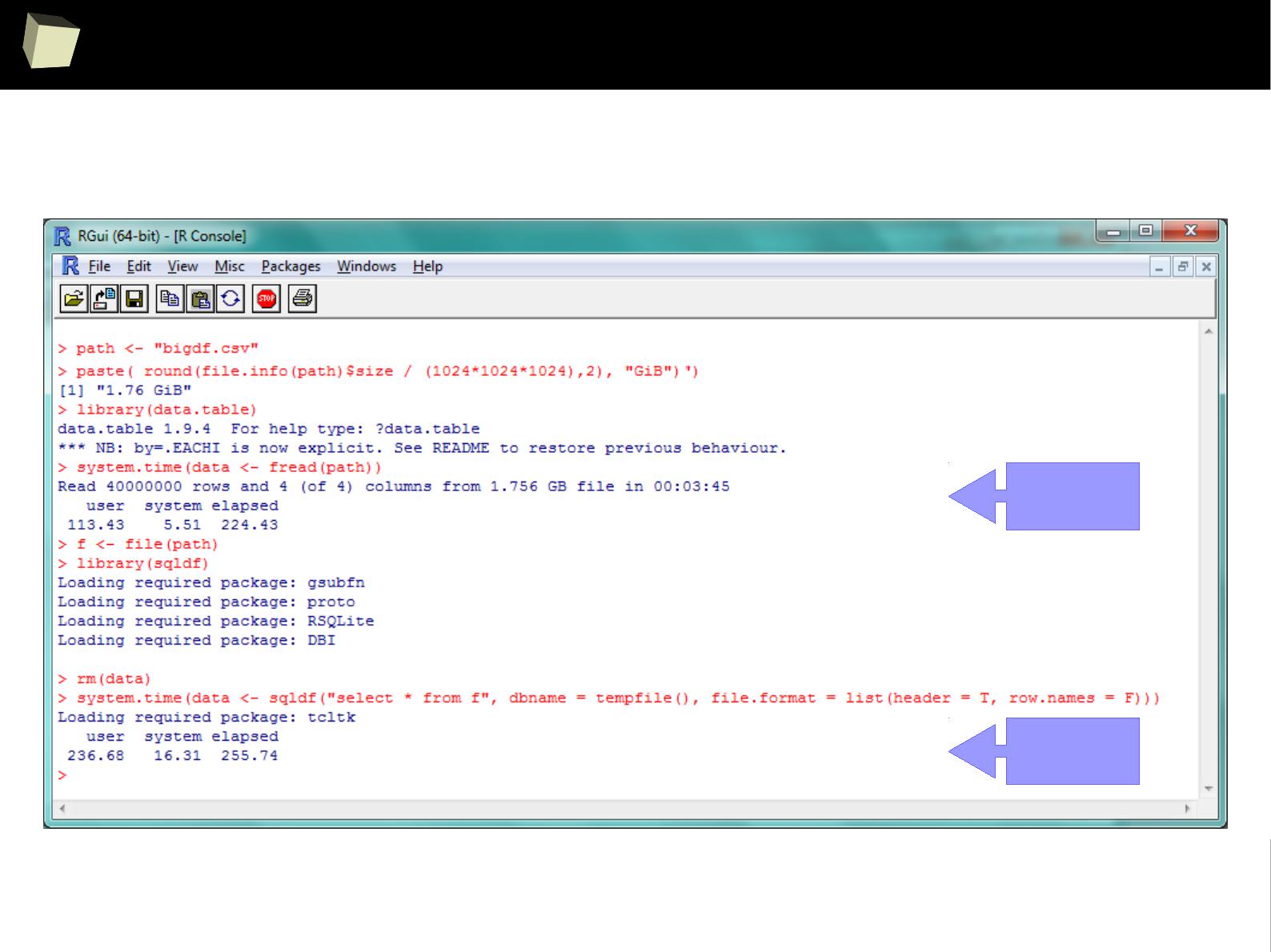

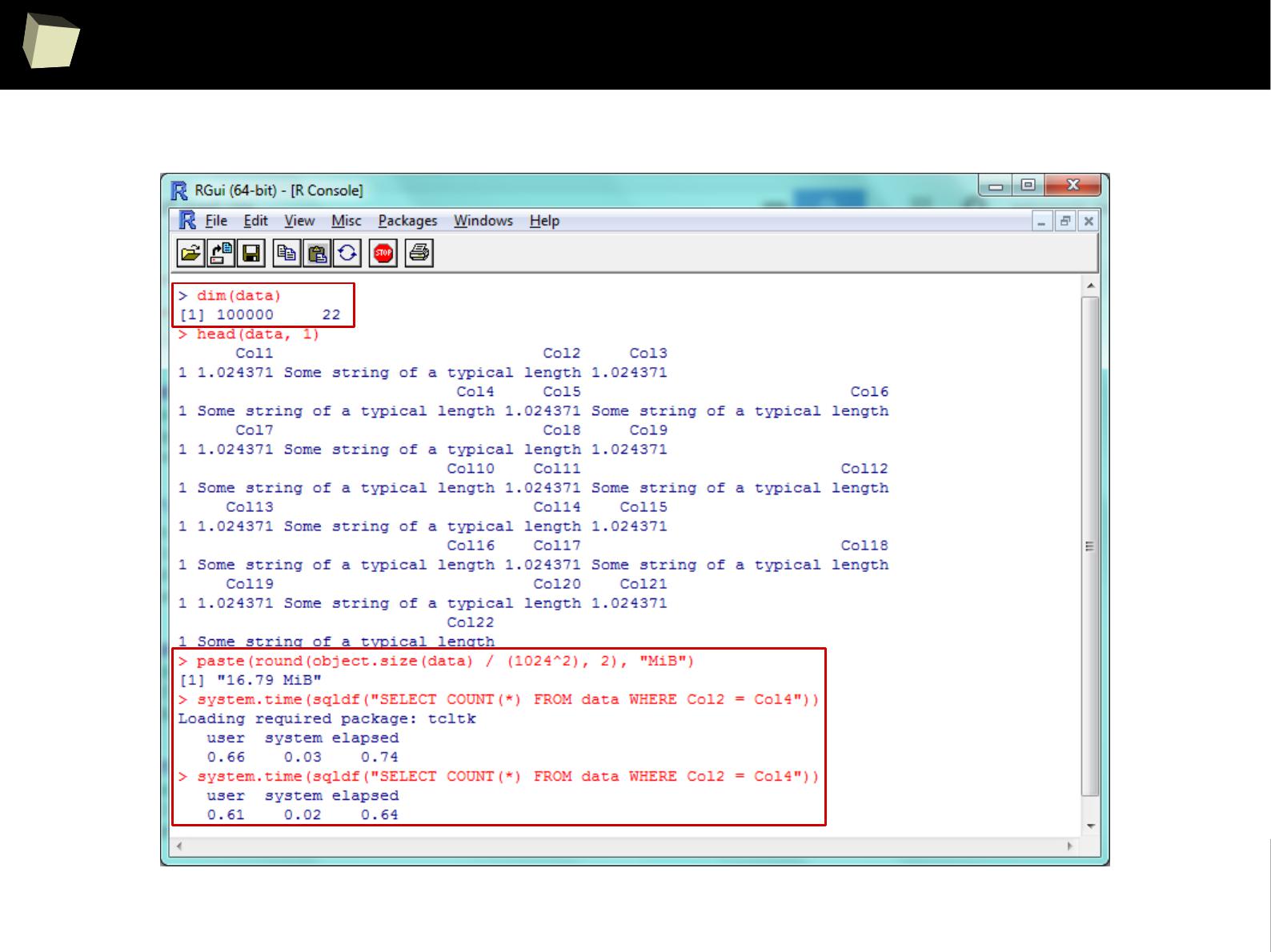

SQLite – it's quite fast!

The default, SQLite engine makes sqldf really fast.

Three simple benchmarks were run. Results are quite satisfying:

I. Reading a 1.7 GiB (4

columns

x 40,000,000

rows

) CSV file into R data.frame

●

via sqldf: 4m:20sec

●

via data.table::fread, the fastest method available in R: 3m:45sec

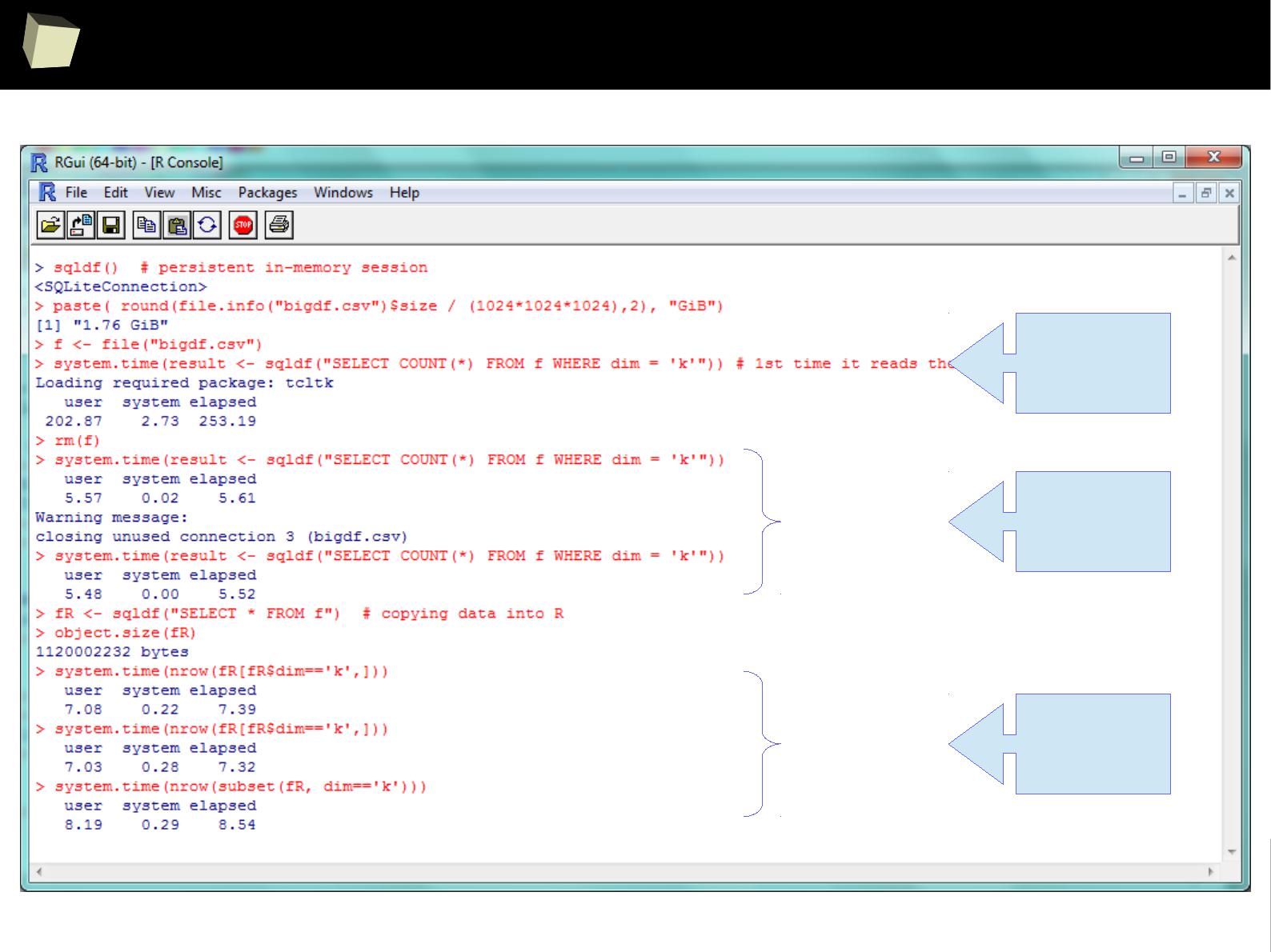

II. Querying this ~1.1 GiB dataset.

●

via sqldf: 5.6 sec

●

via the fastest, native sub-setting method: 7.4 sec

III. Querying a ~17 MiB (22

columns

x 100,000

rows

) data.frame

●

via sqldf: 0.7 sec – one won't notice any lags in everyday practice.

1 kB = 1000 B

1 kiB = 1024 B

1

5

9

SQLite – reading a huge CSV

fread

sqldf

1

6

0

SQLite – performance of querying huge dataset

reading

dataset

querying

via sqldf

querying

via native R

1

6

1

SQLite – performance of querying typical dataset

1

6

2

...much closer cooperation...

R can be invoked directly from within PostgreSQL and Microsoft SQL Server:

●

In PostgreSQL one can use the PL/R language (example)

●

In SQL Server there are three options:

●

write a CLR function which wraps invocations of a chosen

R↔.NET “connector”: R.NET, RserveCLI, StatConnector, etc.

●

create and use DCOM objects directly (sp_OACreate)

●

NEW: use native T-SQL calls to run R directly from SQL Server

This enables the user to easily perform statistical analyses directly under the

database engine and employ complex validation rules in triggers.

1

6

3

Easy data transfeR

With R and a rich set of database drivers (ODBC, JDBC, native) it is easy to

transfer data between various sources with only few lines of code.

Actually, this is even easier than in C# :)

tables <- list()

tables[["tab1"]] <- read.csv("c:/tmp/table1.csv") # source 1

tables[["tab2"]] <- read.xlsx("c:/tmp/mysheet.xlsx", sheet=2) # source 2

tables[["tab3"]] <- sqlQuery(otherCon, "SELECT * FROM Table") # source 3

…

myCon <- odbcConnectAccess("c:/tmp/my.mdb"))

for(table in names(tables)){

sqlSave(myCon, tables[[table]], tablename = table) # that's all!

}

OdbcClose(myCon)

Isn't this just beautiful?

1

6

4

13 reasons why you will love GNU R

I R is (extremely) cheap. In fact - it's free :)

II R has (extremely) wide range of capabilities

III R is (widely) supported by the world of science

IV R is supported by the community

V R is (increasingly) supported by the business

VI 2/3 :) Advanced data manipulation

VII Interoperability is easy to achieve

VIII R is truly cross-platform

IX R offers numerous ways of presenting data

X There are many options to optimize the code

XI R is able to handle large amount of data

XII R has a set of fancy tools and IDEs

XII FDA accepted using R for drug trials!

1

6

5

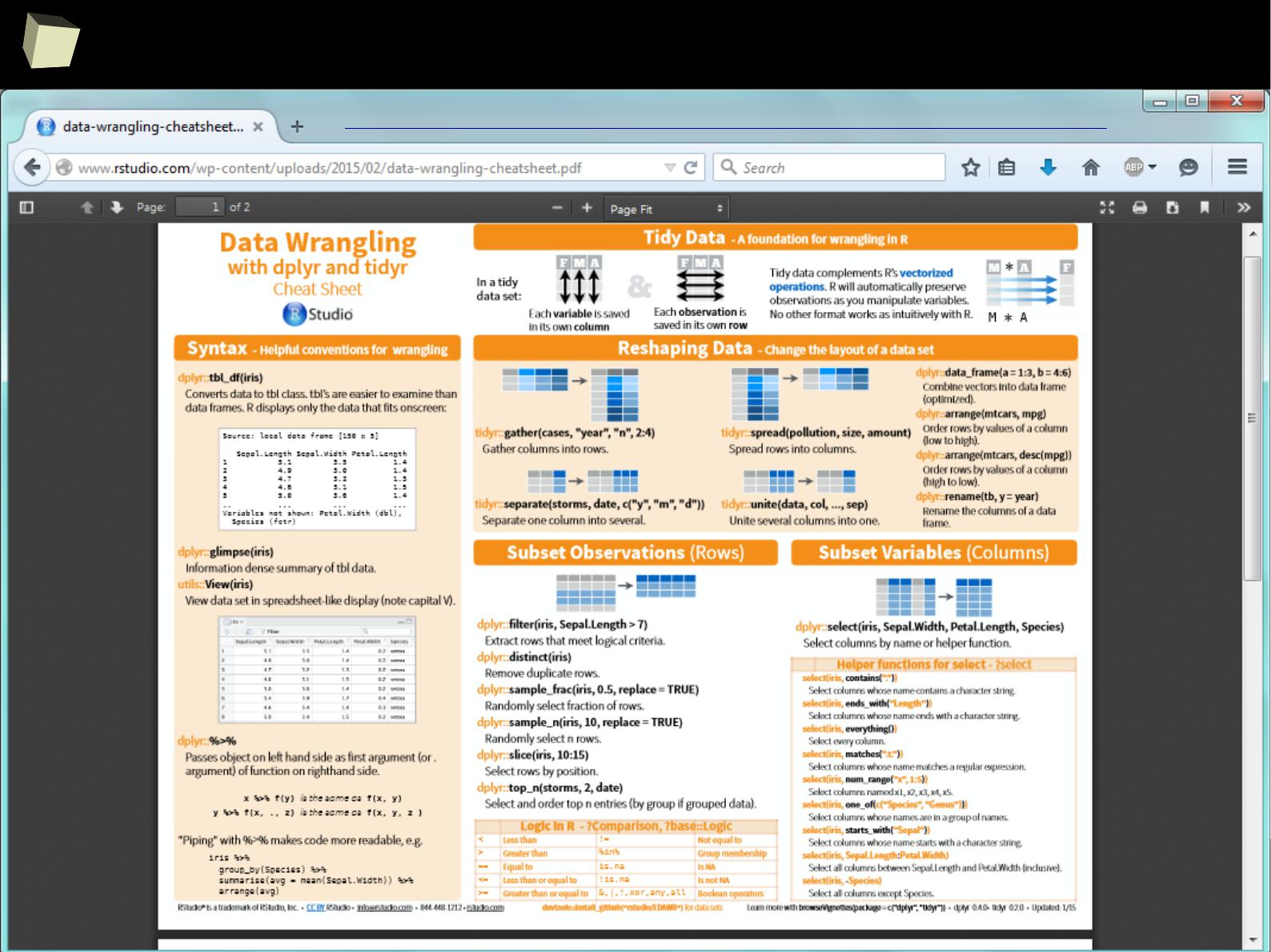

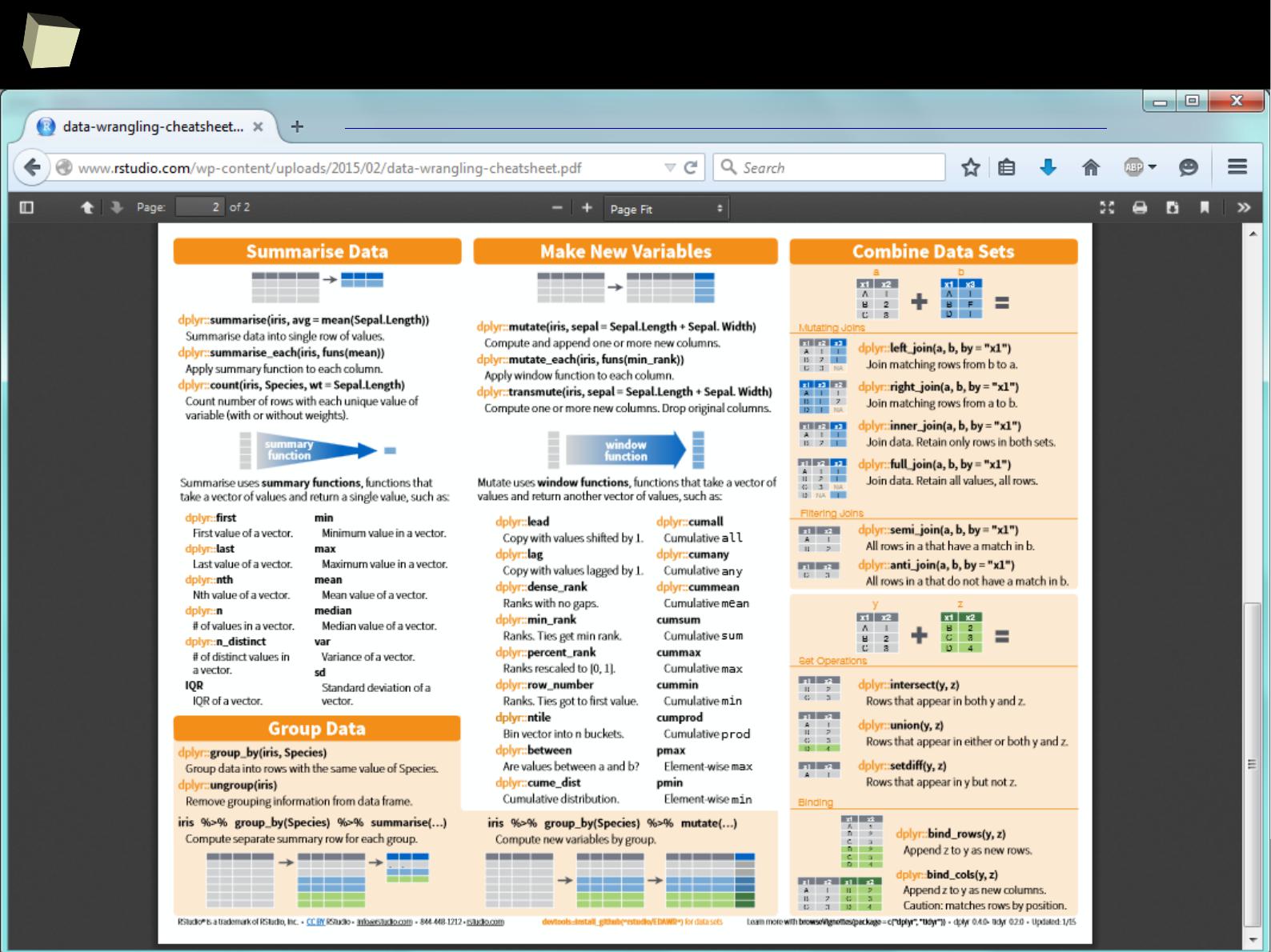

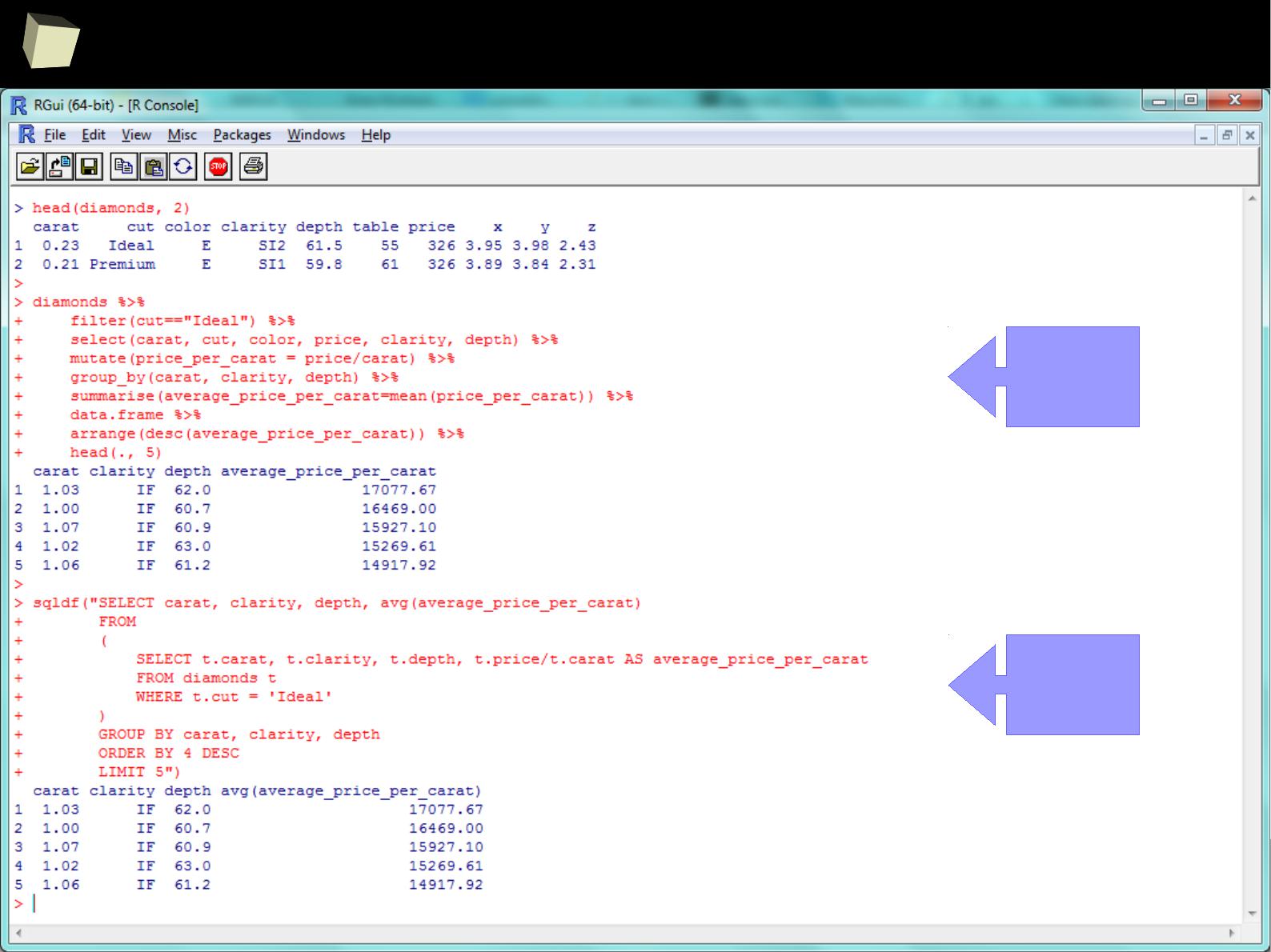

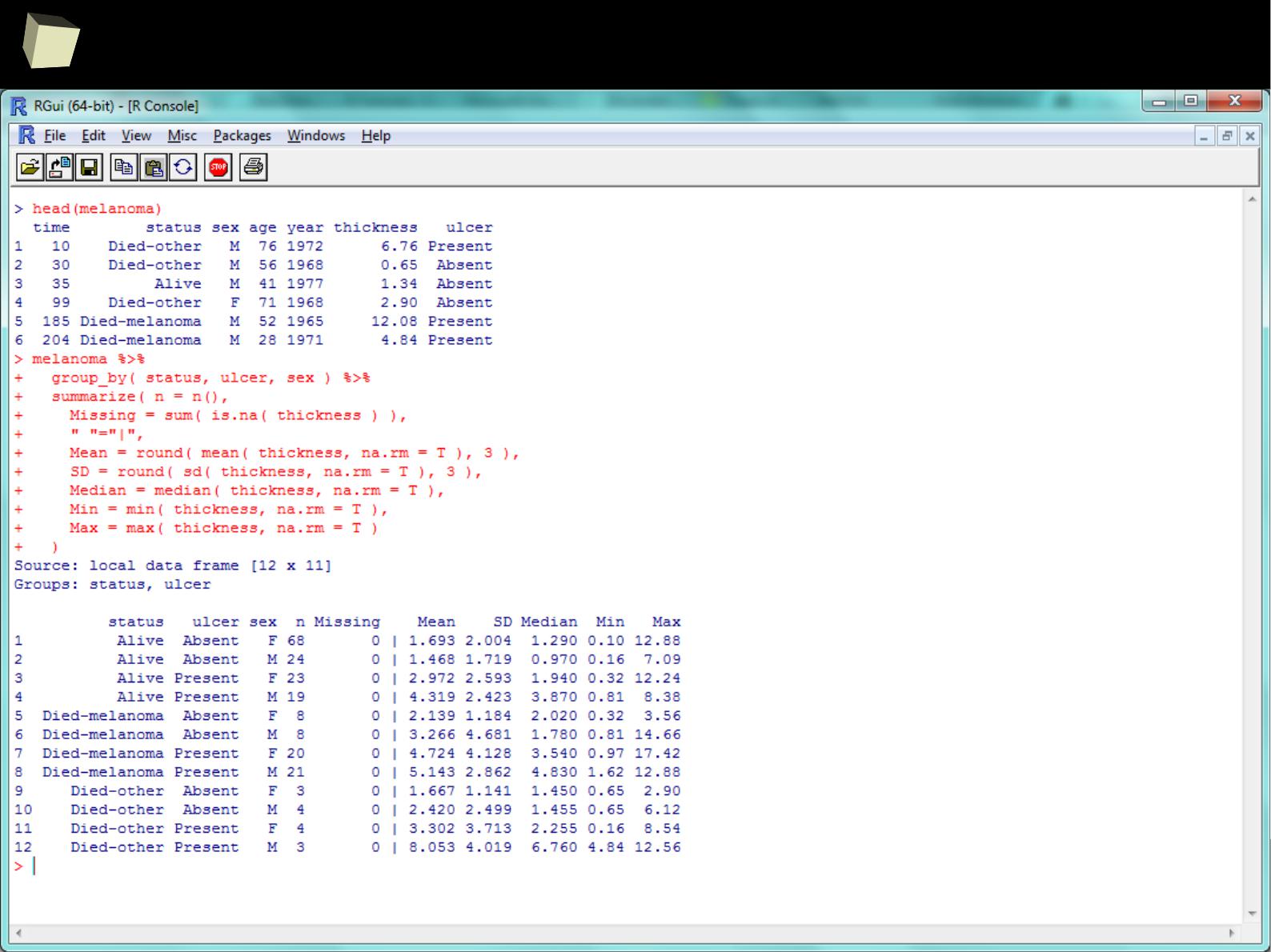

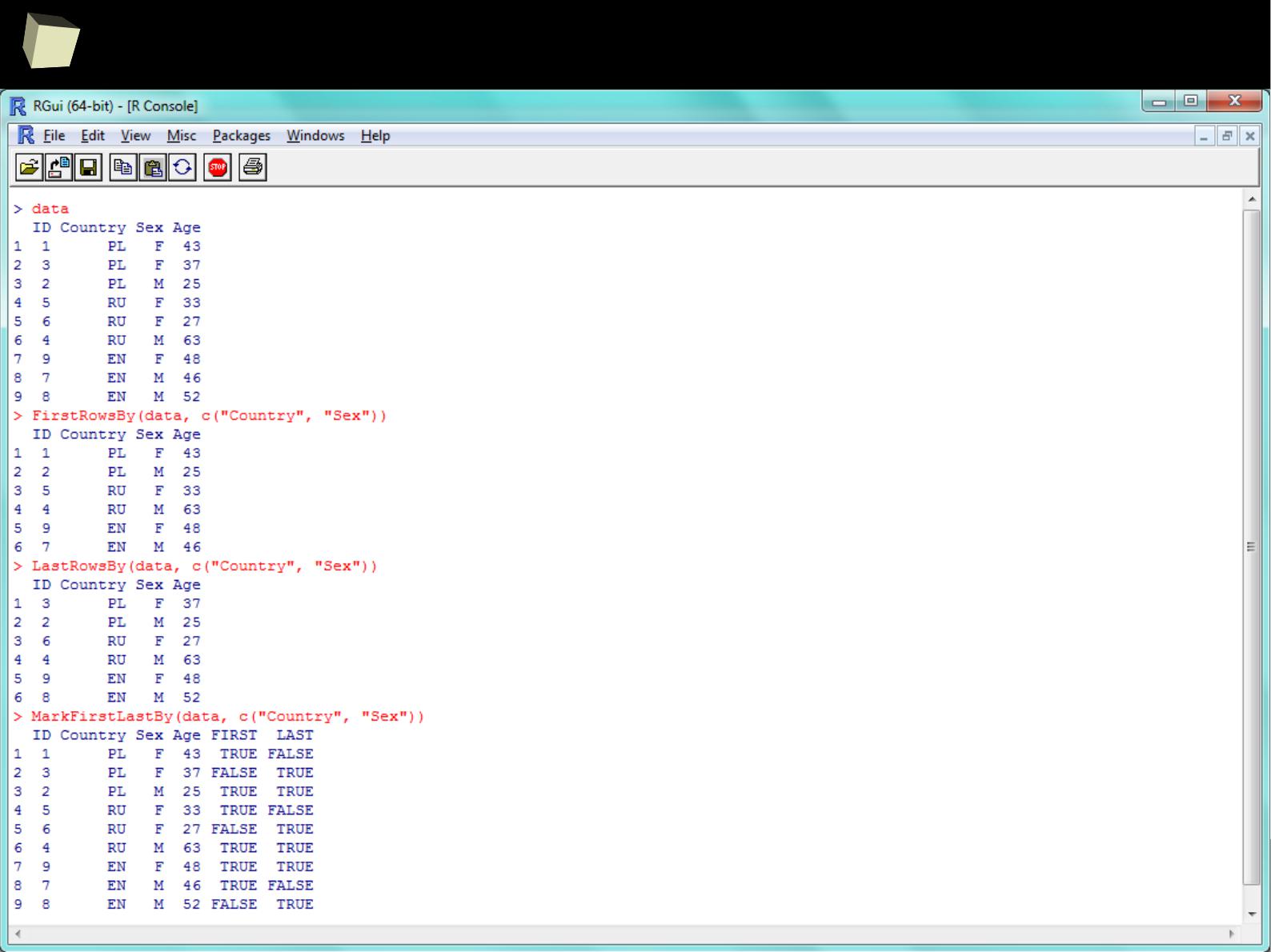

Manipulating data

SQL is extremely useful for querying data but it is not the only option the user

can choose. ddplyr, reshape2, tidyr and data.table libraries provide a rich set

of functions for advanced querying and manipulating data structures, i.e.:

●

Filtering (sub-setting) rows

●

Selecting variables (columns)

●

Adding new variables, e.g. computed (mutating and transmutating)

●

Ordering results (sorting)

●

Aggregating (grouping and summarizing)

●

Reshaping data from wide to long format and vice-versa

●

Combining (piping) the above operations by the chain operator %>%

Unquestionably, these packages are one of the top-most useful, “must-have”

packages in the analyst's toolbox.

1

6

6

Manipulating data

The chain operator %>% is a one of the most useful operators in R.

It remarkably facilitates common data processing tasks placing them in a flow.

dataset %>%

filter(column1 == 2 & column2 %in% c("A", "B", "C")) %>%

select(column1, column2, colum3) %>%

group_by(column1, column2, column3) %>%

summarize(Avg = mean(column3), SD = sd(column3)) %>%

mutate(CV = SD/Avg) %>%

arrange(desc(CV)) %>%

merge(., dictionary, by.x="column1", by.y="ID") %>%

head(., 10)

1

6

7

Querying data with dplyr

compared to SQL

dplyr

sqldf

1

6

8

With dplyr “group-by summaries” are easy!

1

6

9

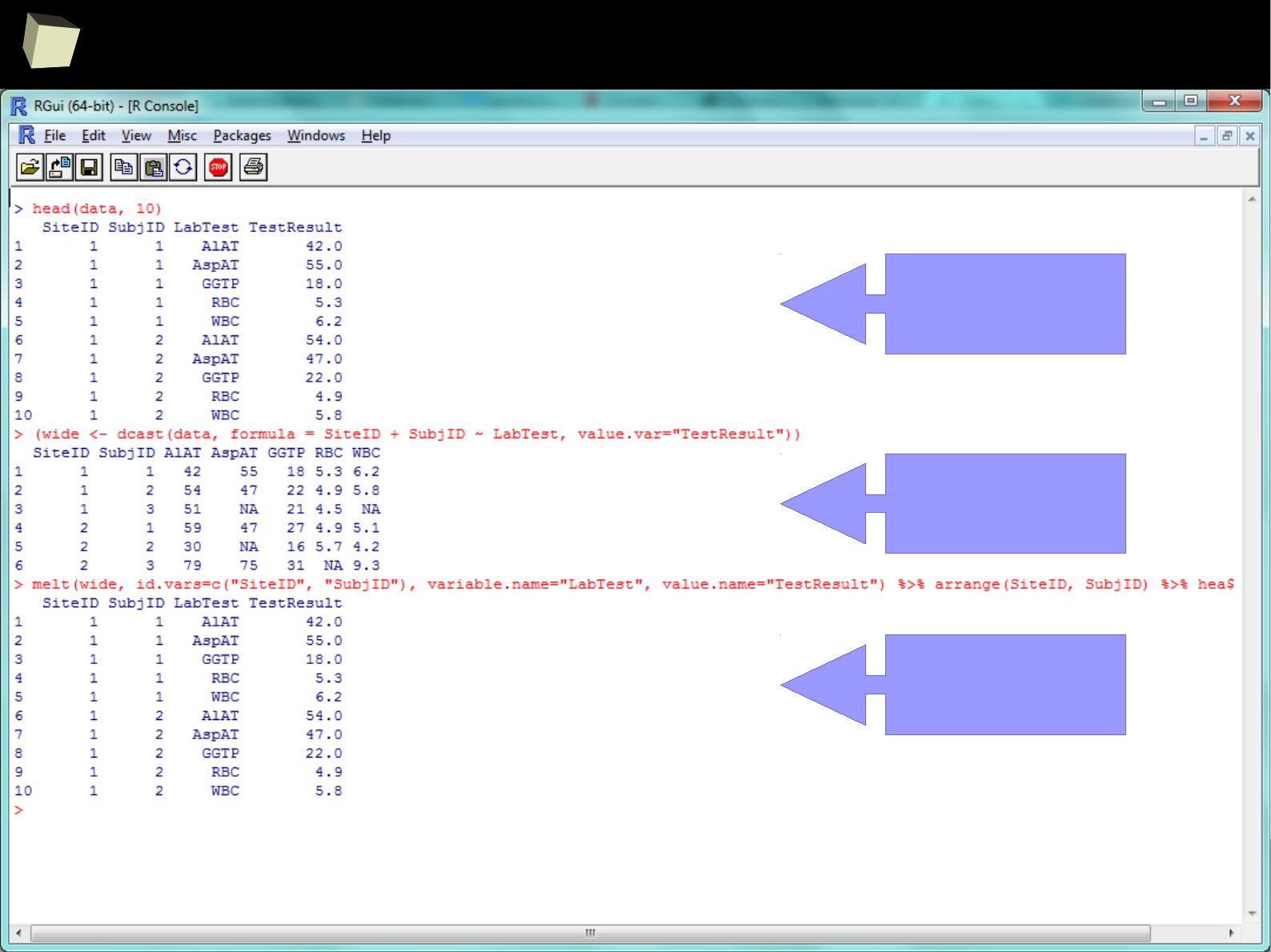

Reshaping long ↔ wide format with reshape2

source (long)

long wide→

wide long→

1

7

2

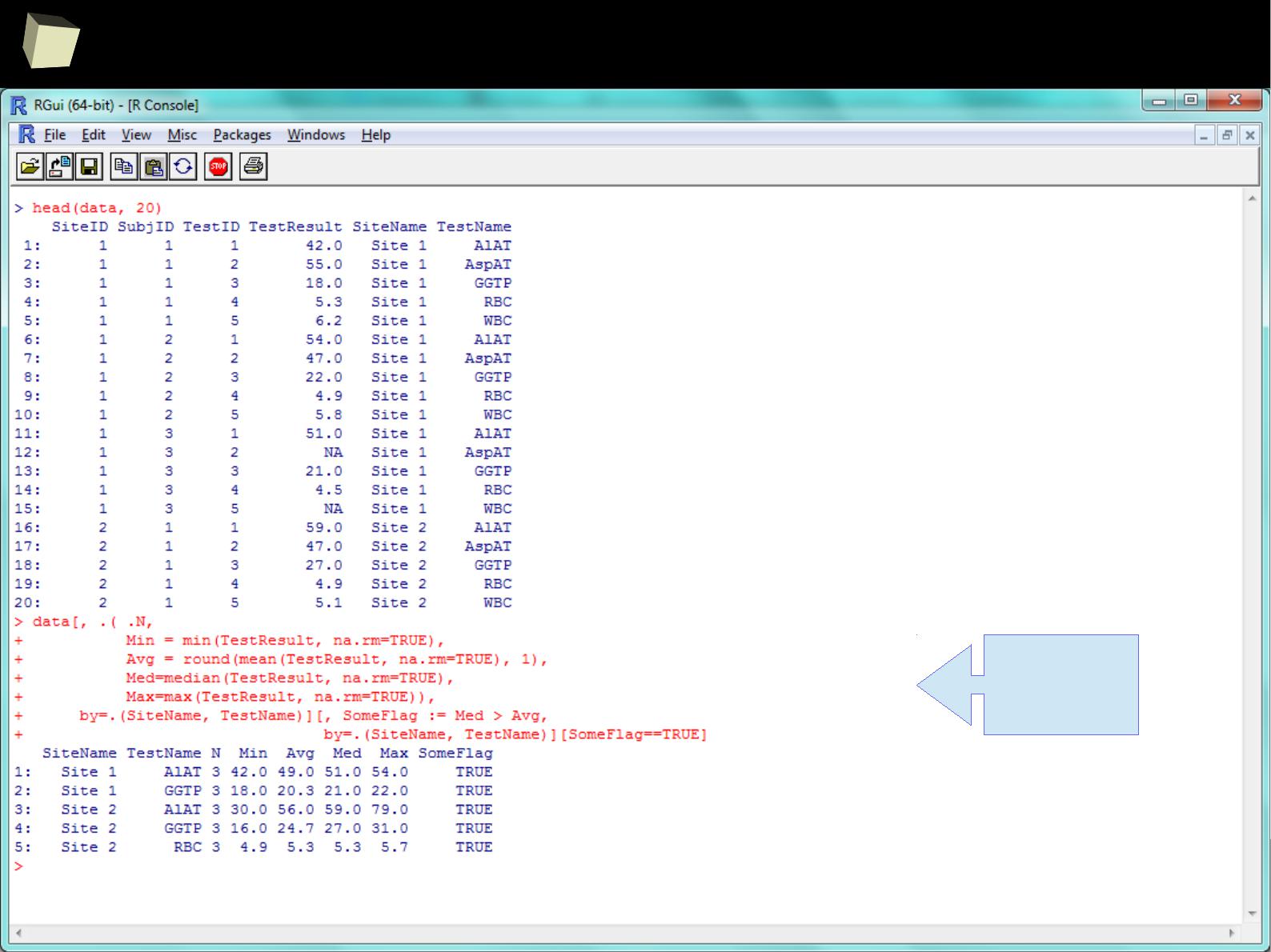

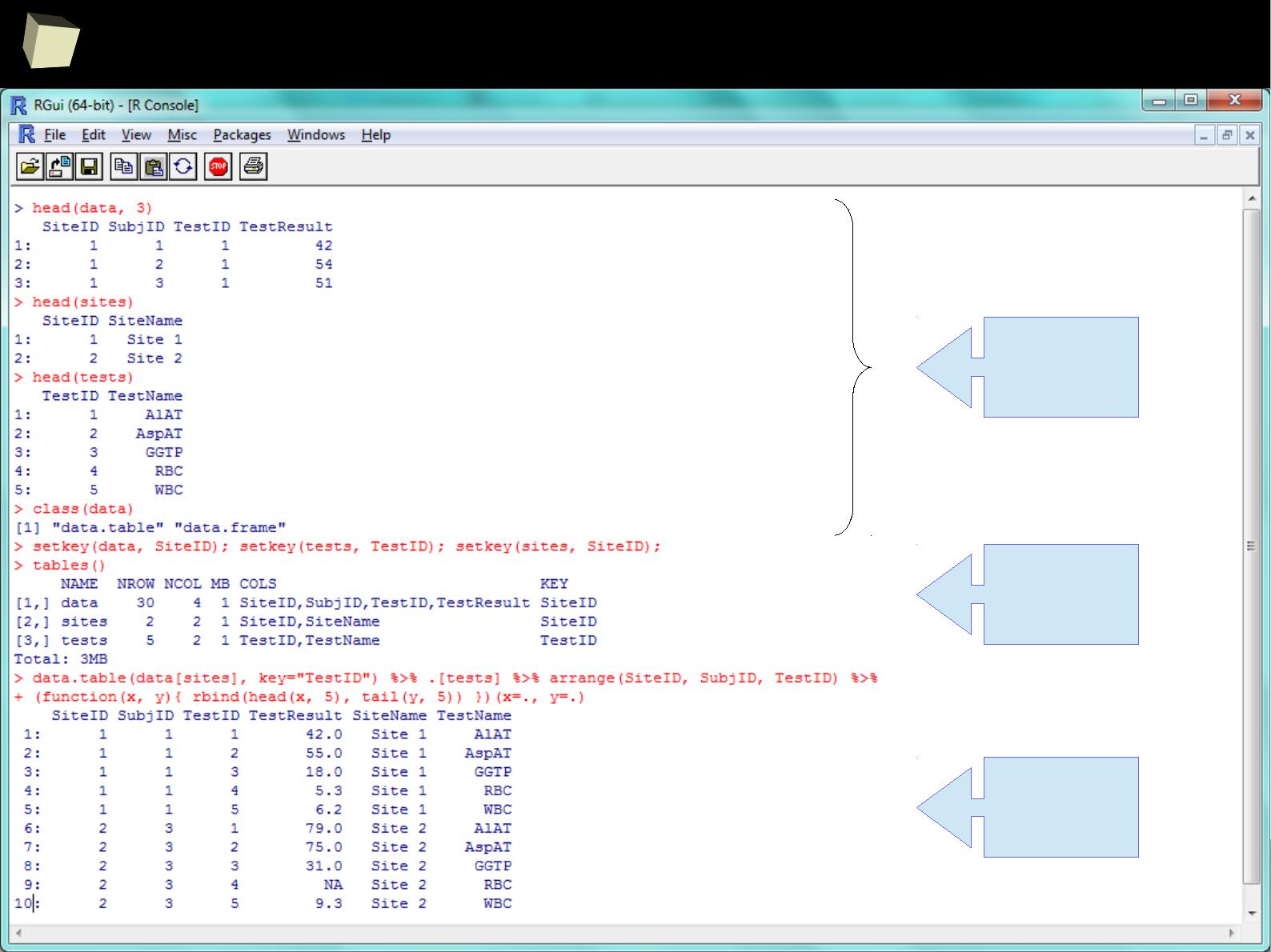

data.frame → data.table

data.table is a library providing a significant enhancement of the regular R

data.frame. It allows the user to perform really fast (indexing) in-memory

processing of huge volumes of data (>100 GB) with a relatively easy syntax.

It covers the following tasks:

●

filtering rows and selecting columns

●

adding and deleting (mutating) columns using no intermediate copies at all

●

joining tables by indexes

●

aggregating (calculations in sub-groups)

●

reading huge CSV files – this is the fastest method currently available in R

●

indexing selected content (set of columns) of a data.table

Data.tables are compatible with data.frames with some exceptions (FAQ 2.17).

1

7

3

With data.table summaries are easy too!

grouping,

mutating,

filtering

1

7

4

data.table feat. dplyr

3 x indexed

data.table

content of a

data.table

storage

merging,

chaining,

anonym. fn.

1

7

5

Manipulating data

Valuable resources:

●

http://seananderson.ca/2013/10/19/reshape.html

●

http://seananderson.ca/2014/09/13/dplyr-intro.html

●

http://www.sharpsightlabs.com/dplyr-intro-data-manipulation-with-r

●

http://cran.rstudio.com/web/packages/dplyr/vignettes/introduction.html

●

http://www.statsblogs.com/2014/02/10/how-dplyr-replaced-my-most...

●

http://www.cookbookr.com/Manipulating_data/Converting_data_between...

●

http://datatable.r-forge.r-project.org/datatable-faq.pdf

●

http://s3.amazonaws.com/assets.datacamp.com/img/blog/data+table...

●

http://github.com/Rdatatable/data.table/wiki/Benchmarks-%3A-Grouping

1

7

6

13 reasons why you will love GNU R

I R is (extremely) cheap. In fact - it's free :)

II R has (extremely) wide range of capabilities

III R is (widely) supported by the world of science

IV R is supported by the community

V R is (increasingly) supported by the business

VI R is able to read data in many formats

VII Interoperability is easy to achieve

VIII R is truly cross-platform

IX R offers numerous ways of presenting data

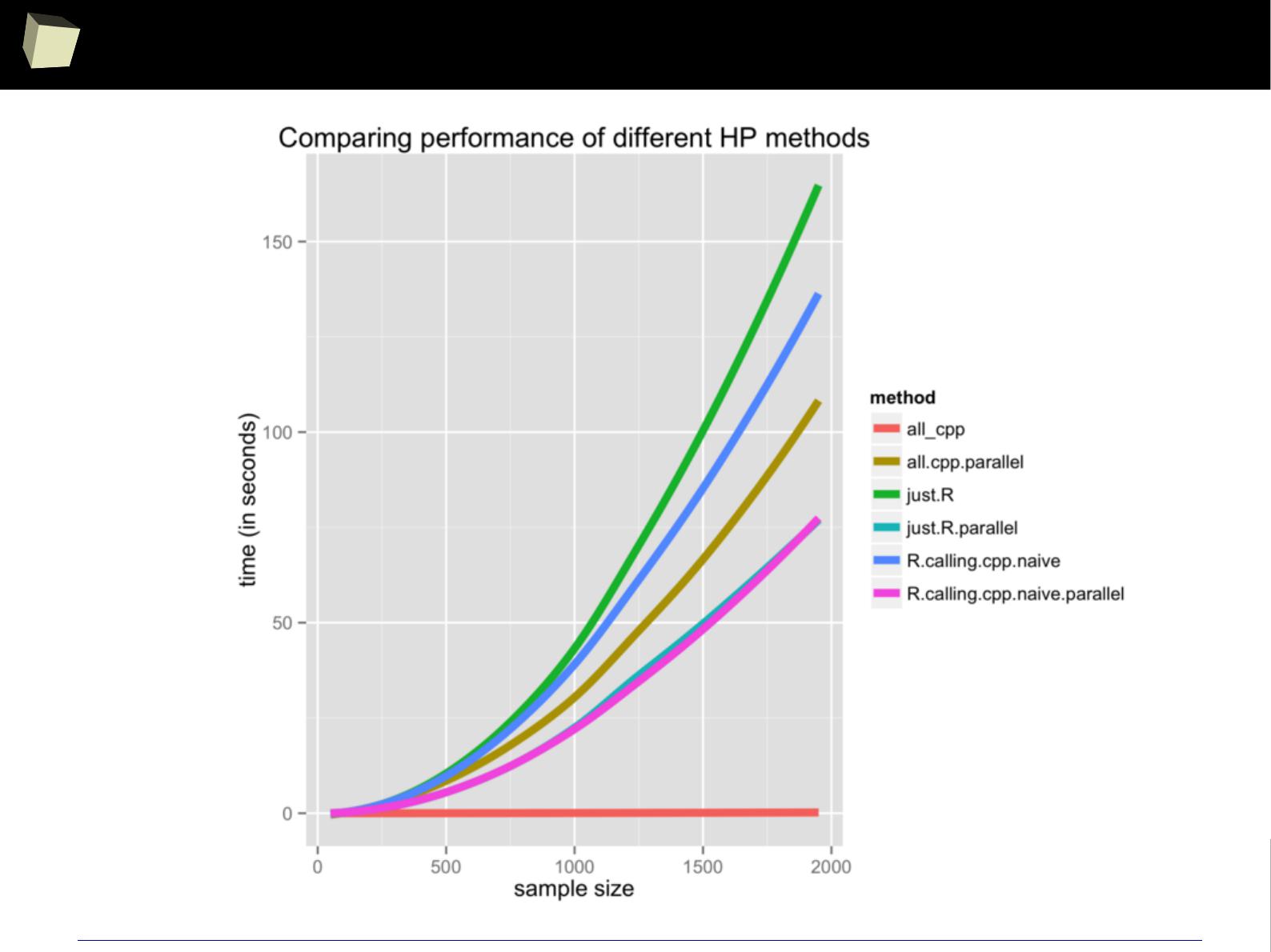

X There are many possibilities to optimize the code

XI R is able to handle large amount of data

XII R has a set of fancy tools and IDEs

XII FDA accepted using R for drug trials!

1

7

7

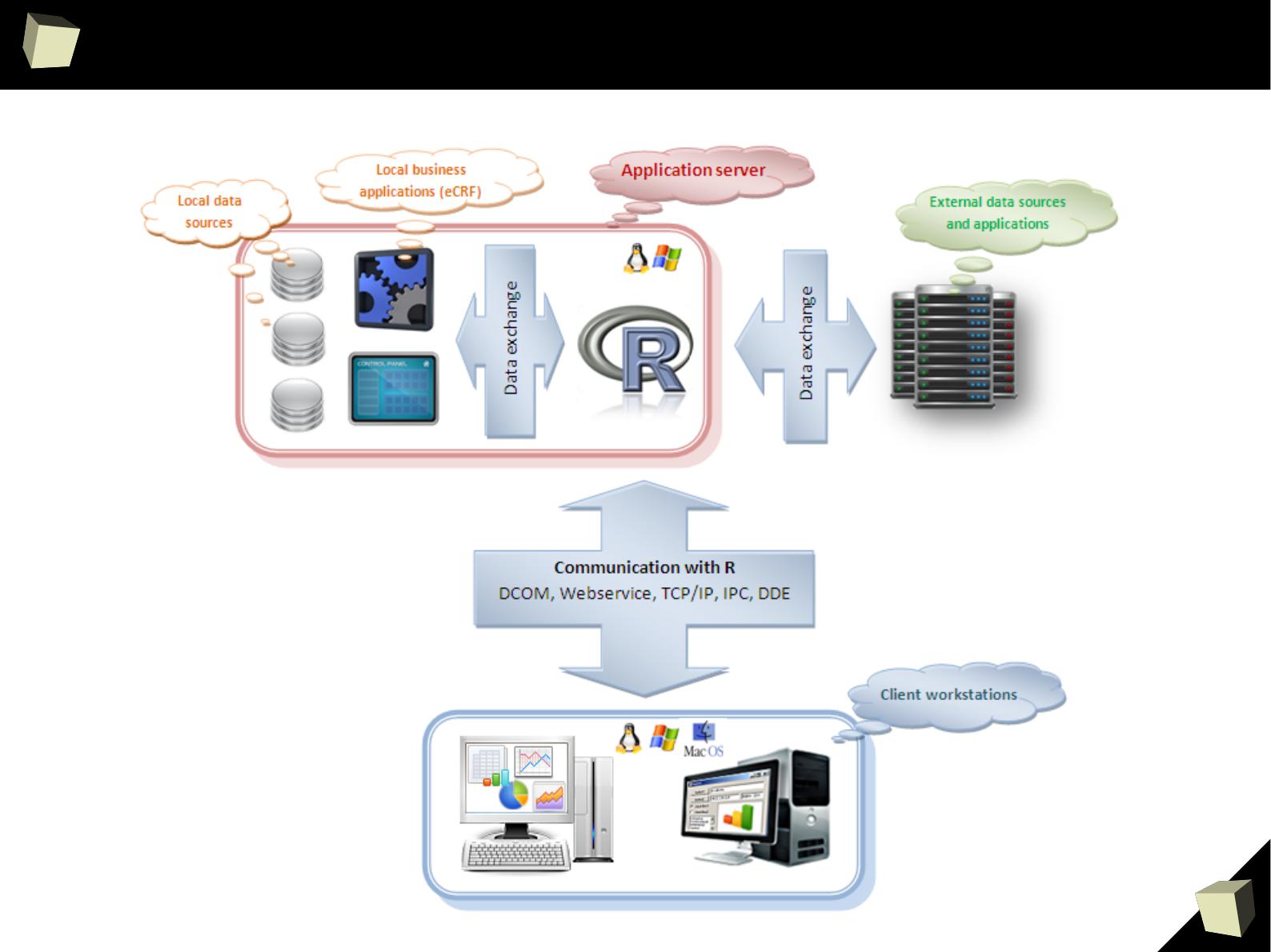

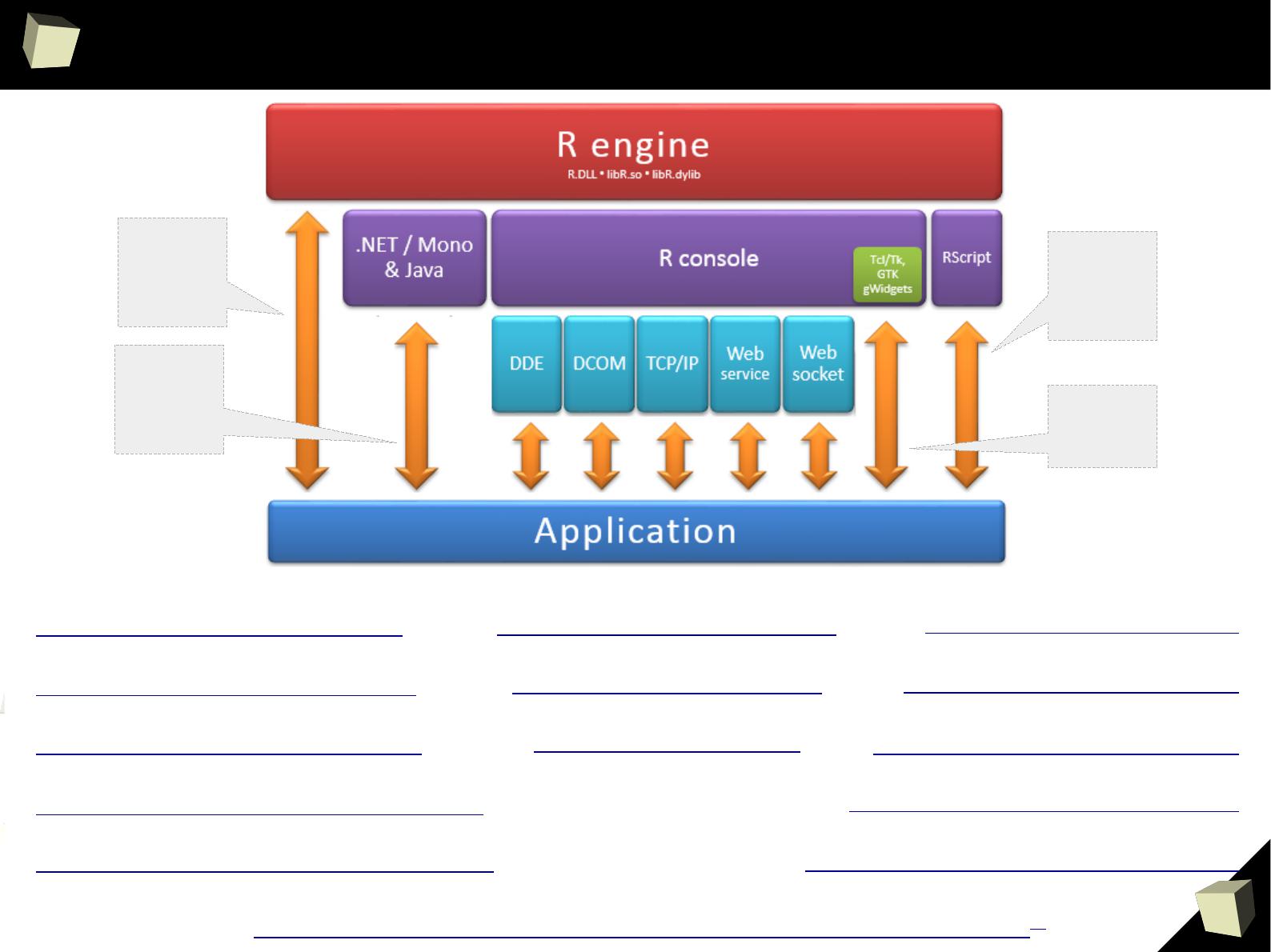

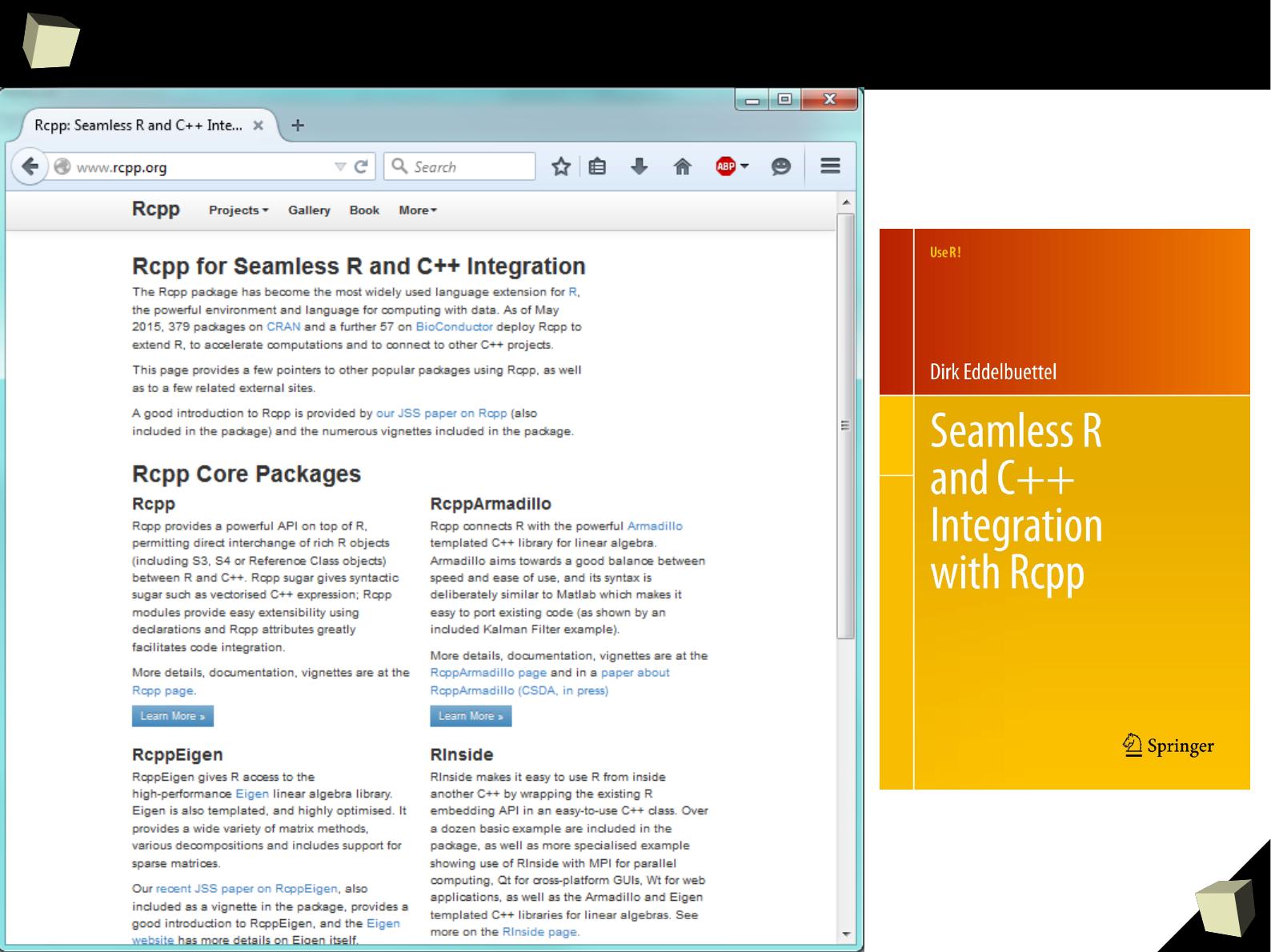

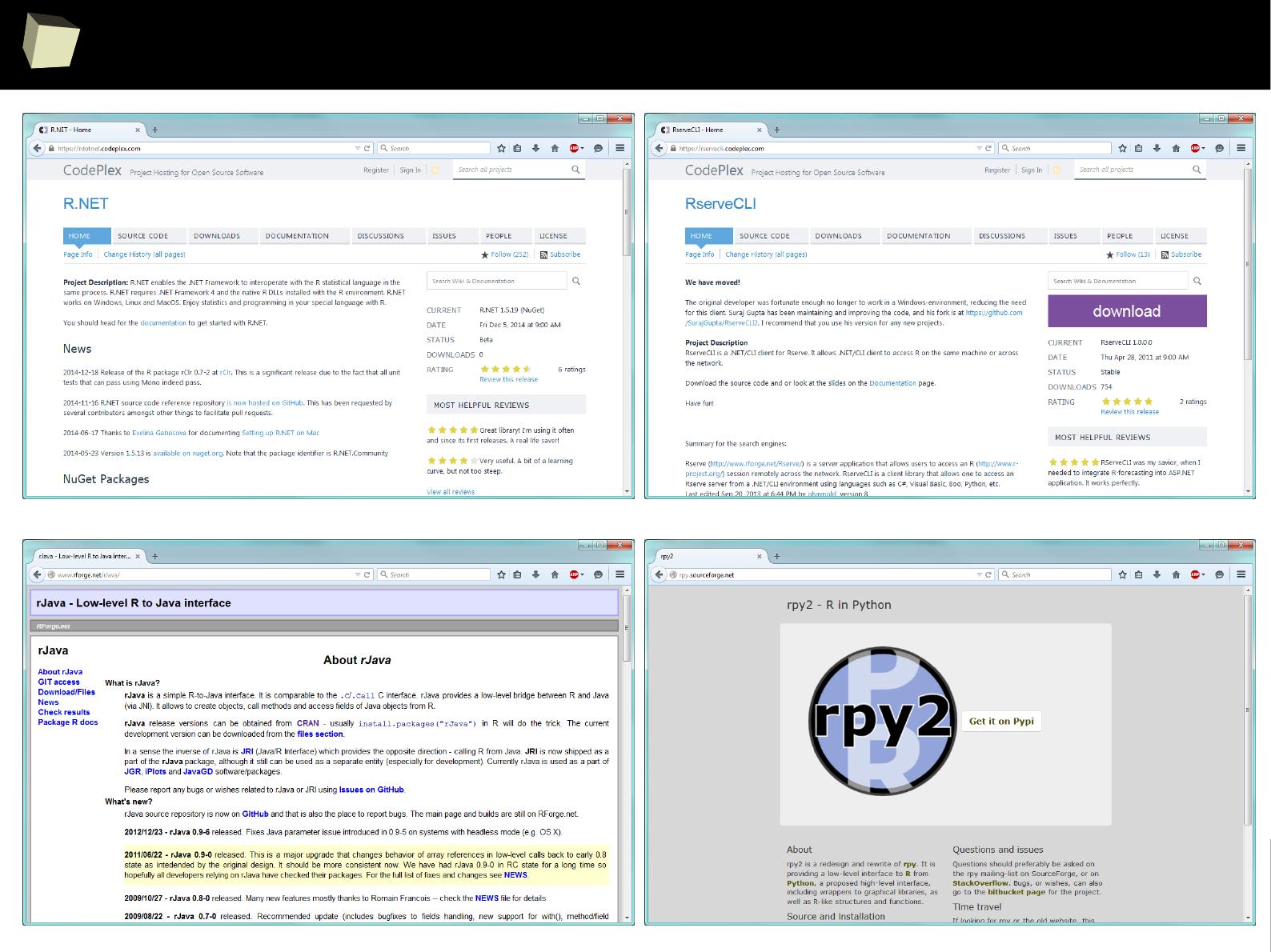

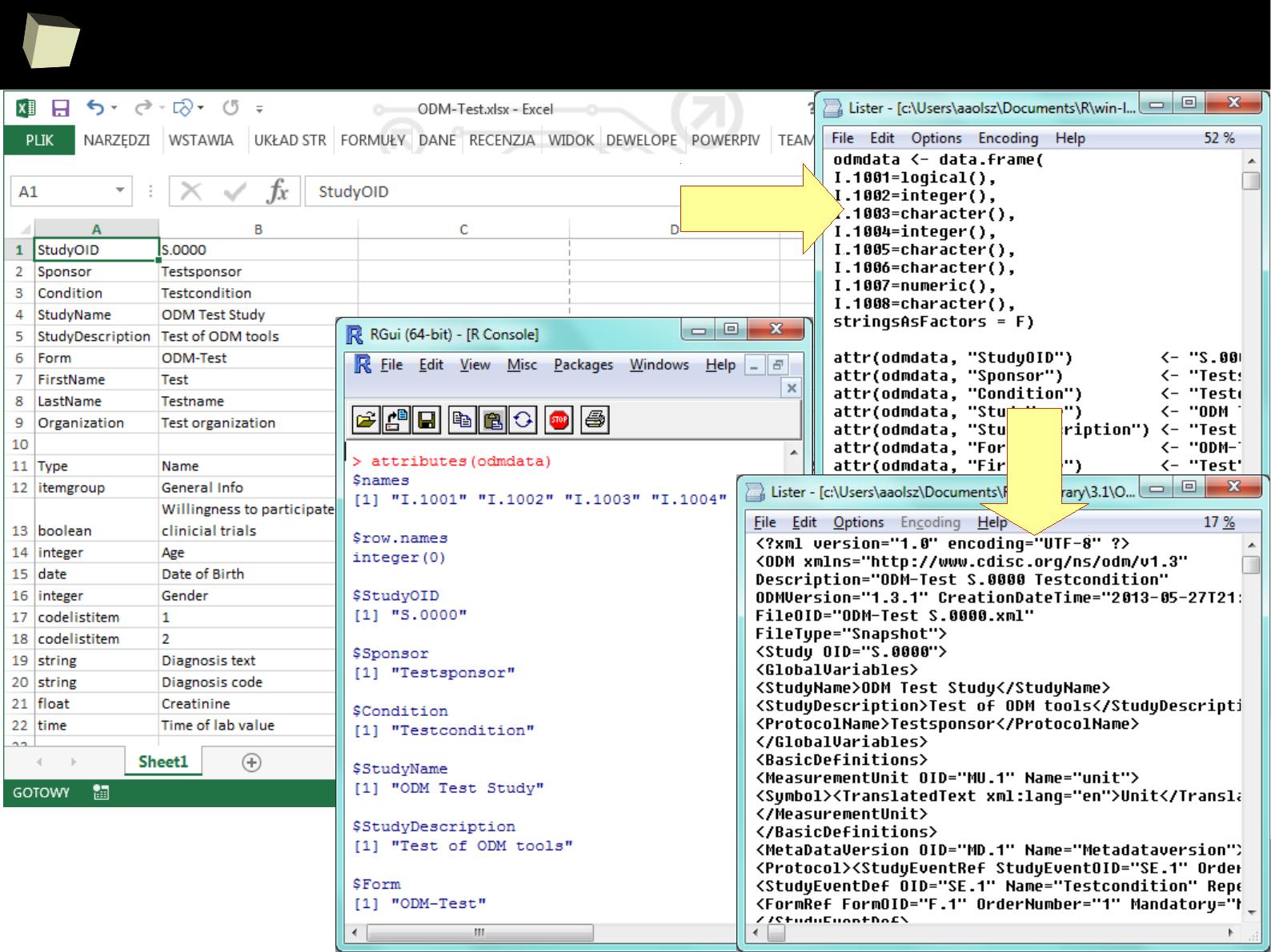

“R” stands for inteRoperability

R is not only a great statistical package. It is often used as a part of more

complex systems as a computing engine. It may also function as a standalone

computational server through the Web.

There are number of libraries that allow to communicate with R in many ways

(COM, TCP/IP, WebServices, DDE, direct linking) from many programming

languages and platforms (C++, Java, .NET/Mono, Perl, Python, Scala, ...) or

external applications able to act as a COM, DDE or Webservice client.

It's worth noting that R is able to call C/C++, Java, .NET, Perl and Python code.

In addition, R allows to create embedded graphical user interfaces (GUI) for

easier entering data and performing analyzes by people not familiar with R. One

can make use of the GTK, Tcl/Tk, wxWidgets and gWidgets toolkits or the

R GUI Generator.

1

7

8

“R” stands for inteRoperability

1

7

9

...”

there are so many ways of speaking

”

http://www.goldenline.pl/forum/2478242/nowy-interfejs-r-net/

PL

http://www.rforge.net/Rserve http://rdotnet.codeplex.com

http://rcom.univie.ac.at

http://rservecli.codeplex.com

http://ndde.codeplex.com

http://www.rforge.net/rJava

http://www.rstudio.com/shiny

http://rpython.r-forge.r-project.org

http://rpy.sourceforge.net

http://rclr.codeplex.com

http://www.omegahat.org/RSPerl

http://dahl.byu.edu/software/jvmr

http://www.rcpp.org

●

C++,

●

Python

●

Perl

●

Fortran

●

PHP

●

C#

●

VB.NET

●

F#

●

Java

●

Scala

Calling R

with a script

name as

parameter

Embedded,

dialog-based

applications

1

8

0

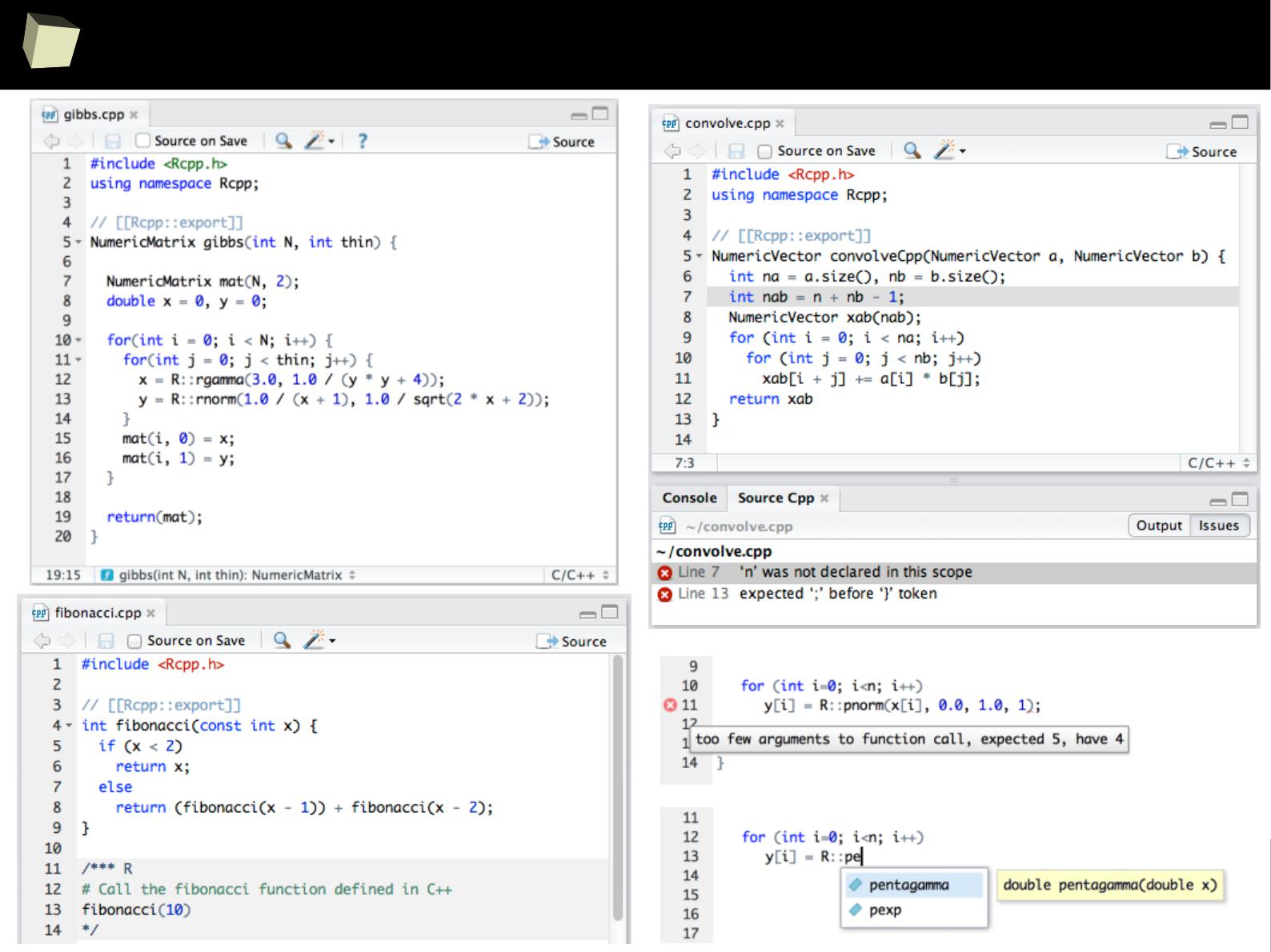

Easy R – C++ integration

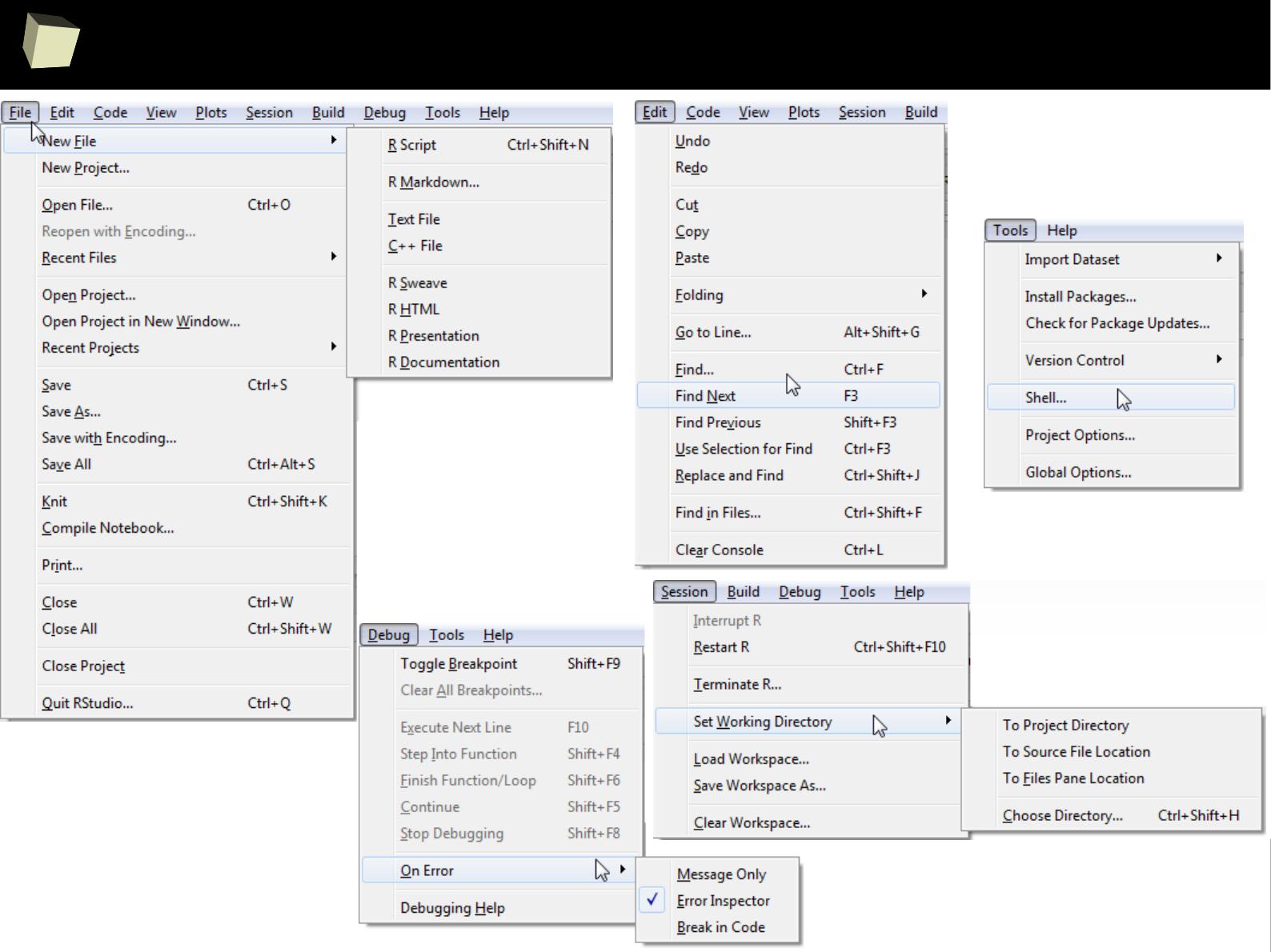

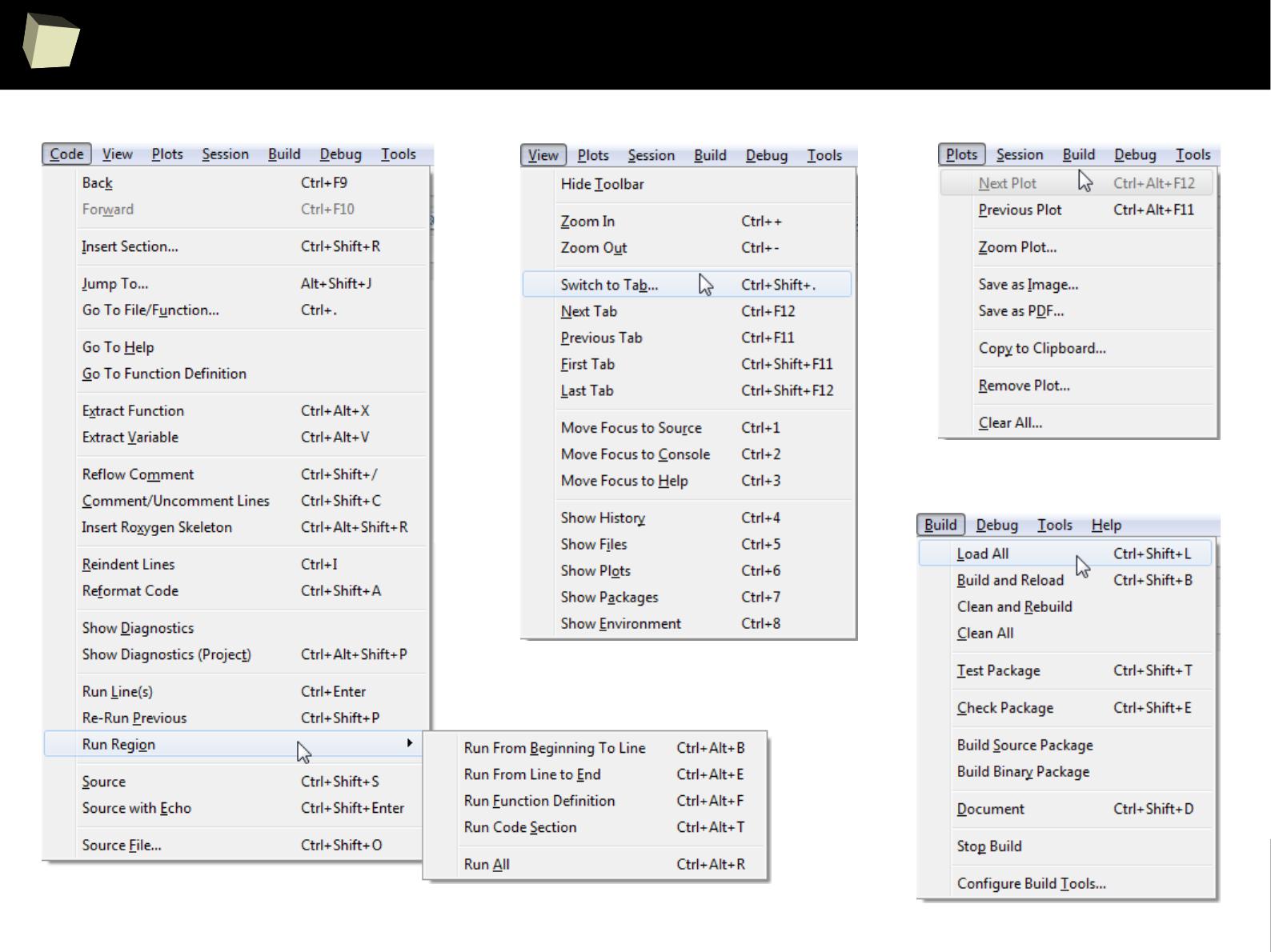

1

8

1

Various R bindings

1

8

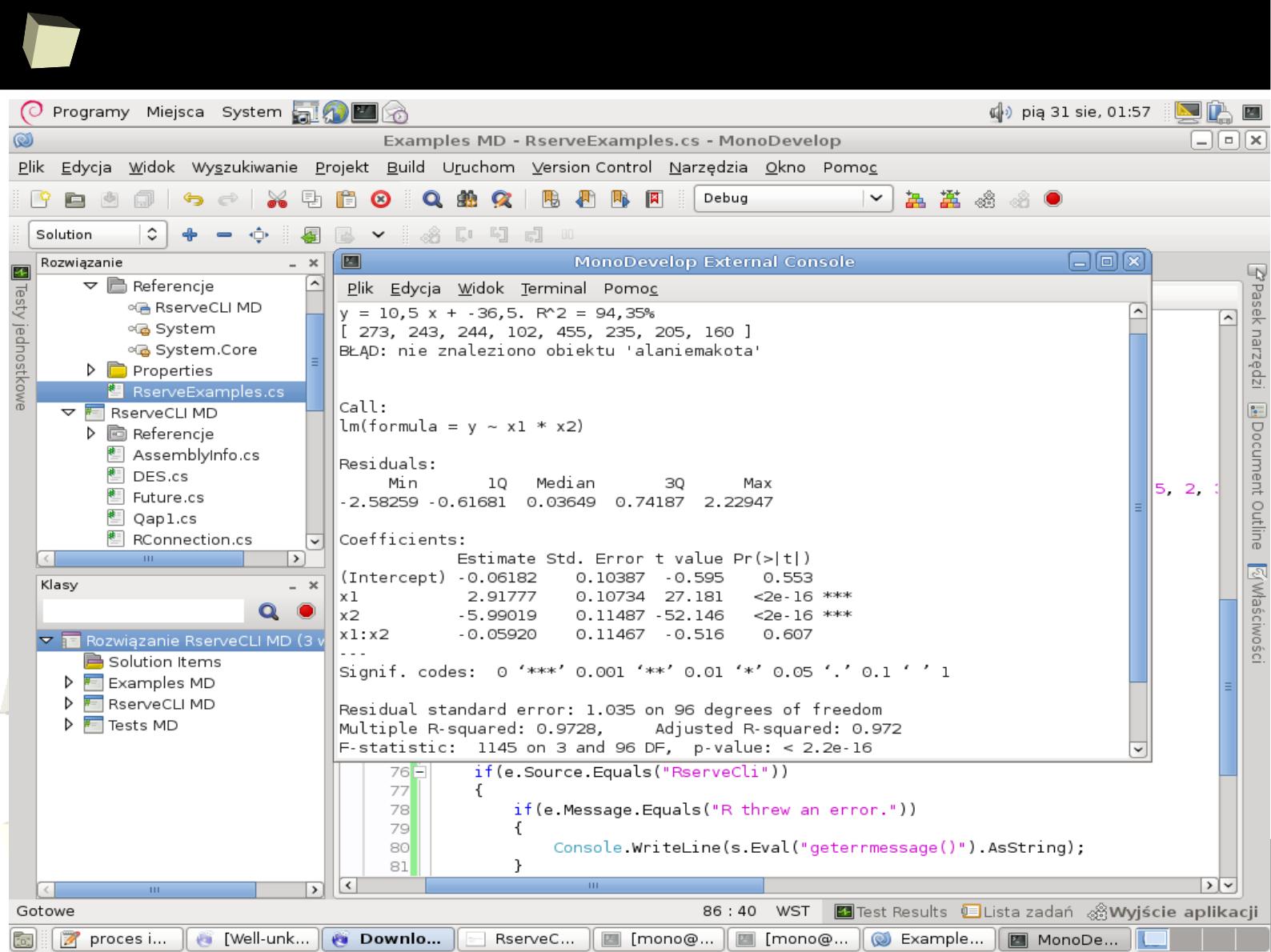

2

R under Mono on Debian via RserveCLI

1

8

3

R.NET interface

Sample .NET

applications

1

8

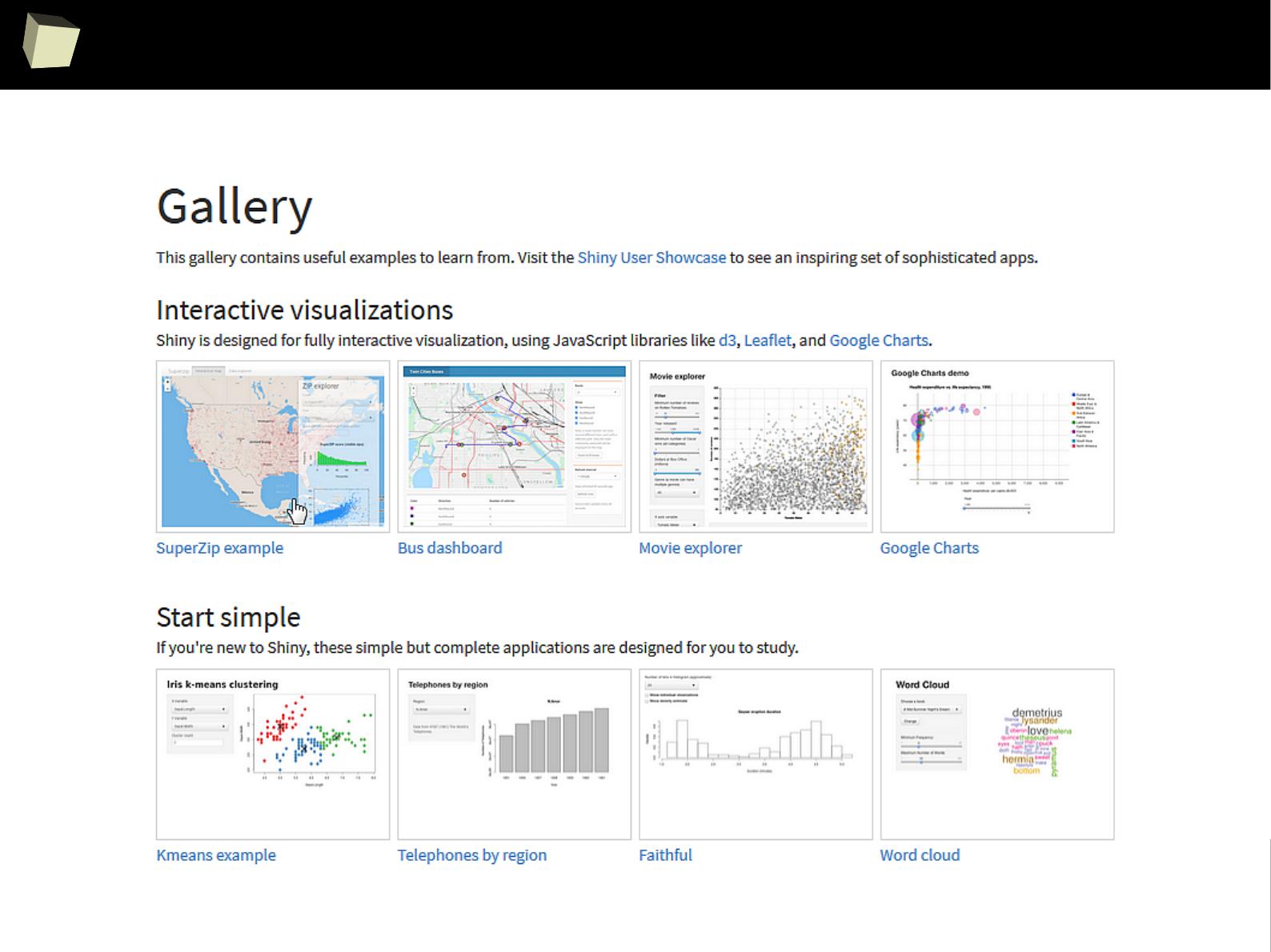

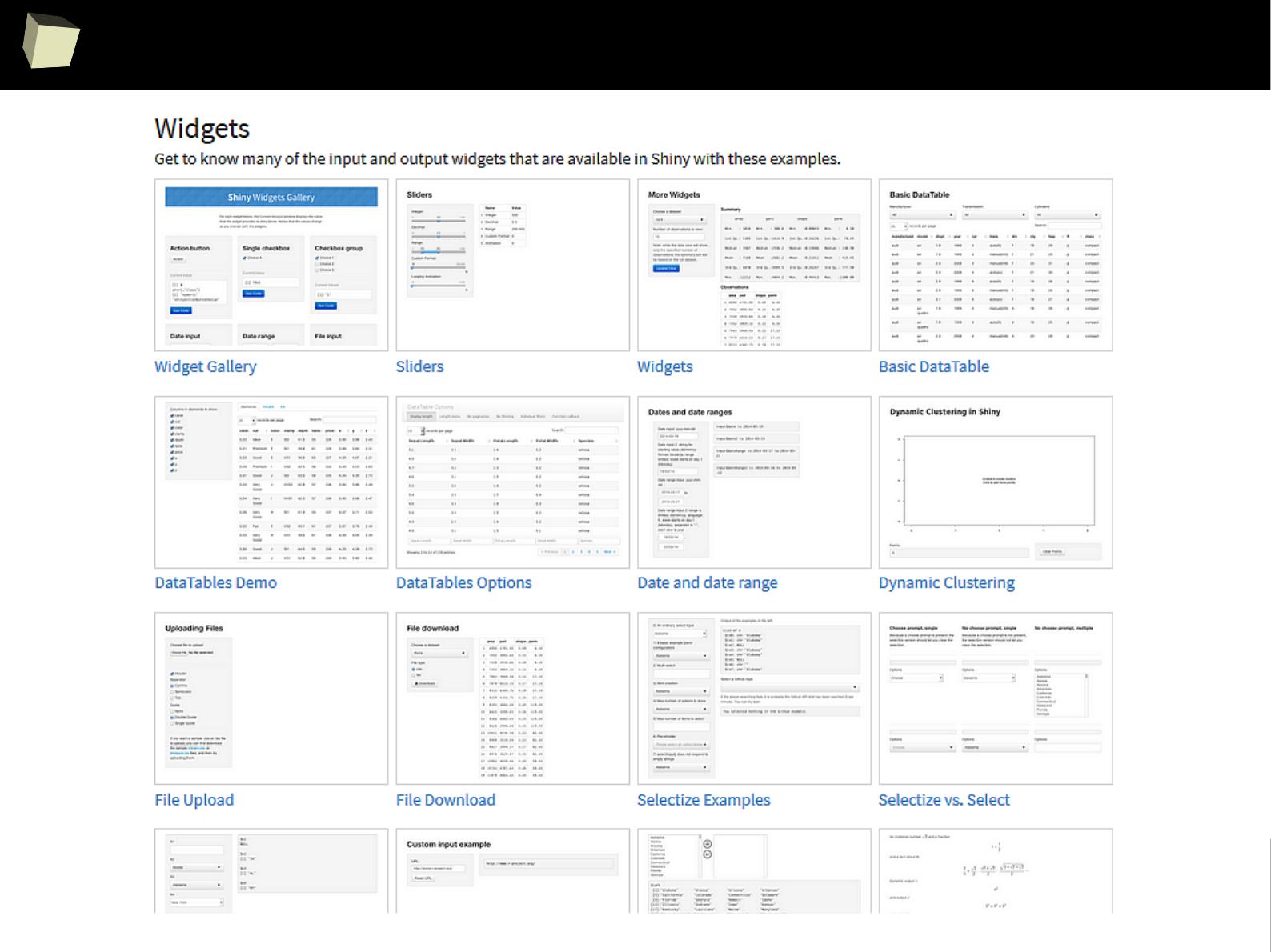

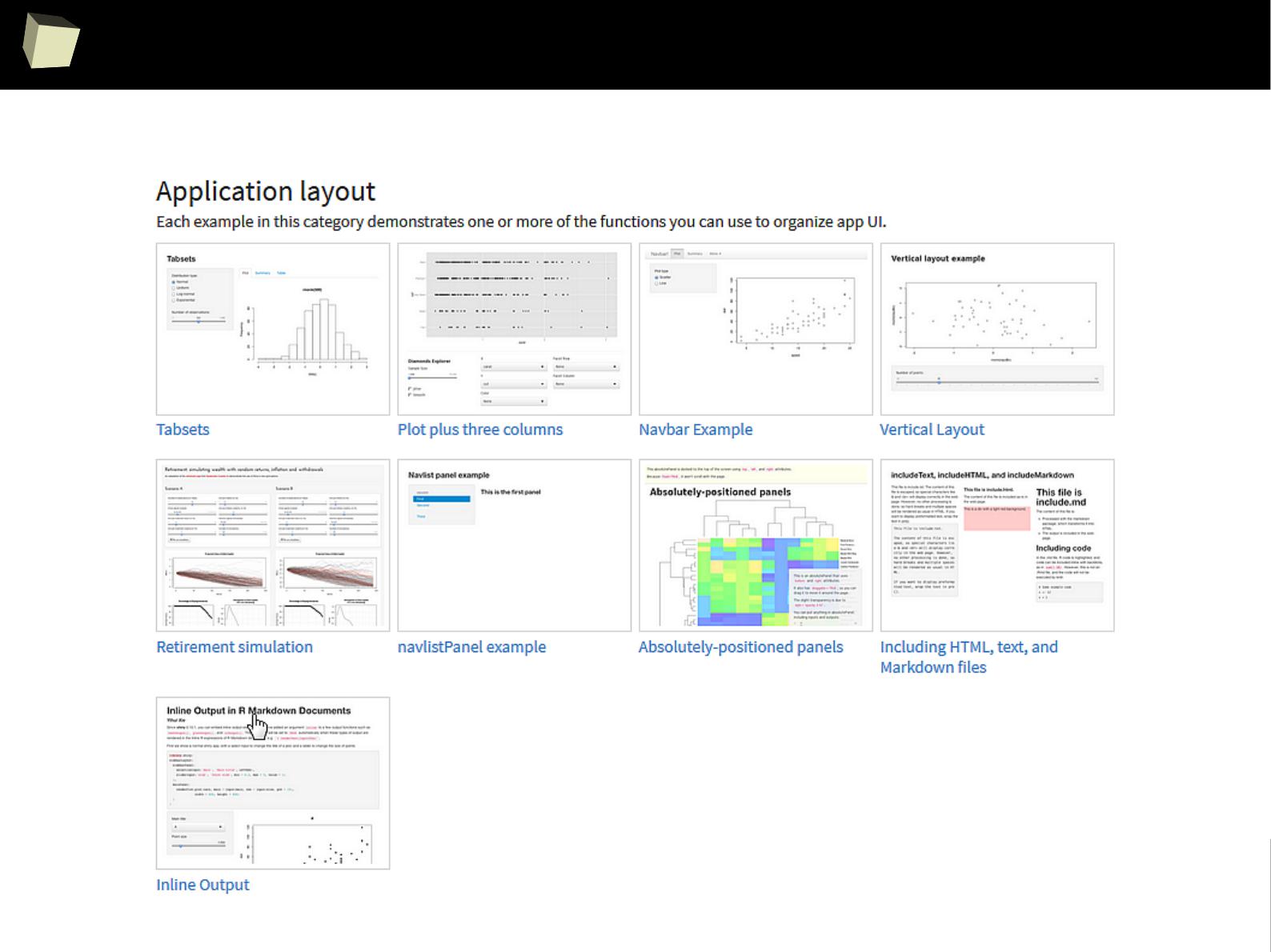

4

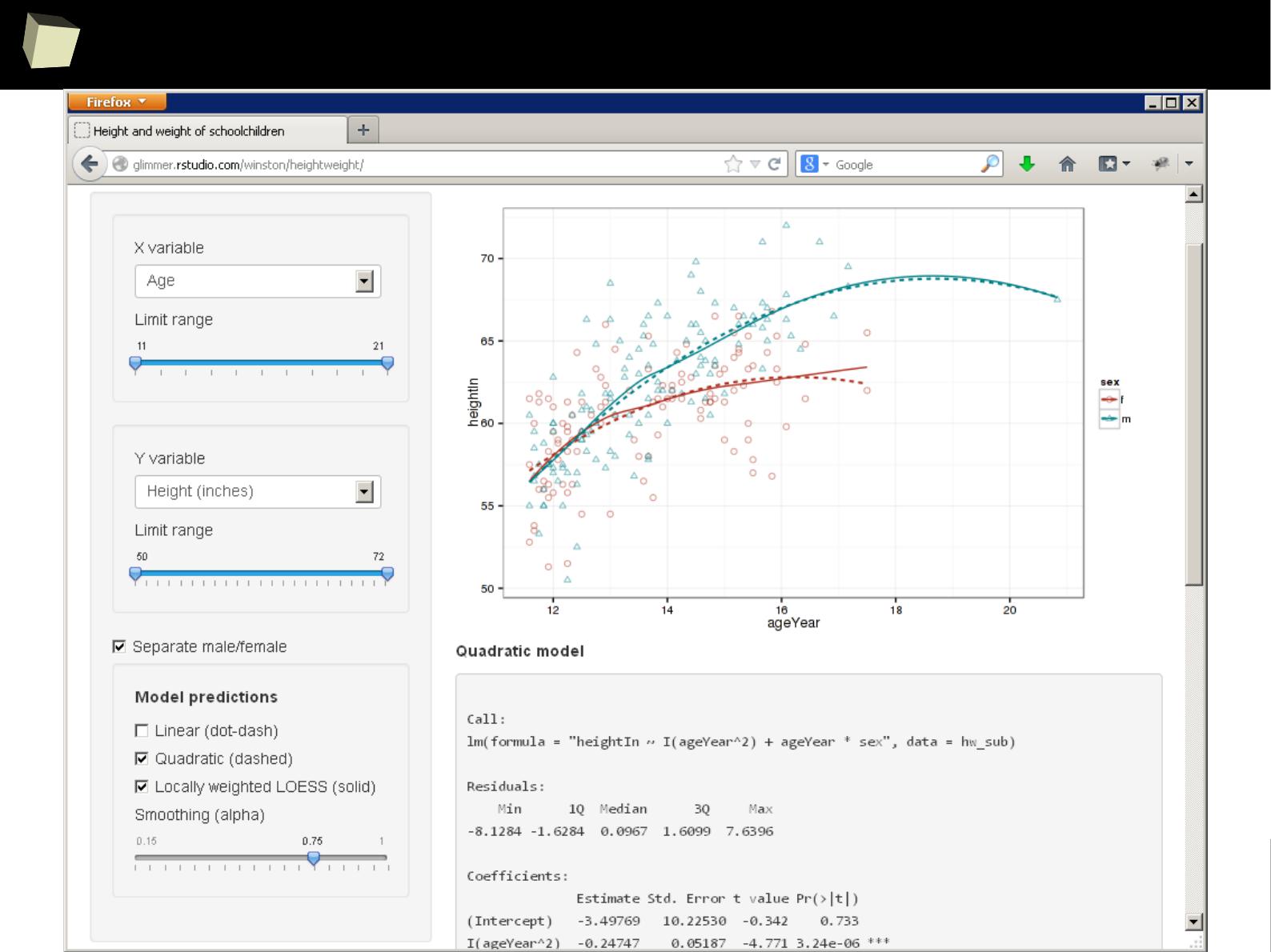

shining web applications with R and Shiny

1

8

5

shining web applications with R and Shiny

1

8

6

shining web applications with R and Shiny

1

8

7

shining web applications with R and Shiny

1

8

8

easy

(but shining)

web applications with R and Shiny

1

8

9

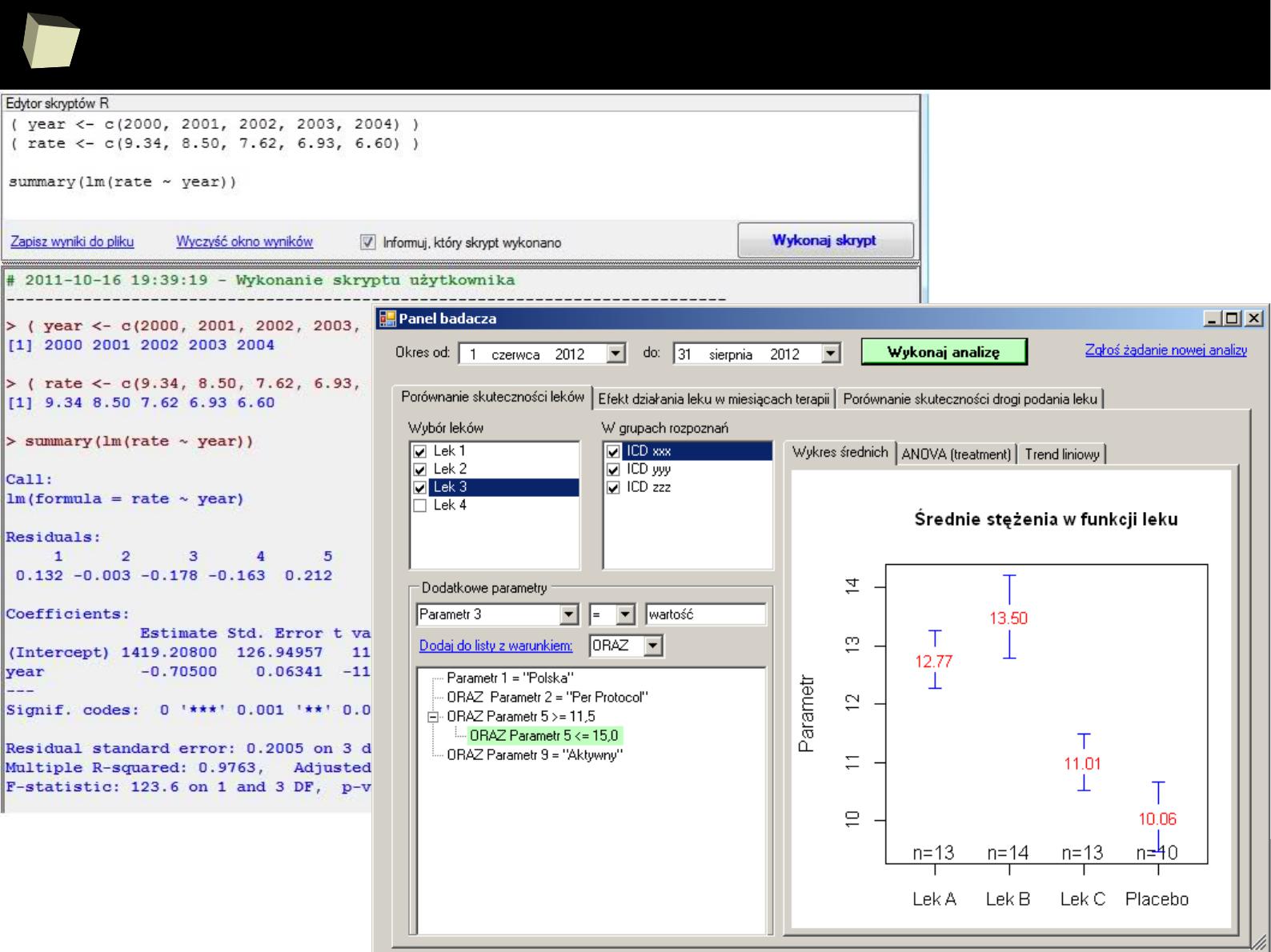

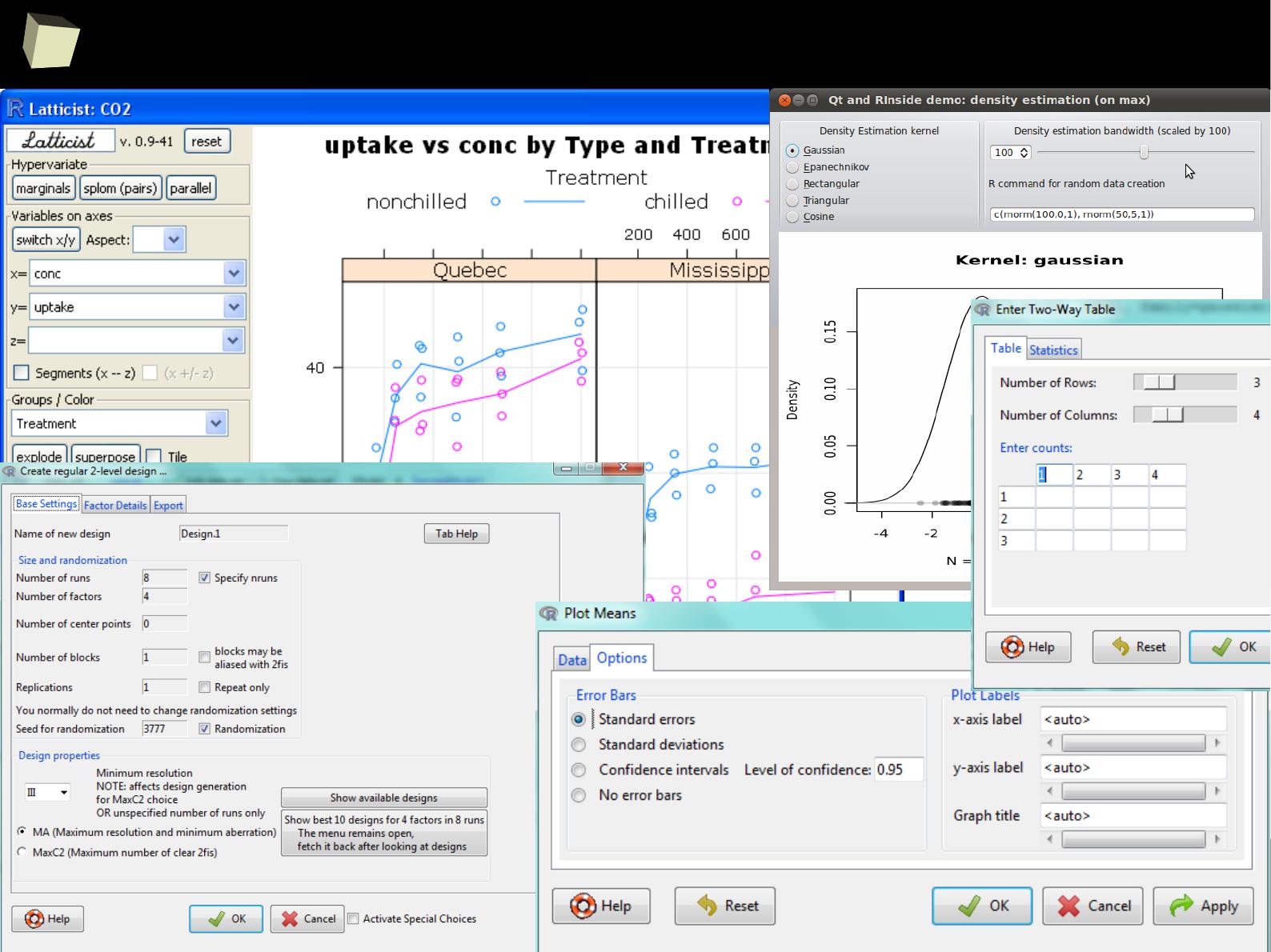

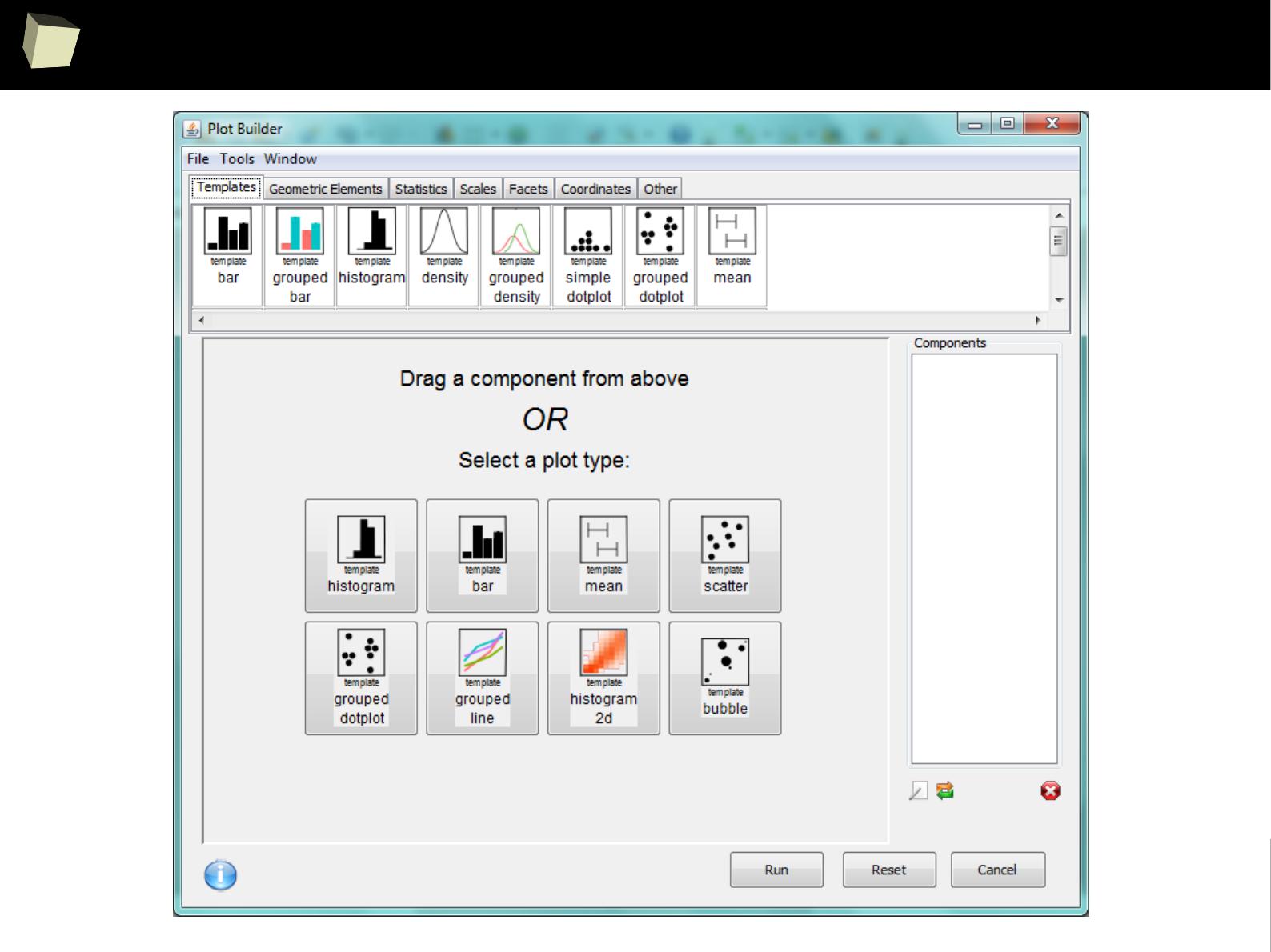

some GUI hosted by R

1

9

0

13 reasons why you will love GNU R

I R is (extremely) cheap. In fact - it's free :)

II R has (extremely) wide range of capabilities

III R is (widely) supported by the world of science

IV R is supported by the community

V R is (increasingly) supported by the business

VI R is able to read data in many formats

VII Interoperability is easy to achieve

VIII R is truly cross-platform

IX R offers numerous ways of presenting data

X There are many possibilities to optimize the code

XI R is able to handle large amount of data

XII R has a set of fancy tools and IDEs

XII FDA accepted using R for drug trials!

1

9

1

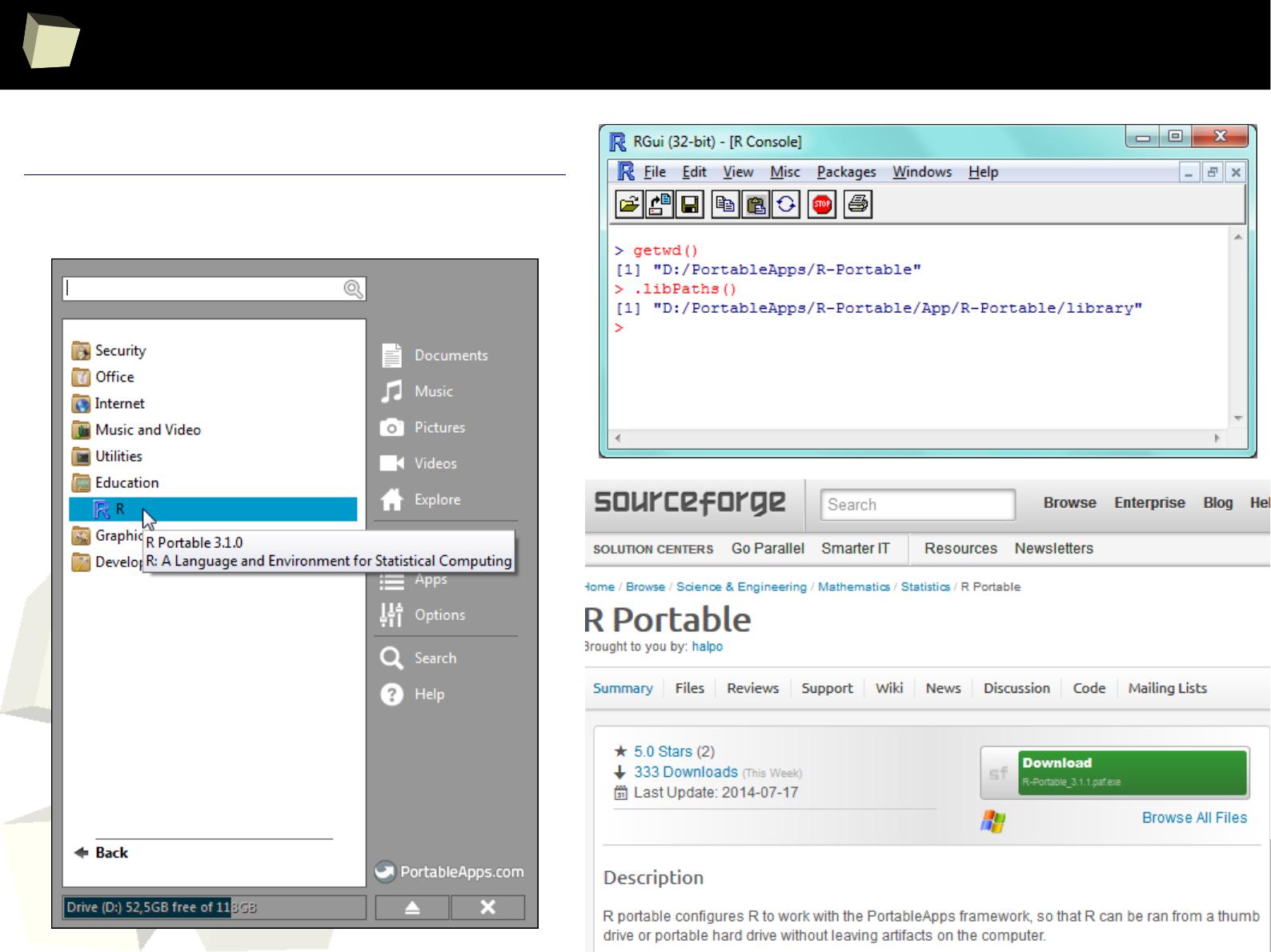

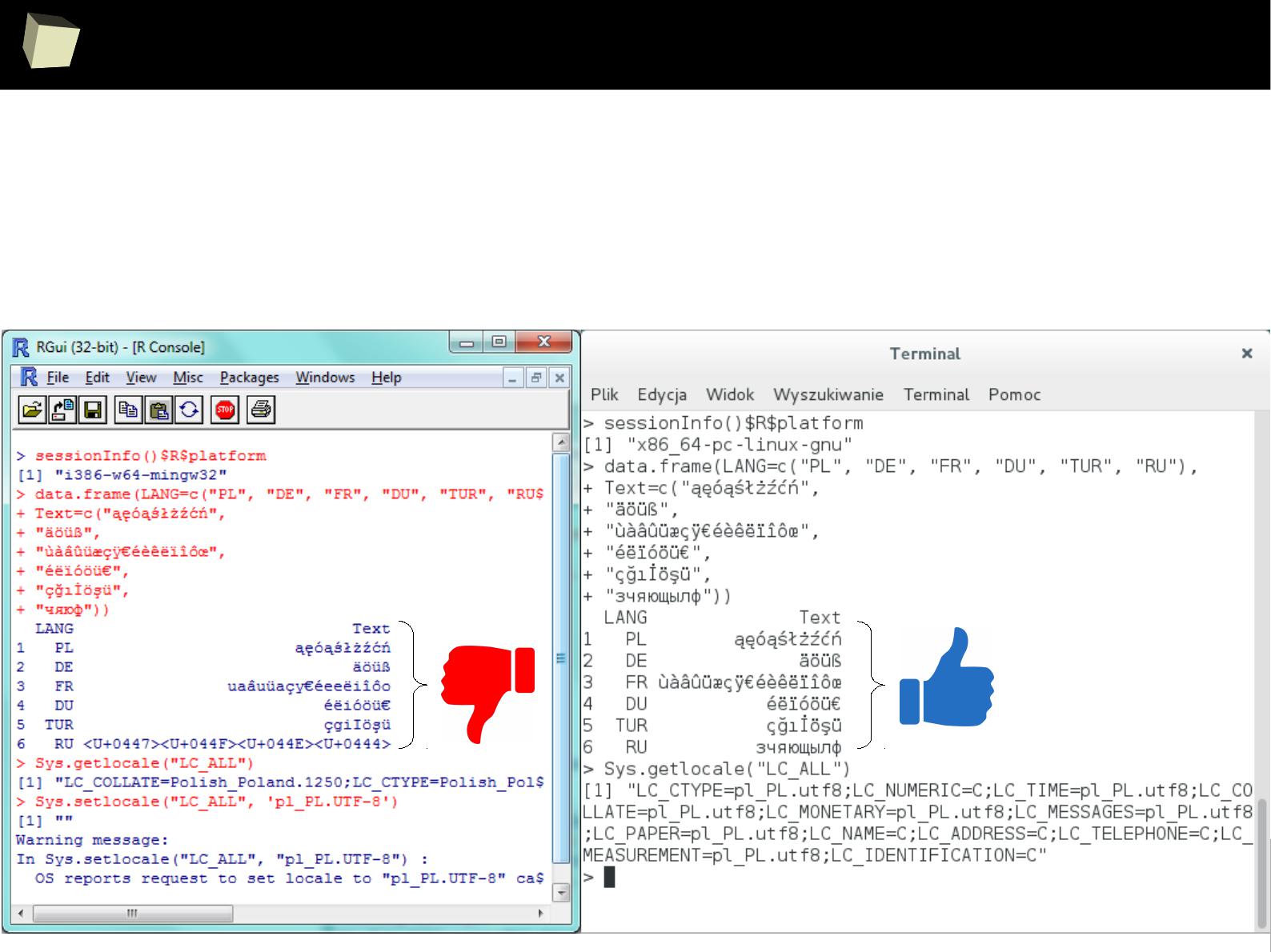

R is truly cRoss-platform

R can be run:

●

on many operating systems: Windows (95-8.1), Unix (Solaris, AIX, HP-UX),

Unix-like (GNU/Linux, FreeBSD), OSX and mobile: iOS, Android, Maemo

●

on minicomputers like RaspberryPi (Raspbian OS)

●

without installation, from any storage (USB device, CD/DVD, SD card)

portable

– just copy/paste the directory with R. You can have many versions of it.

Those properties make R an ideal base for creating:

●

Handy, self-contained tools used by CRO's Data Management and

Biometrics departments for easy monitoring the quality of collected data.

●

Cheap, easy to deliver and backup (copy/paste) computing environments

/free Linux + R + RStudio + PostgreSQL/MySQL + SVN + TrueCrypt + OpenVPN + VNC + rsync + Apache /

●

Independent computing units based on simplified minicomputers.

1

9

2

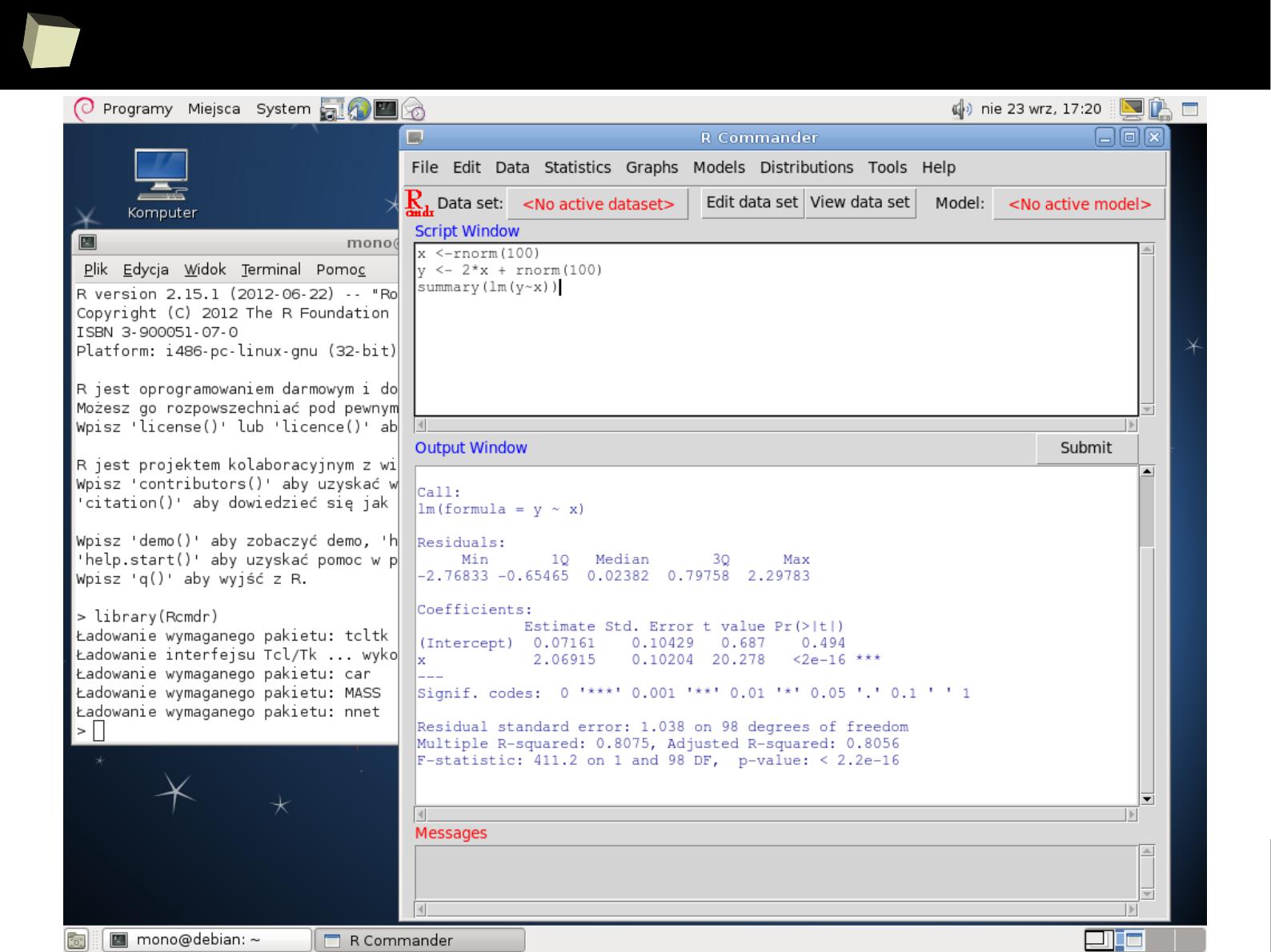

GNU/Debian “Wheezy”

1

9

3

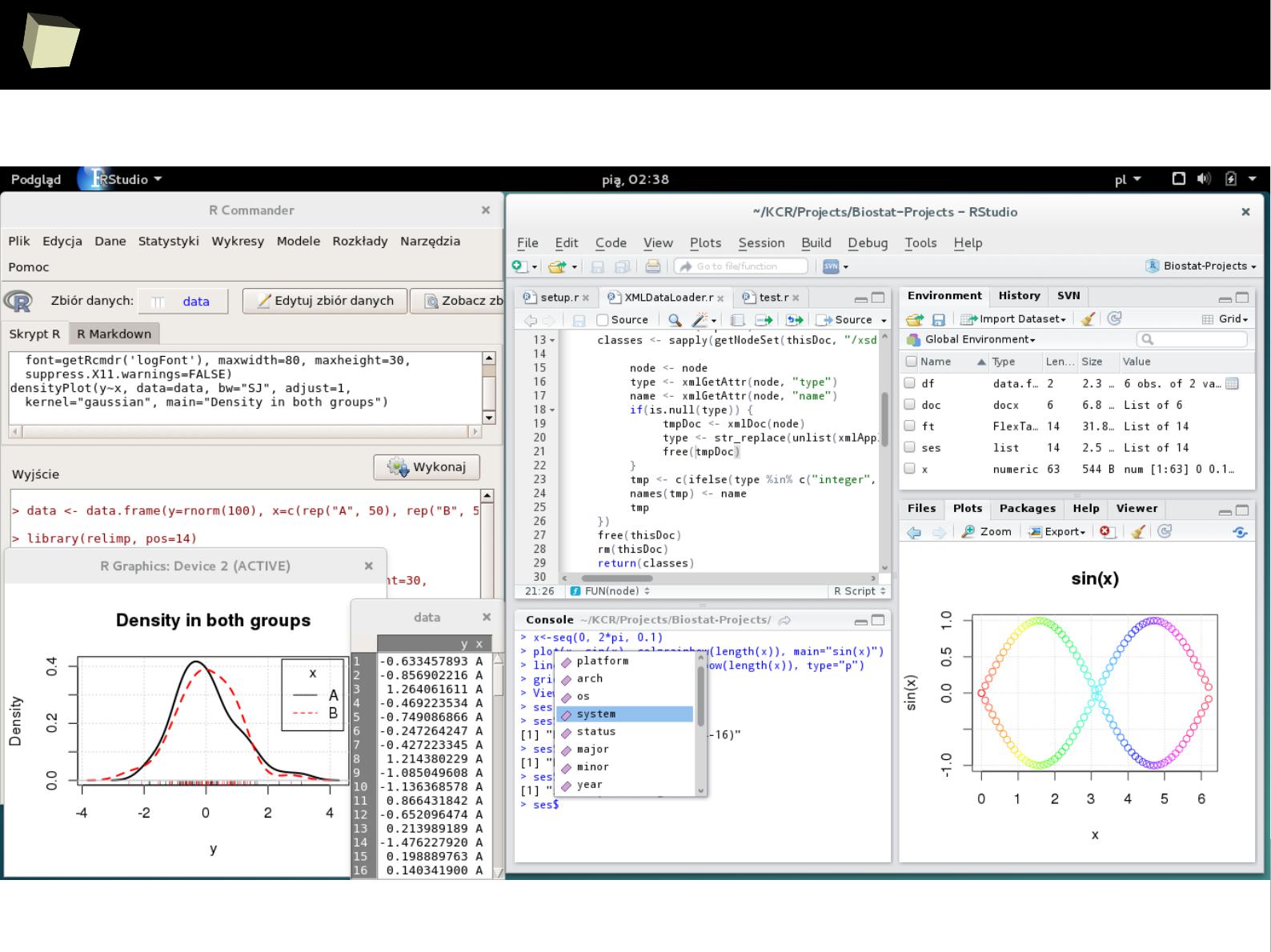

R and fRiends on Debian “Stretch”

1

9

4

MacOS / OS X

1

9

7

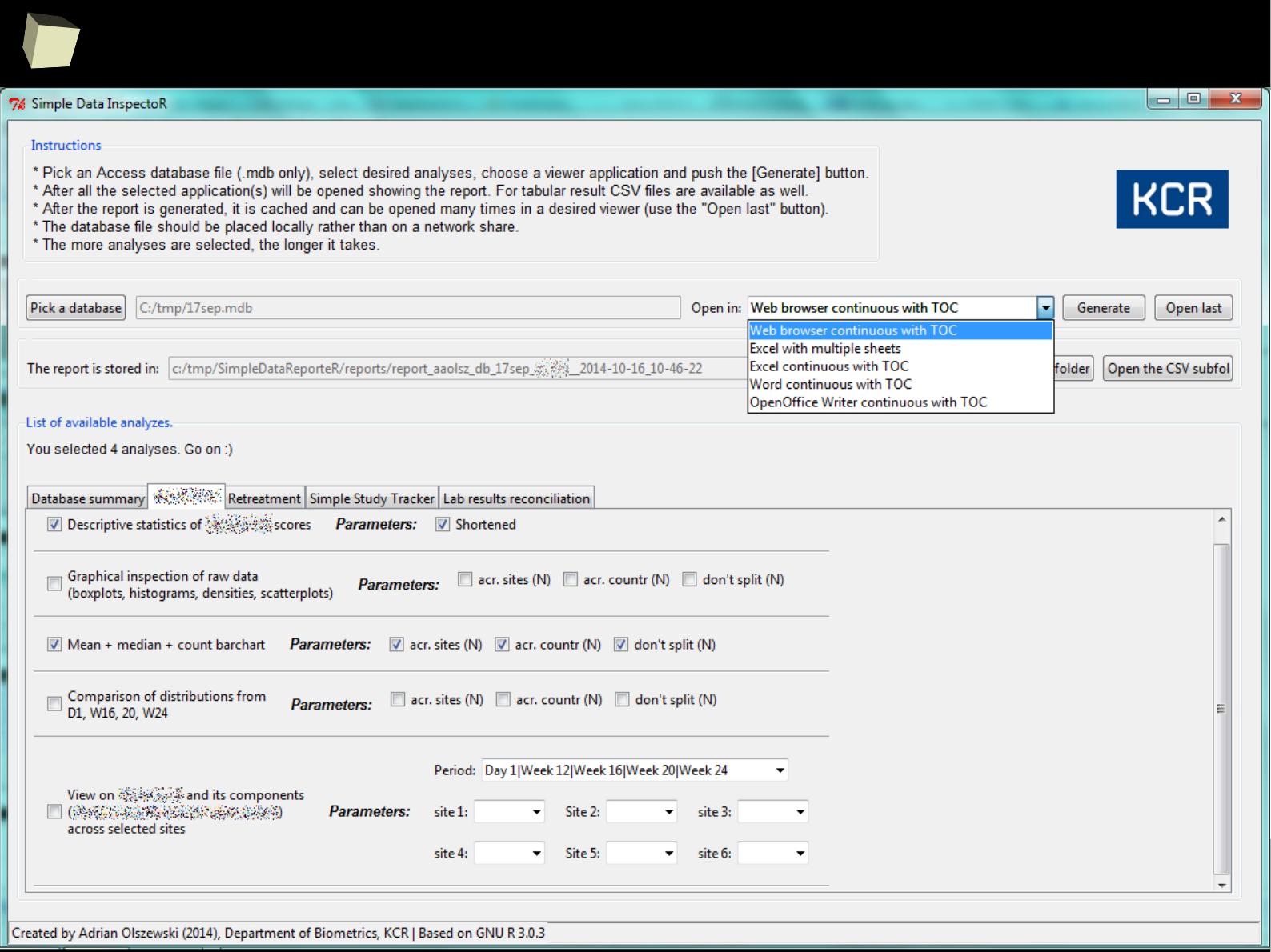

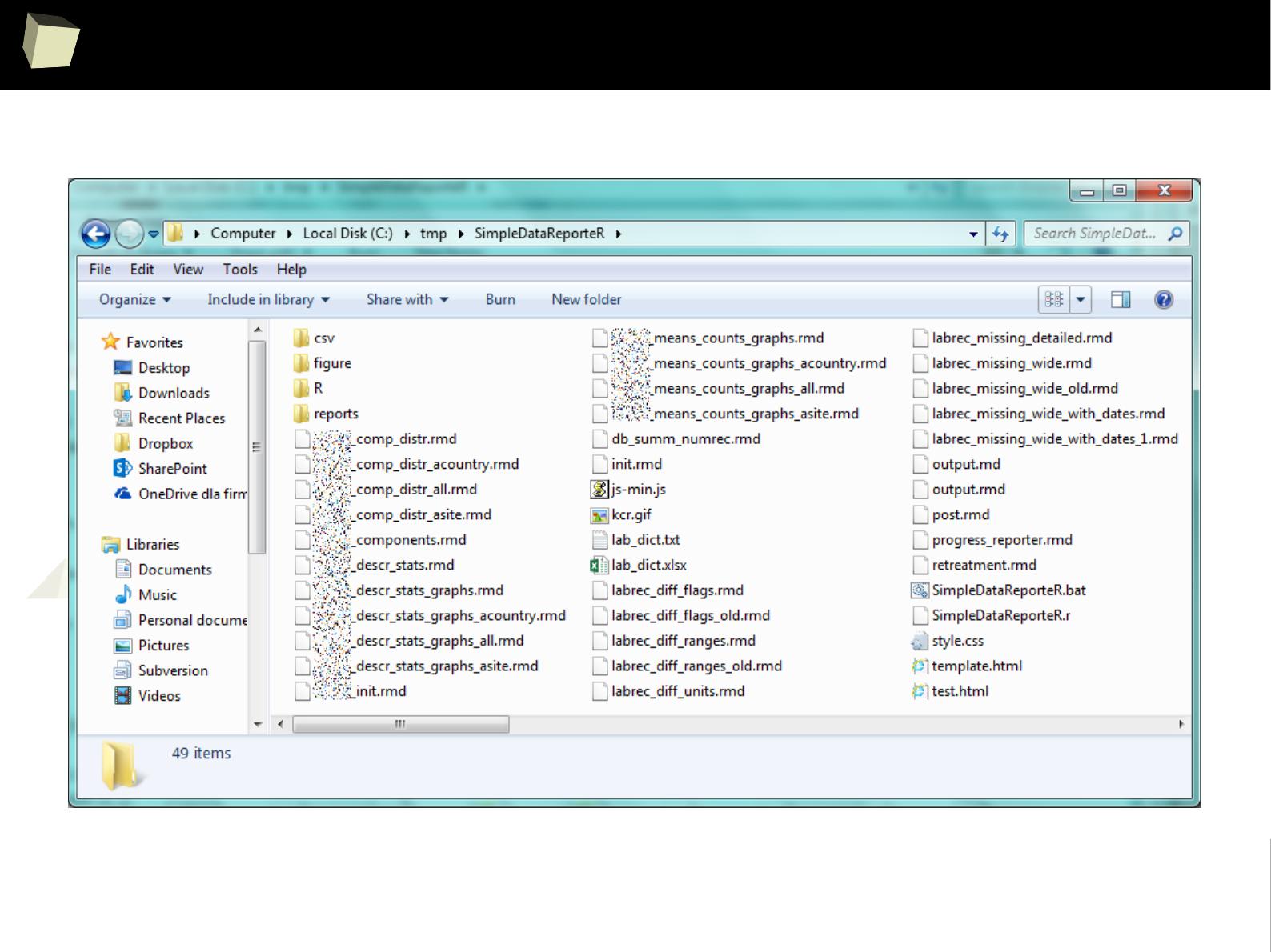

PoRtable in use – Simple Data InspectoR

1

9

8

♫

...

all you need is R

♫

Complete application: ~240MB raw,

~140MB after compression

1

9

9

13 reasons why you will love GNU R

I R is (extremely) cheap. In fact - it's free :)

II R has (extremely) wide range of capabilities

III R is (widely) supported by the world of science

IV R is supported by the community

V R is (increasingly) supported by the business

VI R is able to read data in many formats

VII Interoperability is easy to achieve

VIII R is truly cross-platform

IX R offers numerous ways of presenting data

X There are many possibilities to optimize the code

XI R is able to handle large amount of data

XII R has a set of fancy tools and IDEs

XII FDA accepted using R for drug trials!

2

0

0

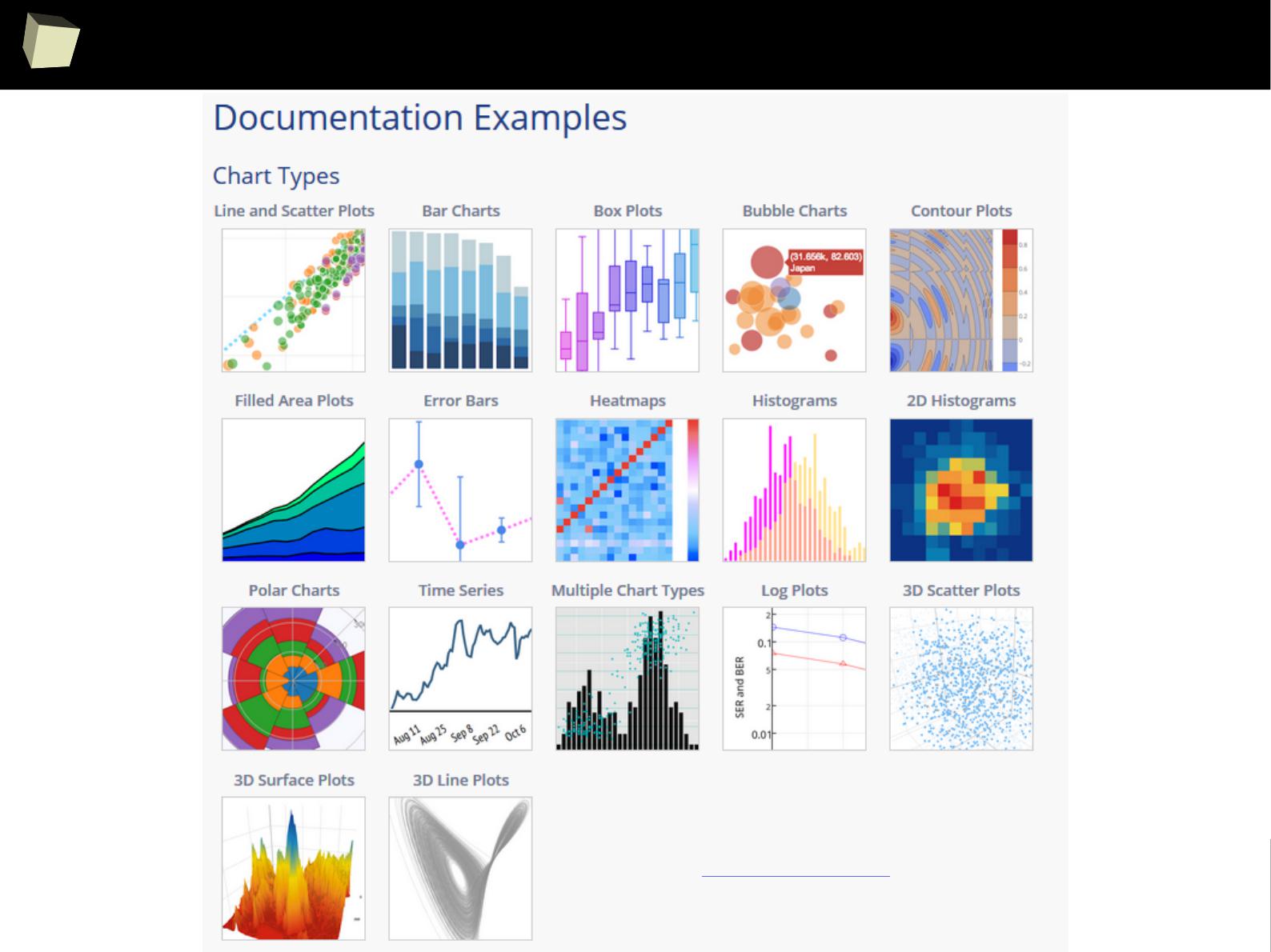

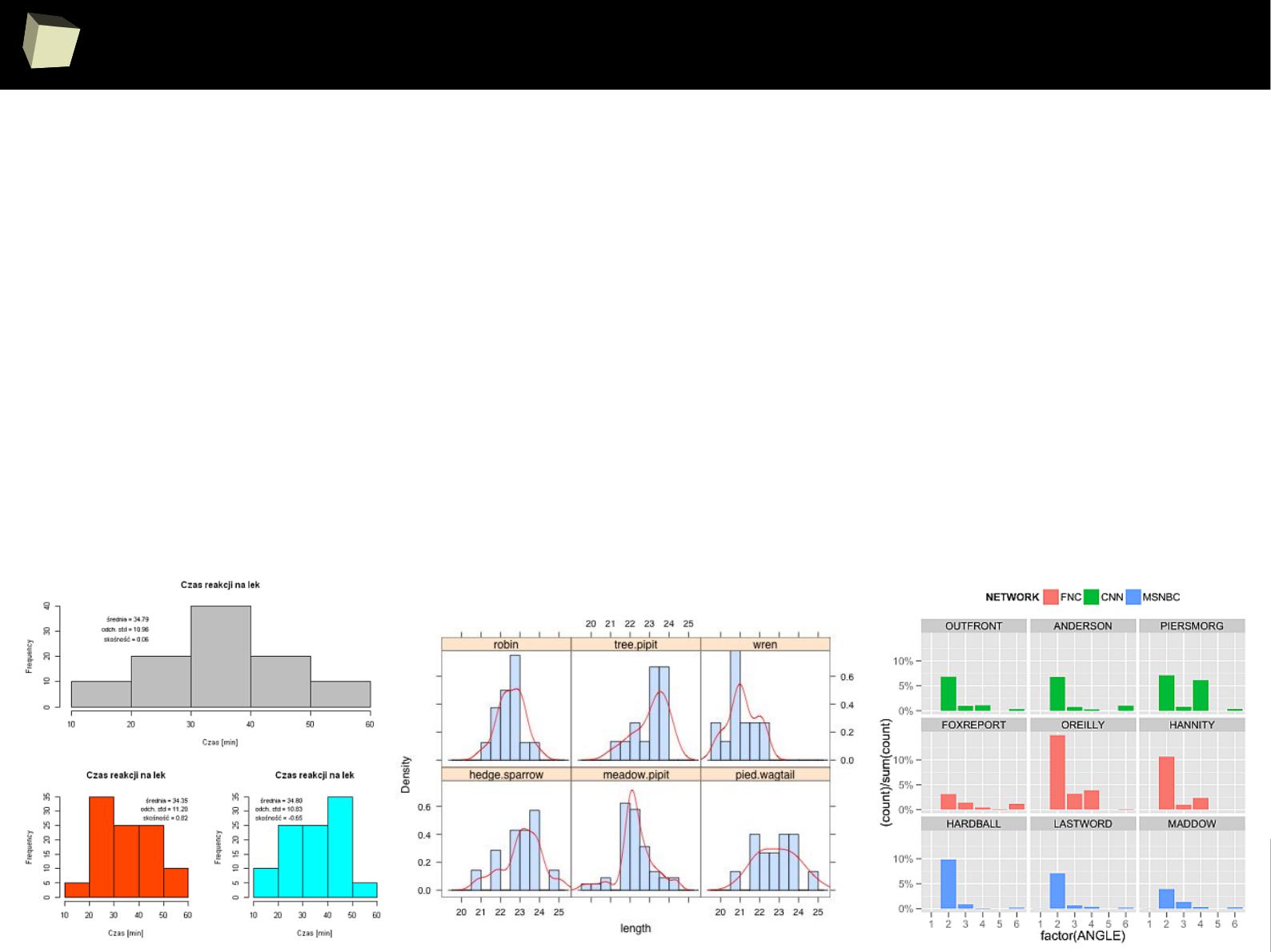

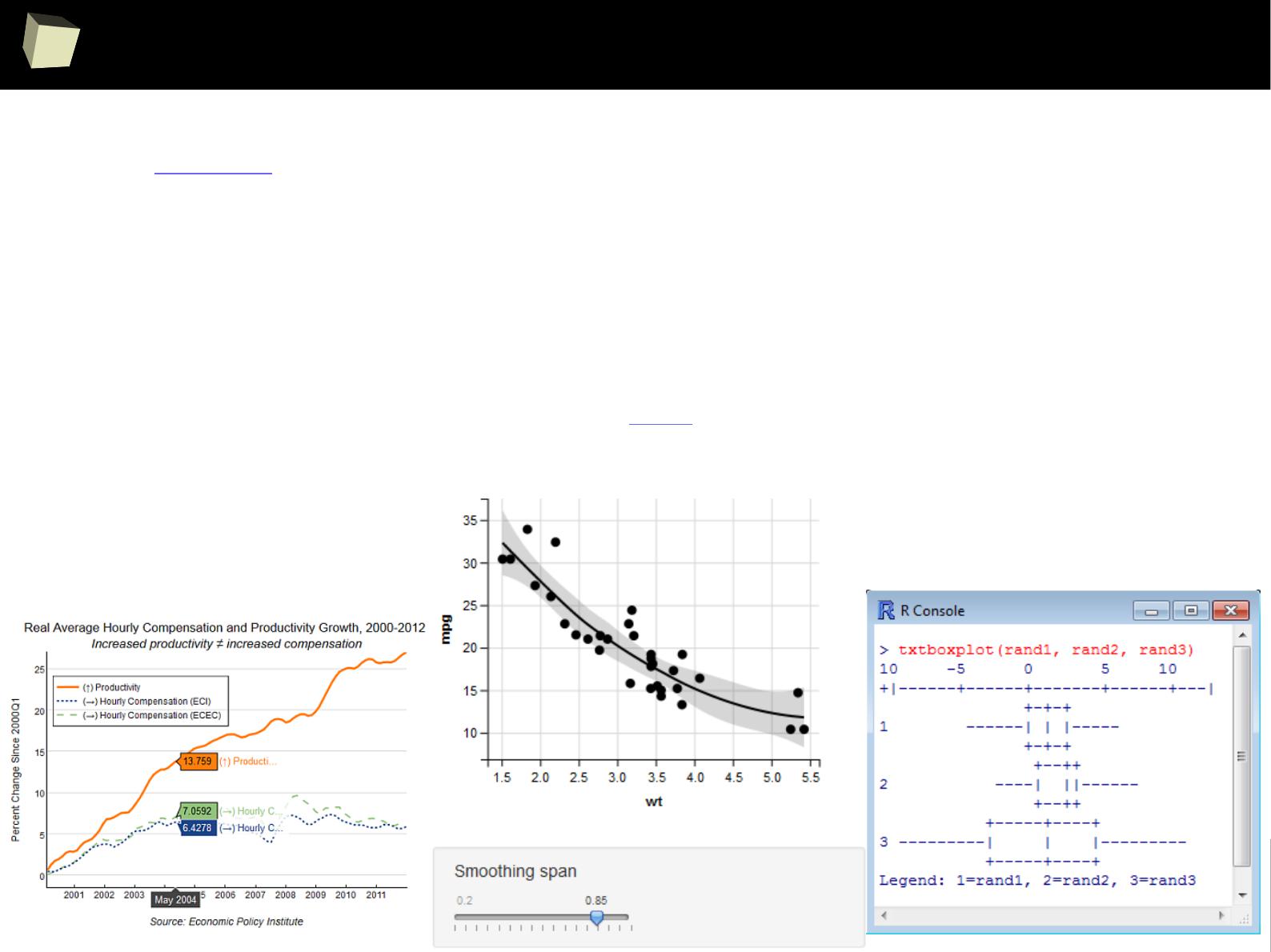

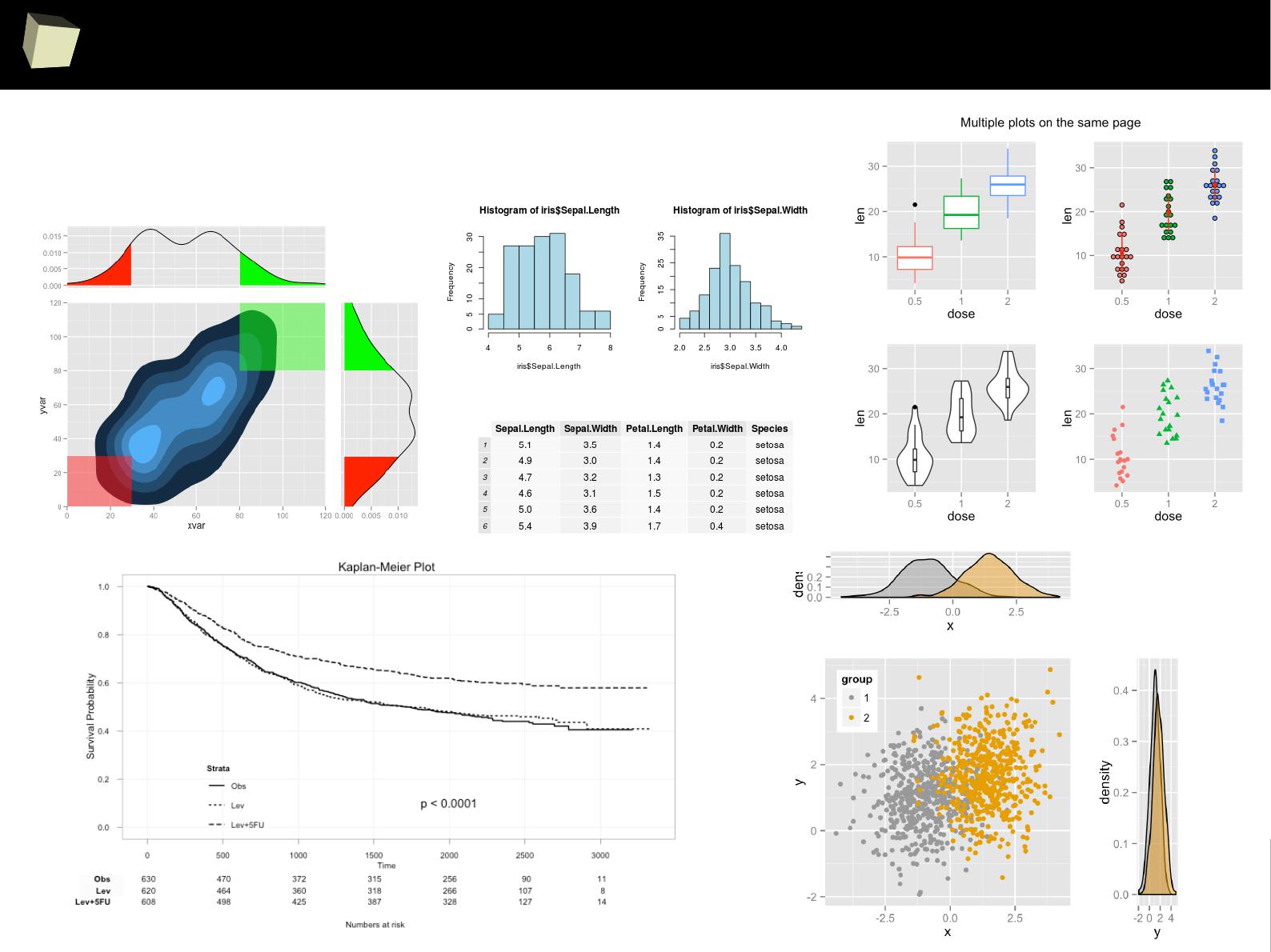

It's showtime!

R can produce output in a rich set of formats, such as:

●

pictures: JPG, PNG, BMP, GIF, TIFF, SVG, EMF

●

animations: GIF

directly or via ImageMagic

, AVI

via Ffmpeg

●

plain text files: CSV, TSV, XML, XPT (SAS transport files), ASCII

●

rich format docs: RTF, PS, PDF, MS Word (*.doc; *.docx

OpenXML

),

Open/Libre Office Writer (*.odt

OpenDocument

)

●

spreadsheets: MS Excel (*.xls; *.xlsx), OpenOffice Calc (*.ods)

●

HTML pages: simple pages, complex reports or presentations

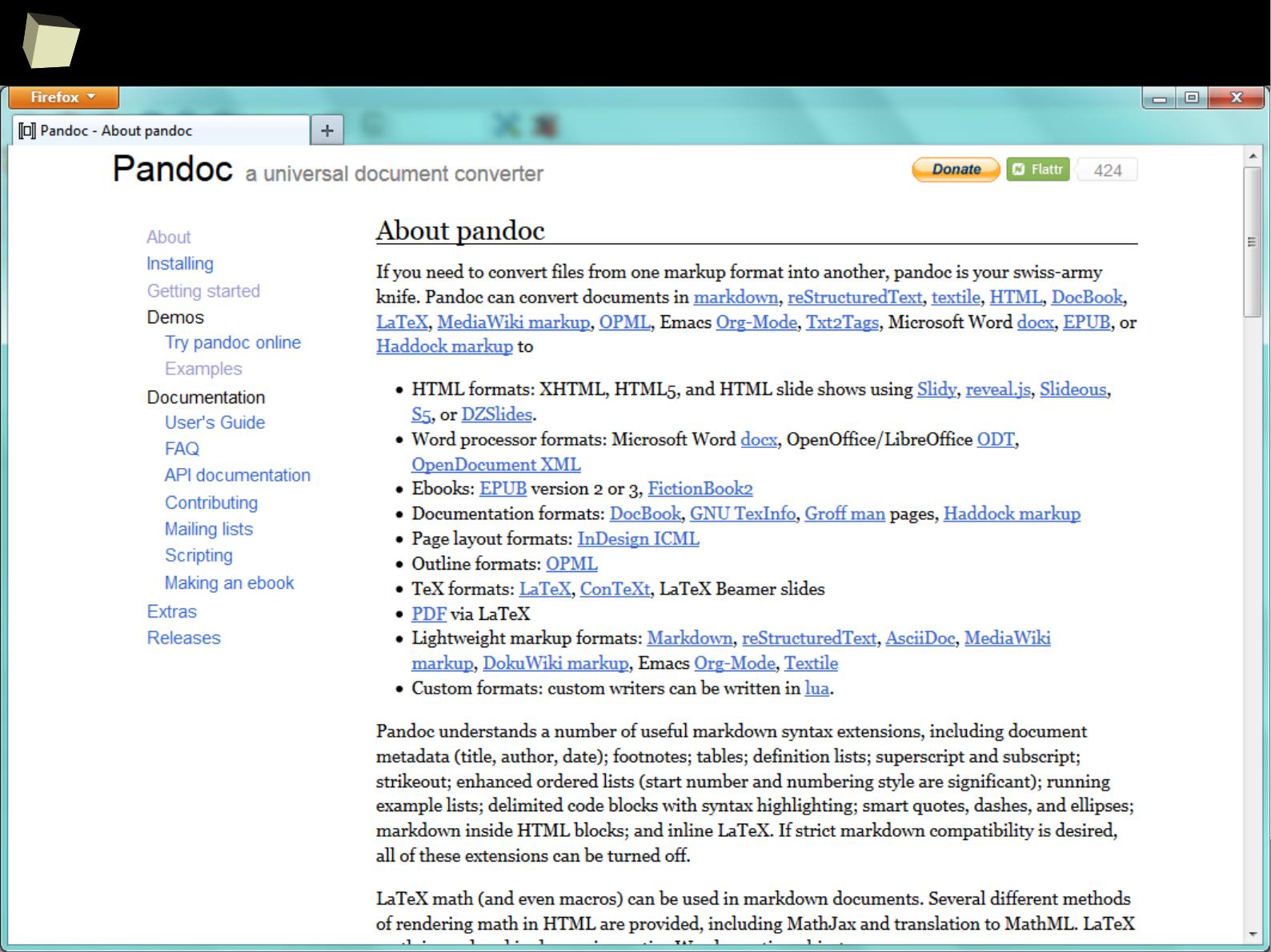

●

HTML files can be easily read by Word and Excel. Tables,

paragraphs, and styles are preserved

●

HTML is convertible to many other formats via pandoc

●

There is a way to obtain multi-sheet Excel workbooks

●

R can also send the results to an external application via WebService

JSON

2

0

1

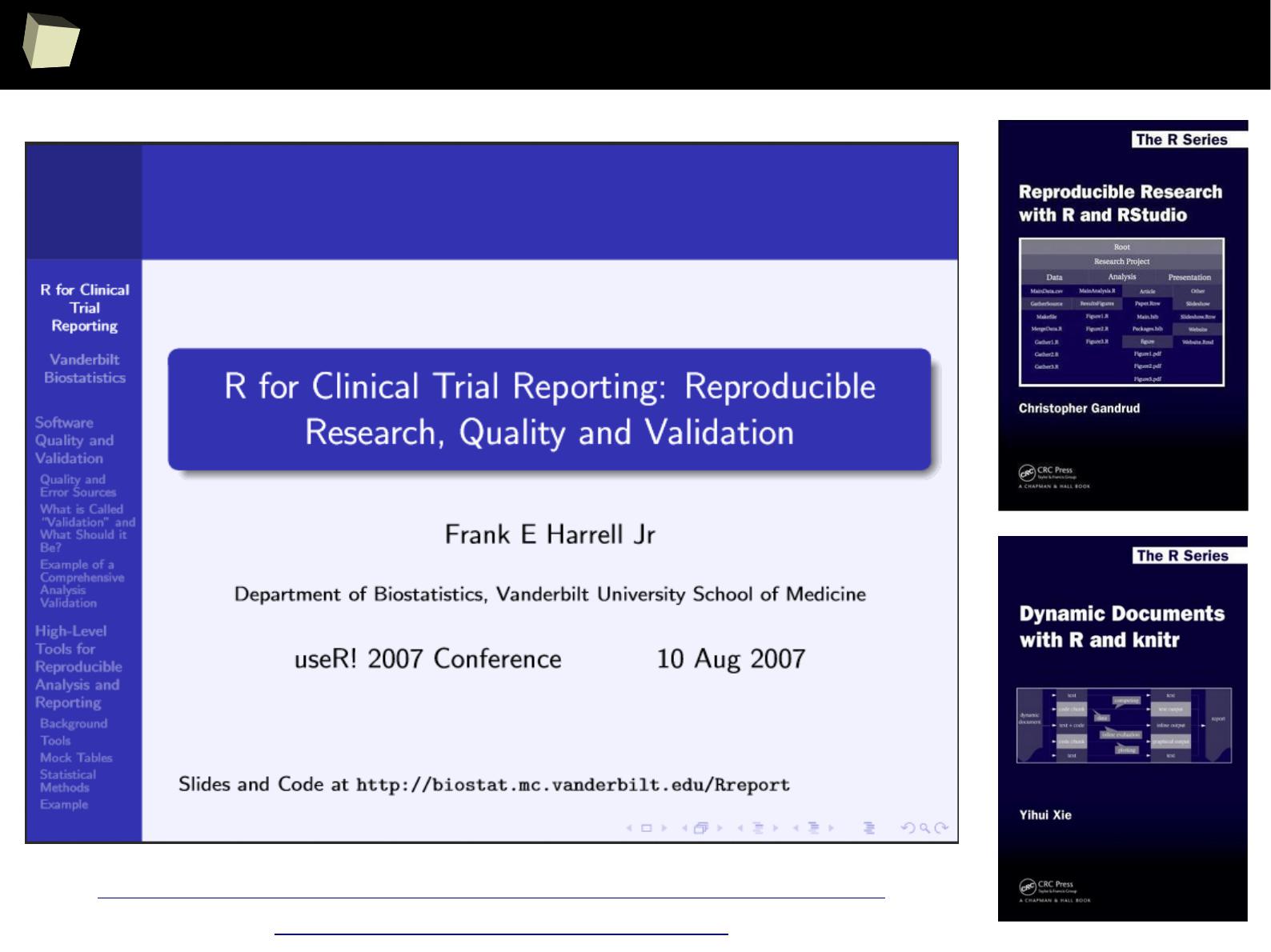

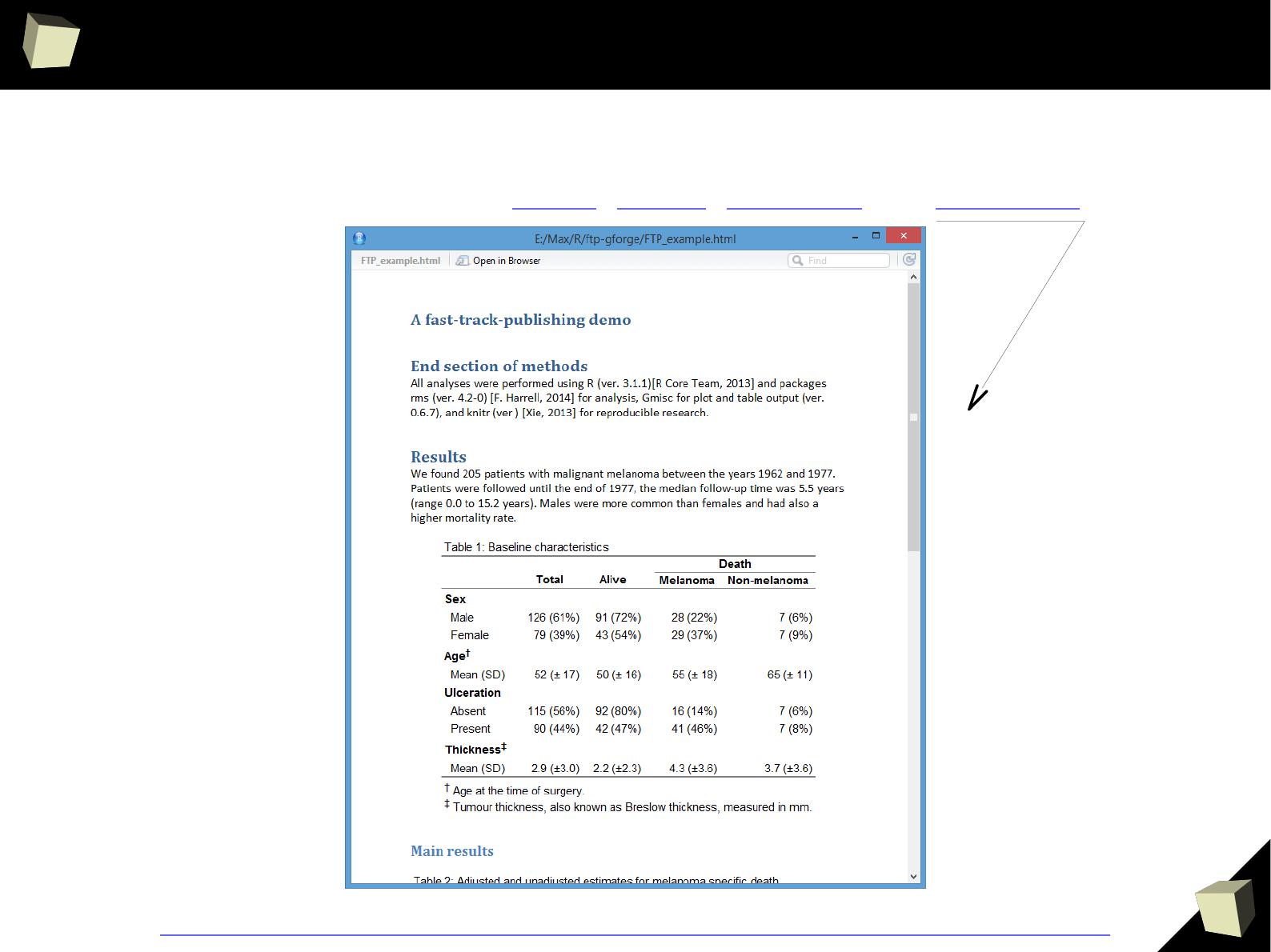

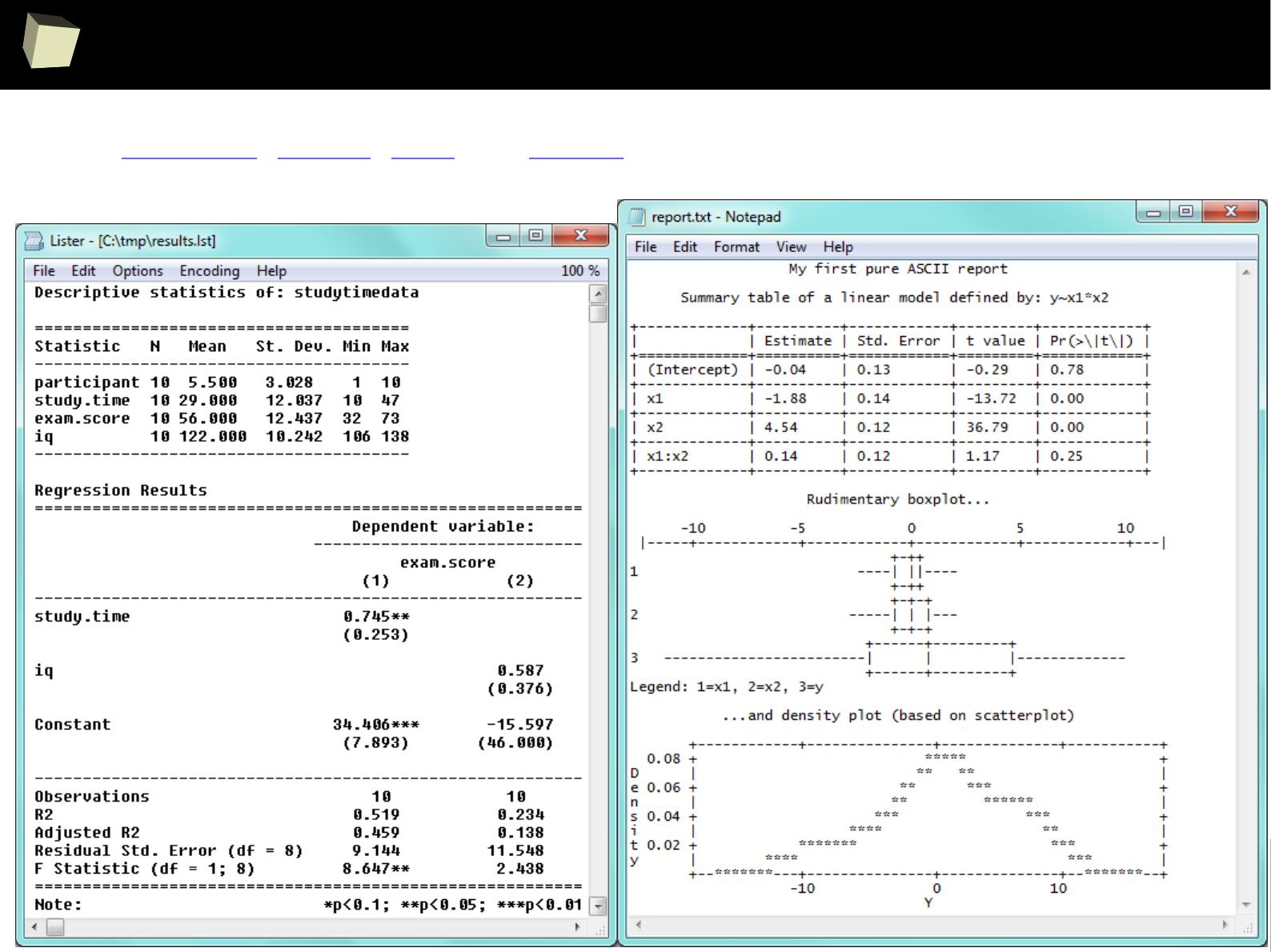

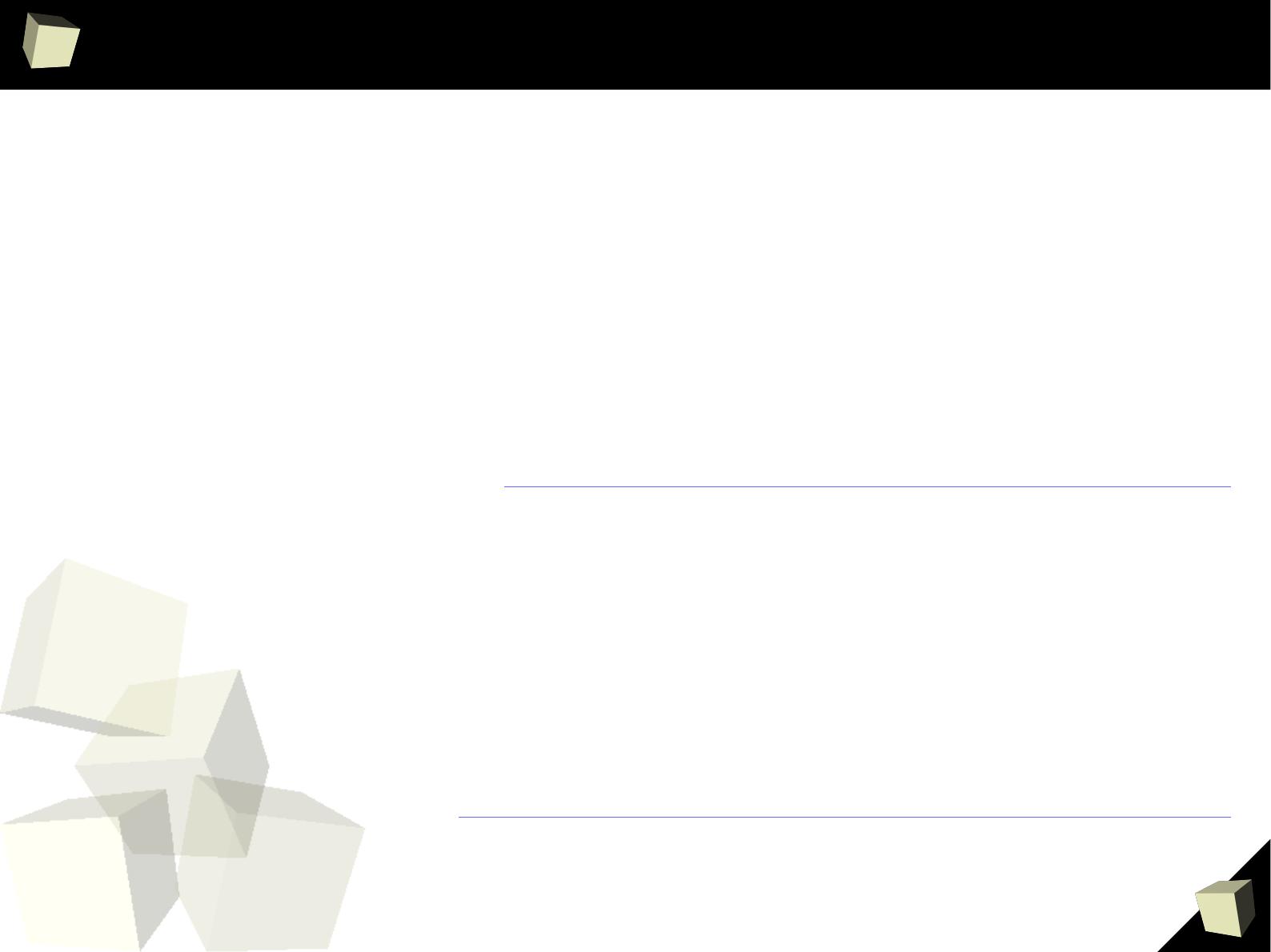

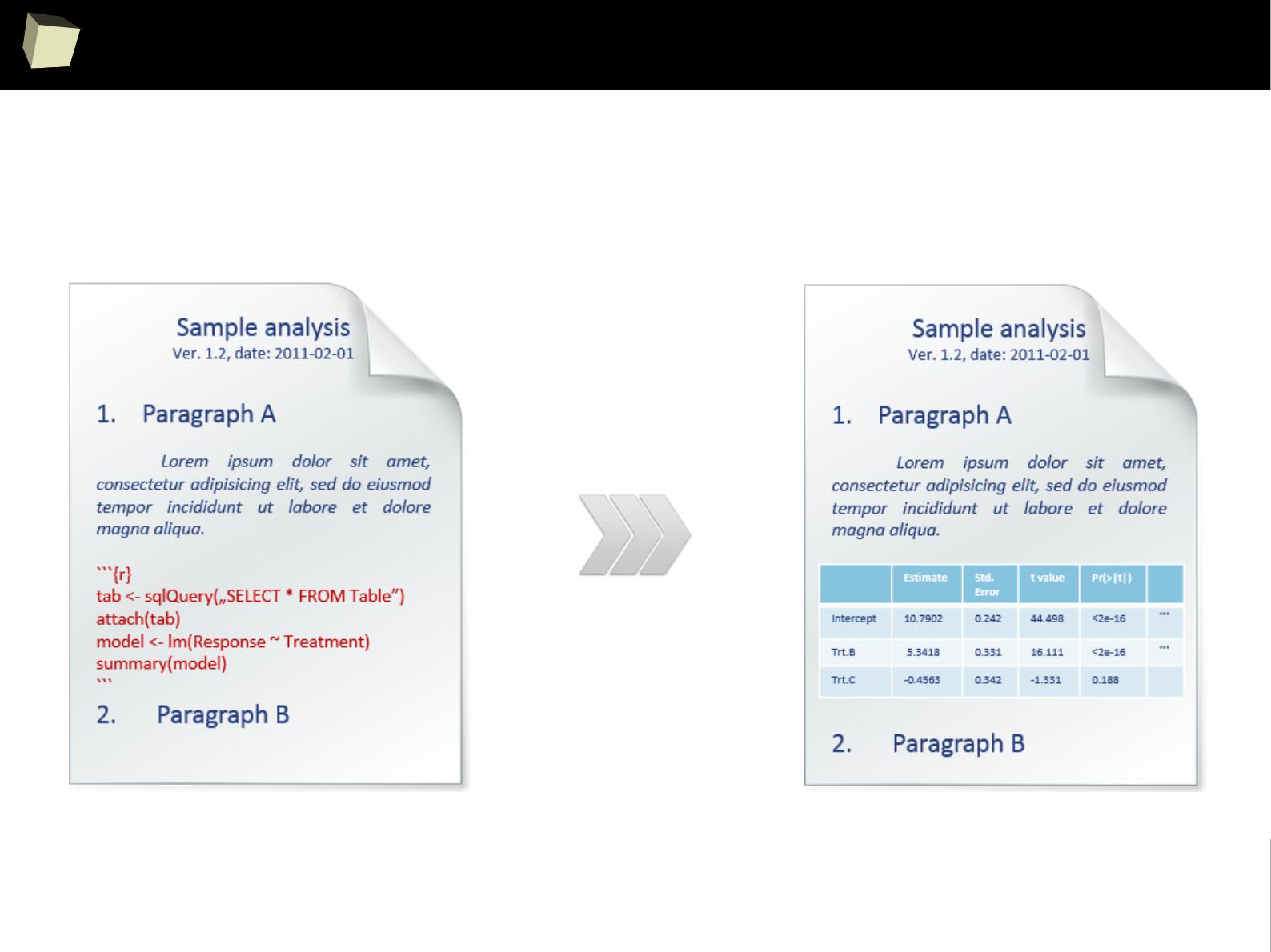

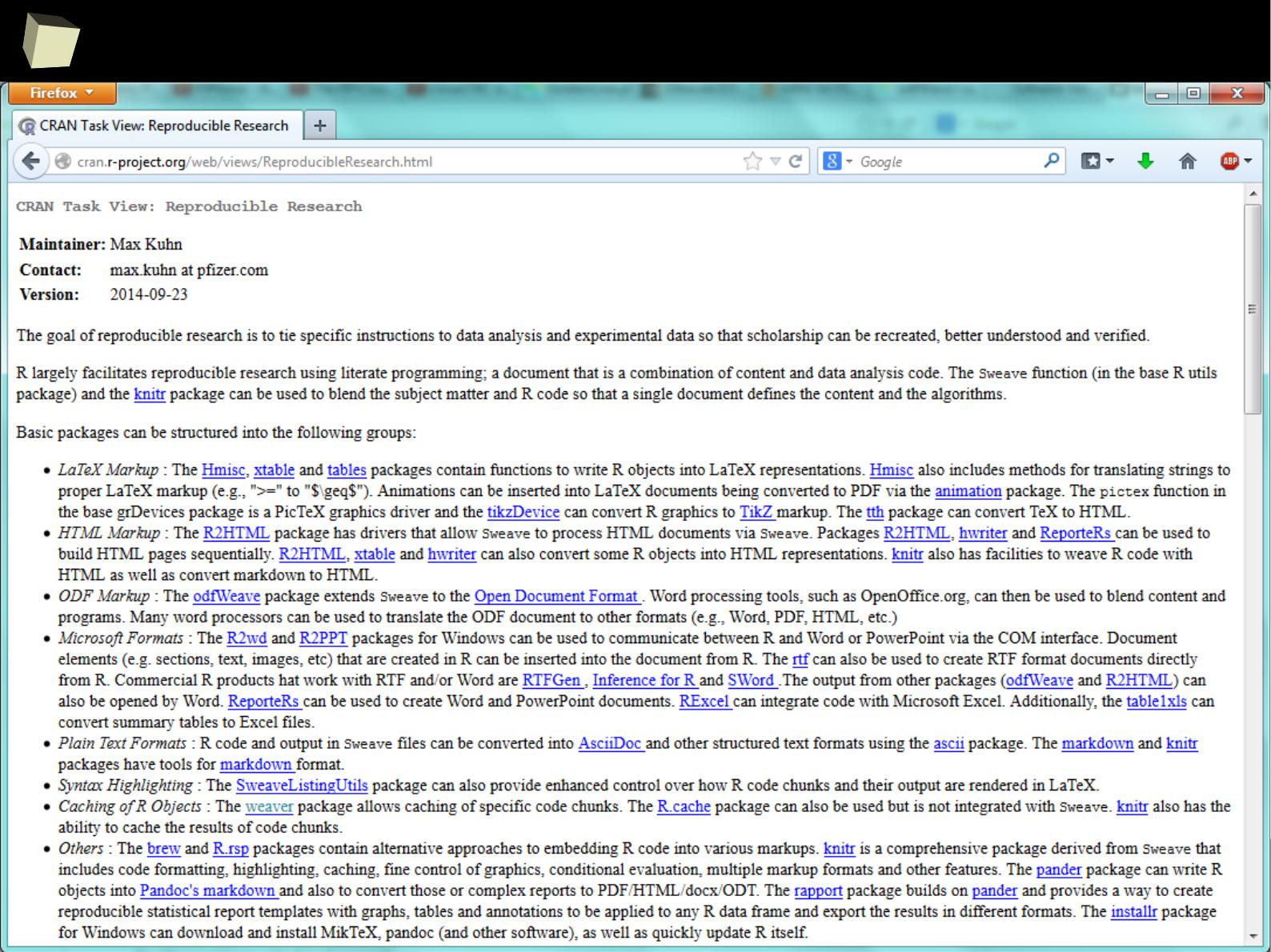

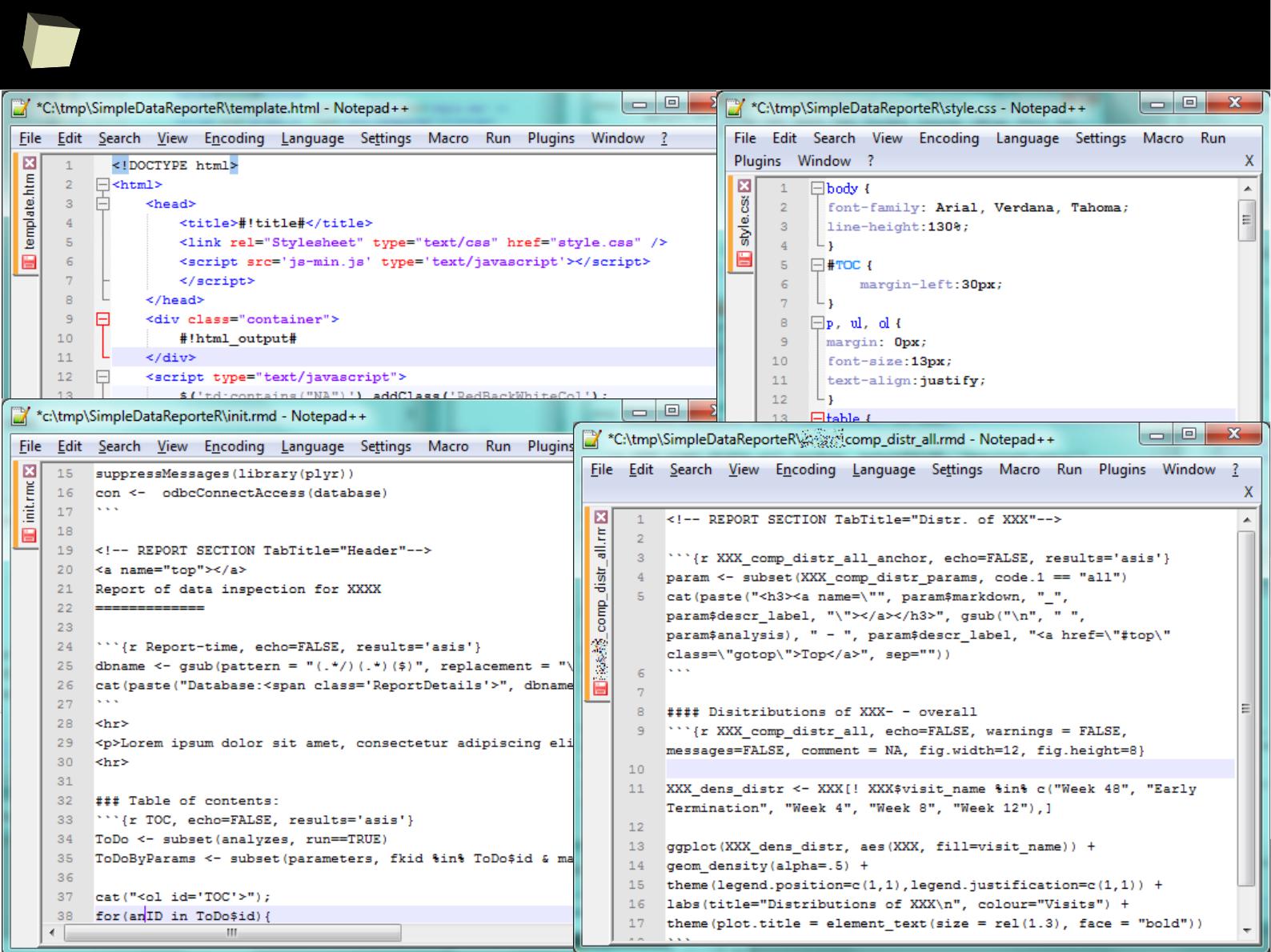

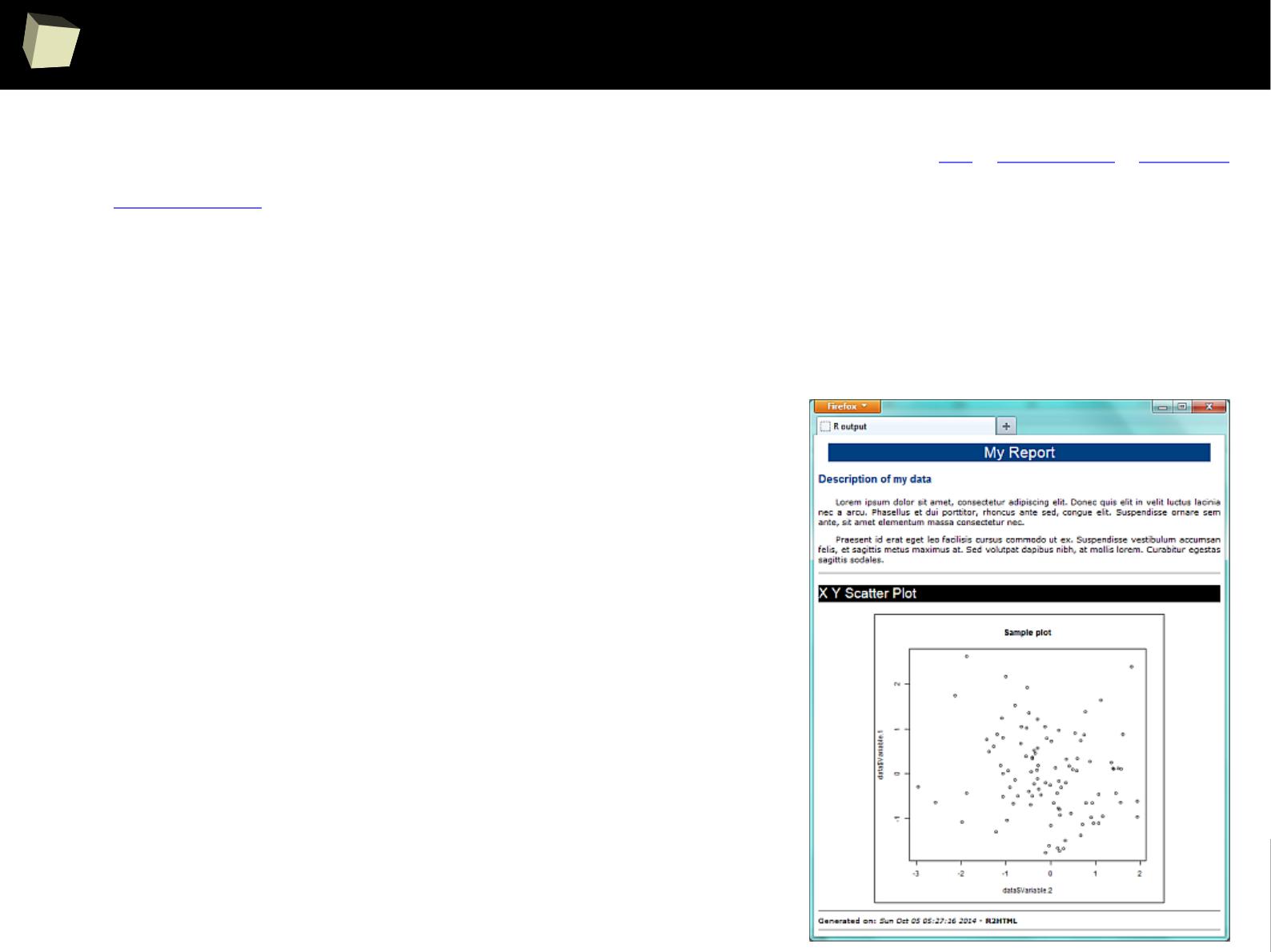

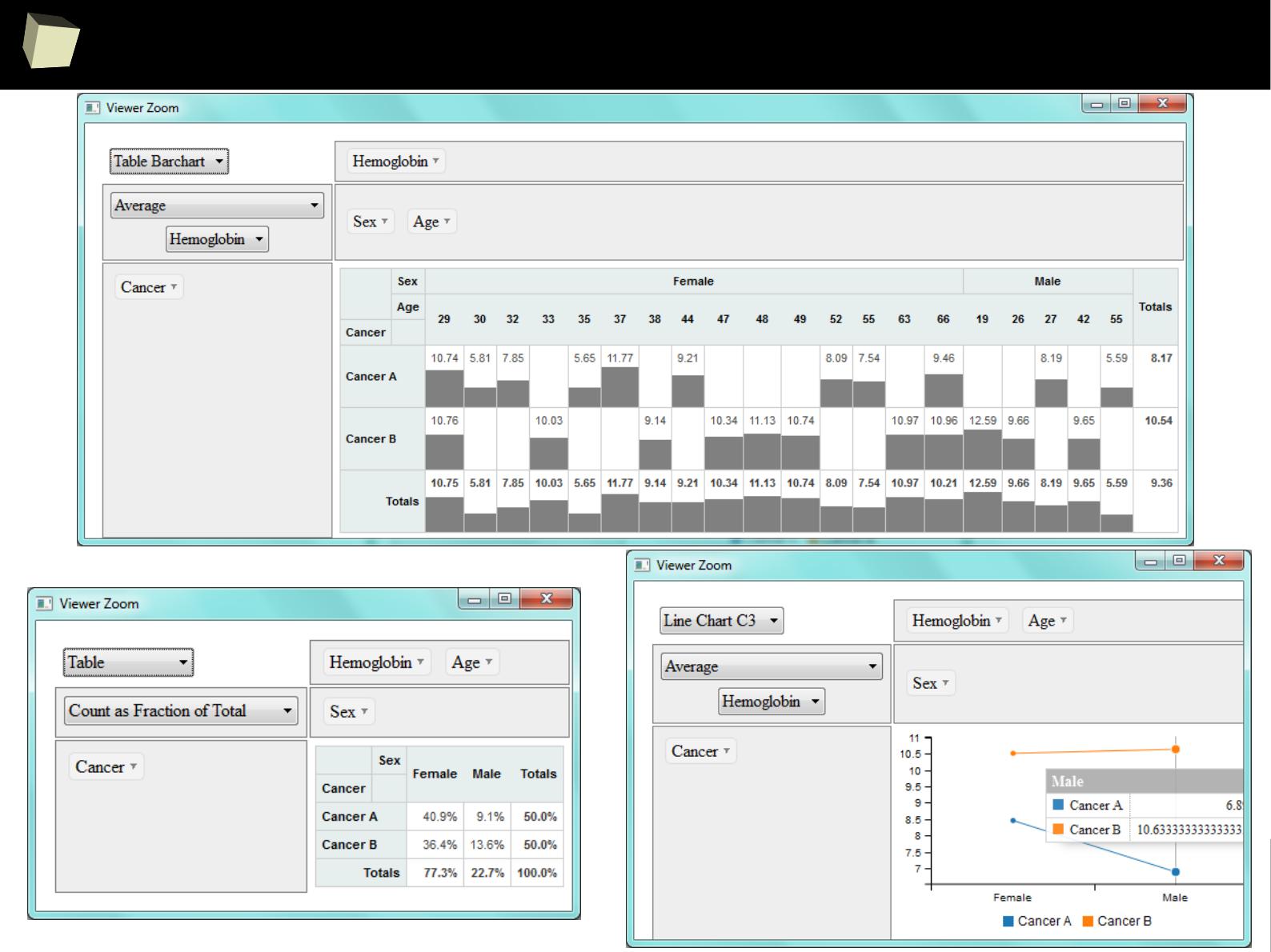

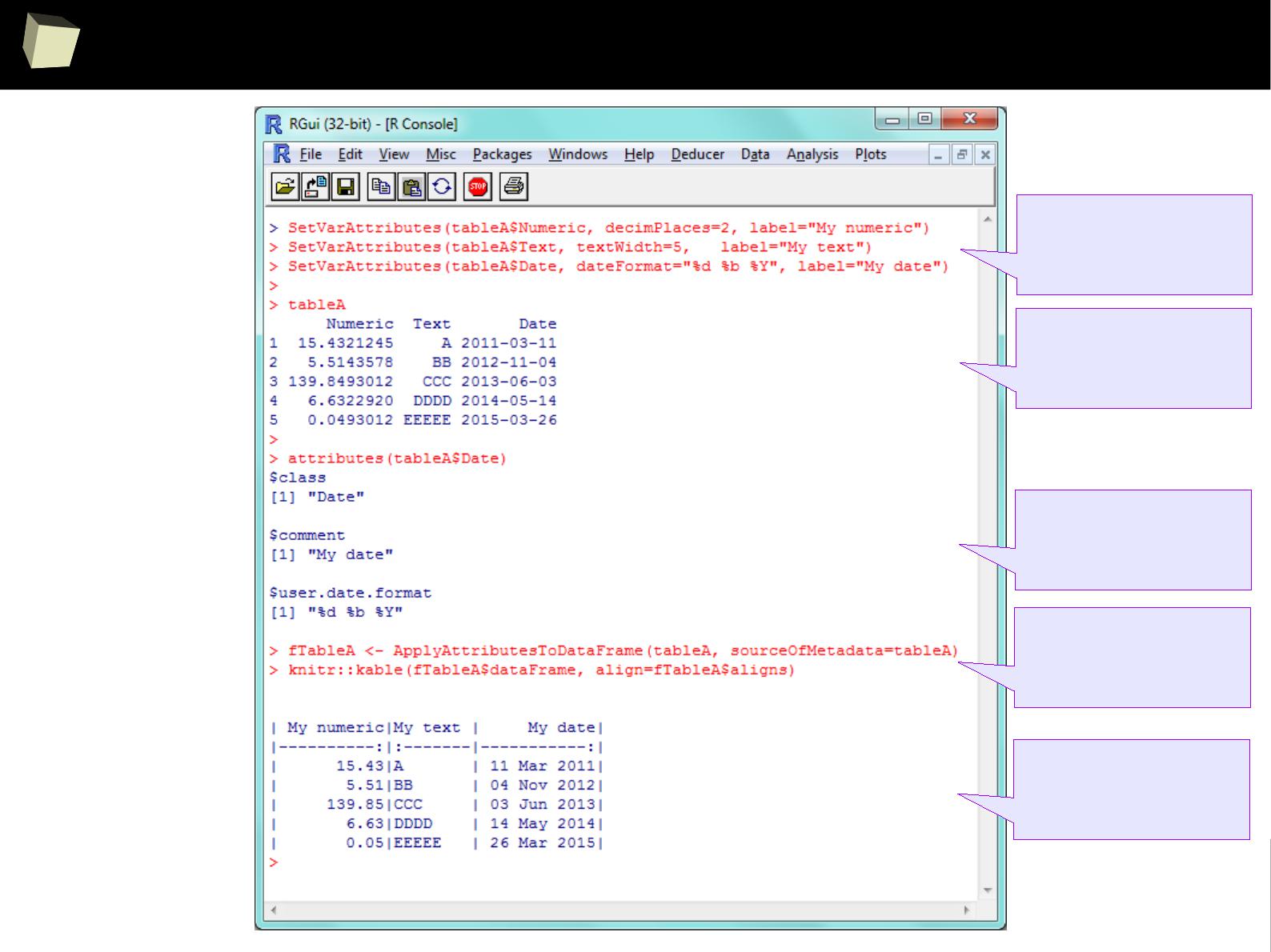

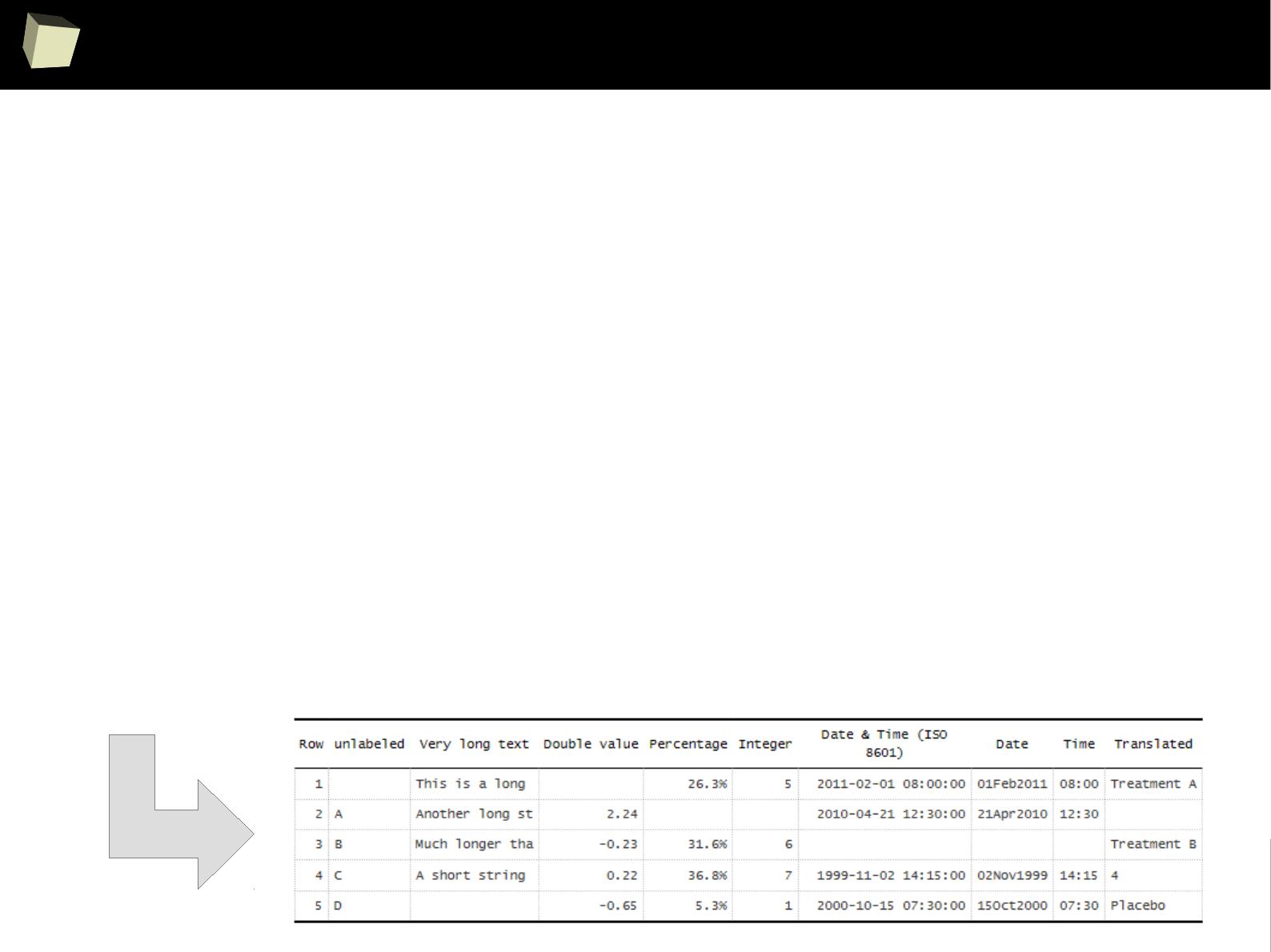

Reproducible Research

Definitions:

●

The goal of reproducible research is to tie specific instructions to data analysis

and experimental data so that scholarship can be recreated, better

understood and verified.

http://cran.r-project.org/web/views/ReproducibleResearch.html

●

The term reproducible research refers to the idea that the ultimate product of

academic research is the paper along with the full computational environment

used to produce the results in the paper such as the code, data, etc. that can

be used to reproduce the results and create new work based on the research.

http://en.wikipedia.org/wiki/Reproducibility#Reproducible_research

2

0

2

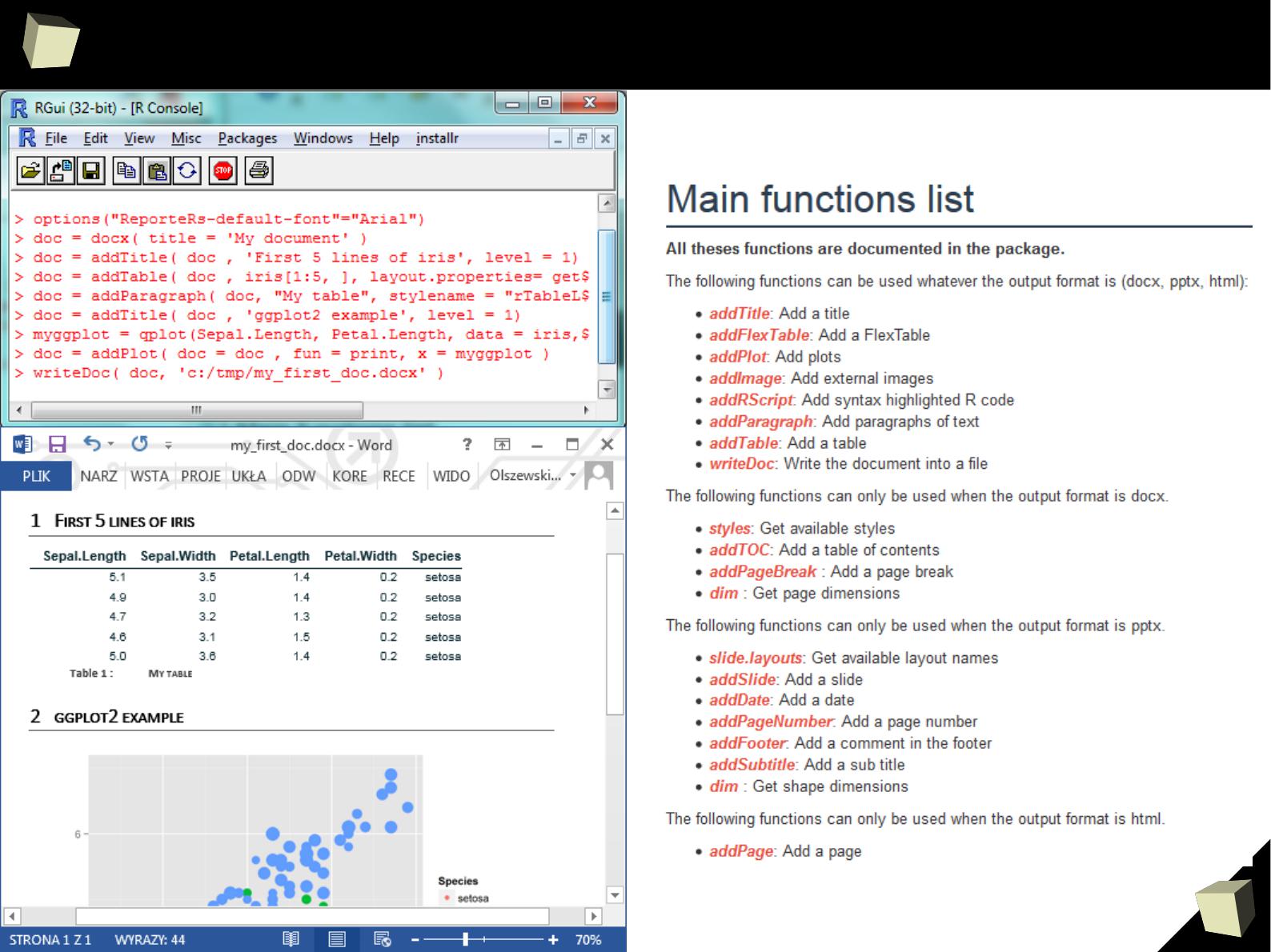

Reproducible Research

In simply words, documents are created from templates containing presentation

code (Markdown, HTML, LaTeX) or formatted paragraphs (MS Word, Open

Document, RTF) mixed with chunks of R code.

After the template is processed, chunks of R code are replaced with the result

they produce.

With this approach one can forget about continuous copying and pasting objects

(charts and tables) from the statistical package into a word processor.

Such documents are self-contained in that, they are capable to perform alone all

the tasks required to complete rendering:

●

setup the environment (install required packages, apply configuration),

●

read data from the indicated sources or generate artificial datasets

●

analyze the retrieved data and produce output objects (tables, graphics)

2

0

3

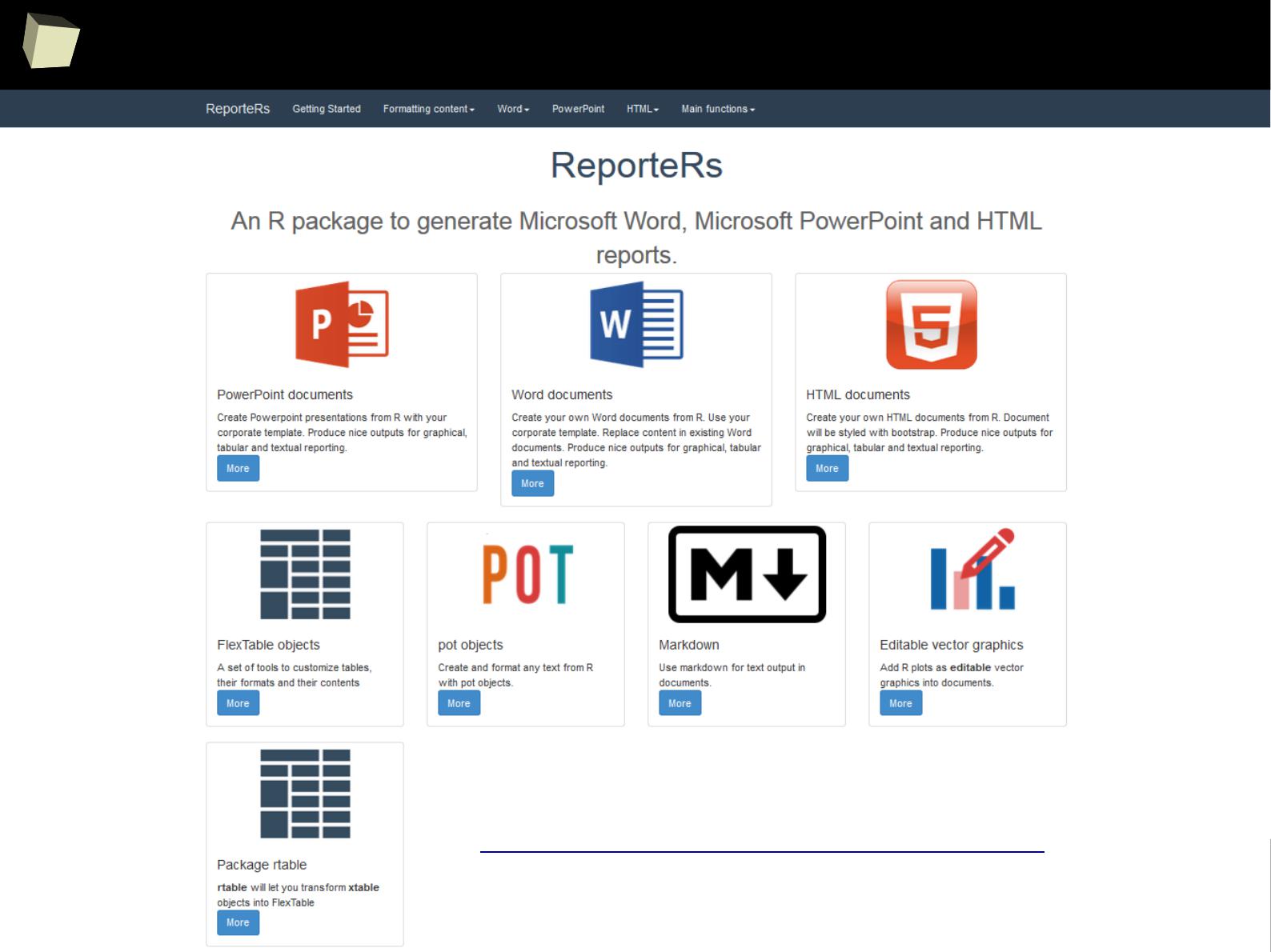

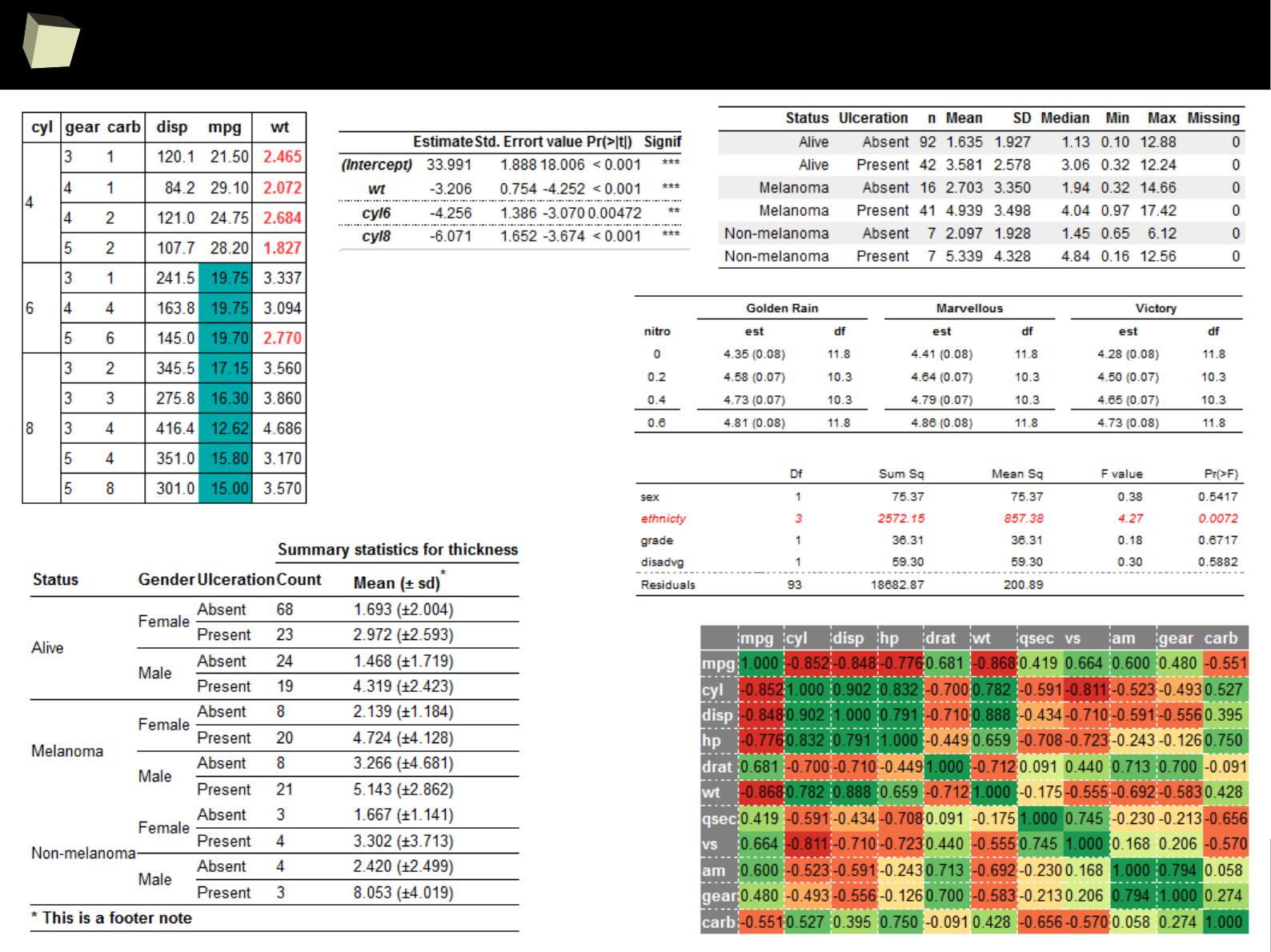

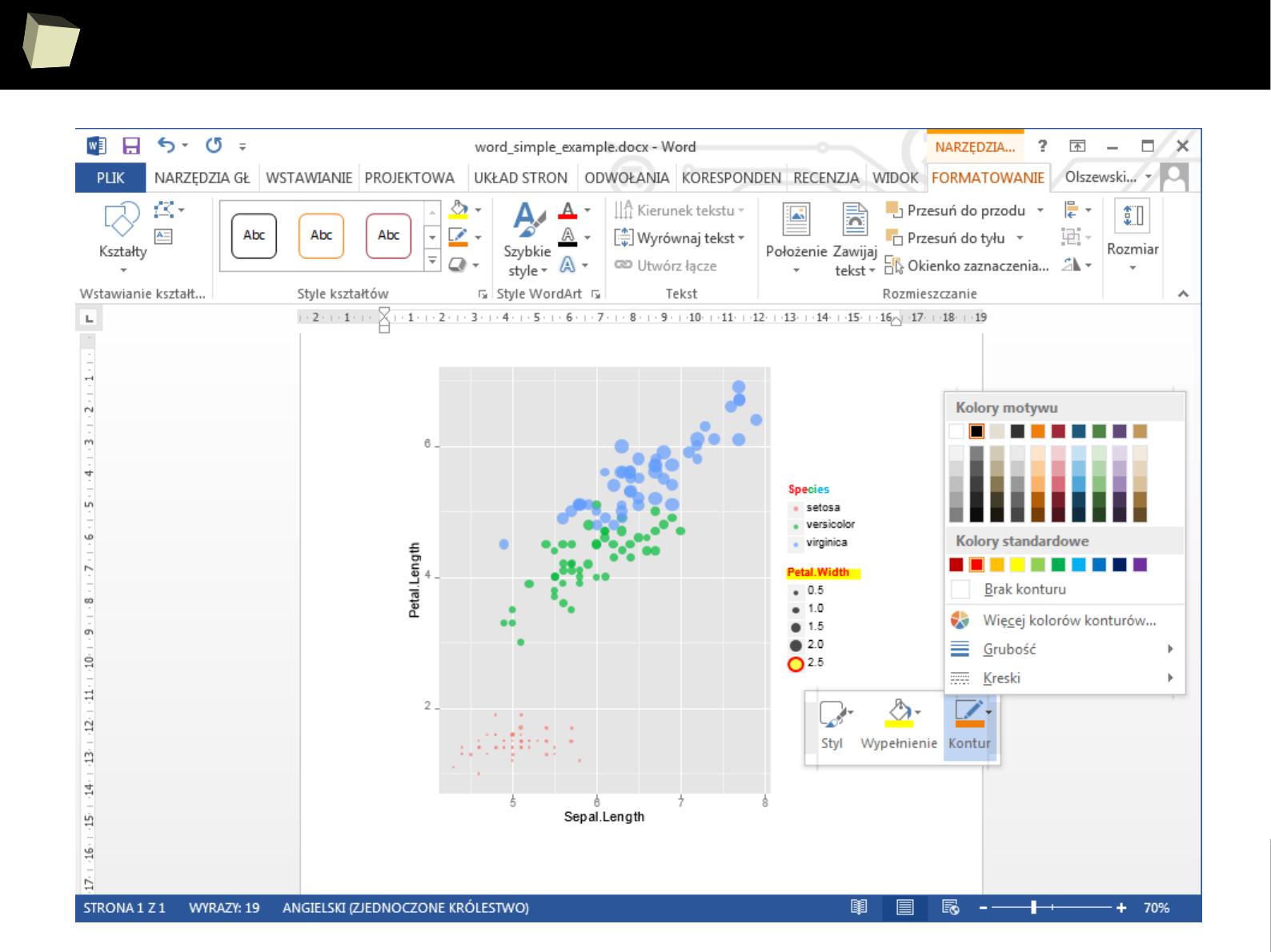

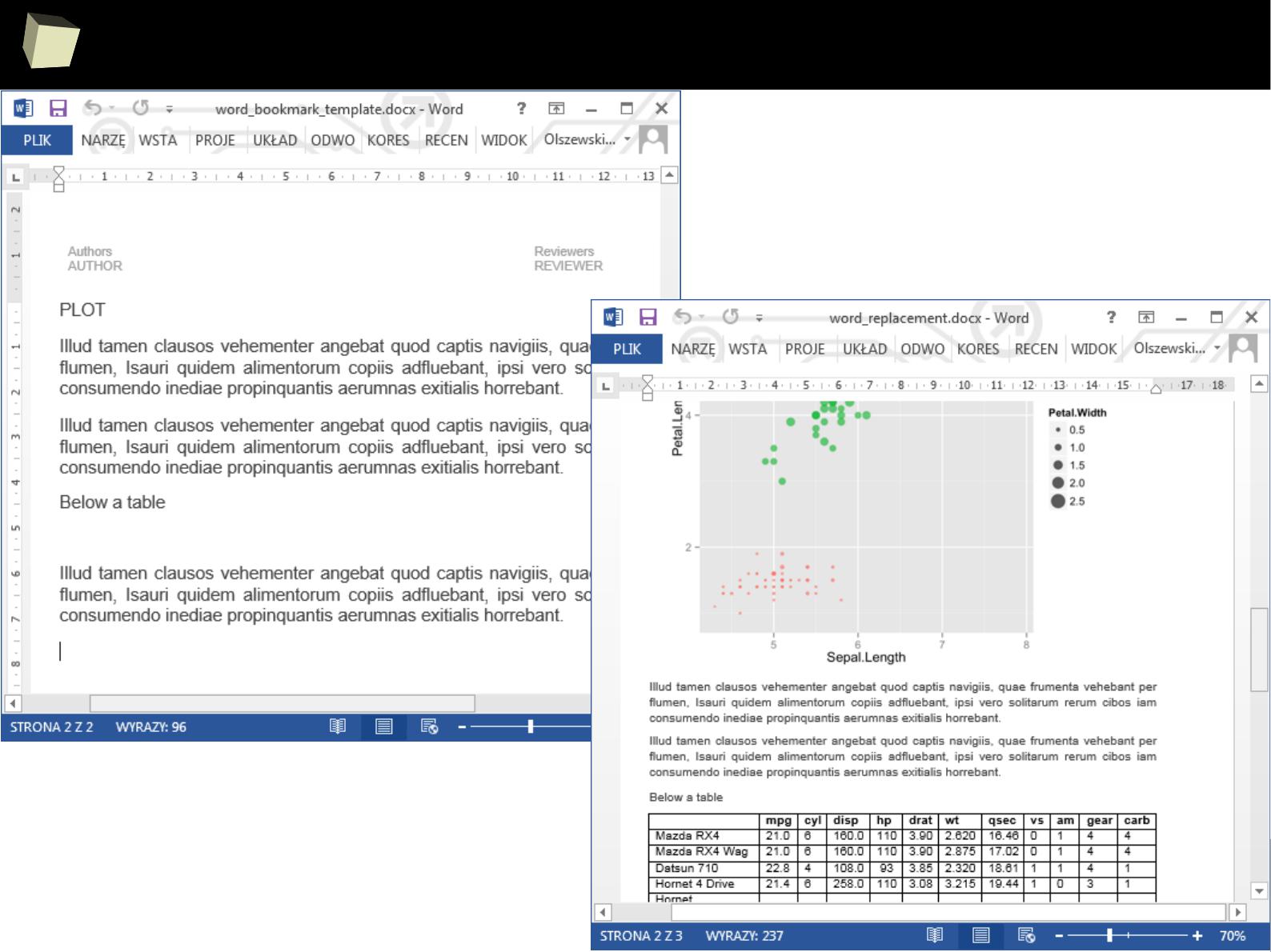

Reproducible Research

●

Knitr + pandoc

●

Sweave

●

OdfWeave

●

ReporteRs

2

0

4

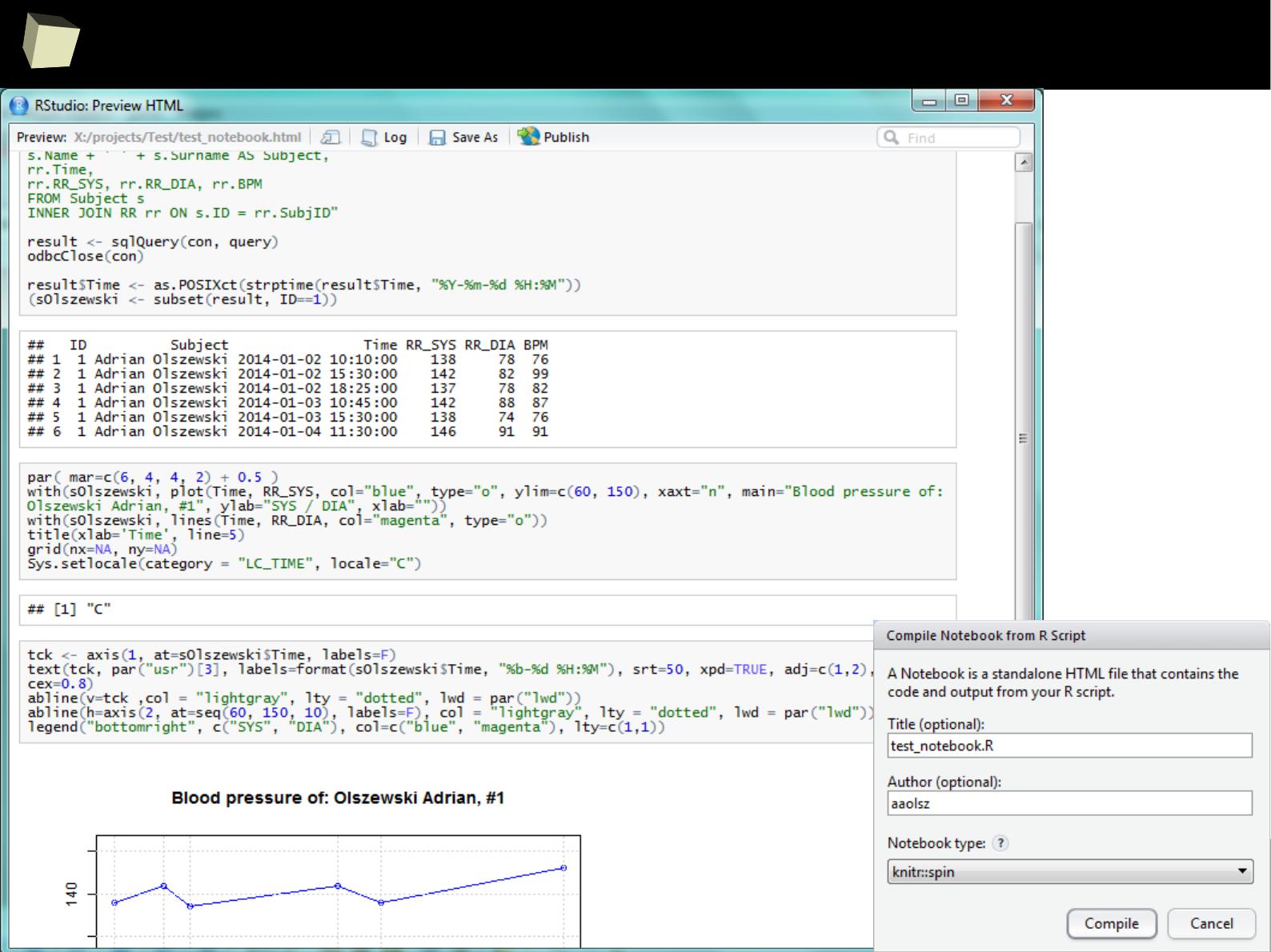

Reproducible Research

2

0

6

Reproducible Research

2

0

7

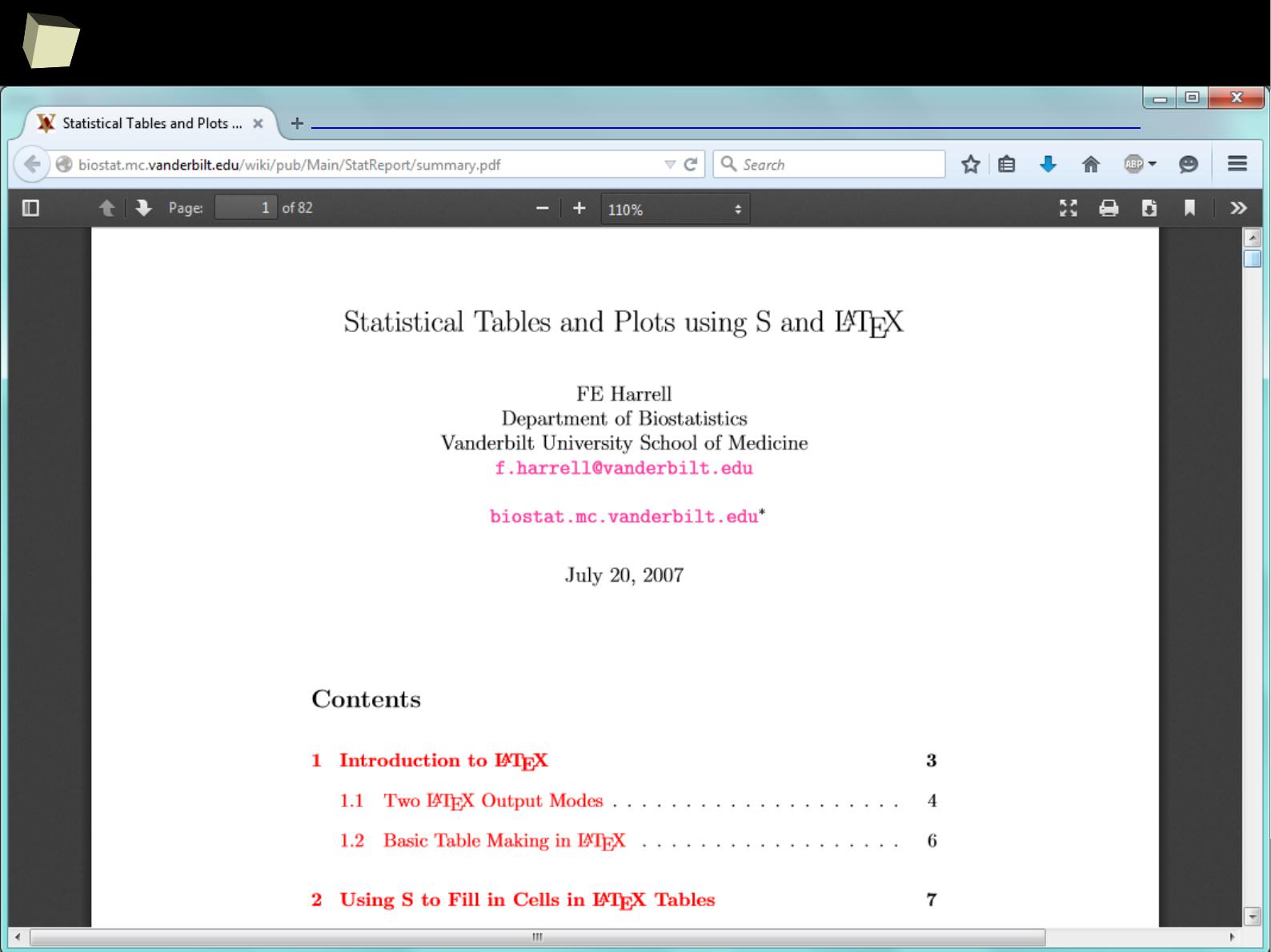

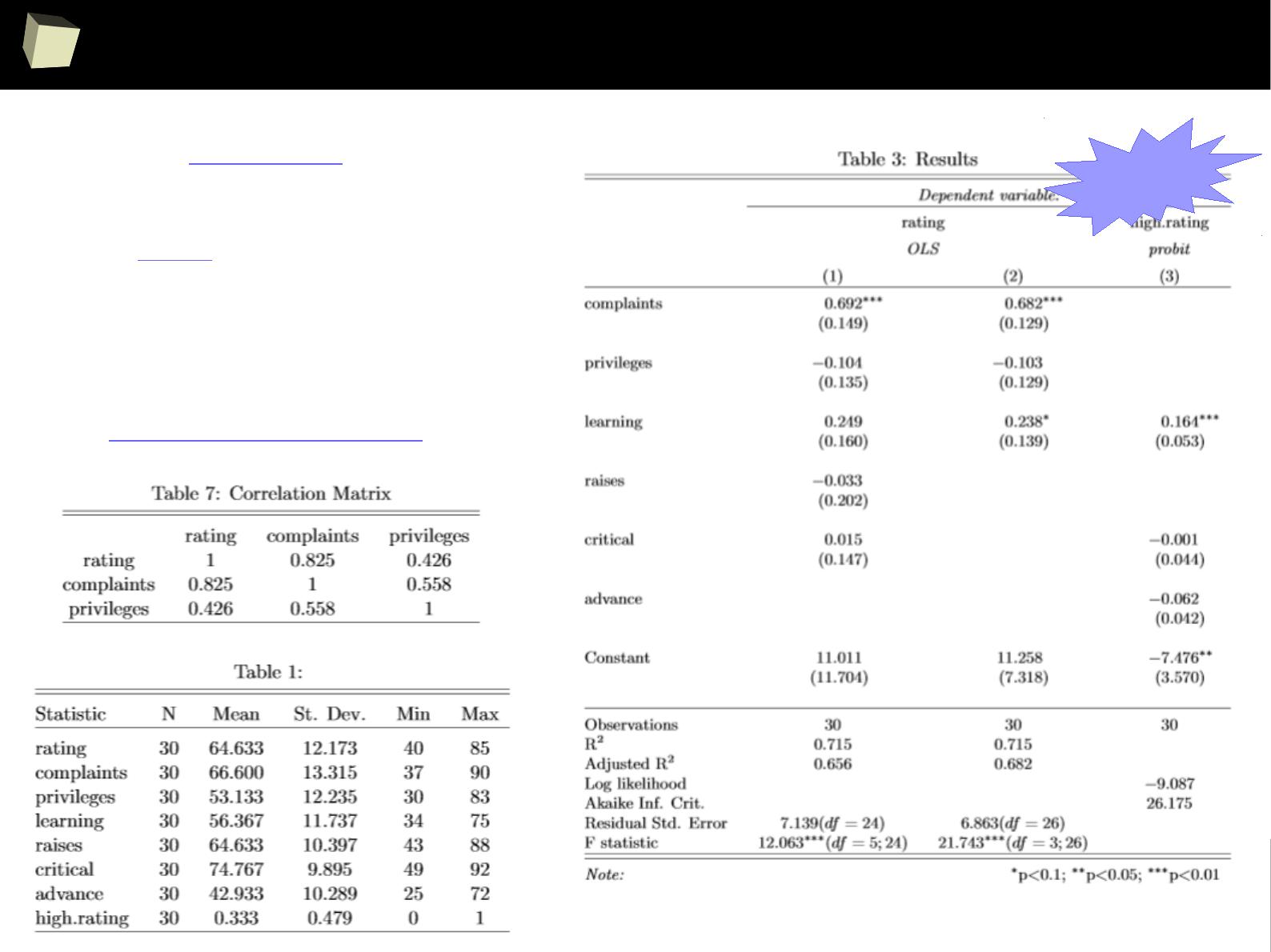

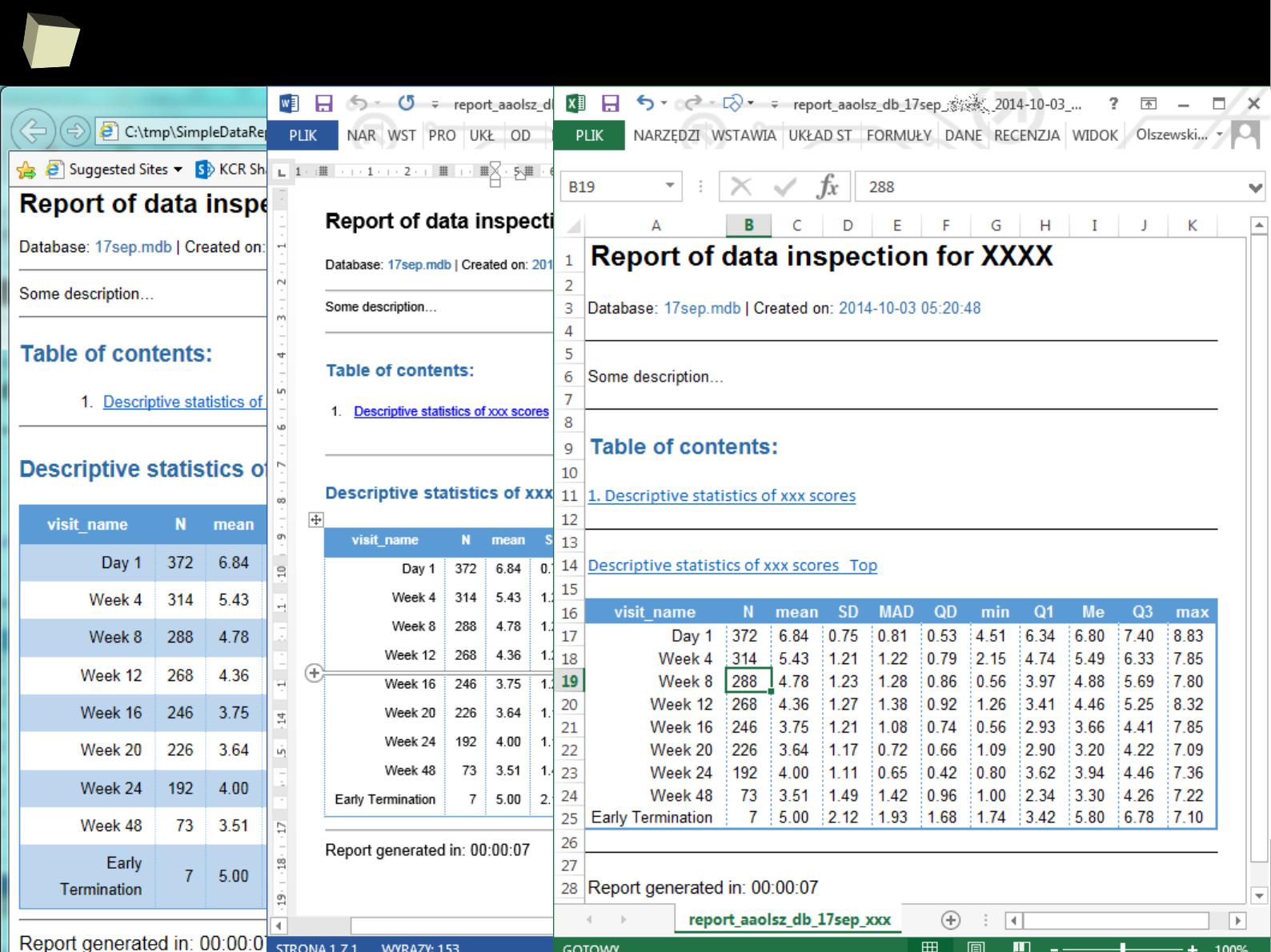

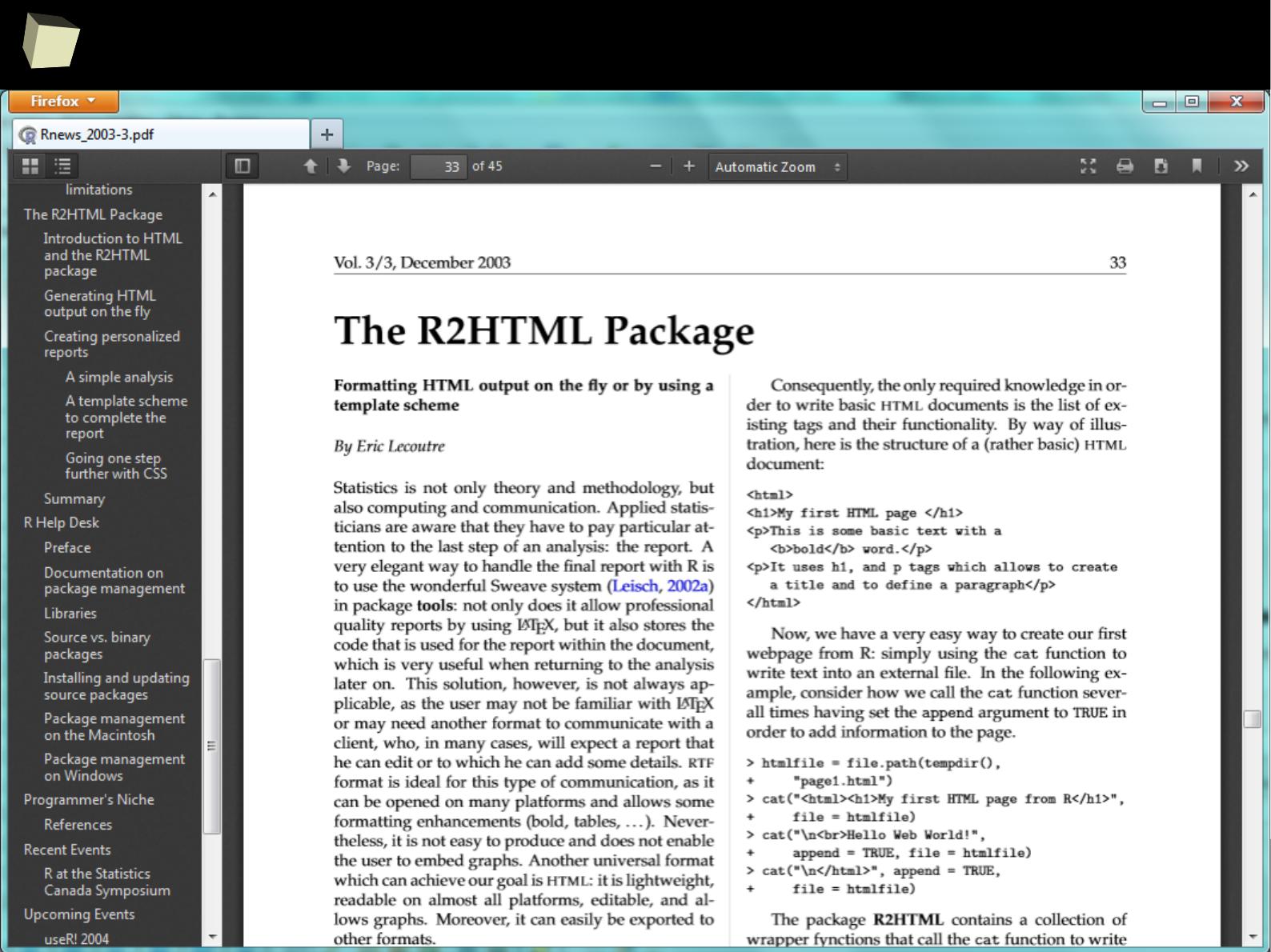

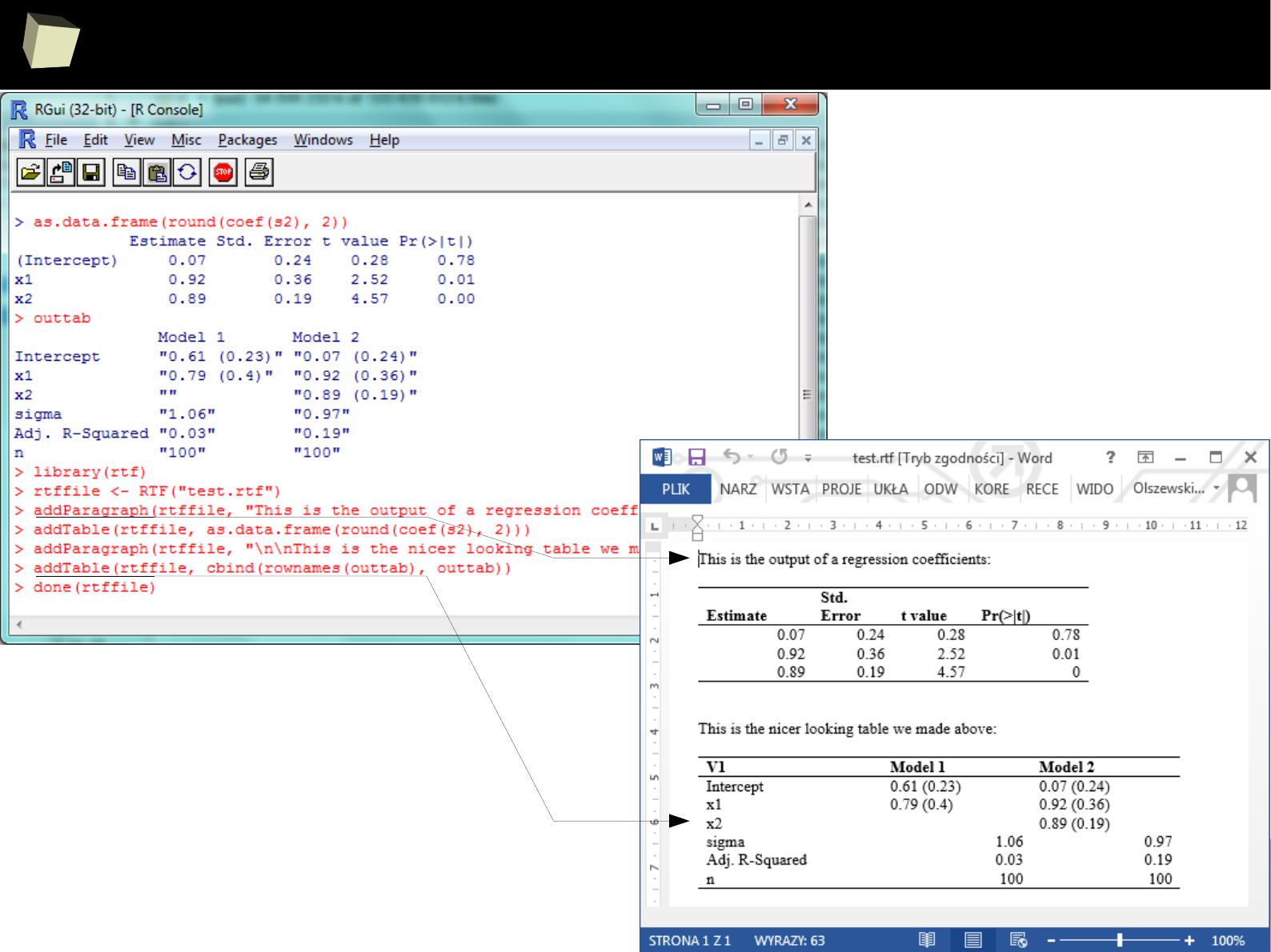

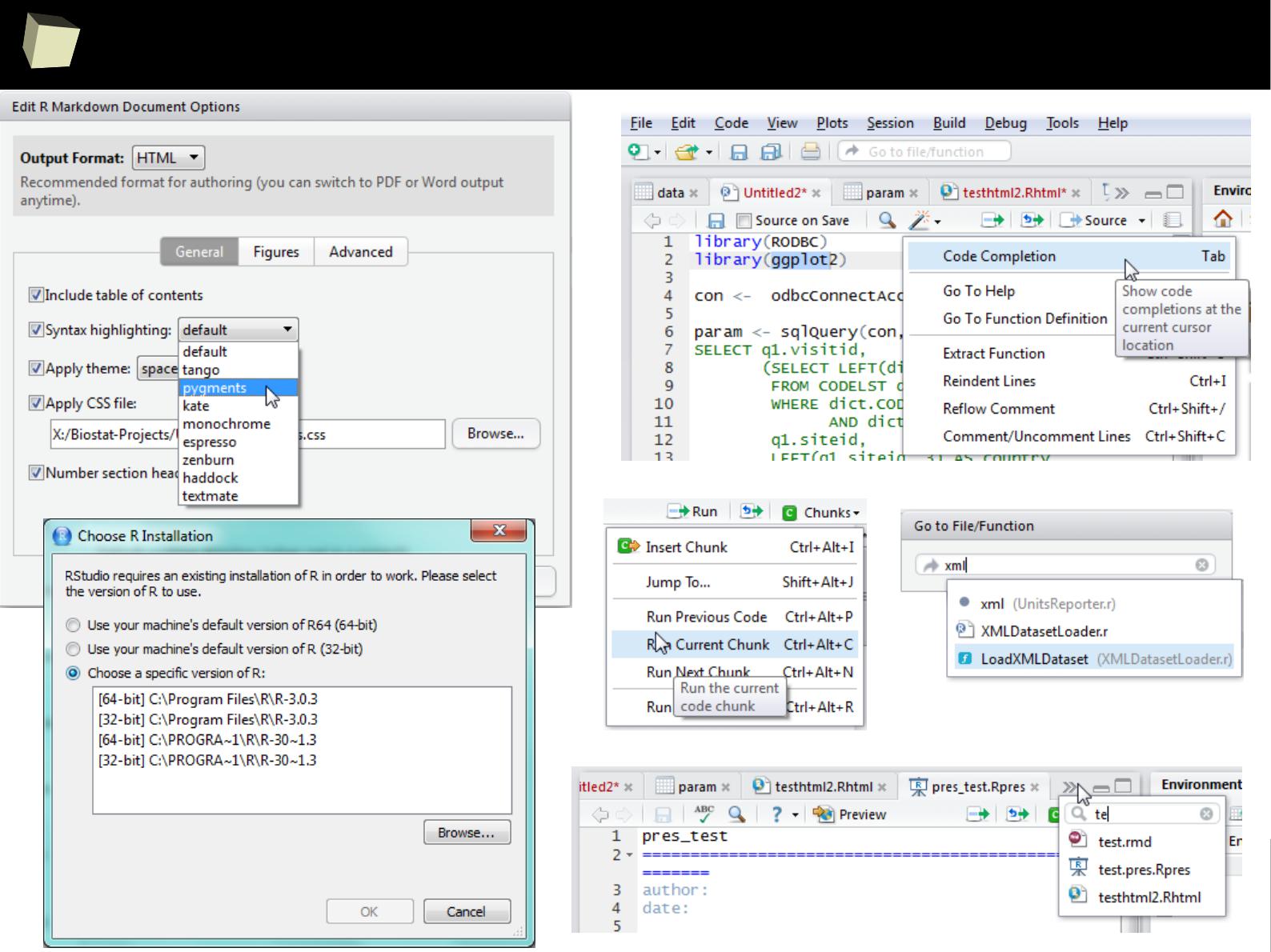

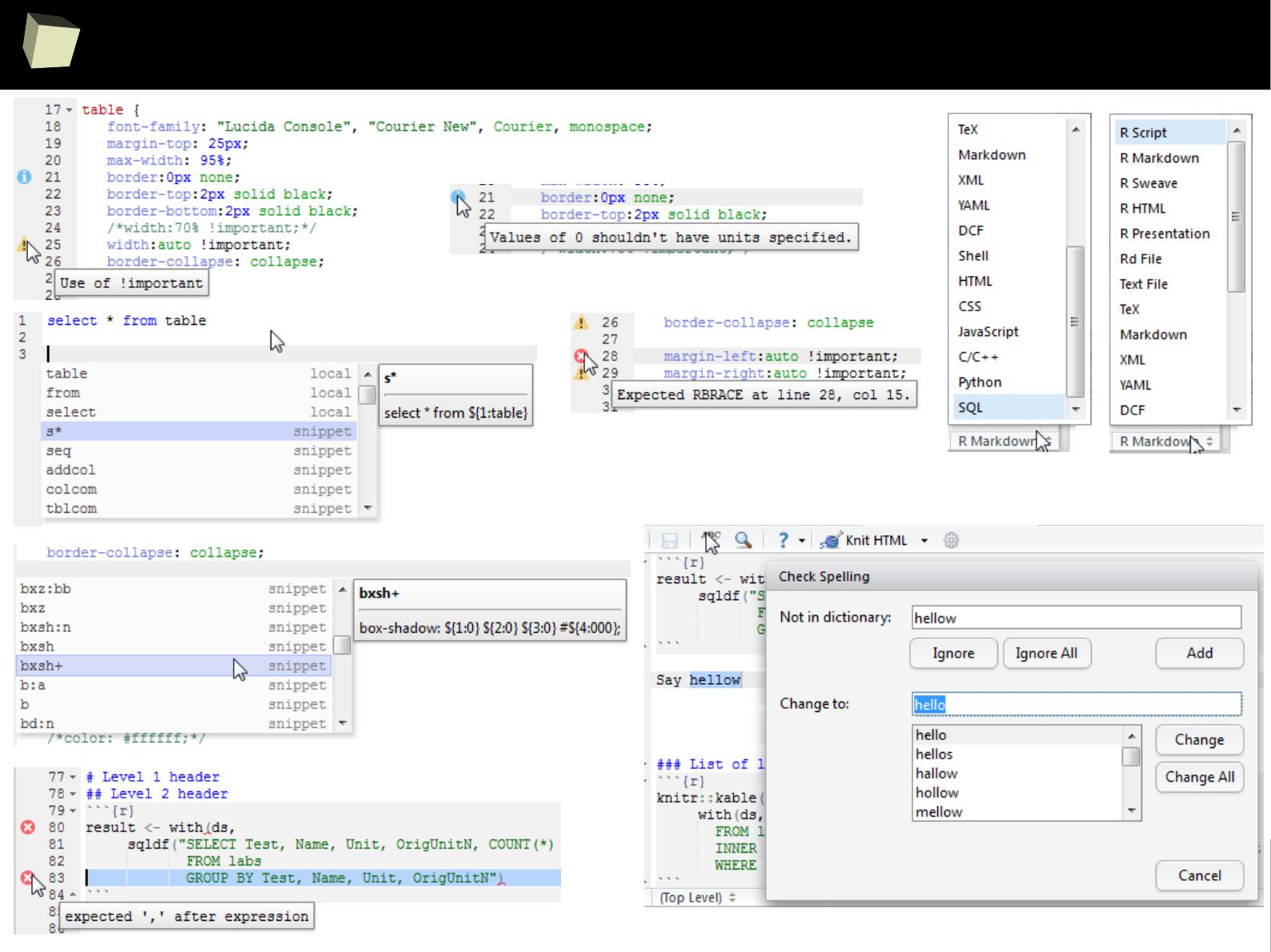

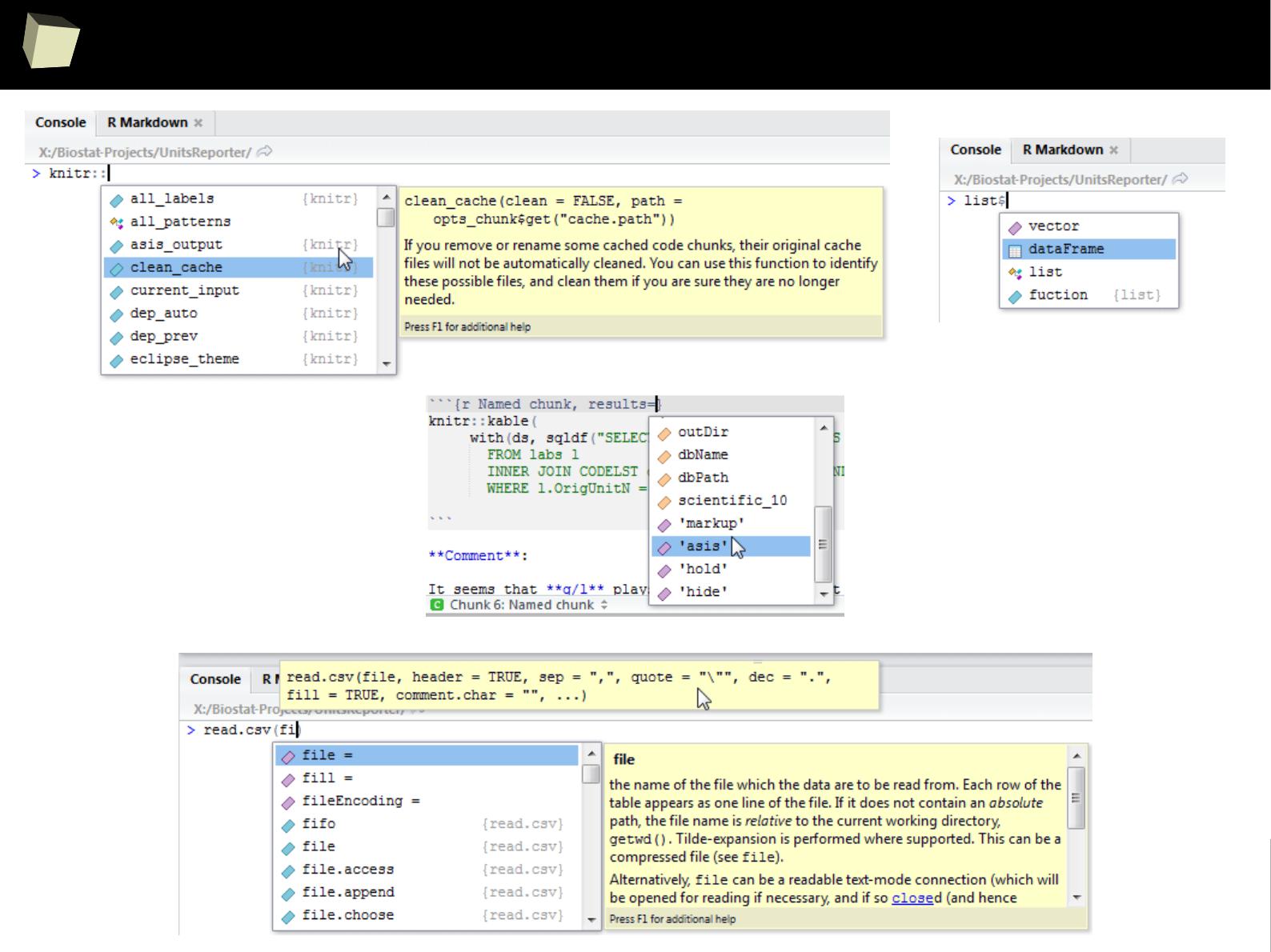

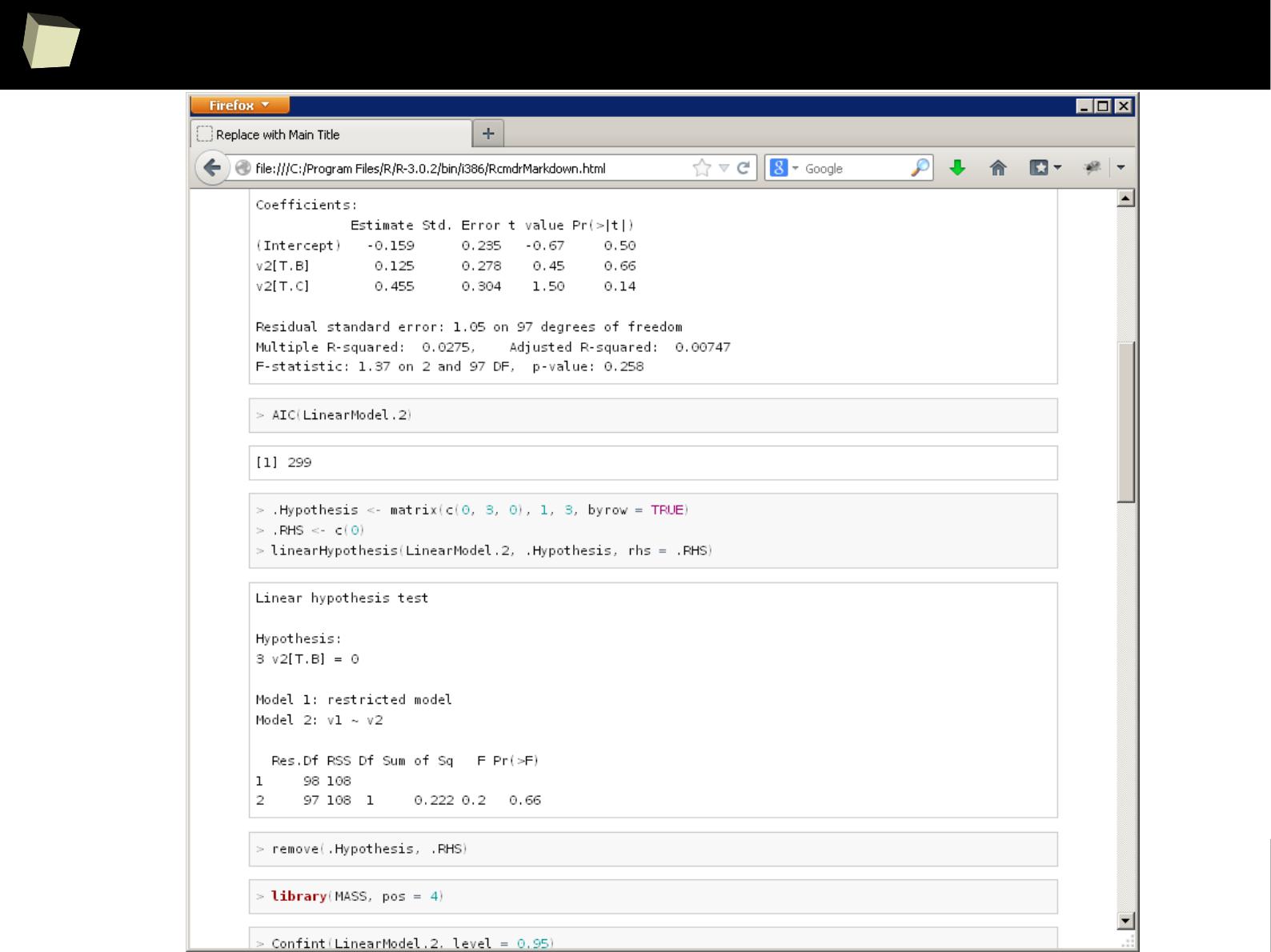

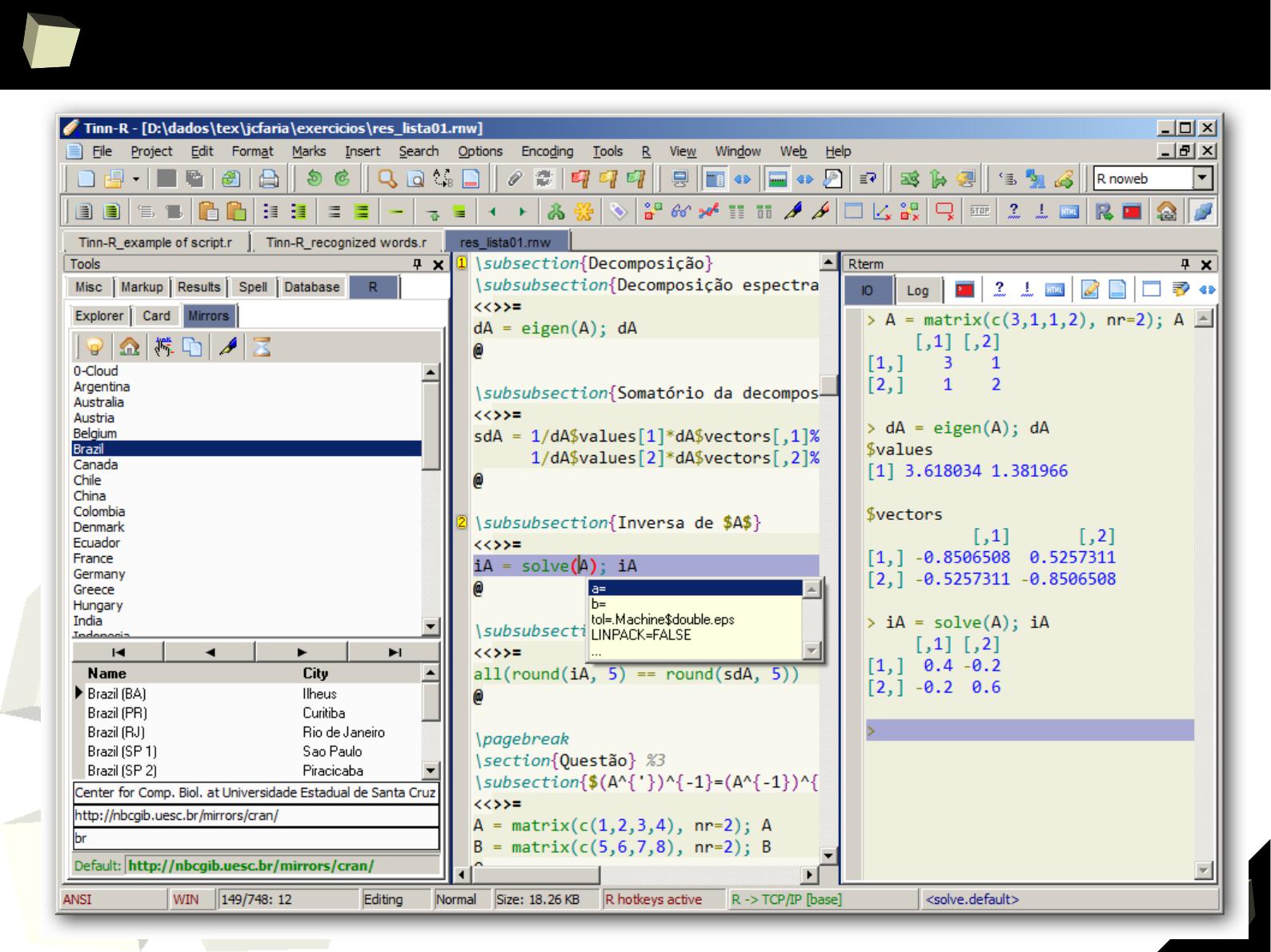

Reproducible Research – knitr and Sweave

With HTML, Latex, R Markdown, xtable and CSS processed by Sweave or knitr

and (optionaly) pandoc, one can produce complex, professionally looking and

reproducible reports in various formats, e.g. DOCX, ODT, HTML, RTF and PDF.

This R-scripts-driven approach might seem a bit complicated, but it gives the

user maximum flexibility and control over how the final product will look like.

HTML and CSS WYSIWYG editors are useful here.

Chunks of R code may be saved into named sub-reports for later reuse in many

places in the template. They can be turned on/off depending on the result of a

conditional expression.

Produced HTML file can be displayed in a web browser, opened directly in a

word processor or spreadsheet or converted to another format (DOCX, PDF).

This is a perfect tool for automated generation of reports or record the flow of

analyses (= R code along with results).

2

0

8

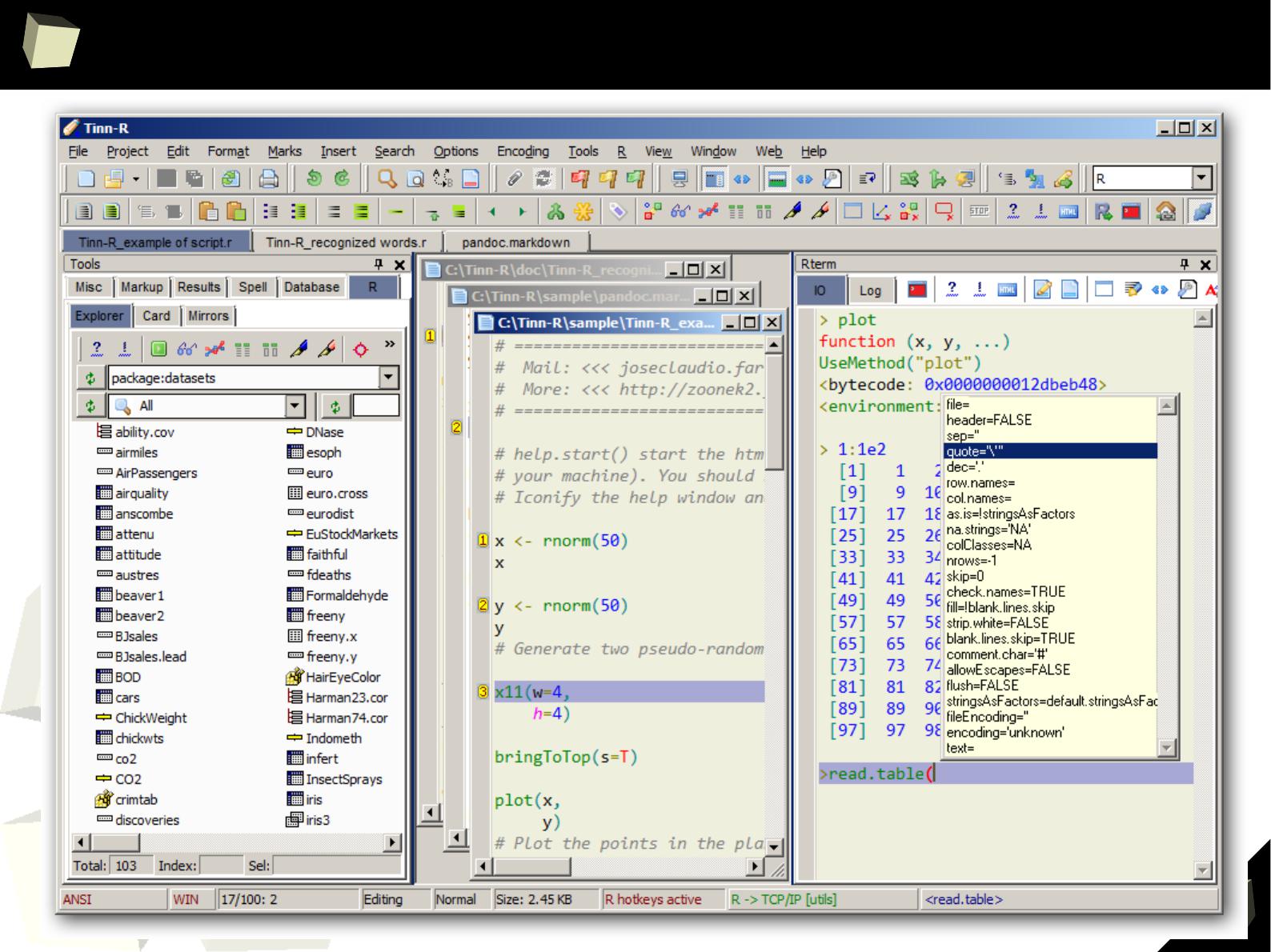

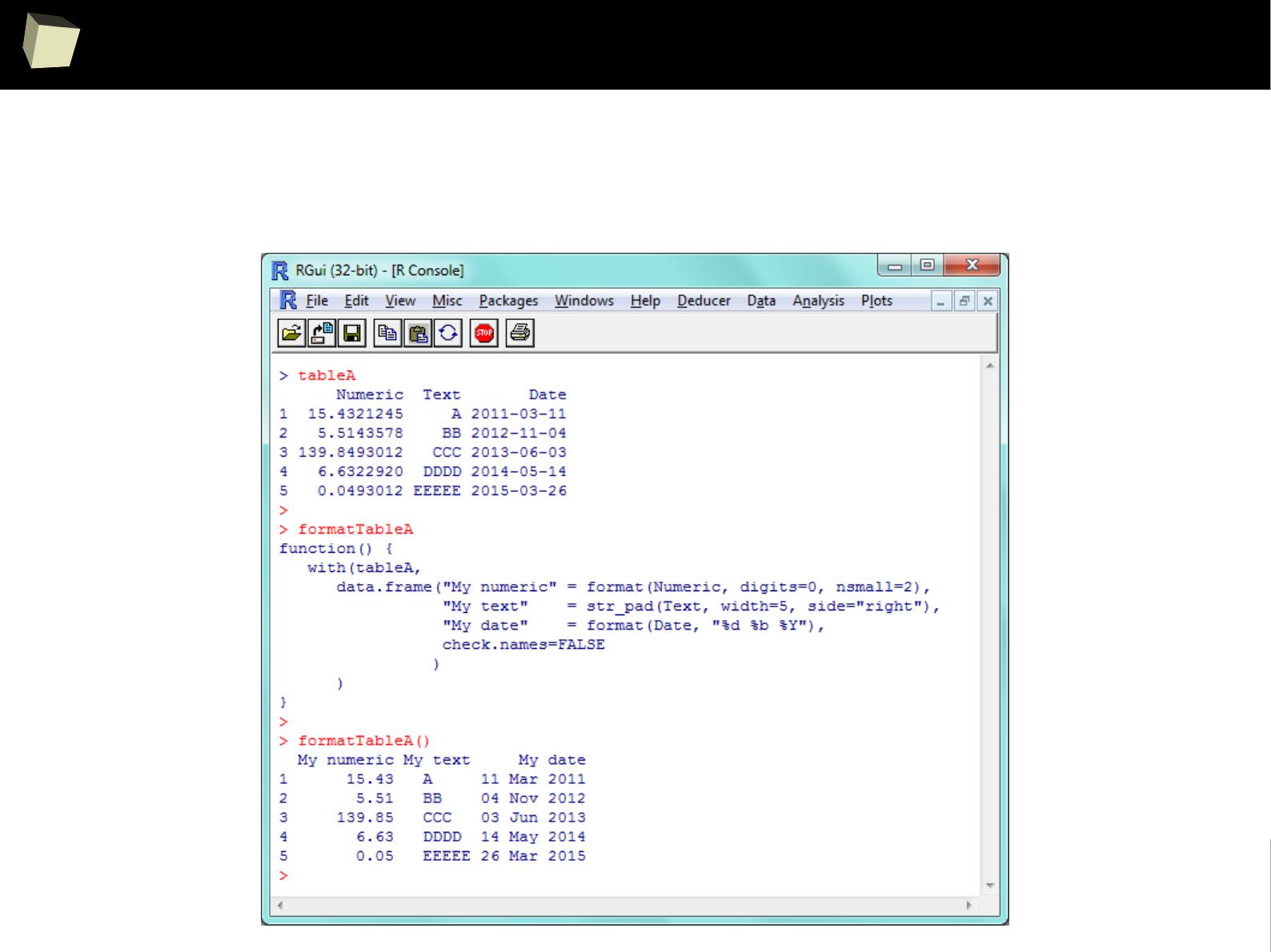

knitr + R Markdown + HTML + CSS

2

0

9

HTML report opened in Word and Excel

2

1

1

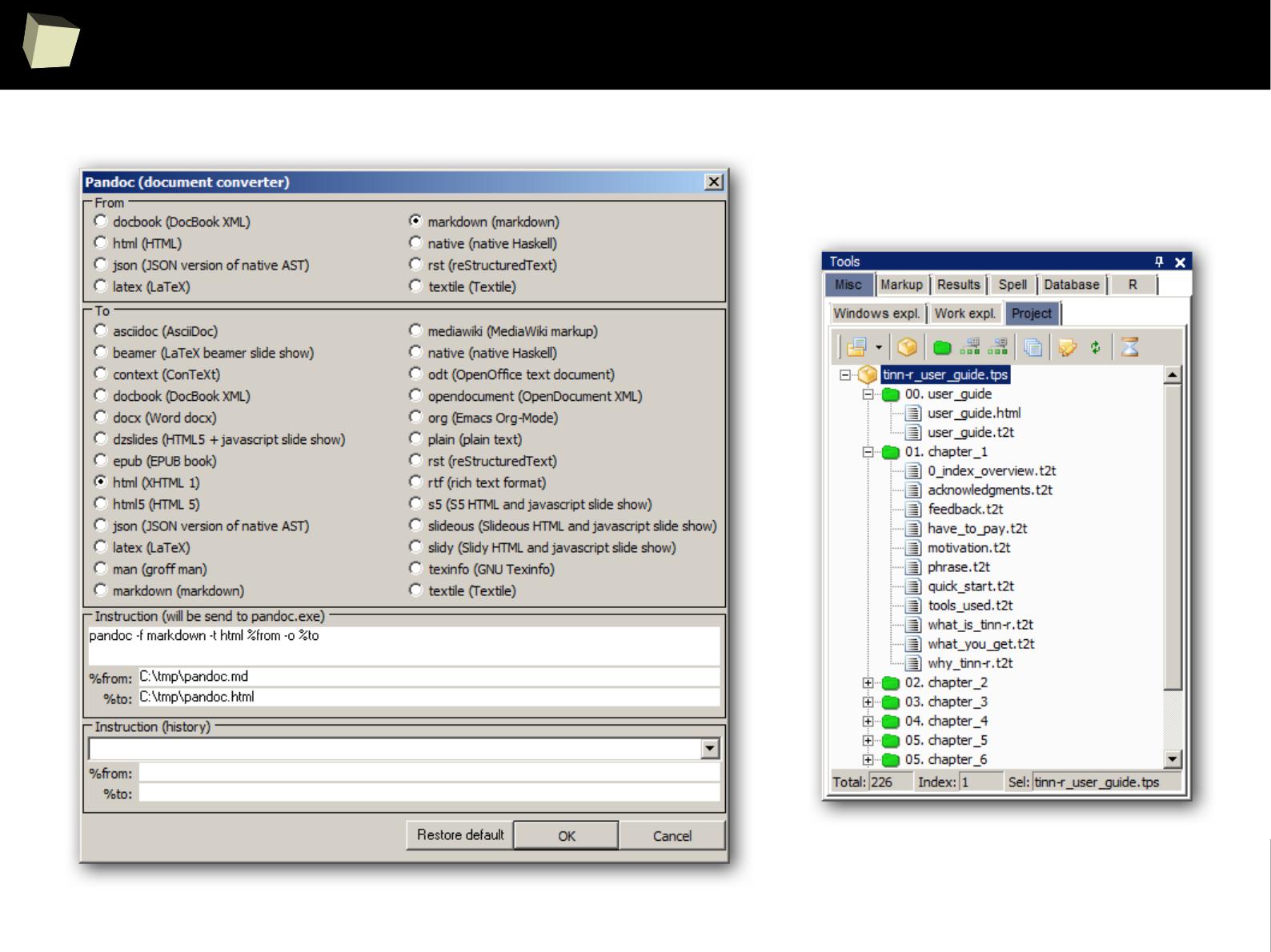

pandoc – convert to real DOC / OXML / PDF / ODF

2

1

2

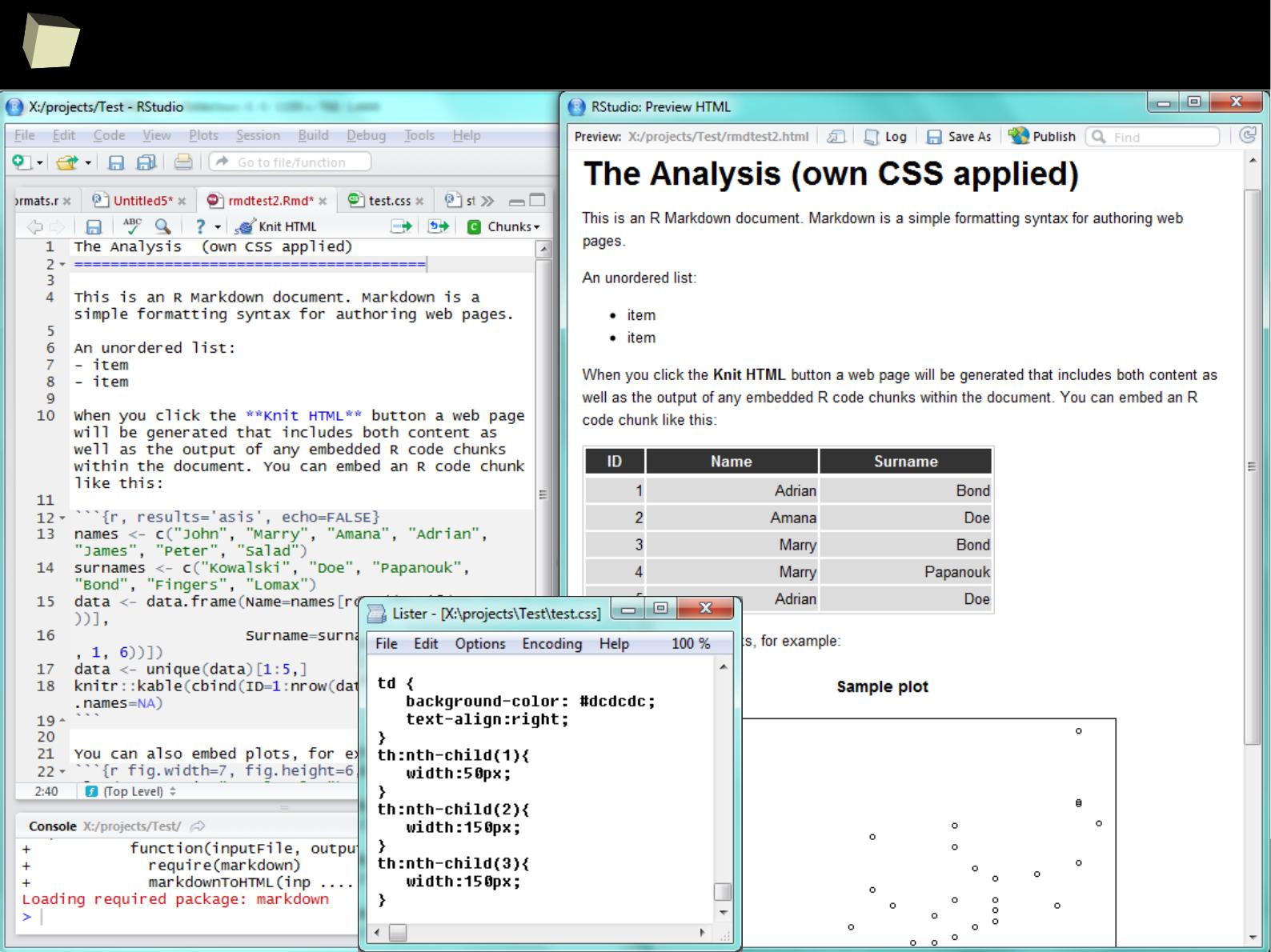

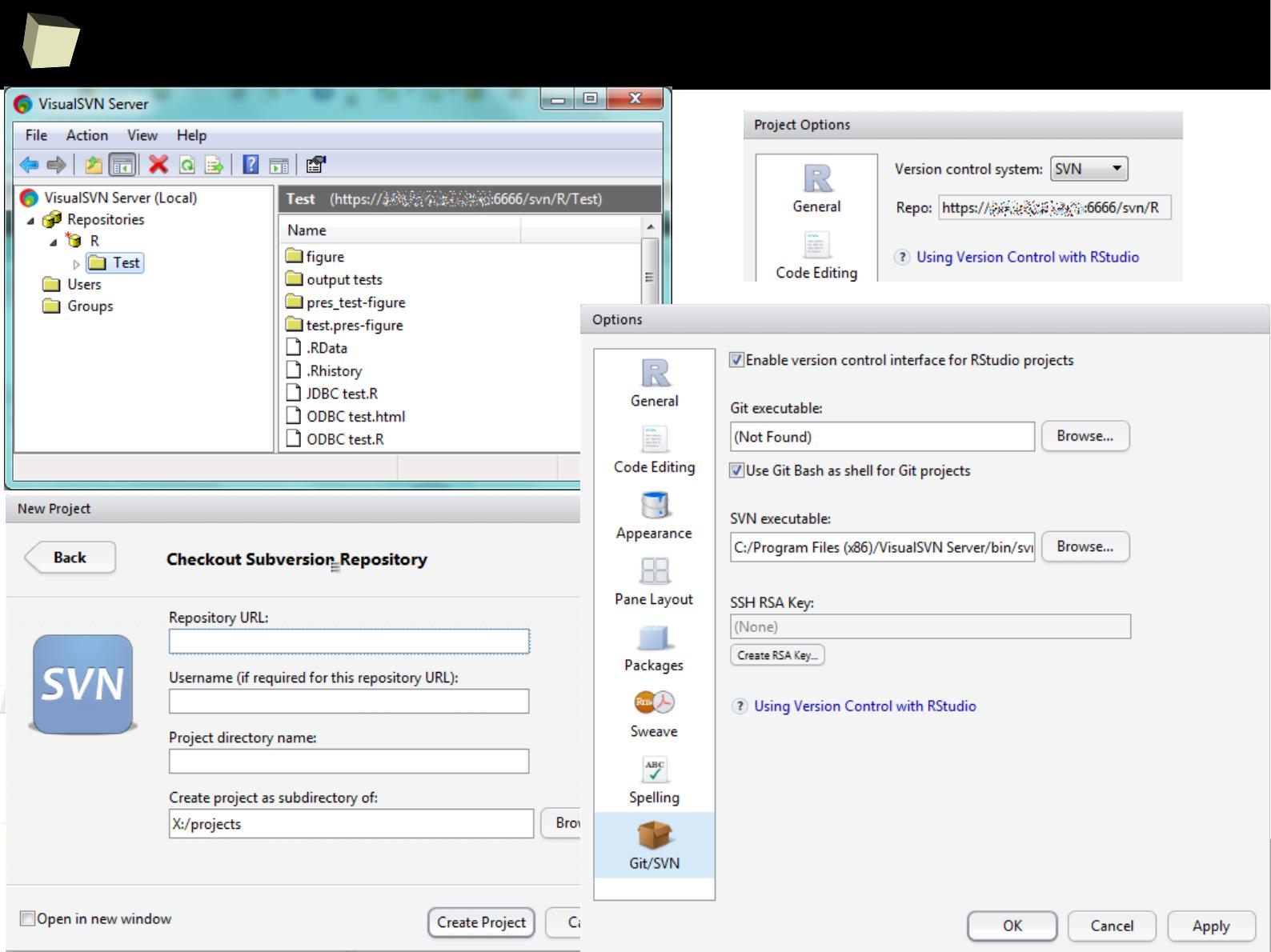

Reproducible Research with RMarkdown

RStudio

2

1

3

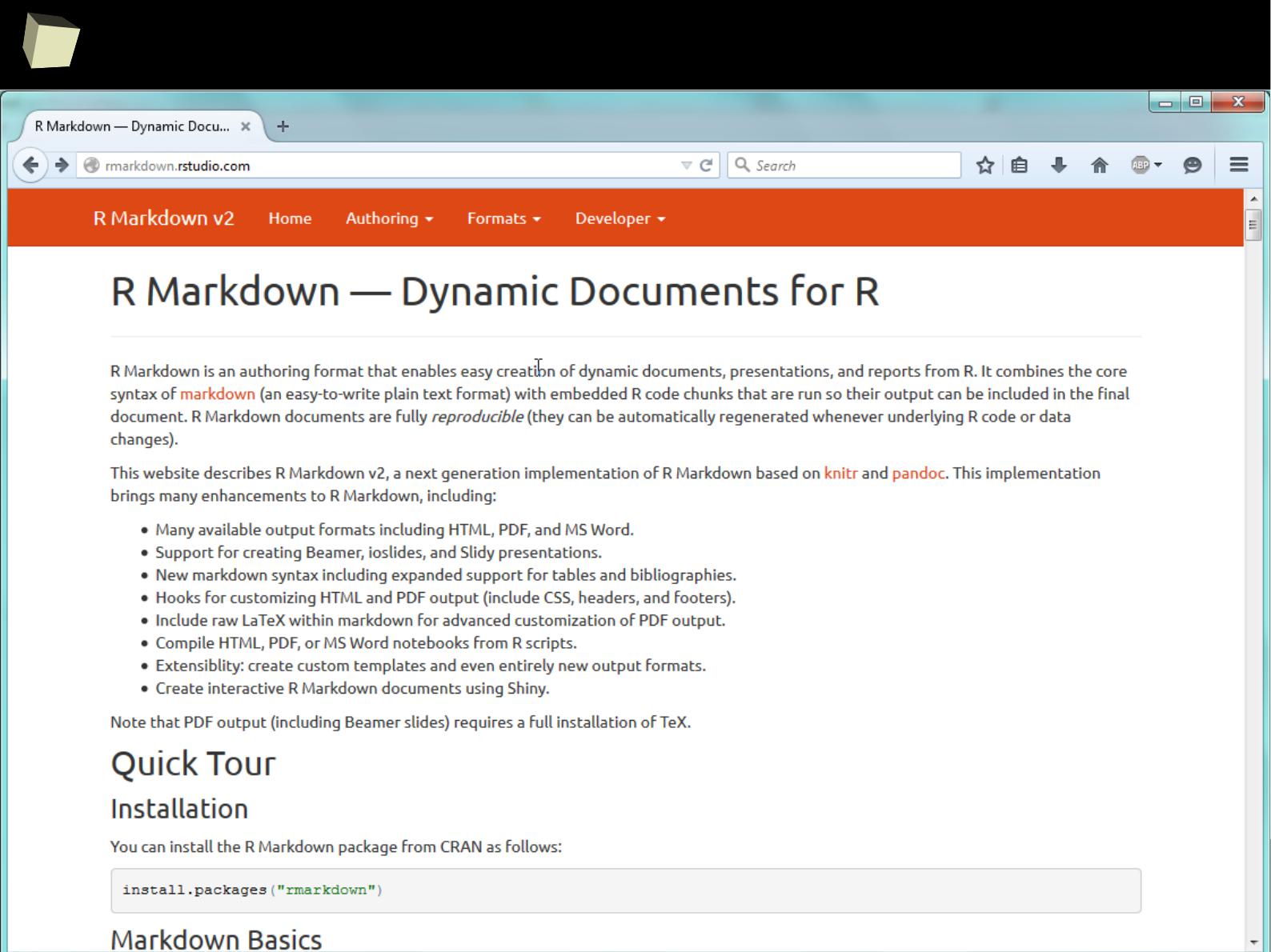

Take a moment and discover R Markdown!

2

1

4

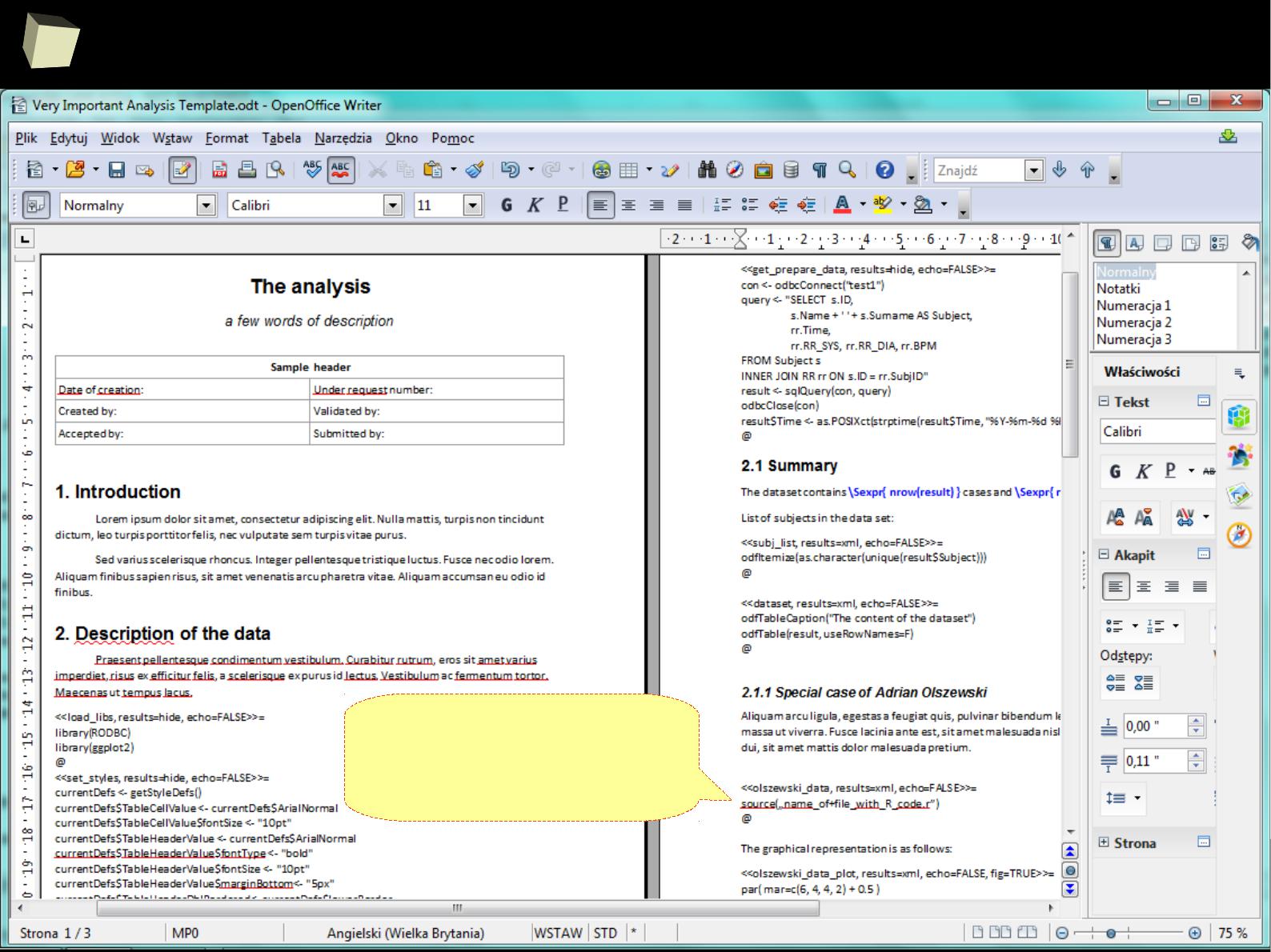

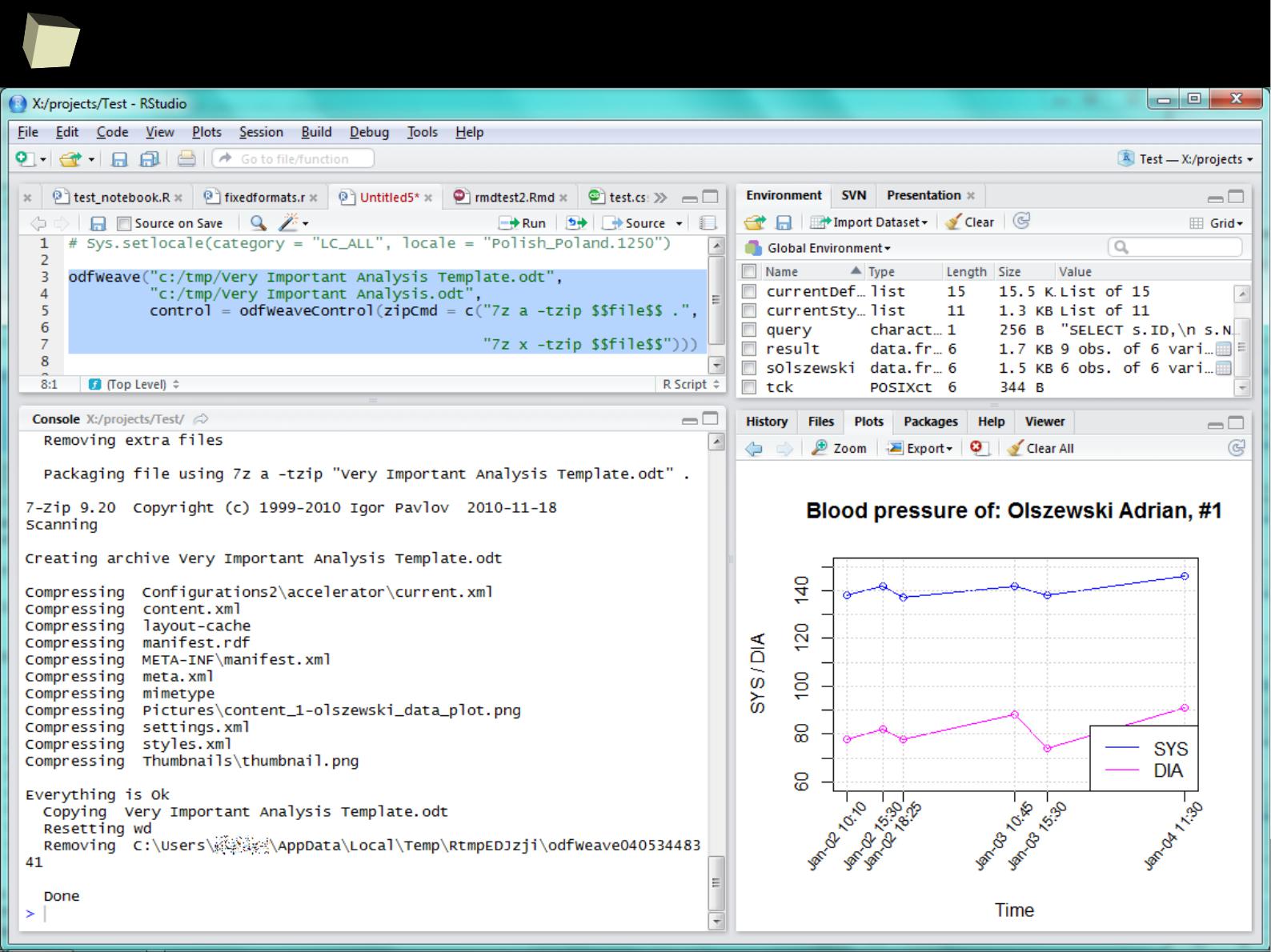

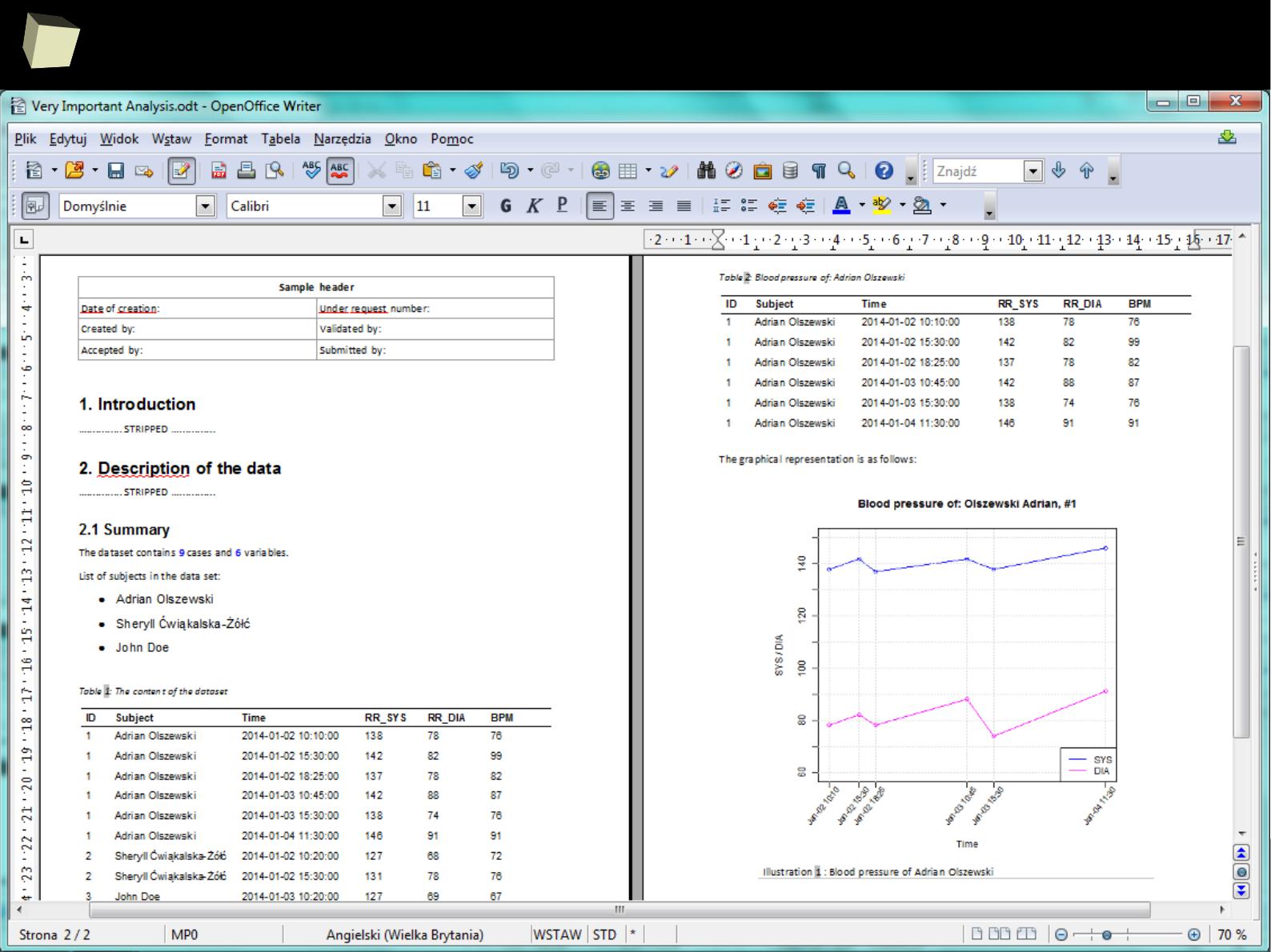

Reproducible Research with ODF – odfWeave

OdfWeave is an adaptation of Sweave for OpenDocument files. Such files serve

as a template of the report with R code embedded in. MS Office, Open/Libre

Office and Calligra Suite (formerly KOffice) work with the OpenDocument format

smoothly.

OdfWeave is flexible and simple to use. It allows to create templates directly in a

word processor. This is the most convenient way to accomplish the Reproducible

Research goals. Styling can be applied (in a limited extent) from the R code and,

mostly – in the editor.

The R code may be embedded into a template document or stored in a separate

file, which is then referenced in the template. Both approaches have their pros

and cons. The second approach is particularly useful, when the same code is

shared between (reused in) many documents.

2

1

5

Reproducible Research with ODF – odfWeave